Duckling Sizes

MotherDuck's tenancy architecture differs from that of traditional database systems. The platform uses a hypertenancy model, which provisions an isolated read-write Duckling (compute instance) for each Organization member.

This architecture gives each user dedicated compute resources and Duckling-level configuration, so users can tune performance independently for their own workloads. Each Duckling size has different performance characteristics and billing implications. MotherDuck uses fast SSDs for spill space, so queries can exceed their memory limits with minimal performance impact. DuckDB caches data in memory, and MotherDuck uses fast local disks for storage, which improves cold start times.

Duckling Sizes

| Duckling Size | Plans | Use Case | Cooldown Period | Startup Time | Read-Write Duckling Enabled? | Read Scaling Duckling Enabled? |
|---|---|---|---|---|---|---|
| Pulse | Lite, Business | Good for small workloads | 1 second | ~100ms | Yes | Yes |
| Standard | Business | Good for most data loading workloads | 60 seconds | ~100ms | Yes | Yes |
| Jumbo | Business | Better for large, complex transformations during loading | 60 seconds | ~100ms | Yes | Yes |
| Mega | Business | Optimal for demanding jobs with even larger scale and volumes than a Jumbo can handle | 5 minutes | A few minutes | Yes | Yes |
| Giga | Business, and in Free Trial on request | Best used for your largest and toughest workloads like batch jobs that run overnight or on weekends | 10 minutes | A few minutes | Yes | No |

- We recommend keeping the cooldown periods in mind when planning batch sizes.
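To make the cooldown advice concrete, here is a minimal Python sketch that estimates how long a Duckling stays active for a given batch schedule. The cooldown values come from the table above. Everything else is an assumption for illustration: the function name `active_seconds` is hypothetical, and the model assumes that a Duckling stays active for the full cooldown period after a batch finishes and that a batch starting within that window reuses the same running Duckling. Check the billing pages for how active time is actually charged.

```python
# Cooldown periods in seconds, copied from the Duckling Sizes table.
COOLDOWN_SECONDS = {
    "pulse": 1,
    "standard": 60,
    "jumbo": 60,
    "mega": 5 * 60,
    "giga": 10 * 60,
}


def active_seconds(size, batches):
    """Estimate total active time for a list of (start_second, run_seconds) batches.

    Assumption (illustrative model, not a documented billing rule): the
    Duckling stays active for the cooldown period after each batch ends,
    and a batch that starts before the cooldown elapses extends the same
    active window instead of opening a new one.
    """
    cooldown = COOLDOWN_SECONDS[size.lower()]
    total = 0.0
    window_start = None
    window_end = None
    for start, run in sorted(batches):
        end = start + run + cooldown
        if window_end is None or start > window_end:
            # The previous window (if any) has closed; account for it.
            if window_end is not None:
                total += window_end - window_start
            window_start, window_end = start, end
        else:
            # The Duckling is still warm; extend the current window.
            window_end = max(window_end, end)
    if window_end is not None:
        total += window_end - window_start
    return total


# Ten 30-second batches, one every 10 minutes, versus one 300-second batch.
many_small = [(i * 600, 30) for i in range(10)]
one_large = [(0, 300)]

print(active_seconds("standard", many_small))  # 900.0
print(active_seconds("standard", one_large))   # 360.0
print(active_seconds("mega", many_small))      # 3300.0
print(active_seconds("mega", one_large))       # 600.0
```

Under this model, the same 300 seconds of work keeps a Mega Duckling active more than five times longer when split into ten small batches, because each batch is followed by its own 5-minute cooldown. Consolidating batches matters most on the larger sizes.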

PULSE

Optimized for ad-hoc analytics and read-only workloads

Pulse Ducklings are auto-scaling and designed for efficiency, making them ideal for:

- Running ad-hoc queries. (Note: complex queries involving spatial analysis or regex-like functions may perform better on larger Duckling sizes.)

- Read-optimized workflows with high concurrent user access, such as those in customer-facing analytics.

- Powering data apps and embedded analytics where quick, short queries are common.

- High-concurrency, read-optimized workflows

Learn how Pulse Ducklings are billed.

STANDARD

Production-grade Duckling designed for analytical processing and reporting

Standard Ducklings offer a balance of resources for consistent performance, suited for:

- Core analytical workflows requiring balanced performance metrics.

- Development and validation environments for production workflows.

- Standard ETL/ELT pipeline implementation, including:

  - Parallel execution of incremental ingestion jobs.

  - Multi-threaded transformation processing.

Learn how Standard Ducklings are billed.

JUMBO

A larger Duckling built for high-throughput processing and faster performance

Jumbo Ducklings provide resources for heavy workloads, including:

- Large-scale batch processing and ingestion operations.

- Complex query execution on high-volume datasets.

- Advanced join operations and aggregations.

- RAM-intensive processing of deeply-nested JSON structures or other large data objects.

Learn how Jumbo Ducklings are billed.

MEGA

Built for high-throughput processing on demanding jobs at even larger scale than a Jumbo's capacity

Mega Ducklings provide compute resources to help expedite large-scale transformations and complex operations, perfect for:

- Batch processing and high-volume ingestion operations.

- Running a weekly job that rebuilds all of your tables and needs to finish in minutes, not hours.

- Complex query execution on high-volume datasets that a Jumbo Duckling can't complete within a tight time window.

- Advanced operations for users who have 10x the data volume of other users and require low-latency performance.

Learn how Mega Ducklings are billed.

GIGA

Our largest Duckling, built for the toughest workloads with massive scale and complexity

Giga Ducklings provide compute resources for the most demanding tasks, perfect for:

- Complex, large-scale workloads and jobs that won't run on any other Duckling size.

- Running one-time jobs that need to complete overnight or over the weekend, like restating revenue actuals for 10 years' worth of high-volume data.

- Huge volumes of advanced join operations and aggregations.

- Very large amounts of RAM-intensive processing of deeply-nested JSON structures or other large data objects.

Learn how Giga Ducklings are billed.

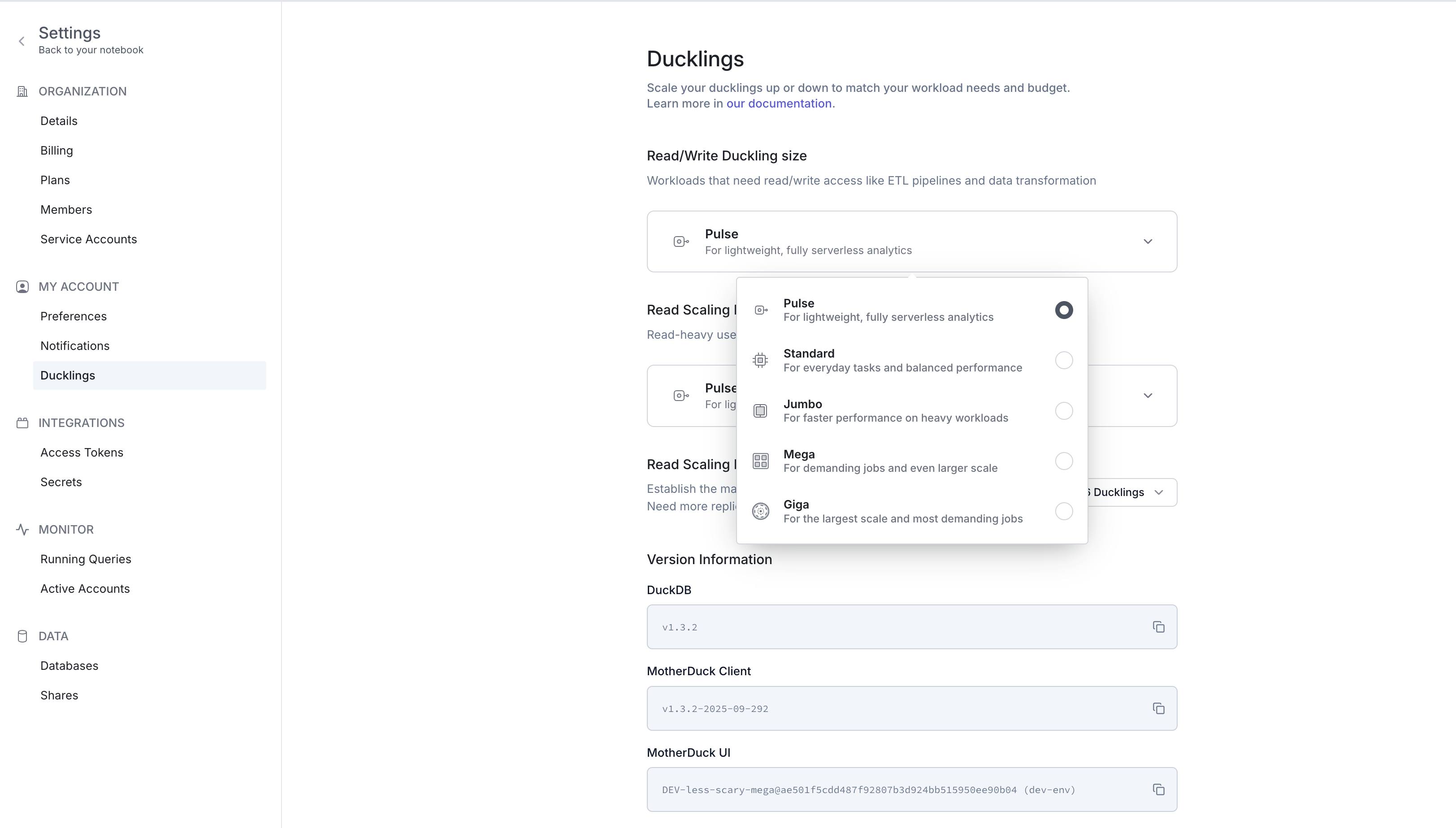

Changing Duckling Sizes

Duckling sizes can be changed in the MotherDuck UI by clicking the icon in the top right, or under "Settings > Ducklings". There you can choose the desired Read/Write and Read Scaling sizes. Changing the Duckling size can take a few minutes while your new Duckling wakes up.

The Duckling size for a user or service account can also be set using the Set user Ducklings REST API.
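As a rough illustration of setting sizes programmatically, the Python sketch below builds (but does not send) an HTTP request for such a call. The base URL, path, HTTP method, JSON field names, and the helper name `build_set_ducklings_request` are all assumptions made for this example and are not confirmed by this page; consult the Set user Ducklings REST API reference for the actual contract. The only rule taken from this page is that Giga is not available as a Read Scaling size.

```python
import json
import urllib.parse
import urllib.request

# ASSUMPTION: placeholder base URL and path; verify against the REST API reference.
API_BASE = "https://api.motherduck.com/v1"

READ_WRITE_SIZES = {"pulse", "standard", "jumbo", "mega", "giga"}
# Per the Duckling Sizes table, Giga is not enabled for Read Scaling.
READ_SCALING_SIZES = READ_WRITE_SIZES - {"giga"}


def build_set_ducklings_request(username, token, read_write_size, read_scaling_size):
    """Build a request that would set a user's Duckling sizes.

    The request is only constructed here, never sent. The body layout
    and field names are hypothetical.
    """
    rw = read_write_size.lower()
    rs = read_scaling_size.lower()
    if rw not in READ_WRITE_SIZES:
        raise ValueError(f"unknown read-write size: {read_write_size}")
    if rs not in READ_SCALING_SIZES:
        raise ValueError(f"size not available for read scaling: {read_scaling_size}")

    # ASSUMPTION: hypothetical payload shape.
    body = {
        "config": {
            "read_write": {"instance_size": rw},
            "read_scaling": {"instance_size": rs},
        }
    }
    url = f"{API_BASE}/users/{urllib.parse.quote(username)}/instances"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",  # ASSUMPTION: method not confirmed by this page.
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_set_ducklings_request("svc_loader", "YOUR_TOKEN", "jumbo", "pulse")
print(request.get_method(), request.full_url)
```

Once the endpoint details are confirmed, passing the request to `urllib.request.urlopen` would send it. Validating sizes client-side, as above, surfaces an unsupported Read Scaling size before any network call is made.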

Note: Changing Duckling size in the UI or via our REST API takes:

- 2 minutes for Pulse, Standard and Jumbo

- 5 minutes for Mega

- 10 minutes for Giga
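These change times can be folded into job scheduling. The Python sketch below computes the latest moment to request a size change so that the new Duckling is ready when a job starts. The durations are taken from the note above. The helper name `latest_resize_time` is hypothetical, and keying the duration on the target size (rather than the current size) is an assumption, since the note does not say which one applies.

```python
from datetime import datetime, timedelta

# Size-change durations in minutes, from the note above.
# ASSUMPTION: the duration is determined by the size you are changing TO.
RESIZE_MINUTES = {
    "pulse": 2,
    "standard": 2,
    "jumbo": 2,
    "mega": 5,
    "giga": 10,
}


def latest_resize_time(job_start, target_size):
    """Return the latest time to request the change so it completes by job_start."""
    minutes = RESIZE_MINUTES[target_size.lower()]
    return job_start - timedelta(minutes=minutes)


# A nightly job scheduled for 02:00 that needs a Giga Duckling.
job_start = datetime(2025, 1, 6, 2, 0)
print(latest_resize_time(job_start, "giga"))  # 2025-01-06 01:50:00
```

In practice it is reasonable to add a safety margin on top of these values, since they are stated durations rather than guarantees.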