Streamkap

Streamkap is a stream processing platform built for Change Data Capture (CDC) and event sources. It makes it easy to move operational data into analytics systems like MotherDuck with low latency and high reliability. Streamkap offers various sources, including PostgreSQL, MySQL, SQL Server, a range of SQL and NoSQL databases, Kafka, and other storage systems.

Streamkap is designed to get you streaming in minutes without a heavy setup. You focus on your business, and Streamkap handles the hard parts:

- Lightweight in-stream transformations let you preprocess, clean, and enrich data with minimal latency and cost.

- Automatically adapts to schema changes—added or removed fields, renamed columns, evolving data types, and nested structures.

- Built-in observability and automated recovery reduce operational overhead.

- Fully managed via API or Terraform, integrates with CI/CD workflows, and automates environment provisioning.

- Deploy multiple service versions to isolate workloads—logically (per microservice or environment) or physically (across regions or infrastructure).

- Choose from Streamkap Cloud or BYOC (Bring Your Own Cloud) for maximum flexibility and security.

You can explore Streamkap’s MotherDuck integration and examples in the official documentation.

Overview

This guide explains how to stream data from Streamkap into the MotherDuck database using Amazon S3 as an intermediary. We'll utilise the S3 connector to first stream data into an S3 bucket. Then, you can configure MotherDuck to read from the S3 bucket to ingest the data into your database.

- Streamkap to S3: Streamkap is Kafka-based, so Kafka messages are streamed into an Amazon S3 bucket via an existing dedicated S3 connector. Please refer to the Streamkap’s Kafka to S3 Streaming Guide for detailed instructions.

- S3 to MotherDuck: MotherDuck is configured to read the data from the S3 bucket and load it into the database.

Prerequisites

- Amazon S3 Bucket: A bucket in Amazon S3 where data from Streamkap will be streamed.

- MotherDuck Account: A valid MotherDuck account and database setup where the data will be loaded.

- Streamkap’s Kafka S3 Connector: Your Kafka to S3 connector configured and running.

MotherDuck Setup

Once data is available in the S3 bucket, you can configure MotherDuck to read from the S3 bucket and load it into your database. Follow these steps:

Configure the S3 Source in MotherDuck

To read data from the S3 bucket into MotherDuck, you need to configure a data source that points to the S3 bucket. This involves creating a connection between MotherDuck and your S3 bucket using AWS credentials.

- Log in to MotherDuck and navigate to your workspace or database.

- Go to the Secrets.

- Add new secret and choose Amazon S3 as the secret type.

- Provide the necessary details to access the S3 bucket:

- Secret Name: The name of your source connection details.

- Region: The region of your S3 bucket (e.g., us-west-2).

- Access Key ID: Your AWS Access Key ID.

- Secret Access Key: Your AWS Secret Access Key.

SQL Command for Secret Configuration

Alternatively, you can configure the secret using SQL. Below is an example configuration for setting up the secret:

CREATE SECRET IN MOTHERDUCK (

TYPE S3,

KEY_ID 'access_key',

SECRET 'secret_key',

REGION 'us-east-1'

);

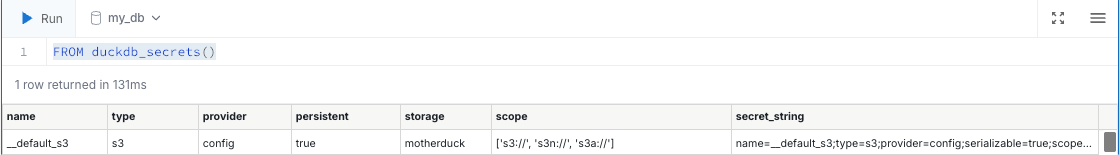

Verify Existing Secrets

To check your existing secrets, you can run the following SQL command:

FROM duckdb_secrets()`

Query Data from the S3 Bucket

Once the connection between MotherDuck and your S3 bucket is established, you can define a schema and table in MotherDuck or simply query the data directly from the S3 bucket.

Since your Kafka stream might be writing multiple files to the S3 bucket, we recommend using a wildcard * to read all files in a folder. This will enable MotherDuck to automatically pick up new files as they are written to the S3 bucket.

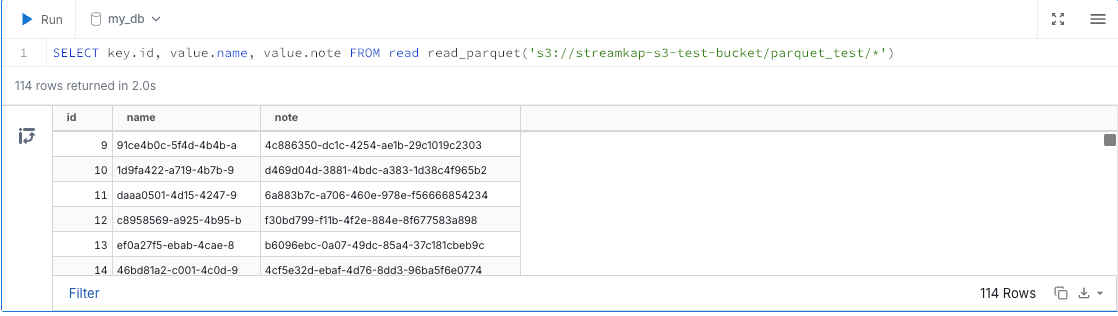

Here is an example SQL query to read data from your S3 bucket (using a wildcard for streaming):

SELECT key.id, value.name, value.note

FROM read read_parquet('s3://streamkap-s3-test-bucket/parquet_test/*')