Using the MotherDuck MCP Server

The MotherDuck remote MCP Server connects AI assistants like Claude, ChatGPT, and Cursor to your data. This guide covers effective workflows for getting accurate, useful results from your AI-powered data analysis. If you haven't already, set up your remote MCP connection and by the end, you'll be able to guide an AI assistant of your choice to writing accurate, insightful queries.

This guide is written for the remote MCP (hosted by MotherDuck, read-only). Most of the tips apply to the local MCP (self-hosted, read-write) as well. For local MCP setup and details, see the MCP reference (Local server section).

Prerequisites

To use the MotherDuck remote MCP server, you will need:

- A MotherDuck account with at least one database

- An AI client like Claude, Cursor, or ChatGPT already connected to the remote MCP server (setup instructions)

The MotherDuck remote MCP Server provides read-only access to your databases. You can explore schemas and run SELECT queries, but the AI cannot modify your data. If you need to run write operations like UPDATE or CREATE TABLE AS statements, ask the AI to generate the query and then run it yourself in the MotherDuck UI or your preferred client. For fully automated write operations, see the MCP reference (Local server section).

Start with schema exploration

Before diving into analysis, help the AI understand your data. This is a form of context engineering: by exploring your schema upfront, you hydrate the conversation with knowledge about your tables, columns, and relationships. This context carries forward, helping the AI write more accurate queries throughout your session.

Start conversations by asking about your database structure:

Good first prompts:

- "What databases and tables do I have access to?"

- "Describe the schema of my

analyticsdatabase" - "What columns are in the

orderstable and what do they contain?"

The remote MCP server provides tools for schema exploration that surface table relationships, data types, and any documentation you've added to your schema.

If you have well-documented tables with COMMENT ON descriptions, the AI can use these to better understand your data's business meaning.

Frame questions with context

The more context you provide, the better the results. Include relevant details like:

- Time ranges: "Show me orders from the last 30 days" vs "Show me orders"

- Filters: "Analyze customers in the US with more than 5 purchases"

- Metrics: "Calculate revenue as

quantity * unit_price" - Output format: "Return results as a summary table with percentages"

Example - Vague vs. Specific:

| ❌ Vague | ✅ Specific |

|---|---|

| "Show me sales data" | "Show me total sales by product category for Q4 2024, sorted by revenue descending" |

| "Find top customers" | "Find the top 10 customers by total order value in the last 12 months" |

| "Analyze trends" | "Compare monthly active users month-over-month for 2024, showing growth rate" |

Iterate

Complex analysis works best as a conversation. Start simple, validate the results, then build up. Each exchange adds shared context, helping the AI write better queries as you go. While there is a temptation to get the perfect query in one shot, often insight comes as part of the process of data exploration.

When iterating, it can be helpful to have source data nearby to help verify outputs. Our users have noted that using their existing BI dashboard to quickly validate that metrics are correct helps to develop intuition about the information provided by the AI assistants.

Common workflow patterns

Data profiling

Quickly understand a new dataset:

"Profile the `transactions` table - show me:

- Row count and date range

- Distribution of key categorical columns

- Summary statistics for numeric columns

- Any null values or data quality issues"

DuckDB has a few SQL functions that are great for hydrating context:

DESCRIBEwhich retrieves the metadata for a specific tableSUMMARIZEwhich gets summary stats for a table (can be large)- The

USING SAMPLE 10clause (at the end of the query) which samples the data (can be large) - using it with a where clause to narrow down is very helpful for performance

Generating charts

Some AI clients can generate visualizations directly from your query results. ChatGPT on the web and Claude Desktop both support creating charts as "artifacts" alongside your conversation.

Visualizations help you spot trends and outliers faster than scanning tables, validate that query results make sense at a glance, and share insights with stakeholders who prefer visual formats.

Example prompts:

- "Chart monthly revenue for 2024 as a line graph"

- "Create a bar chart showing the top 10 customers by order count"

- "Visualize the distribution of order values as a histogram"

- "Show me a time series of daily active users with a 7-day moving average"

Once you have a chart, you can iterate on it just like query results: "Add a trend line", "Change to a stacked bar chart", or "Break this down by region".

When using the MCP with more IDE-like interfaces, the MCP plays very nicely with libraries like matplotlib for building more traditional charts.

Querying private S3 buckets

You can use the MCP to analyze files in private S3 buckets (Parquet, CSV, JSON) by storing your AWS credentials as a secret in MotherDuck.

Option 1: Add credentials through the MotherDuck UI

You can create secrets directly in the MotherDuck UI under Settings → Secrets.

Option 2: Use AWS SSO with credential chain (recommended for desktop AI clients)

If you use AWS SSO, you can refresh your credentials and store them in MotherDuck:

- Authenticate with AWS SSO:

aws sso login --profile your-profile

- Open a DuckDB client (e.g., the CLI) and create a secret using the credential chain:

ATTACH 'md:';

CREATE OR REPLACE SECRET IN MOTHERDUCK (

TYPE s3,

PROVIDER credential_chain

);

This stores your current AWS credentials in MotherDuck, making them available to the remote MCP server.

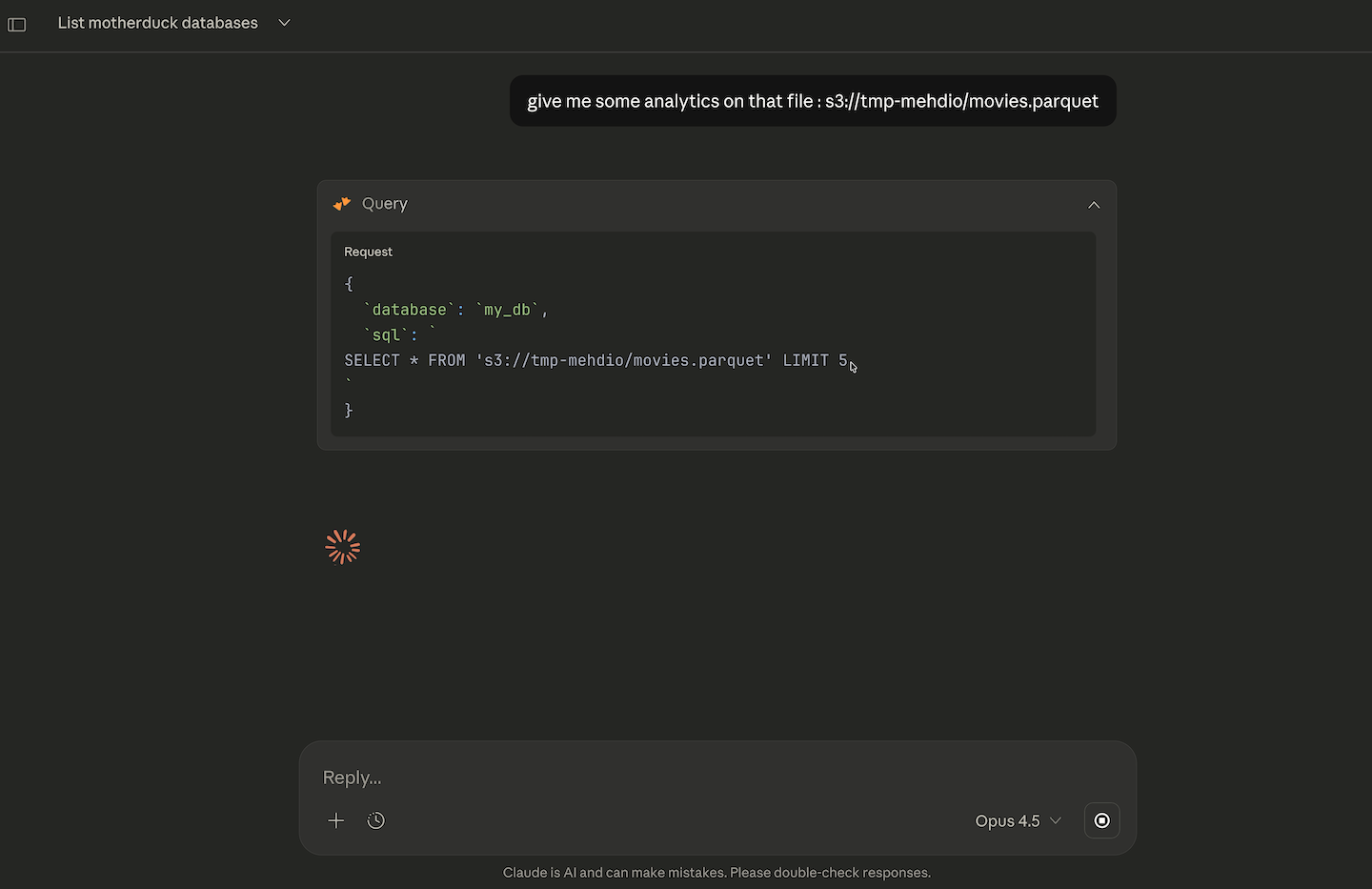

- Now you can ask your AI assistant to query any S3 bucket you have access to:

"Give me some analytics about s3://my-bucket/sales-data.parquet"

If you use temporary credentials (SSO, IAM roles), you'll need to refresh the secret when they expire by running the CREATE OR REPLACE SECRET command again.

Ad-hoc investigation

The MCP is especially useful for exploratory debugging when you're not sure what you're looking for. Rather than writing queries upfront, you can describe the problem and let the AI help you dig in.

"I noticed a spike in errors on Dec 10th. Help me investigate:

- What types of errors increased?

- Were specific users or endpoints affected?

- What changed compared to the previous week?"

One pattern we use at MotherDuck is loading logs or event data into a database and using the MCP to interrogate it conversationally. Instead of manually crafting regex patterns or grep commands, you can ask questions like "What are the most common error messages in the last hour?" or "Show me all requests from user X that resulted in a 500 error". This turns log analysis from a tedious grep session into an interactive investigation where each answer informs the next question.

Working with query results

Refining results

Results rarely come out perfect on the first try. The conversational nature of MCP means you can refine incrementally rather than rewriting queries from scratch. If you're seeing test data mixed in, just say "Add a filter to exclude test accounts". If the granularity is wrong, ask to "Change the grouping from daily to weekly". Small adjustments like changing sort order or adding a column are easy follow-ups.

Understanding queries

When the AI generates complex SQL, don't hesitate to ask for an explanation. This is useful both for validating the approach and for learning. Ask "Explain what this query is doing step by step" to understand the logic, or "Are there any edge cases this query might miss?" to sanity-check the results before relying on them.

Exporting for further use

Once you have the results you need, ask for output in the format that fits your workflow. You can request a markdown table for documentation, CSV-friendly output for spreadsheets, or a written summary to share with your team. The AI can also help you format results for specific tools or audiences. Sometimes it can also be a great jumping off for further analysis with an expert, so asking for the final query to hand-off can also be a great step.

Tips for better results

Be explicit about assumptions

Your data likely has business rules that aren't obvious from the schema alone. If a "completed" order means status is either 'shipped' or 'delivered', say so. If revenue calculations should exclude refunds, mention it upfront. The AI can't infer these domain-specific rules, so stating them early prevents incorrect results and saves iteration time.

Reference specific tables and columns

When you already know your schema, being specific helps the AI get it right the first time. Instead of asking about "the timestamp", say "Use the user_events.event_timestamp column". If you know how tables relate, specify the join: "Join orders to customers on customer_id". This is especially helpful in larger schemas where column names might be ambiguous.

Ask for validation

When accuracy matters, ask the AI to sanity-check its own work. Questions like "Does this total match what you'd expect based on the row counts?" or "Can you verify this join doesn't create duplicates?" can catch subtle bugs before you rely on the results. The AI can run quick validation queries to confirm the logic is sound.

Troubleshooting

The remote MCP server includes tools beyond just running queries. Most are metadata lookups or search functions for finding tables and columns, but the ask docs question tool is particularly useful when you're stuck on tricky syntax or DuckDB-specific features. If the AI is struggling with a query pattern, try asking it to look up the relevant documentation first.

| Issue | Solution |

|---|---|

| AI queries wrong table | Ask: "What tables are available?" then specify the correct one |

| Results don't look right | Ask: "Show me sample data from the source table" to verify the data |

| Query is slow | Ask: "Can you optimize this query?", add filters to reduce data scanned, or increase your Duckling size |

| AI doesn't understand the question | Rephrase with more specific column names and business context |

| Can't type fast enough | Use voice-to-text to interact with your AI assistant |

Related resources

- MCP Server Setup - Remote and local MCP installation and configuration

- AI Features in the UI - Built-in AI features for the MotherDuck interface

- Building Analytics Agents - Build custom AI agents with MotherDuck