Data Warehousing How-to

Introduction to MotherDuck for Data Warehousing

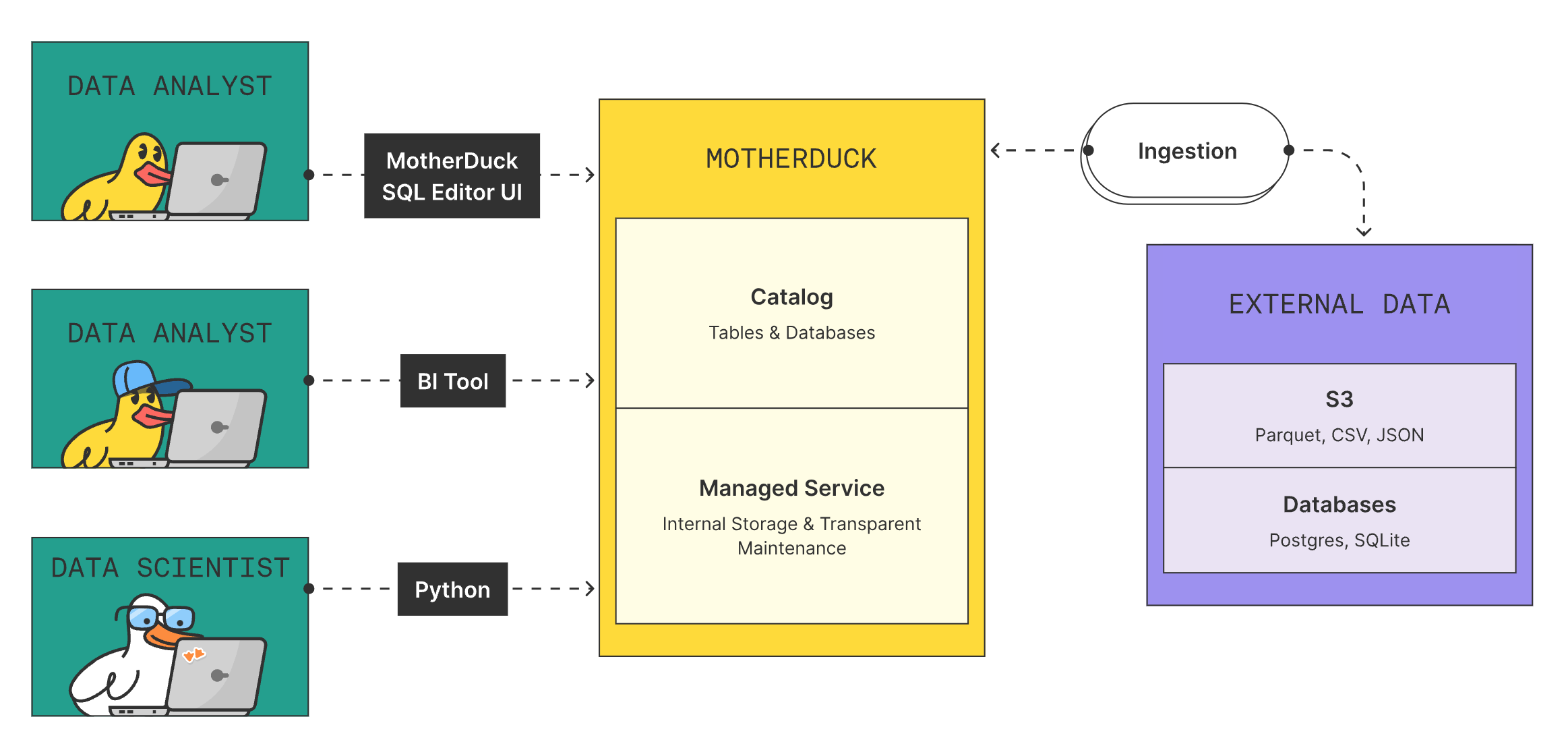

MotherDuck is a cloud-native data warehouse built on top of DuckDB, a fast in-process analytical database. While DuckDB provides the core analytical engine capabilities, MotherDuck adds cloud storage, sharing, and collaboration features that make it a complete data warehouse solution. Key advantages include its serverless architecture that eliminates infrastructure management, an intuitive interface that simplifies data analysis, and hybrid execution that intelligently processes queries across local and cloud resources.

MotherDuck is an ideal choice for organizations seeking a modern data warehouse solution. It excels at ad-hoc analytics by providing instant compute resources for each user, serves well as a departmental data mart with its simplified sharing model, and enables powerful embedded analytics through its WASM capabilities. Different personas benefit uniquely - data analysts get an intuitive SQL interface with AI assistance, engineers can leverage familiar APIs and tools like dbt, and data scientists can seamlessly combine local and cloud data processing.

The modern data stack with MotherDuck integrates seamlessly with popular tools across the ecosystem. As shown in the ecosystem diagram, this includes ingestion tools like Fivetran and Airbyte for loading data, transformation tools like dbt for modeling, BI tools like Tableau and PowerBI for visualization, and orchestration tools like Airflow and Dagster for pipeline management. This comprehensive integration enables teams to build complete data warehousing solutions while leveraging their existing tooling investments.

MotherDuck Basics: Concepts to Understand Before You Start

MotherDuck's core architecture is built on a serverless foundation that eliminates infrastructure management overhead. The platform handles data storage with enterprise-grade durability and security, while optimizing performance through intelligent data organization. Each user gets their own isolated compute resource called a "Duckling" that sits on top of the storage layer, and the separation of storage and compute enables independent scaling of these resources based on workload demands.

The dual execution model is a unique capability that allows MotherDuck to seamlessly query both local and cloud data. The query planner intelligently determines the optimal execution path, deciding whether to process data locally, in the cloud, or using a hybrid approach. This enables efficient querying across data sources while minimizing data movement and optimizing for performance.

MotherDuck follows a familiar hierarchical structure with databases containing schemas and tables. Databases serve as the primary unit of organization and access control, while schemas help logically group related tables together. This structure provides a clean way to organize data while maintaining compatibility with common SQL patterns and tools.

Authentication in MotherDuck is handled through secure token-based access, with comprehensive user and organization management capabilities. The platform uses a simplified access model where users either have full access to a database or none at all. The SHARES feature enables secure data sharing within organizations and with external parties through zero-copy clones that maintain data consistency and security.

The MotherDuck user interface provides a modern notebook-style environment for data interaction. The SQL IDE includes powerful features like intelligent autocomplete, AI-powered query suggestions and fixes, and an interactive Column Explorer that helps users understand and analyze their data structure. These features combine to create an intuitive and productive environment for data analysis.

While MotherDuck is designed for analytical workloads, it's important to note that it's not optimized for high-frequency small transactions like traditional OLTP databases. The platform works best with batch operations and analytical queries, and users should consider using queues for streaming workloads to achieve optimal performance. Additionally, the database-level security model means access cannot be controlled at the schema or table level.

Data Ingestion: Getting Your Data In

MotherDuck provides multiple strategies for ingesting data into your data warehouse. The platform leverages DuckDB's powerful data loading capabilities while adding cloud-native features for seamless data ingestion at scale. You can load data through direct file imports, cloud storage connections, database migrations, or specialized ETL tools like Fivetran and Airbyte depending on your needs. The MotherDuck Web UI provides an intuitive interface for data loading and exploration.

Loading local data

Loading data from local files is straightforward with support for common formats like CSV, Parquet, and JSON. The MotherDuck UI provides an intuitive interface for uploading files directly, while the Python client enables programmatic loading using DuckDB's native functions. For example, you can use read_csv(), read_parquet(), or read_json() to efficiently load data files while taking advantage of DuckDB's parallel processing capabilities.

Interacting with cloud storage (S3, GCS, etc)

Cloud storage integration allows you to directly query and load data from major providers including AWS S3, Google Cloud Storage, Azure Blob Storage, and Cloudflare R2. Using SQL commands like SELECT FROM read_parquet('s3://bucket/file.parquet'), you can seamlessly access cloud data. MotherDuck handles credential management securely through environment variables or configuration settings.

Database-to-database data loading

For database migrations, MotherDuck supports importing data from other databases like PostgreSQL and MySQL. You can directly connect to these sources using database connectors and execute queries to extract and load data. Existing DuckDB databases can be imported efficiently since MotherDuck is built on DuckDB's core engine.

Fetching data from APIs

Data ingestion tools like Fivetran, Airbyte, dltHub and Estuary integrate with MotherDuck to provide automated, reliable data pipelines. These tools handle complex ETL workflows, data validation, and transformation while offering features like scheduling, monitoring and error handling that simplify ongoing data operations.

For real-time data needs, MotherDuck works with streaming partners like Estuary to enable continuous data ingestion. While DuckDB is optimized for batch operations, these integrations allow you to build streaming pipelines that buffer and load data in micro-batches for near real-time analytics.

Unstructured data integrations

When working with unstructured data like documents, emails or images, tools like Unstructured.io can pre-process and structure the data before loading into MotherDuck. This enables you to analyze unstructured data alongside your structured data warehouse tables.

Loading Performance Notes

For optimal performance, follow DuckDB's recommended practices around batch sizes and data types. Load data in reasonably sized batches (at leasts 122k rows) to balance memory usage and throughput. Use appropriate data types like TIMESTAMP for datetime values and avoid unnecessary type conversions. Sort data by columns that are frequently queried together such as TIMESTAMPs. Monitor recent queries during large loads and adjust batch sizes accordingly.

Data Transformation: Shaping Your Data for Analysis

Data transformation is a critical step in the data warehousing process that converts raw data into analysis-ready formats. MotherDuck provides powerful SQL capabilities inherited from DuckDB for transforming data directly within the warehouse. You can leverage DuckDB's rich library of SQL functions to clean, reshape, and model your data through operations like filtering, joining, aggregating and pivoting.

Transformation Tools

-

- Native MotherDuck adapter for seamless integration to dbt core

- Enables version controlled, modular SQL transformations

- Supports testing, documentation and lineage tracking

- Recommended for complex transformation workflows

- See our blog post for detailed examples

-

- Compatible with MotherDuck through DuckDB support

- Provides data pipeline and transformation management

- Enables incremental processing and scheduling

-

- Modern data transformation platform built for DuckDB/MotherDuck

- Offers collaborative development environment

- Includes version control and deployment tools

Orchestration: Automating Your Data Pipelines

Orchestration is essential for keeping data up to date with MotherDuck. Scheduling data loads and transformations ensures your data warehouse stays current by running ingestion jobs at appropriate intervals to capture new data from your sources. Managing dependencies between tasks allows you to create reliable pipelines where transformations only run after their prerequisite data loads complete successfully. Monitoring and alerting capabilities help you track pipeline health and quickly address any issues that arise.

For orchestrating MotherDuck workflows, you have several options:

Popular workflow orchestration platforms like Airflow, Dagster, Kestra, Prefect and Bacalhau provide robust scheduling, dependency management and monitoring capabilities.

For simpler use cases, basic scheduling tools like cron jobs or GitHub Actions can effectively orchestrate straightforward data pipelines.

Many ingestion & transformation tools also come with built-in orchestration features, allowing you to schedule and monitor data loads without additional tooling.

When orchestrating MotherDuck pipelines, follow these best practices:

- Design idempotent jobs that can safely re-run without duplicating or corrupting data.

- Implement proper error handling and retries to gracefully handle temporary failures.

- Set up logging and monitoring to maintain visibility into pipeline health and performance.

Connecting BI Tools and Data Applications

MotherDuck provides robust support for business intelligence and reporting through its cloud data warehouse capabilities. The platform enables organizations to build scalable analytics solutions by connecting their data warehouse to popular visualization and reporting tools. With isolated compute tenancy per user, analysts can run complex queries without impacting other users' performance.

For connecting popular BI tools, MotherDuck offers several integration options. Tableau users can connect via the cloud and server connectors, with support for both token-based and environment variable authentication methods. The platform works with both live and extracted connections, and Tableau Bridge enables cloud connectivity. Microsoft Power BI integration is achieved through the DuckDB ODBC driver and Power Query connector, supporting both import and DirectQuery modes. Other supported BI tools include Omni, Metabase, Preset/Superset, and Rill, typically connecting through standard JDBC/ODBC interfaces.

MotherDuck seamlessly integrates with data science and AI tools through its native APIs and connectors. Python users can leverage the DuckDB SDK and Pandas integration for data analysis workflows. The platform supports R for statistical computing, while AI applications can be built using LangChain or LlamaIndex integrations. Notebook tools like Hex and Jupyter provide both hosted and on-prem environments for data exploration.

For building custom data applications, MotherDuck's unique architecture enables novel approaches through its WASM-powered 1.5-tier architecture. The platform runs DuckDB in the browser via WebAssembly, allowing for highly interactive visualizations with near-zero latency. Developers can use MotherDuck's APIs and SDKs in languages like Python and Go to create custom data applications that leverage both local and cloud-based data processing.

Advanced Topics & Best Practices

Performance Tuning and Optimization in MotherDuck

MotherDuck inherits DuckDB's powerful query optimization capabilities. You can analyze query performance using the EXPLAIN command to view execution plans and identify bottlenecks. While DuckDB doesn't use traditional indexes, it automatically creates statistics and metadata to optimize query execution with row groups. As a result, sorting the data on insert is very effective way to improve query performance.

Data Sharing and Collaboration

MotherDuck implements a straightforward data sharing model through SHARES, which provide read-only access to specific databases. To create a share, use the CREATE SHARE command and specify the database you want to share. Recipients can then access the shared data through their own MotherDuck account while maintaining data isolation.

Monitoring and Logging MotherDuck Usage

DuckDB's meta-queries like EXPLAIN ANALYZE provide detailed query execution statistics. You can also use the platform's built-in profiling capabilities to monitor query performance and resource utilization, helping identify optimization opportunities and troubleshoot performance issues. Recent queries and historical queries can be observed as well, in order to further optimize the warehouse load.

Cost Management

While MotherDuck's pricing model is still evolving, you can optimize costs by efficiently managing compute resources. Consider implementing data lifecycle policies to archive or delete old data. Monitor query patterns to identify opportunities for optimization and avoid unnecessary data processing.

Security Best Practices for Your MotherDuck Warehouse

- Implement robust security practices by following MotherDuck's database-level security model.

- Use token-based authentication for all connections and avoid sharing credentials.

- When integrating with tools, leverage environment variables for secure credential management.

- Regularly audit database access and maintain an inventory of active shares.

Leveraging AI Features within MotherDuck

MotherDuck enhances DuckDB with AI-powered features to improve productivity. The platform includes a SQL AI fixer that helps identify and correct query syntax issues. The prompt() function enables natural language interactions with your data warehouse, allowing users to generate SQL queries from plain English descriptions. These are just a few of the AI capabilities that help make data analysis more accessible while maintaining the power and flexibility of SQL.

Further Guides:

Orchestration

1 item

Replication

4 items

Appendix

Troubleshooting Common Issues

When working with MotherDuck, you may encounter challenges around data loading, query performance, or connectivity. For data loading issues, refer to our best practices for programmatic loading which covers optimizing batch sizes and file formats. For query performance, review our dual execution capabilities to understand how MotherDuck optimizes query execution across local and cloud resources. For connectivity problems, check our authentication guides and ensure you're following the recommended connection patterns.

Useful SQL Snippets for MotherDuck

MotherDuck supports a wide range of SQL functionality inherited from DuckDB. For data ingestion, refer to our PostgreSQL replication examples which demonstrate common patterns for loading data. For building customer facing analytics, check our guide which includes examples of data processing and visualization queries. The DuckDB SQL documentation provides comprehensive reference for the SQL dialect.

Links to Further Resources (MotherDuck Docs, Community)

To deepen your understanding of data warehousing with MotherDuck, explore our data warehousing concepts guide which covers architectural principles and best practices. For hands-on examples, the free DuckDB in Action eBook provides real-world scenarios and solutions. If you need help, don't hesitate to contact our support team or explore our ecosystem integrations for additional tools and capabilities.

Please do not hesitate to contact us if you need help along your journey.