Why Your Snowflake Bill is High and How to Fix It with a Hybrid Approach

17 min readBY

Your Snowflake bill is high primarily because of its compute billing model, which enforces a 60-second minimum charge each time a warehouse resumes. This creates a significant "idle tax" on the frequent, short-running queries common in BI dashboards and ad-hoc analysis. You're often paying for compute you don't actually use.

A surprisingly high bill for a modest amount of data is frustrating. We see it all the time. The immediate question is, "Why is my bill so high when my data isn't that big?" The cost isn't driven by data at rest, it's driven by data in motion, specifically by compute patterns. For many modern analytical workflows, the bill inflates from thousands of frequent queries accumulating disproportionately high compute charges.

If you don't address this, you'll face budget overruns, throttled innovation, or pressure to undertake a costly and risky platform migration. The solution isn't always abandoning a powerful platform like Snowflake. You can augment it intelligently instead.

This guide provides a practical playbook for understanding the root causes of high Snowflake costs and a strategy for reducing them using internal optimizations and a modern hybrid architecture.

The Real Reason Your Snowflake Bill is So High

To control costs effectively, you need to diagnose the problem first. The primary driver of inflated Snowflake bills for bursty, interactive workloads is the platform's billing model for compute. It creates a significant "Big Data Tax".

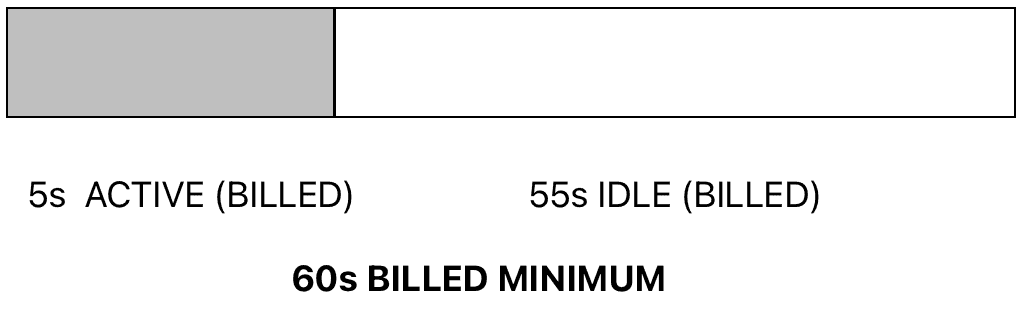

Snowflake bills for compute per-second, but only after a 60-second minimum is met each time a virtual warehouse resumes from a suspended state. A query that takes only five seconds to execute gets billed for a full minute of compute time. In this common scenario, you're paying for 55 seconds (over 91%) of compute resources that sit idle.

Here's what this looks like on a timeline. For a 5-second query, the billed duration on Snowflake versus a usage-based platform like MotherDuck is stark.

Snowflake (X-Small Warehouse):

MotherDuck (Pulse Compute):

When a BI dashboard executes 20 quick queries upon loading, each taking three seconds, this single page view could trigger 1,200 seconds (20 minutes) of billed compute time. The actual work took only one minute.

This problem gets worse with warehouse sizing. Each incremental size increase in a Snowflake warehouse doubles its credit consumption rate. We often see teams defaulting to 'Medium' or 'Large' warehouses for all tasks. That creates a 4x to 8x cost premium for workloads that could easily run on an 'X-Small' warehouse.

This combination of minimum billing increments and oversized compute creates exponential cost leak. Serverless features like Automatic Clustering and Materialized Views consume credits in the background too, contributing to credit creep that's difficult to trace without diligent monitoring.

| Warehouse Size | Credits per Hour | Relative Cost |

|---|---|---|

| X-Small | 1 | 1x |

| Small | 2 | 2x |

| Medium | 4 | 4x |

| Large | 8 | 8x |

| X-Large | 16 | 16x |

First Aid: A Playbook to Immediately Optimize Snowflake

Before considering architectural changes, you can achieve significant savings by optimizing your existing Snowflake environment. These internal fixes are your first line of defense against cost overruns. They can often reduce spend by 20-40%.

1. Master Warehouse Management (Set AUTO_SUSPEND to 60s)

Set aggressive yet intelligent warehouse timeouts. For most workloads, set the AUTO_SUSPEND parameter to exactly 60 seconds. This ensures the warehouse suspends after one minute of inactivity, stopping credit consumption. Setting it lower than 60 seconds is counterproductive. A new query arriving within that first minute could trigger a second 60-second minimum charge.

Right-size warehouses by defaulting to smaller configurations. Use 'X-Small' warehouses by default and only scale up when a specific workload fails to meet its performance SLA. Consolidate workloads onto fewer, appropriately sized warehouses to prevent warehouse sprawl. Multiple underutilized compute clusters add up on your bill.

We helped one analytics team save approximately $38,000 annually by moving its BI queries from a Medium to a Small warehouse. They accepted a marginal 4-second increase in query time.

2. Leverage Snowflake's Caching Layers (Result & Warehouse)

Snowflake's multi-layered cache is one of its most powerful cost-saving features. Not using it leaves money on the table.

Result Cache: If you run the exact same query as one run previously (by anyone in the account) and the underlying data hasn't changed, Snowflake returns the results instantly from a global result cache. No warehouse starts. That's free compute. It's especially effective for BI dashboards where multiple users view the same default state.

Warehouse Cache (Local Disk Cache): When a query runs, the required data from storage gets cached on the SSDs of the active virtual warehouse. Subsequent queries that need the same data read it from this much faster local cache instead of remote storage. This dramatically speeds up queries and reduces I/O. Keeping a warehouse warm for related analytical queries can be beneficial.

Design workloads to maximize cache hits through consistent query patterns.

3. Optimize Inefficient Queries (Prune Partitions & Avoid SELECT *)

Poorly written queries burn credits unnecessarily. While comprehensive query tuning is a deep topic, these practices provide immediate savings:

Avoid SELECT *: Select only the columns you need. This reduces the amount of data processed and moved, improving caching and query performance.

Filter Early and Prune Partitions: Apply WHERE clauses that filter on a table's clustering key as early as possible. This lets Snowflake prune massive amounts of data from being scanned. It's the single most effective way to speed up queries on large tables.

Use QUALIFY for Complex Window Function Filtering: Instead of using a subquery or CTE to filter window function results, use the QUALIFY clause. It's more readable and often more performant.

4. Implement Cost Guardrails with Resource Monitors

Implement resource monitors as a critical safety net. Resource monitors track credit consumption and trigger actions like sending notifications or automatically suspending compute when usage hits predefined thresholds. They're the most effective tool for preventing budget overruns from runaway queries or misconfigured pipelines.

Copy code

-- Create a monitor that notifies at 75% and suspends at 100%

CREATE OR REPLACE RESOURCE MONITOR monthly_etl_monitor

WITH CREDIT_QUOTA = 5000

TRIGGERS ON 75 PERCENT DO NOTIFY

ON 100 PERCENT DO SUSPEND;

-- Assign the monitor to a warehouse

ALTER WAREHOUSE etl_heavy_wh SET RESOURCE_MONITOR = monthly_etl_monitor;

Actively monitor serverless feature costs too. Query the serverless_task_history view to track credits consumed by Automatic Clustering, Search Optimization, and other background tasks. This helps you understand your hidden costs and tune these features appropriately.

These internal fixes can significantly lower your Snowflake bill. To eliminate entire categories of spend, particularly from non-production workloads, you need a different approach to compute location.

Go Local: Slashing Dev & Test Costs with DuckDB

A substantial portion of cloud data warehouse spend gets consumed by non-production workloads. Every dbt run, data validation script, and ad-hoc analysis performed by engineers during development consumes expensive cloud compute credits. By adopting a local-first development workflow, you can shift this entire category of work off the cloud and reduce these costs to zero.

DuckDB makes this shift possible. It's a fast, in-process analytical database designed to run complex SQL queries directly on your laptop or within a CI/CD runner. DuckDB queries data files like Parquet and CSV directly. You don't need to load data into a separate database for local development. Engineers can build, test, and iterate on data models and pipelines locally before incurring any cloud costs.

This workflow saves money and dramatically improves developer velocity. You shorten the feedback loop from minutes (waiting for a cloud warehouse to provision and run) to seconds.

A typical local development pattern in Python is straightforward. You can prototype rapidly without any cloud interaction.

Copy code

import duckdb

import pandas as pd

# Analyze a local Parquet file instantly

# No cloud warehouse, no compute credits consumed

df = duckdb.sql("""

SELECT

product_category,

COUNT(DISTINCT order_id) as total_orders,

AVG(order_value) as average_value

FROM 'local_ecommerce_data.parquet'

WHERE order_date >= '2024-01-01'

GROUP BY ALL

ORDER BY total_orders DESC;

""").df()

print(df)

Running analytics locally is powerful for development. For sharing insights and powering production dashboards, this local-first approach extends into a hybrid architecture.

The Hybrid Solution: MotherDuck for Cost-Effective Interactive Analytics

MotherDuck is a serverless data warehouse as a service built on DuckDB. It provides a simpler, Scale-up solution for workloads that are inefficient on traditional distributed data warehouses. It directly solves the idle tax problem by replacing the provisioned warehouse model with per-query, usage-based compute that bills in one-second increments.

This billing model profoundly impacts the cost of interactive analytics. Let's quantify the savings with a realistic scenario.

Breaking Down the Costs: A Tale of Two Queries

Consider a common BI dashboard used by an operations team. It refreshes every 5 minutes during an 8-hour workday to provide timely updates. Each refresh executes 10 small queries to populate various charts. Each query takes 4 seconds to run.

Workload Parameters:

- Queries per refresh: 10

- Execution time per query: 4 seconds

- Refresh frequency: Every 5 minutes (12 refreshes per hour)

- Operational hours: 8 hours/day, 22 days/month

Snowflake Cost Calculation (X-Small Warehouse):

Because of the high frequency, the team can't let the warehouse suspend between refreshes without incurring repeated 60-second minimums. Their most cost-effective option is running an X-Small warehouse continuously during the workday.

- Total active hours per month: 8 hours/day * 22 days/month = 176 hours

- Credits consumed per hour (X-Small): 1

- Total credits per month: 176 hours * 1 credit/hour = 176 credits

- Estimated Monthly Cost (@ $3.00/credit): 176 credits * $3.00/credit = **$528**

This assumes perfect management. A more common scenario where the warehouse runs 24/7 would cost $2,160 (720 hours * 1 credit/hr * $3.00/credit).

MotherDuck Cost Calculation (Pulse Compute):

MotherDuck bills only for the actual compute time used by queries.

- Total queries per month: 10 queries/refresh * 12 refreshes/hr * 8 hrs/day * 22 days/month = 21,120 queries

- Total execution time per month: 21,120 queries * 4 seconds/query = 84,480 seconds

- Total execution hours: 84,480 seconds / 3,600 s/hr = 23.47 hours

- Estimated Monthly Cost (@ $0.25/CU-hour, assuming 1 CU): 23.47 CU-hours * $0.25/CU-hour = **$5.87**

Even assuming a more complex query consuming 4 Compute Units, the cost would only be $23.48. This example shows how a usage-based model eliminates waste for bursty workloads, reducing costs by over 95% in this scenario.

This calculation focuses on compute cost, the primary driver. While negligible for this interactive pattern, a full TCO analysis would include data storage and egress, where MotherDuck also offers competitive pricing.

MotherDuck's architecture introduces "dual execution." Its query planner intelligently splits work between the local DuckDB client and the MotherDuck cloud service. This minimizes data transfer and latency by performing filters and aggregations locally before sending smaller, pre-processed datasets to the cloud. This hybrid model works ideal for interactive analytics, BI dashboards, and ad-hoc queries on sub-terabyte hot data.

Copy code

-- Connect to MotherDuck from any DuckDB-compatible client

[ATTACH 'md:';](https://motherduck.com/docs/getting-started/)

-- This query joins a large cloud table with a small local file.

-- The filter on the local file is pushed down, so only matching

-- user_ids are ever requested from the cloud, minimizing data transfer.

SELECT

cloud_events.event_name,

cloud_events.event_timestamp,

local_users.user_department

FROM my_db.main.cloud_events

JOIN read_csv_auto('local_user_enrichment.csv') AS local_users

ON cloud_events.user_id = local_users.user_id

WHERE local_users.is_priority_user = TRUE;

Proven in Production: Real-World Case Studies of Significant Cost Savings

The savings from this new architecture aren't just theoretical. Companies are already using this model to achieve significant results.

Case Study: Definite Slashes Costs by 70% The SaaS company Definite migrated its entire data warehouse from Snowflake to a DuckDB-based solution. The results were quick and significant, achieving an over 70% reduction in their data warehousing expenses. In their detailed write-up, the engineering team noted that even after accounting for the migration effort, the savings freed up a significant portion of their budget for core product development.

Case Study: Okta Eliminates a $60,000 Monthly Snowflake Bill Okta's security engineering team needed to process trillions of log records for threat detection, with data volumes spiking daily. Their Snowflake solution was costing approximately $2,000 per day ($60,000 monthly). By building a clever system that used thousands of small DuckDB instances running in parallel on serverless functions, they significantly reduced their processing costs. This case shows that even at a large scale, the DuckDB ecosystem can be much cheaper than traditional cloud warehouses.

Case Study: A 79% BI Spend Reduction with a Simple Caching Layer A data engineering team shared their story of implementing a smart caching layer for their BI tool. Instead of having every dashboard query hit Snowflake directly, they routed smaller, frequent queries to a DuckDB instance that served cached results. Large, complex queries were still sent to Snowflake. The impact was a 79% immediate reduction in their Snowflake BI spend, and average query times sped up by 7x. This highlights the power of a hybrid "best tool for the job" approach.

A Framework for Workload Triage

Understanding the tool landscape is one thing. Systematically deciding which of your workloads belong where requires a data-driven approach. Rather than relying on abstract performance benchmarks, you should analyze query history to classify every workload and route it to the most efficient engine.

The two most important axes for classification are Execution Time and Query Frequency. Consider a third axis too: data freshness requirements. A dashboard needing near real-time data has different constraints than one running on a nightly batch refresh.

A simple 2x2 matrix provides a clear framework for triage:

- Low Execution Time, High Frequency: Short, bursty queries that run often.

- Low Execution Time, Low Frequency: Quick, sporadic, ad-hoc queries.

- High Execution Time, Low Frequency: Long-running, scheduled batch jobs.

- High Execution Time, High Frequency: Often an anti-pattern indicating a need for data modeling or architectural redesign. It can occur in complex, near-real-time operational analytics.

You can analyze Snowflake's query_history using SQL to categorize your workloads. This query provides a starting point. We use MEDIAN instead of AVG for execution time because it's more robust to outliers and gives a better sense of typical query duration.

Copy code

-- Analyze query patterns over the last 30 days

WITH query_stats AS (

SELECT

warehouse_name,

user_name,

query_id,

execution_time / 1000 AS execution_seconds

FROM

snowflake.account_usage.query_history

WHERE

start_time >= DATEADD('day', -30, CURRENT_TIMESTAMP())

AND warehouse_name IS NOT NULL

AND execution_status = 'SUCCESS'

)

SELECT

warehouse_name,

user_name,

COUNT(query_id) AS query_count,

MEDIAN(execution_seconds) AS median_execution_seconds, -- More robust than AVG

CASE

WHEN query_count > 1000 AND median_execution_seconds < 30 THEN 'Interactive BI / High Frequency'

WHEN query_count <= 1000 AND median_execution_seconds < 60 THEN 'Ad-Hoc Exploration'

WHEN median_execution_seconds >= 300 THEN 'Batch ETL / Heavy Analytics'

ELSE 'General Purpose'

END AS workload_category

FROM

query_stats

GROUP BY

warehouse_name, user_name

ORDER BY

query_count DESC;

Once categorized, map these workloads to the optimal tool:

-

Interactive BI / High Frequency (Short & Bursty): Prime candidates for migration to MotherDuck. The per-second, usage-based billing model eliminates the idle tax, offering dramatic cost savings for dashboards and embedded analytics.

-

Ad-Hoc Exploration (Short & Sporadic): This category fits well with MotherDuck or local DuckDB. For queries on smaller datasets or local files, DuckDB provides instant, free execution. For shared datasets, MotherDuck offers a cost-effective cloud backend.

-

Batch ETL / Heavy Analytics (Long & Scheduled): These large, resource-intensive jobs often work best on Snowflake. Its provisioned warehouses provide predictable performance for multi-terabyte transformations. Its mature ecosystem simplifies complex data pipelines.

-

Development & CI/CD: Move all non-production workloads to local DuckDB, regardless of their characteristics. This completely eliminates cloud compute costs during development and testing.

When the Hybrid Approach Isn't the Right Fit: Sticking with Snowflake

To build an effective architecture, you need to know a tool's limitations. The hybrid approach isn't a universal solution. Certain workloads are best suited for a mature, large-scale data warehouse like Snowflake. Acknowledging this builds trust and leads to better technical decisions.

Massive Batch ETL/ELT: For scheduled jobs processing many terabytes of data, Snowflake's provisioned compute model provides predictable power and performance. The 60-second minimum doesn't matter for jobs that run for hours.

Enterprise-Grade Governance and Security: Organizations with complex data masking requirements, deep Active Directory integrations, or strict regional data residency rules often rely on Snowflake's mature and comprehensive features.

Highly Optimized, Long-Running Workloads: If you have a workload that already runs consistently on a warehouse and maximizes its uptime (like a data science cluster running for 8 hours straight), the idle tax isn't a problem. There's little cost benefit to moving it.

The goal of a hybrid architecture is using the right tool for the right job, not replacing a tool that's already performing efficiently.

The Modern Alternatives Landscape: Where Does MotherDuck Fit?

While the Snowflake-and-MotherDuck hybrid model effectively addresses many common workloads, the broader data platform market offers other specialized solutions. Understanding where they fit provides a complete picture for architectural decisions.

Data lake query engines like Starburst and Dremio are powerful for organizations wanting to query data directly in object storage like S3. They offer flexibility but often come with significant operational overhead.

For use cases demanding sub-second latency at very high concurrency (like real-time observability), specialized engines like ClickHouse often provide superior price-performance.

Within classic cloud data warehouses, Google BigQuery presents a different pricing model. Its on-demand, per-terabyte-scanned pricing can be cost-effective for sporadic forensic queries. But it carries the risk of a runaway query where a single mistake leads to a massive bill.

MotherDuck carves a unique niche. It combines the serverless simplicity of BigQuery with the efficiency of a local-first workflow powered by DuckDB. This makes it highly cost-effective and productive for teams focused on speed, iteration, and interactive analytics. You don't get the cost penalty of a traditional warehouse or the operational complexity of a data lake.

| Workload Type | Recommended Primary Tool | Rationale |

|---|---|---|

| Local Dev/Testing | DuckDB | Eliminates cloud compute cost for non-production work. |

| Interactive Dashboards (<5TB) | MotherDuck | Per-second billing avoids idle tax on bursty query patterns. |

| Large Batch ETL (>10TB) | Snowflake | Predictable performance and mature features for heavy jobs. |

| Real-Time Observability (High QPS) | ClickHouse | Optimized architecture for sub-second latency at high concurrency. |

| Sporadic Forensic Queries | BigQuery (On-Demand) / MotherDuck | Pay-per-use model is efficient for unpredictable, infrequent queries. |

Conclusion and Path Forward

The path to a more efficient and cost-effective analytics stack doesn't require abandoning existing investments. You augment them intelligently. By adopting a three-tiered strategy, organizations gain control over their cloud data warehouse spending while empowering teams with better tools.

The strategy is simple:

-

Tune: Implement Snowflake-native optimizations like 60-second auto-suspend timers, right-sized warehouses, and resource monitors to immediately reduce waste.

-

Go Local: Shift all development and testing workloads to a local-first workflow with DuckDB. This eliminates an entire category of cloud compute spend.

-

Go Hybrid: Use the workload triage framework to identify bursty, interactive workloads. Offload them to MotherDuck, replacing the idle tax with fair, usage-based billing.

This hybrid architecture uses each platform's strengths. Snowflake handles massive, scheduled batch processing and enterprise governance. The DuckDB/MotherDuck ecosystem handles cost-effective development, ad-hoc exploration, and interactive analytics.

Start with your own data. Analyze your Snowflake query_history using the provided script. If you see a high volume of queries with median execution times under 30 seconds, that workload is a prime candidate for migration.

From there:

- Audit: Use the provided SQL scripts to identify your most expensive and inefficient warehouses.

- Experiment: Download DuckDB and run your next data model test locally.

- Prototype: Sign up for MotherDuck's free tier, upload a dataset, and connect a BI tool to experience the performance and simplicity firsthand.

By taking these steps, teams transform their analytics budget from a source of stress into a driver of innovation.

Start using MotherDuck now!

FAQS

Why is my Snowflake bill so high when my data isn’t that big?

Your bill is likely high due to compute costs, which often account for over 80% of the total. Snowflake's pricing includes a 60-second minimum charge every time a warehouse activates, creating an "idle-compute tax" on the short, frequent queries common in development and BI.

What are cost-effective alternatives to Snowflake for data warehousing?

For many modern analytical workloads, a hybrid architecture using DuckDB for local processing and MotherDuck for a serverless cloud backend is a highly cost-effective alternative. This model is designed to eliminate idle compute costs and can reduce data warehousing bills by 70-90%.

Can any tools reduce my Snowflake spend by handling queries locally?

Yes. The open-source DuckDB Snowflake Extension allows you to query data from your Snowflake warehouse directly within a local DuckDB instance. This lets you handle development, testing, and iterative analysis on your laptop for free, significantly reducing Snowflake credit consumption.

How does a hybrid local-cloud analytics model optimize costs?

It shifts the bulk of analytics work—especially development and ad-hoc queries—from an expensive, minute-metered cloud warehouse to your local machine, where it's free. You only use the serverless cloud backend (like MotherDuck) for collaboration or larger queries, paying only for the actual seconds of compute used.

How can we optimize Snowflake resource allocation without hurting performance?

Start by right-sizing warehouses (default to XS/S), setting aggressive auto-suspend policies (30-120 seconds), and consolidating workloads. For a bigger impact, offload development and BI workloads to a hybrid DuckDB/MotherDuck architecture to isolate and reduce the most inefficient costs.

Is MotherDuck a full replacement for Snowflake?

For many startups and teams with data under a few terabytes, it can be a full replacement. For enterprises with petabyte-scale batch processing needs, it serves as a powerful complement to offload expensive interactive and development workloads that Snowflake handles inefficiently.

Can MotherDuck connect to my existing BI tools like Tableau or Power BI?

Yes. MotherDuck and DuckDB support standard connection protocols like JDBC and ODBC, allowing them to integrate with most major BI and data visualization tools. You can power dashboards from either a local DuckDB instance or the MotherDuck serverless backend.