Hey, friend 👋

I hope you're doing well. I'm Simon, and I am excited to share another monthly newsletter with highlights and the latest updates about DuckDB, delivered straight to your inbox.

In this May issue, I gathered 10 links highlighting updates and news from DuckDB's ecosystem. This time, we have exciting innovations like SQL-based 3D graphics, Reddit discussions around DuckDB, geospatial capabilities, Metabase integration, and performance optimizations for working with JSON and Parquet files. Check out this edition below.

| Featured Community Member |

Robin Moffatt

Robin is a technologist whose career spans from COBOL to Kafka!

Beyond the code, he’s a skilled communicator and prolific tech content creator, blogging since 2009. Recently, Robin has published several insightful posts exploring DuckDB, including:

- Building a data pipeline with DuckDB

- Exploring UK Environment Agency data in DuckDB and Rill

You can find more of Robin’s writing at rmoff.net.

| Top DuckDB Links this Month |

Metabase DuckDB Driver shipped as 3rd party plugin

TL;DR: The metabase_duckdb_driver plugin enables Metabase (BI tool) to use DuckDB as a data source, allowing direct SQL queries on Parquet files and in-memory databases.

The open-source metabase_duckdb_driver plugin allows Metabase to connect to DuckDB databases, supporting both file-based and in-memory (:memory:) modes. A key feature is the ability to directly query Parquet files without loading data into a database. For example, a query can be executed directly from the Metabase SQL editor. The driver also supports using DuckDB in-memory, which can help process data without persisting.

Normalizing Repeated JSON Fields in FDA Drug Data Using DuckDB

TL;DR: Normalizing nested JSON fields in the FDA drug event dataset using DuckDB. A practical showcase of increasing significant performance can be achieved by creating lookup tables for repeated values.

Chris encountered performance bottlenecks due to high cardinality nested fields within the FDA's raw JSON data. To address this, he created tables that normalized nested JSON with unique values and IDs, reducing the original query from several minutes to just 0.166 seconds. A practical takeaway: This is a good example of how normalizing high-cardinality JSON fields in DuckDB can substantially improve query performance with indexing and scanning less data, especially when dealing with large, semi-structured datasets.

FlockMTL: Beyond Quacking: Deep Integration of Language Models and RAG into DuckDB

TL;DR: Researchers have developed FlockMTL, an open-source DuckDB extension that deeply integrates language models and retrieval-augmented generation capabilities directly into SQL workflows.

FlockMTL introduces model-driven scalar and aggregate functions that enable SQL queries to perform semantic operations like classification, summarization, and re-ranking using LLMs. The extension introduces two new first-class schema objects — MODEL and PROMPT — alongside traditional TABLE objects, allowing resource independence when updating models or prompts without changing application logic. Find the GitHub repo and DuckDB extension in these links.

Abusing DuckDB-WASM by making SQL draw 3D graphics (Sort Of)

TL;DR: Text-based Doom clone running entirely in DuckDB-WASM, implementing raycasting and game physics through SQL queries at 6-7 FPS in the browser.

Patrick's project uses DuckDB-WASM to manage game state, collision detection, and 3D rendering through SQL queries. The wild part and innovation lie in the render_3d_frame SQL VIEW, which employs recursive CTEs for raycasting and perspective correction and uses JavaScript for orchestration and Z-buffer sprite handling. A practical takeaway: This demonstrates DuckDB-WASM's potential for unconventional applications beyond traditional data analytics. Find source code on GitHub.

DuckDB is Probably the Most Important Geospatial Software of the Last Decade

TL;DR: DuckDB's spatial extension significantly lowers the barrier to entry for geospatial data analysis within SQL.

The Geospatial extension statically bundles standard FOSS GIS packages, including the PROJ database, and offers them across multiple platforms, including WASM, eliminating transitive dependencies (except libc). This allows users to convert between geospatial formats using GDAL and perform transformations via SQL. 📝: Spatial join optimization was recently merged on the dev branch.

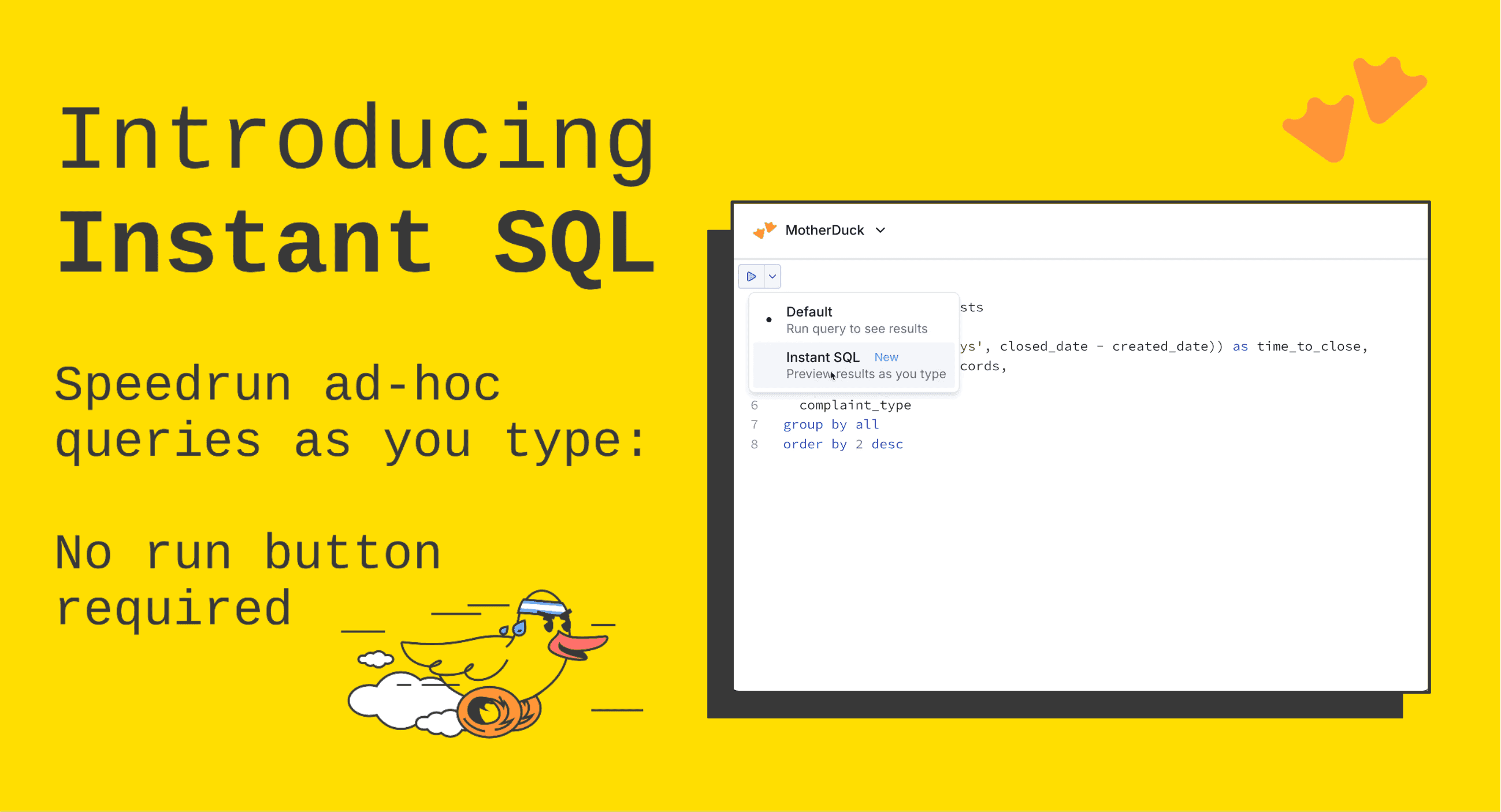

Instant SQL is here: Speedrun ad-hoc queries as you type

TL;DR: MotherDuck introduces Instant SQL, a feature for real-time query result previews as you type, leveraging DuckDB's architecture and query rewriting capabilities.

Instant SQL, available in MotherDuck and the DuckDB Local UI, speeds up query building and debugging by providing result set previews that update instantly as the SQL is typed. A key feature includes CTE inspection capabilities as a time-saver for debugging complex SQL queries. A central component uses DuckDB's JSON extension to obtain an abstract syntax tree (AST) from SELECT statements via a SQL scalar function, enabling parser-powered features.

An alternative connection method uses the Amazon SageMaker Lakehouse (AWS Glue Data Catalog) Iceberg REST Catalog endpoint: ATTACH 'account_id:s3tablescatalog/namespace_name' AS (TYPE iceberg, ENDPOINT_TYPE glue);. The extension also supports Iceberg's schema evolution, allowing users to follow changes in the table's schema.

Some serious questions regarding DuckDB on Reddit: r/dataengineering

TL;DR: Insights from the data engineering community on a Reddit discussion around DuckDB.

The discussion reveals that DuckDB is used in production for tasks like ingesting various file formats, online interactive spatial queries using spatial extension, applying custom logic to arrays with lambdas, and performing repeated joins on smaller datasets. It's also favored for local analysis and for handling poorly formatted CSV files. Some users integrate DuckDB with Ray for distributed chunk processing. This discussion showcases DuckDB's versatility (as discussed in an earlier article).

Merge Parquet with DuckDB

TL;DR: Use DuckDB to efficiently merge multiple Parquet files into one file, optionally performing data transformations during the process.

The article demonstrates how DuckDB can consolidate multiple Parquet files into one. The process involves using DuckDB's SQL interface to read Parquet files via a glob pattern. The read_parquet function offers parameters like filename = true to add a column indicating the source file for each row and union_by_name to handle differing schemas across files. Showcases show data transformations with hashing with md5, column renaming, and the COPY statement to write the transformed data into a single Parquet file.

DuckDB's CSV Reader and the Pollock Robustness Benchmark: Into the CSV Abyss

TL;DR: DuckDB's CSV reader achieves top ranking in the Pollock Benchmark due to its robustness in handling non-standard CSV files.

DuckDB's CSV parser prioritizes reliability alongside speed and ease of use. The parser's flexibility is demonstrated through options like strict_mode = false, which allows parsing CSVs with unescaped quotes or inconsistent column counts, and null_padding = true, which handles missing values. Setting these options will enable DuckDB to correctly read 99.61% of data from the Pollock Benchmark files. The blog post details how to use DuckDB's CSV reader to handle common CSV errors and achieve a valid result from faulty CSV files.

My browser WASM’t prepared for this. Using DuckDB, Apache Arrow and Web Workers in real life

TL;DR: Motif Analytics explores using DuckDB WASM, Apache Arrow, and Web Workers for in-browser analytics, highlighting performance trade-offs and schema consistency challenges.

Przemyslaw details the implementation of an in-browser analytics tool leveraging DuckDB WASM for SQL queries, Apache Arrow for data interchange between Web Workers, and Web Workers for parallel processing and highlighting real-world challenges in maintaining schema consistency when using multiple Arrow tables from different workers. It addresses limitations like the 4GB memory limit in Chrome for WASM and potential bugs in the WASM. Takeaway: Despite all of this, DuckDB WASM is currently one of the fastest (if not the fastest) engines for querying fully in-browser.

| Upcoming Events |

Getting Started with MotherDuck

Thu, May 08 09:30 PST - Online

Looking to get started with MotherDuck and DuckDB? Join us for a live session to learn how MotherDuck makes analytics fun, frictionless, and ducking awesome!

Stay in Flow with MotherDuck's Instant SQL

May 14 09:30 PST - Online

ODSC East: Making Big Data Feel Small with DuckDB

May 15 - In-person [US - San Francisco]

Ryan Boyd, co-founder at MotherDuck, will speak at ODSC East. Learn how well an “embedded database” scales! DuckDB is being used in production to process terabytes and petabytes of data.

Subscribe to DuckDB Newsletter