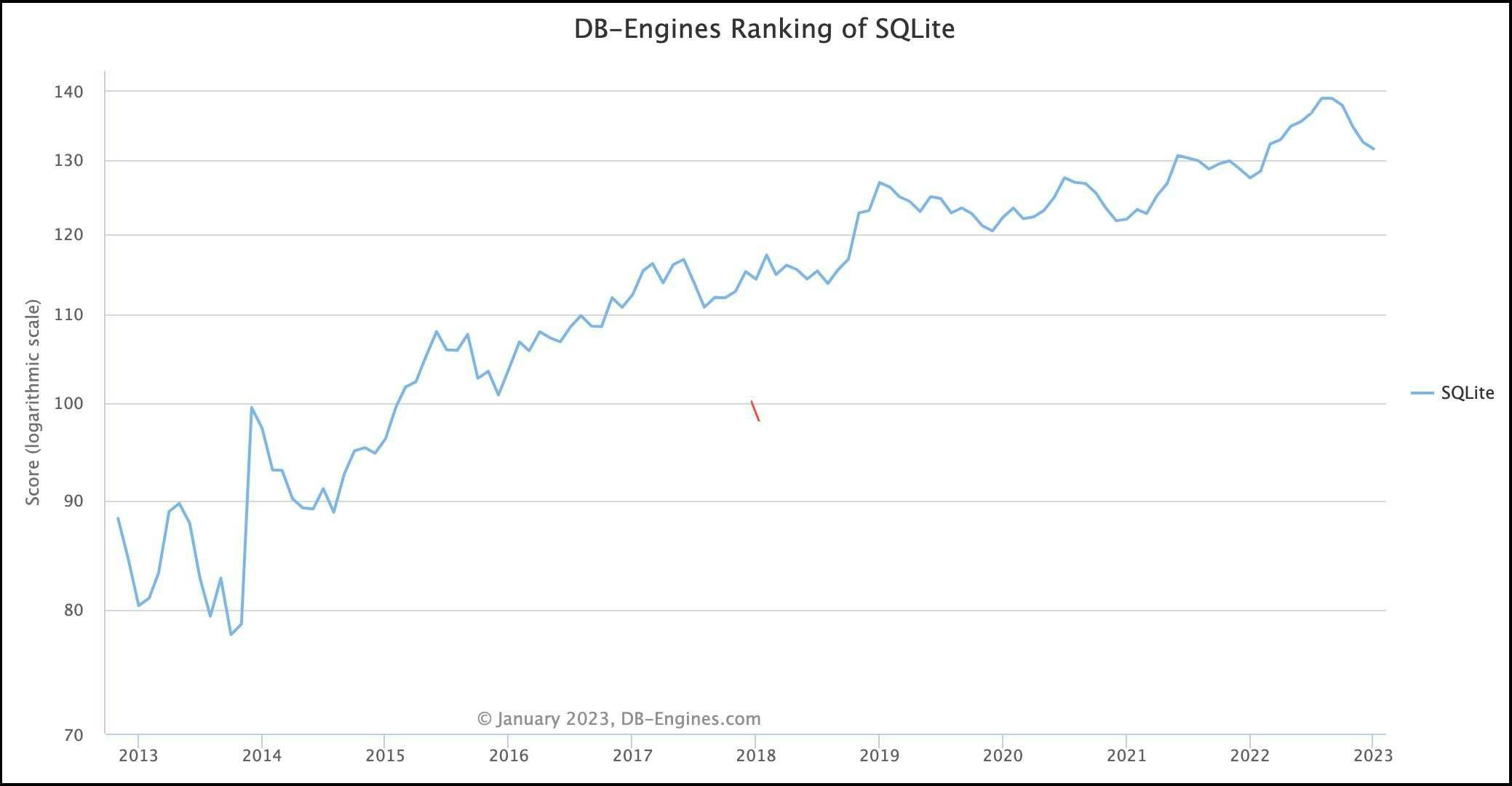

DuckDB is often referred to as the 'SQLite for analytics.' This analogy helps us understand several key properties of DuckDB: it's for analytics (OLAP), it's embeddable, it's lightweight, it's self-contained and it's widely deployed. Okay, the latter may not be a given yet for DuckDB, but SQLite says it's likely the most widely used and deployed database engine and, with the rising popularity of analytics, it's quite possible DuckDB will eventually be competitive.

It should be noted that while the original row-based architecture of SQLite lends itself well to transactional workloads (heavy reads and writes, few aggregations), there has been some work being done to make SQLite better for analytics workloads. Simon Willison summarizes the work in a blog post from last fall based on a VLDB paper and CIDR presentation from the SQLite team.

Working with SQLite databases in DuckDB

The DuckDB team added support to query SQLite databases directly from DuckDB using the sqlitescanner extension. This extension makes a SQLite database available as read-only views within DuckDB.

For this blog post, we'll use the SQLite Sakila Sample Database to show you how SQLite in DuckDB works. This database is a SQLite port of the original MySQL sample database representing a ficticious DVD rental store.

If you prefer watching videos to learn, Mark Needham has a short video tutorial on this topic that's worth a watch.

Loading the database

In order to load the database inside DuckDB, you'll need to install and load the extension.

Copy code

$ duckdb D INSTALL sqlite; D LOAD sqlite;

Next, you'll want to attach the SQLite database. If you downloaded the database from Kaggle above and have it in your current directory, you'll call the sqlite_attach procedure as follows.

Copy code

CALL sqlite_attach('sqlite-sakila.db');

Exploring the data and running analytics queries

Copy code

D SHOW tables; ┌────────────────────────┐ │ name │ │ varchar │ ├────────────────────────┤ │ actor │ │ address │ │ category │ │ city │ │ country │ │ customer │ │ customer_list │ │ film │ │ film_actor │ │ film_category │ │ film_list │ │ film_text │ │ inventory │ │ language │ │ payment │ │ rental │ │ sales_by_film_category │ │ sales_by_store │ │ staff │ │ staff_list │ │ store │ ├────────────────────────┤ │ 21 rows │ └────────────────────────┘

Now let's try to get the top film categories based on the number of rentals. Note that each film is only in one category.

Query:

Copy code

SELECT c.name, count(*) cs

FROM rental r

LEFT JOIN inventory i USING (inventory_id)

LEFT JOIN film_category fc USING (film_id)

LEFT JOIN category c USING (category_id)

GROUP BY c.name

ORDER BY cs DESC;

Result:

Copy code

┌─────────────┬───────┐ │ name │ cs │ │ varchar │ int64 │ ├─────────────┼───────┤ │ Sports │ 1179 │ │ Animation │ 1166 │ │ Action │ 1112 │ │ Sci-Fi │ 1101 │ │ Family │ 1096 │ │ Drama │ 1060 │ │ Documentary │ 1050 │ │ Foreign │ 1033 │ │ Games │ 969 │ │ Children │ 945 │ │ Comedy │ 941 │ │ New │ 940 │ │ Classics │ 939 │ │ Horror │ 846 │ │ Travel │ 837 │ │ Music │ 830 │ ├─────────────┴───────┤ │ 16 rows 2 columns │ └─────────────────────┘

It looks like Sports movies are the most popular. Sigh, sportsball.

Differences between SQLite and DuckDB

There are some noticeable differences between SQLite and DuckDB in how data is stored. SQLite, as a data store focused on transactions, stores data row-by-row while DuckDB, as a database engine for analytics, stores data by columns. Additionally, SQLite doesn't strictly enforce types in the data -- this is known as being weakly typed (or flexibly typed).

Let's look at the customer table.

Copy code

D DESCRIBE customer; ┌─────────────┬─────────────┬─────────┬───────┬─────────┬───────┐ │ column_name │ column_type │ null │ key │ default │ extra │ │ varchar │ varchar │ varchar │ int32 │ varchar │ int32 │ ├─────────────┼─────────────┼─────────┼───────┼─────────┼───────┤ │ customer_id │ BIGINT │ YES │ │ │ │ │ store_id │ BIGINT │ YES │ │ │ │ │ first_name │ VARCHAR │ YES │ │ │ │ │ last_name │ VARCHAR │ YES │ │ │ │ │ email │ VARCHAR │ YES │ │ │ │ │ address_id │ BIGINT │ YES │ │ │ │ │ active │ VARCHAR │ YES │ │ │ │ │ create_date │ TIMESTAMP │ YES │ │ │ │ │ last_update │ TIMESTAMP │ YES │ │ │ │ └─────────────┴─────────────┴─────────┴───────┴─────────┴───────┘

You'll notice that the store_id is a BIGINT, which makes sense. The data in the example SQLite database we're using abides by that typing, but it's not guaranteed since it's not strongly-typed.

Copy code

D SELECT * FROM customer; ┌─────────────┬──────────┬────────────┬───────────┬───┬────────────┬─────────┬─────────────────────┬─────────────────────┐ │ customer_id │ store_id │ first_name │ last_name │ … │ address_id │ active │ create_date │ last_update │ │ int64 │ int64 │ varchar │ varchar │ │ int64 │ varchar │ timestamp │ timestamp │ ├─────────────┼──────────┼────────────┼───────────┼───┼────────────┼─────────┼─────────────────────┼─────────────────────┤ │ 1 │ 1 │ MARY │ SMITH │ … │ 5 │ 1 │ 2006-02-14 22:04:36 │ 2021-03-06 15:53:36 │

Let's show you how a user might take advantage of the "flexible typing" in SQLite.

Queries:

Copy code

sqlite> UPDATE customer SET store_id='first' WHERE first_name='MARY';

sqlite> SELECT * from customer WHERE first_name='MARY'

Result:

Copy code

1|first|MARY|SMITH|MARY.SMITH@sakilacustomer.org|5|1|2006-02-14 22:04:36.000|2023-01-22 22:06:20

Oops! We now have a store_id that's a string instead of an integer! Because it's weakly typed, this will have little effect on SQLite, but if we pop over into the strongly-typed DuckDB and try to query this table, we'll get an error.

Copy code

D SELECT * FROM customer; Error: Invalid Error: Mismatch Type Error: Invalid type in column "store_id": column was declared as integer, found "first" of type "text" instead.

To avoid this error, we can set the sqlite_all_varchar option to ignore the data types specified in SQLite and interpret all data in the DuckDB views as being of the VARCHAR type.

Copy code

SET GLOBAL sqlite_all_varchar=true;

Note that this option has to be set before we attach the SQLite database, or we will receive a different error:

Copy code

D SELECT * FROM customer; Error: Binder Error: Contents of view were altered: types don't match!

Loading data into DuckDB from SQLite

In order to take advantage of all the performance optimizations of DuckDB's columnar-vectorized query engine, you might wish to load the SQLite data into native DuckDB tables. You can do this very easily if you don't have any type matching problems as discussed above.

For example, to create the customer table in DuckDB as customerf, you can do:

Copy code

CREATE TABLE customerf AS SELECT * FROM customer

If this doesn't work because of mismatched types, you can set the sqlite_all_varchar option discussed earlier and load the data into DuckDB, taking advantage of DuckDB's implicit type casting.

Copy code

CREATE TABLE customerf(customer_id bigint, store_id bigint, first_name varchar, last_name varchar, email varchar, address_id bigint, active varchar, create_date timestamp, last_update timestamp);

INSERT INTO customerf SELECT * FROM customer WHERE TRY_CAST(store_id AS BIGINT) IS NOT NULL;

You'll notice that customer had 599 rows, while the new customerf has 598. You can now correct the unclean row of data and insert it again manually.

What about other databases?

Although this post discussed using SQLite databases within DuckDB, you can now also query PostgreSQL databases from within DuckDB.

What other databases would you like to see supported?

Start using MotherDuck now!