What Are Small AI Models?

Small AI models are compact versions of large language models that can run on ordinary hardware like laptops or even phones. While cloud-based models from providers like OpenAI or Anthropic typically have hundreds of billions or even trillions of parameters, small models range from 0.5 to 70 billion parameters and are only a few gigabytes in size.
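To make the "few gigabytes" figure concrete, here is a minimal back-of-the-envelope sketch. It assumes weight storage dominates model size and ignores runtime overhead such as the KV cache; the function name and the choice of 16-bit versus 4-bit weights are illustrative assumptions, not something the talk specifies.

```python
# Rough size of a model's weights: parameters x bits per weight.
# Assumption: weights dominate; KV cache and runtime overhead are ignored.

def weights_size_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for params in (0.5, 8, 70):
        for bits in (16, 4):
            size = weights_size_gb(params, bits)
            print(f"{params}B parameters at {bits}-bit: ~{size:.1f} GB")
```

Under these assumptions an 8B model quantized to 4 bits comes to about 4 GB, which fits comfortably on a laptop, while a 70B model at the same precision is closer to 35 GB and needs a machine with considerably more memory.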

These models share the same underlying architecture and research foundations as their larger counterparts - they're based on the transformer architecture that powers most modern AI systems. The key difference is their size, which makes them practical for local deployment without expensive GPU clusters.

Why Small Models Matter for Local Development

Faster Performance Through Local Execution

Running models locally provides surprising speed advantages. Small models execute faster because of their reduced parameter count: per-token inference compute grows roughly in proportion to the number of parameters, so a model with 1 billion parameters needs far less compute per token than one with hundreds of billions. Additionally, eliminating network round trips removes network latency from every request, so response time is bounded only by local compute.
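The two effects can be sketched with simple arithmetic. This is a rough estimate, not a benchmark: it uses the common rule of thumb that a dense transformer forward pass costs about 2 FLOPs per parameter per generated token, and the throughput and round-trip values passed in are placeholders you would replace with your own measurements. Real local inference is often limited by memory bandwidth rather than raw compute, so treat the output as an order-of-magnitude guide.

```python
# Back-of-the-envelope response time: network round trip + per-token compute.
# Assumption: ~2 FLOPs per parameter per generated token (dense model rule of thumb).

def forward_flops_per_token(params: float) -> float:
    """Approximate forward-pass FLOPs needed to generate one token."""
    return 2.0 * params


def response_time_s(params: float, tokens: int, flops_per_s: float,
                    network_rtt_s: float = 0.0) -> float:
    """Estimated seconds to produce `tokens` tokens, plus any network round trip."""
    compute_s = tokens * forward_flops_per_token(params) / flops_per_s
    return network_rtt_s + compute_s


if __name__ == "__main__":
    # Relative compute per token: 200B parameters versus 1B parameters.
    ratio = forward_flops_per_token(200e9) / forward_flops_per_token(1e9)
    print(f"A 200B model needs ~{ratio:.0f}x the compute per token of a 1B model")
```

Setting `network_rtt_s` to zero models the local case, where the network is not part of the equation at all.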

Data Privacy and Freedom to Experiment

Local models keep data on your machine, eliminating concerns about sharing sensitive information with cloud providers. This isn't just about privacy paranoia - it liberates developers to experiment freely without worrying about security controls, approval processes, or compliance requirements. Teams can prototype and test ideas without the friction of corporate security reviews.

Cost Structure Benefits

While local models aren't free (you still need hardware), they avoid the per-token pricing of cloud APIs. Modern hardware is increasingly optimized for AI workloads - Intel claims 100 million AI-capable computers will ship within a year, and Apple Silicon dedicates roughly a third of its chip area to neural processing. This hardware investment pays dividends across all your AI experiments without ongoing API costs.

Getting Started with Ollama

Ollama provides an easy way to run these models locally. Here's a simple example using Python:

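import ollama

# Chat with a model (assumes the Ollama server is running and llama3.1 has been pulled)
response = ollama.chat(model='llama3.1', messages=[
    {'role': 'user', 'content': 'What is DuckDB? Keep it to two sentences.'}
])

# Print the assistant's reply
print(response['message']['content'])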

The models run entirely on your local machine, with response speeds that are often on par with, if not faster than, cloud providers. Popular models include:

- Llama 3.1 from Meta

- Gemma from Google

- Phi from Microsoft Research

- Qwen 2.5 from Alibaba

Combining Small Models with Local Data

Retrieval Augmented Generation (RAG)

Small models excel when combined with existing factual data through a technique called Retrieval Augmented Generation. Since smaller models may hallucinate when asked about specific facts, RAG compensates by providing relevant data snippets at runtime.

The process involves:

- Pre-processing your data into a vector store

- When queried, retrieving relevant data snippets

- Augmenting the model's prompt with this factual information

- Getting accurate responses grounded in your actual data

Tools like LlamaIndex and LangChain simplify implementing RAG patterns, allowing models to answer questions about your specific datasets accurately.
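The retrieve-then-augment flow can be sketched in plain Python. This is a toy illustration, not how LlamaIndex or LangChain implement it: the "embedding" is a simple word count rather than a learned vector, the function names are hypothetical, and the facts are illustrative strings echoing the duck facts from the talk's demo.

```python
import math
import re
from collections import Counter

# Illustrative facts (hypothetical wording), standing in for a facts file.
FACTS = [
    "Ducks can fly as high as 21,000 feet.",
    "The fastest duck ever recorded flew at 100 miles per hour.",
    "Ducks are able to dive.",
]


def embed(text):
    """Toy 'embedding': lowercase word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_store(chunks):
    """Pre-process: pair each chunk of text with its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(store, question, k=1):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def augment_prompt(question, snippets):
    """Build a prompt that grounds the model in the retrieved snippets."""
    context = "\n".join(f"- {s}" for s in snippets)
    return f"Answer using only these facts:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = build_store(FACTS)
    question = "How high can ducks fly?"
    print(augment_prompt(question, retrieve(store, question)))
```

The resulting prompt string is what you would pass as the user message to a local model; swapping the word-count `embed` for a real embedding model changes retrieval quality but not the overall shape of the pipeline.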

Tool Calling and Small Agents

A newer capability enables models to decide when and how to fetch data themselves through "tool calling." Instead of pre-building all the plumbing to connect data sources, the model can:

- Analyze what information it needs

- Write and execute database queries

- Interpret results in context

- Provide natural language responses

For example, newer models like Qwen 2.5 Coder can write SQL queries against DuckDB databases, execute them, and interpret the results - all based on natural language prompts.
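The application side of tool calling can be sketched as follows. Several things here are assumptions made so the sketch runs with only the standard library: `sqlite3` stands in for DuckDB, the table rows are hypothetical, and `MODEL_MESSAGE` is a hand-written stand-in for a model reply whose shape mimics a common chat tool-call format rather than being captured from a real model. The key point it illustrates is that the model only requests a query; your code decides whether and how to run it.

```python
import json
import sqlite3


def make_db():
    """Create a small in-memory ducks table (sqlite3 used as a stand-in for DuckDB)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE ducks (first_name TEXT, last_name TEXT, color TEXT)")
    conn.executemany(
        "INSERT INTO ducks VALUES (?, ?, ?)",
        [("Marty", "McFly", "yellow"), ("Doc", "Brown", "white")],  # hypothetical rows
    )
    return conn


def run_query(conn, sql):
    """The single tool exposed to the model: run a read-only SQL query."""
    if not sql.lstrip().lower().startswith("select"):
        raise ValueError("only SELECT statements are allowed in this sketch")
    return conn.execute(sql).fetchall()


def handle_tool_calls(conn, message):
    """Execute each tool call the model requested and return tool-result messages."""
    results = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        if fn["name"] != "run_query":
            continue  # ignore tools this application does not provide
        rows = run_query(conn, fn["arguments"]["query"])
        results.append({"role": "tool", "content": json.dumps(rows)})
    return results


# Hand-written stand-in for a model reply that asks to run a query (assumed shape).
MODEL_MESSAGE = {
    "role": "assistant",
    "content": "",
    "tool_calls": [{
        "function": {
            "name": "run_query",
            "arguments": {
                "query": "SELECT color FROM ducks "
                         "WHERE first_name = 'Marty' AND last_name = 'McFly'"
            },
        }
    }],
}

if __name__ == "__main__":
    print(handle_tool_calls(make_db(), MODEL_MESSAGE))
```

In a full loop, the tool-result messages would be appended to the conversation and sent back to the model so it can phrase the final answer in natural language. Restricting the tool to `SELECT` statements is a design choice for this sketch, since the model's output is untrusted input to your database.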

Practical Use Cases

Internal Tooling and Back Office Applications

The most successful deployments start with internal tools rather than customer-facing applications:

- IT help desk automation

- Security questionnaire processing

- Data engineering and reporting tasks

- Engineering productivity tools for issue management and code review

Combining Small and Large Models

Small models don't replace cloud-scale models entirely. Like hot and cold data storage, you can use small models for most queries and escalate to larger models when needed. Apple and Microsoft already use this hybrid approach in their AI products.
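One way to picture the tiered approach is a small-first fallback. This is a hypothetical sketch: the source does not describe how any vendor routes requests, and the `small`, `large`, and `is_good_enough` callables are placeholders you would supply (for example, a local model call, a cloud API call, and a validation check on the draft answer).

```python
from typing import Callable, Tuple


def answer_with_fallback(
    prompt: str,
    small: Callable[[str], str],
    large: Callable[[str], str],
    is_good_enough: Callable[[str], bool],
) -> Tuple[str, str]:
    """Try the small model first; escalate to the large model only if the draft fails the check.

    Returns a (tier, answer) pair so callers can track how often escalation happens.
    """
    draft = small(prompt)
    if is_good_enough(draft):
        return "small", draft
    return "large", large(prompt)


if __name__ == "__main__":
    # Stand-in callables for illustration only; no real models are invoked.
    tier, answer = answer_with_fallback(
        "How many ducks are yellow?",
        small=lambda p: "There are eight yellow ducks.",
        large=lambda p: "(answer from a larger model)",
        is_good_enough=lambda text: bool(text.strip()),
    )
    print(tier, answer)
```

Returning the tier alongside the answer makes it easy to measure what fraction of queries the small model handles on its own.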

The Future is Small and Local

Open source models are rapidly improving, with performance gaps between small and large models shrinking. New models emerge weekly with better capabilities, specialized functions, and permissive licenses. Combined with hardware improvements specifically targeting AI workloads, local model deployment is becoming increasingly practical for production use cases.

The ecosystem around small models continues to expand, with thousands of fine-tuned variants available for specific tasks. As these models improve and hardware accelerates, the ability to run powerful AI locally transforms from a nice-to-have into a competitive advantage for development teams.

Transcript

0:00[Music]

0:15hey everybody it's so good to be here uh my name's Jeff I'm one of the founders of an open source project uh named Ollama it's the easiest way to get up and running uh with large language models locally on your Mac Windows or Linux computer Ollama is especially good at running small models and I'm going to be talking

0:32a bit more about those today um but before jumping into that I just want to share a little bit about my own small and local journey um before working on this open source project named Ollama I was at Docker for five years where I worked on Docker Desktop which was a way to run containers locally um and I've been

0:50working on this kind of problem space for a good uh five to six years and it ends up that you know even though kind of Docker originated from software that was used in production at Google and cloud computing was its primary purpose um a lot of what makes Docker Docker is actually the local experience around it and Docker Desktop's used by over 20

1:12million developers today um after Docker you know these new models were coming out you may have heard of them like Llama or Gemma and you know we kind of realized that we weren't AI experts some of us on on the team but we really kind of wanted to run these models and there there's a lot of problems with running

1:31AI models that felt very similar to running um these kind of uh Linux containers um and so I'm here to talk a little bit about these small models and how to run them locally um I'll start off with a little bit of an introduction for those who aren't familiar uh with these models um and then talk about two

1:50primary use cases um that the team and I working on Ollama have been seeing very consistently uh around deploying small models locally um we'll talk a bit about how you can get started with small models and what are actual use cases that are being used in companies today around small models okay so small models I'm sure everyone here has probably used

2:09ChatGPT or Claude or Gemini um or

2:13tooling around that like Perplexity um and the whole idea behind small AI models um is really similar to these large AI models that really took the world by storm um kind of in 2023 and is obviously uh continuing to grow really fast today um they have a very similar architecture and core idea they're based on the same white papers ultimately and

2:36research um the difference though is that they're really small whereas these cloud models although they're not disclosed probably have hundreds of billions of of parameters uh they call it or even trillions of parameters um these small models only have maybe um 0.5 to 70 billion parameters um they're only a few gigabytes in size which means they definitely fit on your laptop I

2:58mean heck they even fit on a phone um and they run on ordinary hardware so you don't really need these really expensive hard-to-buy clusters of GPUs all wired up in a special way to run them you can actually run them um right here on your existing computer and I'll show a little bit of that today um the most

3:16interesting part about it is similar to like a lot of the most powerful database software is that they're openly available so they're not gated you don't have to put your credit card um you can go and download them a lot of them even have very um easy to adopt licenses like MIT or the Apache License um but you know great that these

3:34things exist the kind of miniature versions of what really started this whole AI wave um but why do they matter and there's this kind of misconception that small models are kind of just shitty versions of what you can get um from OpenAI or Anthropic um and that's really untrue and so there are kind of three major reasons um the first one is

3:54that counterintuitively even though you're running them on kind of you know existing consumer Hardware um they're actually a lot faster um for two really simple reasons a lot of them are why running a local database is faster um the first one is that you ultimately with less parameters the model just runs a lot faster um the compute time for a

4:15AI model today that uses the transformer architecture which is what most of them do um is quadratic with the number of parameters so it's N squared so just by going from you know hundreds of billions of parameters to one billion parameters for example um you're cutting a serious amount of compute time um and the second and more interesting use case is that

4:34because they're local there's no round trips and this shares all the benefits of why uh small data runs so incredibly fast is that you don't need to go off to the internet you can run everything just locally and so the whole the Network's not even part of the equation it's also the fact that you know the model runs

4:51locally means that you can keep your data to yourself and this isn't just about kind of the paranoia of sharing your personal data which is where a lot of the kind of um rhetoric is today around data privacy and and these small models it's actually more about the fact that you're liberated to do whatever you want with the data and you don't have to

5:08worry about security controls and privacy and getting approval to use these models um you can kind of have the freedom to just experiment locally um and the third and most interesting one is just the cost structure around these models um they're not free to run like they still take compute you still have to buy your computer um but one section of the small

5:29data manifesto that I really loved was the part that talked about modern hardware catching up and and Jordan spoke about it this morning um that's even more true with these AI workloads um basically every hardware manufacturer on Earth is changing right now to accelerate AI workloads and within a year Intel's um supposedly it says we're going to have 100 million AI computers

5:51uh out there on the market um Apple huge percentage of Apple Silicon is something like a third is dedicated to neural processing um which is incredible it's a huge chunk of of their the silicon that they produce in every MacBook um and then what's more interesting than the fact that um you know we have all this hardware that make these models

6:13incredibly fast locally um are just the hidden cost that we don't really talk that much about but you really face when you you're trying to adopt AI models um there's two that are really common and they were honestly shared in the data world too and it's why a lot of the most relied upon uh software and databases

6:29and by no means am I expert with with data and small data even um was the fact that open source software is just removes a lot of the cost hidden costs right like if you want to switch Cloud providers if you want to upgrade your machine um if you want to change the application um there's a lot of cases

6:46where relying on something that's openly available just cuts a ton of pain time and risk and that's very true with open models as well and so whether you're thinking about I'm not sure where this model is going to run yet I want to run it in one place locally also want to deliver it to a customer environment um

7:01you don't have to worry about the kind of the the hidden cost from switching around or hey maybe I have a customer that has a unique environment that wants to deploy differently um you don't have to think about these kind of really hidden costs that aren't really coming with the price tag of the model you don't really pay per per token for that

7:19um and so a lot of this is kind of a repeat of the benefits we get from small data um it translates kind of one to one to these small AI models quite nicely in the last one that's kind of an interesting um development that wasn't totally expected is just how many of these small models are available it's

7:37it's really incredible for you know um the cloud scale AI models you might have a selection you know you can count them on one hand from most companies how many you can buy um or use or try um but there are already thousands of small models available of different specialty sizes um almost every trillion dollar company in the world's open sourced a

7:57model already whether it's Apple or you have the Llama models from Meta and Gemma from Google and Phi from Microsoft Research um each of these has already been fine-tuned and you know for example Nvidia's distilled the Llama models into a whole new type of model so there's been this incredible development of models and a whole ecosystem forming

8:16which you just will not get through um you know what ultimately the cloud-scale models um it's kind of one-to-one with a lot of like the extensions you might see for like Postgres which make these models extremely versatile and customizable for your use case um every company knows their customers best they ultimately want to customize these

8:35models for that use case um open models by far are the best way to do that okay done preaching about open models I think um but the most interesting um is what are people doing with them uh before that though I'd love to show a really quick demo of just what they look like for those who aren't familiar um there

8:51are quite a few ways to run these open models um if you're really familiar kind of with machine learning and um kind of the Python environments there's a ton of tooling around that um Ollama is built for everyday developers that may not be comfortable with machine learning tooling so I'll give a quick demo of what Ollama looks like and so in this case

9:09let's run the first one that that was on that list Llama 3.1 um and this will load it directly here on my Mac um and I can talk with it so in this case I'll say you know say hi and you can see it answers quite quickly um as I mentioned the smaller the model this is an 8B

9:24model um if we try to run a 2B model for example like Gemma 2B um you can see that even by cutting Maybe by a third the parameter count we get quite a speed up in um in output and so you're getting speeds that are on par if not faster than what you would get with a uh Cloud scale AI provider all

9:46running on your laptop um they're not just good for chatting either right so they're not only kind of an alternative to uh ChatGPT in fact that's not really where they shine um where they're really powerful is when you make them part of your application um so I've got a really simple Python script here um that will

10:03take the Ollama Python package and just chat with it um for those who have built stuff on OpenAI this is quite familiar um and so I'll ask Llama 3.1 hey what's DuckDB and you know keep the answer to two sentences um and I'll just run that and um within a few lines of code you can take these small models um that are

10:21running locally on your Mac or even remotely on a server you might have um a single node server and um you can run these locally so so in that case uh uh

10:34oh let's do that one more

10:41time I'm gonna just restart Ollama really

10:47quick okay so now we get an output and it's really fast and that kind of speaks to the latency um uh point that we were thinking about earlier which is because it's running locally you're only really Bound by the compute you have um the roundtrip time for the network is zero effectively um and these are just two of

11:07the models Llama 3.1 and Gemma 2 2B um there's a ton of other models that are available on ollama.com you can also find them on other model um kind of hubs or repositories like Hugging Face for example um or Kaggle okay so that's how you get up and running with the small model um and you know how you can

11:26integrate it with your application in a few lines of code um that's just getting your feet wet um but you know most usage

11:34of these small models we're seeing aren't just really talking with the model in fact because the models are so small um they're not great for being a replacement to say Google um whereas the really large Cloud models are so big where they actually have quite a bit of factual information um um at the size of them they're able to produce quite true

11:56factual information small models that isn't really their strength instead small models work really well when they're combined with existing factual data because they're small they tend to hallucinate um uh which means that they'll kind of almost try to guess the data or approximate it um but the good news is that when you combine it with small data you can kind of have the best

12:19of both worlds where you get fast and local models but you also um get factual data that you already have locally um and so how does small data meet small models um the most kind of common approach we see for this um is this pretty complicated uh term called retrieval augmented generation or RAG um which ultimately is a pretty simple idea which

12:43is instead of just giving a model a simple prompt um augment that prompt with some real data that comes from an existing data source locally and so at a high level what this looks like is you know what we just showed is kind of the left-hand side of this which is hey I give the model a prompt and then it gives me

13:00back a response and by augmenting the model with data um you're kind of building up this vector store um this this kind of separate data store of snippets of data that the model um can use at runtime and so there's some pre-processing work required a little bit and there's some incredible tools to use around this such as LlamaIndex or

13:18LangChain I'll give a quick demo of that right after this um but effectively what you can do is compensate for the fact that these models may not know about factual data in the world they may and especially even the cloud ones won't know about your data so you can provide this data to the model at runtime and then you get

13:37this really great experience where you get the benefits of of a model where it's speaking a natural language it's able to reason about the data um but it also has real factual data um that it can use so I'll give you a quick look of what that looks like and so switching back to My Demo here um in my kind of

13:55demo bucket directory here um we have some duck facts and so um ton of cool facts in here like hey what's the fastest duck ever recorded um you know uh are City ducks different than country Ducks um and so let's see how we can um talk to this data effectively and so um there's a lot of code in here um this is

14:14a LlamaIndex example but the most important ones is that um we're taking the Gemma 2 2B model um and then we're taking this facts.txt file that I just showed and we're kind of creating this vector store of all these facts that the model can use at runtime and and then by connecting the vector store of data to

14:32the model Gemma 2 2B um we can query the model and then talk to it and so if we go over to um if we run this RAG example you can see here it's loading the facts file and then splitting it into 23 different chunks of data and then now we can talk to it so you know how fast

14:49do ducks fly you can see here that it kind of went and grabbed that 100 miles per hour figure and kind of brought it back and say um do ducks dive like there was a

15:01and yes ducks can dive okay well that's not super helpful um but we can say

15:07you know how high can ducks fly I found this fact really cool ducks can fly up to 21,000 feet which is pretty much like a commercial airliner um so it's pretty interesting fact um as you can see here you kind of get this really the best of both worlds a small model snappy responses but you also get

15:26um real factual data that the model can can use at run time so that's a quick example of retrieval augmented generation and kind of the what we're seeing as today the most common use case with small models um next I want to talk about kind of the new wave of of applications that are being built with small models um and best word I have for

15:44this is small agents and agents everything's an agent these days it's a really popular term um but the core idea behind this is some of that stuff I just showed there's quite a bit of Plumbing that needed to be set up to connect the data to the model and the core idea here is well what if the uh model itself

16:02could decide when to go get data and how to go get it that way you don't have to build all this plumbing and so this is where tool calling comes in and it's a really new feature of small models it was launched in the big cloud models about a year ago um but only in the last few months have

16:16we seen the foundation kind of frontier small models support tool calling and so the idea here is well instead of like having that plumbing can the LLM just decide if it needs to go fetch some data from a database and then use that to answer a question I'll give a quick demo of what that looks like as

16:35well so switching back to my demo here I have a really small DuckDB database here um called ducks.

16:41duckdb and um I'm just going to grab some some uh records from that so I've got a database of ducks um and I'm going to

16:50limit it to 10 ducks okay so here we've got some ducks got their first name last name the color their feathers great um and so how am I supposed to get the model to use this data so the next uh example I'll show you is a tool calling example and again there's a little bit of plumbing here but it's generally kind

17:06of a set once and forget um what we're going to do is connect to our DuckDB database and we're going to create a tool using LangChain called uh the query tool and all that does is take um the query uh that you want it uh that the model decides to write and it executes it and returns the model the

17:24response so it's extremely simple and then what we're going to do is tell the model you're provided this DuckDB schema we'll give it the schema to help it um and say here's a query we need help with um you know we don't even tell it to generate queries or anything just answer the question and then what we'll do is a

17:40little bit of code to make sure that if the model needs to run a function we'll do it on their behalf the model isn't kind of sentient it can't go and just start running stuff on my computer um but it can elect to run one function at least great so let's go ahead and run that now um and what that'll do is run uh the

17:57the uh the new model um that came out last week called Qwen 2.5 which is a new kind of frontier open source model by Alibaba um and a section of the models uh a cross-section have a quite permissive license as well so they're really easy to get up and running and um kind of don't have much to lose but

18:14what's cool about these models is they're both small and really good at tool calling what's even more interesting about this model Qwen 2.5 Coder is that it's really good at code and so let's say you know what color is Marty McFly and so we'll prompt that

18:39oops okay so um here we've got a uh and

18:44I will preface that tool calling is a pretty new feature of these models so there's a little bit of work required to get them to really click but once they do they they tend to do quite reliably and so here we have for example the query that the uh model decided to write which was um hey go get the select the uh color

19:02where the first name is Marty and the last name is McFly so it's able to split the name into two understand that we're fetching the color column um and then it's able to fetch a record we can ask how many ducks are yellow and here you can see that it went and did a count an aggre aggregation

19:18query to go and figure out how many ducks are yellow so it's writing all these queries and we're just executing on on its behalf and it's able to go fetch this data and then once it fetches the data it's able to it to interpret the response in the context of the original prompt and so here it got the

19:33number eight back it combined the two and it said okay well here's the prompt here's the output from the tool now we can answer the final response which is there are eight ducks in the yellow ducks in the database and this is just the start reading data is only the beginning these small models could also write the code to edit

19:49data and so how do you get started so this is just some examples some coding examples of what you can do but I want to talk a tiny bit to wrap things up about how people are using these today the first use case is really and this the one we see the most common is don't go start with something that's customer

20:06facing where a customer needs to talk to a model that's kind of what people jump to but in reality what we're seeing is these internal tooling this back office stuff that's boring not really customer uh facing um and it's stuff you don't really want to spend that much time on anyways within your team and so things like IT help desk or boring security

20:23questionnaires or data engineering and Reporting um and not the fun parts of this but the parts where you're actually just doing kind of regular requests internally for example versus actually going and innovating on how you're querying data and storing it um and lastly the most kind of common one we're seeing by far is just engineering teams using it to become more productive

20:42internally so they can focus on new features and not on managing issues or code review um small models also it's not a choice of whether to use small or big they they actually work really well together and this kind of is similar to the hot and kind of cold data analogy where if you really need a big model you

20:58can resort to that if you have to but for a lot of queries you can just use small models and they actually work really well in tandem and this is already what Apple and Microsoft Copilot are doing with their own um small and big model stacks and most importantly it's never been a better time to start small um open source

21:14models are rapidly catching up to these cloud-scale models um this is kind of a hard-to-read graph but as you can see the lines are converging and while a lot of the best open source models are very big as well um the small models tend to be growing at the same speed in terms of performance and benchmark results and so

21:28it's only a matter of time before these small models aren't just small but really mighty as well thank you so much for having me I'm so excited to see what everyone builds with small models um here are some of the tools that were used in the demos um as well as some information uh about myself and Ollama um

21:44it's never been a better time to start with small models I'm just so excited to see what everyone builds thank you so much [Applause] [Music]

FAQs

What are small AI models and why should you run them locally?

Small AI models have 0.5 to 70 billion parameters (compared to hundreds of billions or trillions for cloud models like ChatGPT), are only a few gigabytes, and run on ordinary hardware including laptops and phones. Running locally has three main advantages: faster inference, because there are no network round trips and fewer parameters mean less compute per token; data privacy, since nothing leaves your machine; and lower cost, since you avoid per-token API pricing and modern hardware is increasingly built for AI workloads (Apple Silicon reportedly dedicates roughly a third of its chip area to neural processing).

How do you combine small AI models with DuckDB for better results?

Small models tend to hallucinate when asked factual questions, but they work well when combined with real data. Using tool calling, a small model like Qwen 2.5 Coder can write SQL queries against a DuckDB database to answer questions. The model receives the database schema, decides what query to write, and requests its execution; the application runs the query on the model's behalf, and the model then interprets the results and generates a natural language response. This pairs the reasoning capabilities of AI with the factual accuracy of your local data.

What is Ollama and how do you get started with local AI models?

Ollama is an open-source tool that makes it easy to run large language models locally on Mac, Windows, or Linux. You install it, then run a model like Llama 3.1 or Gemma 2B with a single command. For integration into applications, the Ollama Python package provides an API similar to OpenAI's, requiring just a few lines of code to chat with models programmatically. Thousands of open-source models are available, including fine-tuned variants from Meta, Google, Microsoft, Apple, and Nvidia.

What are the best use cases for small AI models in production?

The most common production use cases are internal tooling rather than customer-facing applications: things like IT help desks, security questionnaires, data engineering reports, and engineering productivity (code review, issue management). Small and large models also work well together in a tiered approach, similar to hot and cold data. Use small local models for most queries and fall back to cloud models for complex tasks. This is already how Apple Intelligence and Microsoft Copilot architect their AI stacks.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

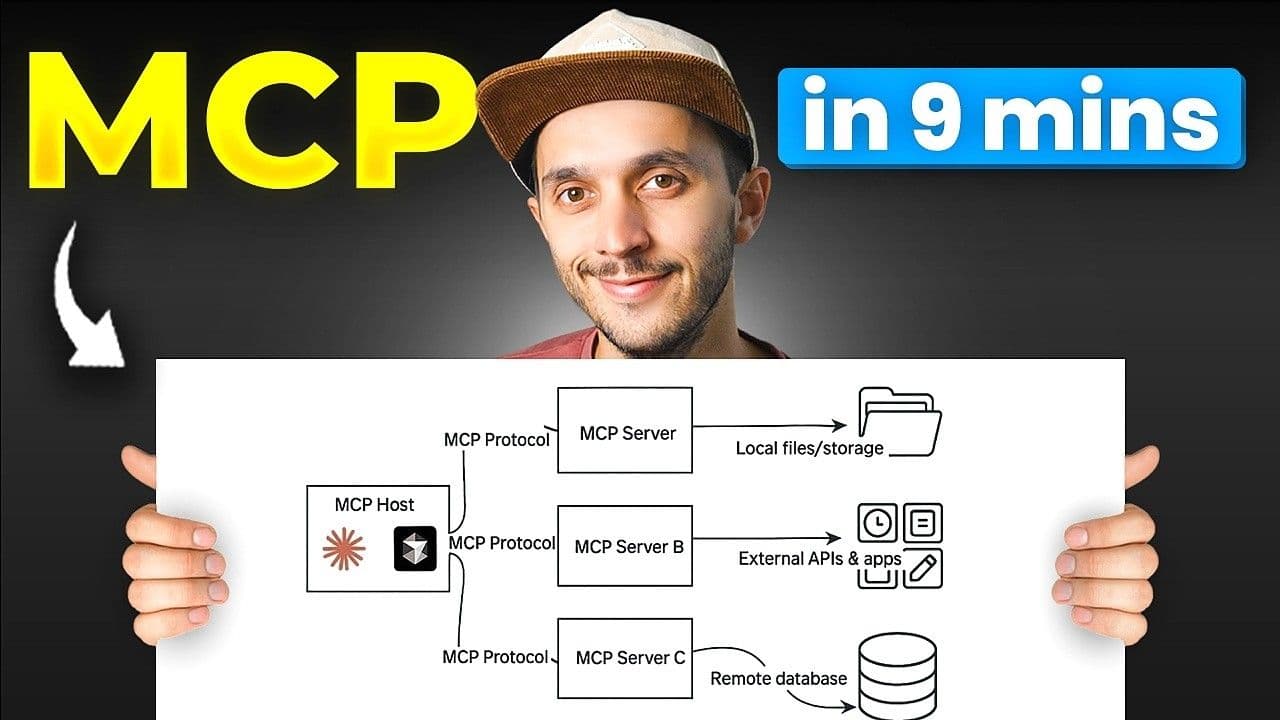

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial