From MySQL to Modern Analytics: A Data Platform Transformation Story

Gardyn, the innovative indoor hydroponic gardening company, faced a critical challenge as they scaled their operations. With smart devices generating vast amounts of sensor data, computer vision outputs, and customer interaction metrics, their data infrastructure needed a complete overhaul to keep pace with business growth.

The Starting Point: Executives Running Production Queries

When Rob joined Gardyn three years ago as their first full-time data scientist, the data landscape was minimal. Executives were running queries directly against the production MySQL database, creating risks for system performance and customer experience. The immediate priority was establishing basic analytics capabilities while separating analytical workloads from production systems.

Building the Initial Infrastructure

Rob's first steps involved creating a MySQL replica for analytics and deploying a Kubernetes cluster to run Apache Airflow for orchestration. This homegrown solution included:

- Custom Python scripts for data ingestion

- Raw SQL transformations managed through Airflow

- Jupyter notebooks for ad hoc analysis

- Plotly Dash applications for dashboards

While this approach initially worked, it quickly became unsustainable. As data volume grew and transformation complexity increased, the daily pipeline runtime ballooned from one hour to more than 24 hours, so each run overlapped with the next day's and a single failure could push results back by another day.

The Modern Data Stack Migration

Facing these scaling limitations, Gardyn embarked on a comprehensive platform modernization. The new architecture centered around several key components:

Data Warehouse: MotherDuck

The migration from MySQL to MotherDuck delivered immediate performance improvements. Pipeline runtime dropped from 24+ hours to just 10 minutes, enabling the team to build date-spined models and perform complex time series analysis that was previously impossible.
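To make "date-spined" concrete, here is a minimal sketch in plain Python of what such a model computes: one row per device per day, with the last known state carried forward. The device IDs, states, and function names are hypothetical and for illustration only; Gardyn's actual models are written as SQL in dbt and are not shown in the source.

```python
from datetime import date, timedelta


def date_spine(start: date, end: date) -> list[date]:
    """Return every calendar day from start to end, inclusive."""
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


def spine_device_states(events, start, end):
    """Expand state-change events into one row per (device, day).

    events: iterable of (device_id, day, state) change records.
    Days before a device's first event get a state of None.
    """
    by_device = {}
    for device_id, day, state in sorted(events, key=lambda e: (e[0], e[1])):
        by_device.setdefault(device_id, []).append((day, state))

    rows = []
    for device_id, changes in by_device.items():
        current = None
        idx = 0
        for day in date_spine(start, end):
            # Apply every change that happened on or before this day.
            while idx < len(changes) and changes[idx][0] <= day:
                current = changes[idx][1]
                idx += 1
            rows.append((device_id, day, current))
    return rows


# Hypothetical sample data: three state changes across two devices.
events = [
    ("dev-1", date(2024, 1, 1), "online"),
    ("dev-1", date(2024, 1, 3), "offline"),
    ("dev-2", date(2024, 1, 2), "online"),
]
rows = spine_device_states(events, date(2024, 1, 1), date(2024, 1, 4))
for row in rows:
    print(row)
```

The output has devices × days rows regardless of how few changes occurred, which is why this kind of model grows quickly with fleet size and history length.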

Transformation Layer: dbt

Moving from raw SQL to dbt eliminated the need to manually manage dependencies and made the transformation logic more maintainable and scalable.
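The idea behind that dependency handling can be sketched in a few lines of Python: if each model names its upstream models with a `ref(...)` call, the run order can be derived from the SQL itself rather than maintained by hand. This is an illustration of the concept, not dbt's actual implementation, and the model names and SQL below are hypothetical.

```python
import re
from graphlib import TopologicalSorter

# Hypothetical models, for illustration only. Upstream models are
# referenced with dbt-style Jinja: {{ ref('model_name') }}.
MODELS = {
    "stg_devices": "select * from raw.devices",
    "stg_memberships": "select * from raw.memberships",
    "device_daily": "select * from {{ ref('stg_devices') }}",
    "member_devices": (
        "select * from {{ ref('device_daily') }} d "
        "join {{ ref('stg_memberships') }} m using (customer_id)"
    ),
}

REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def build_order(models: dict[str, str]) -> list[str]:
    """Derive a valid run order from the ref() calls in each model."""
    # Map each model to the set of models it depends on.
    graph = {name: set(REF_PATTERN.findall(sql)) for name, sql in models.items()}
    # static_order() yields dependencies before their dependents.
    return list(TopologicalSorter(graph).static_order())


order = build_order(MODELS)
print(order)
```

Adding a new model only requires writing its SQL; its position in the run order follows from the references it contains.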

Orchestration: Dagster

The switch from Airflow to Dagster was driven by seamless dbt integration. Dagster's asset-based approach simplified dependency management and provided better visibility into the data pipeline.

Analytics Tools: Hex and Hashboard

These platforms replaced the self-hosted Jupyter notebooks and Dash applications, providing:

- Hex for Python-based analysis and machine learning workflows

- Hashboard for self-service BI with a semantic layer that enables non-technical users to explore data safely
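As a rough illustration of how a semantic layer provides guardrails, the sketch below defines a model once (its attributes and its approved aggregations) and lets users request measures by name instead of writing SQL. This is not Hashboard's configuration format or API; the class, table, and column names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SemanticModel:
    """A table plus the named attributes and measures users may query."""

    table: str
    attributes: dict[str, str] = field(default_factory=dict)  # name -> column expression
    measures: dict[str, str] = field(default_factory=dict)  # name -> aggregate expression

    def query(self, measures, group_by=()):
        """Build SQL from named measures and attributes only."""
        unknown = [m for m in measures if m not in self.measures]
        unknown += [a for a in group_by if a not in self.attributes]
        if unknown:
            # Guardrail: anything not defined in the model is rejected.
            raise KeyError(f"not defined in the semantic model: {unknown}")
        select = [f"{self.attributes[a]} AS {a}" for a in group_by]
        select += [f"{self.measures[m]} AS {m}" for m in measures]
        sql = f"SELECT {', '.join(select)} FROM {self.table}"
        if group_by:
            sql += " GROUP BY " + ", ".join(self.attributes[a] for a in group_by)
        return sql


# Hypothetical membership model, defined once by the data team.
memberships = SemanticModel(
    table="analytics.memberships",
    attributes={"plan": "plan_name"},
    measures={"members": "count(distinct customer_id)"},
)
sql = memberships.query(["members"], group_by=["plan"])
print(sql)
```

Because the aggregation logic lives in the model definition, every dashboard that asks for "members" computes it the same way.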

Unlocking Business Value Through Integrated Data

The new platform enabled Gardyn to finally integrate data from multiple sources:

- Device sensor readings

- Computer vision model outputs detecting plant health

- Customer app interactions

- Website engagement metrics

This unified view of the customer journey has powered new features, including a "care score" that gives customers insights into how well they're maintaining their devices. The computer vision system can now detect issues with plants and automatically notify users, as well as celebrate positive milestones like new flowers or ripe vegetables.

Key Lessons for Scaling Data Operations

Rob's advice for other data professionals facing similar challenges emphasizes thinking long-term even when pressured for quick answers. Building generalizable models and scalable infrastructure from the start, even if it takes extra time initially, pays dividends as the business grows.

The transformation also freed Rob to focus on actual data science work rather than infrastructure maintenance. With the Kubernetes cluster retired and managed services handling the heavy lifting, the team can now concentrate on delivering insights that improve the customer experience and drive business growth.

Looking Forward

Gardyn's data team is now focused on modeling customer journeys in greater detail and expanding their computer vision capabilities. The solid foundation built through this migration enables them to tackle increasingly sophisticated analytical challenges while maintaining the performance and reliability their growing business demands.

The journey from production database queries to a modern data platform illustrates how thoughtful architecture choices and the right tool selection can transform a company's ability to leverage its data assets effectively.

Transcript

0:00Let's um let's jump into it. Um I'm going to we're going to uh do a quick intro here and then we will hop into the really cool stuff that Rob is up to at Gardyn. Um so before that I just want to say hi. Uh I'm Jacob Matson. I work in DevRel here at MotherDuck. Uh I came

0:18here through a very fun path. Um uh I

0:22will just share briefly my career began in accounting and it turns out just counting and summing things over a time series is a very valuable skill for data and uh I've been able to apply that to larger and larger data sets with larger and larger calculators which has led me here to doing that same type of thing

0:41and helping others do that here uh at MotherDuck. So, I'm very excited uh to bring this to you all uh with Rob. And Rob, why don't you introduce yourself as well? Sure. Yeah, thank you so much for having me. Um my name is Rob. Uh I'm a data scientist at Gardyn. I joined there about three years ago. Um I started my

1:04career in neuroscience actually. So I did a PhD in neuroscience in uh in Amsterdam. I worked on that for about five years. uh after that or during that actually I realized that uh I didn't really want to stay in academia didn't want to be a professor uh so I decided you know I I I really liked uh working

1:22with data uh didn't necessarily like doing experiments so much like in the lab um so I actually picked up a job as

1:31a like a side job as a data scientist for a music company in in LA uh a sync

1:38licensing company I should say so I did some uh computer vision stuff for them and after that uh when I was done with my PhD I I decided I wanted to do data science full-time. So found a job as a data science consultant for a small consulting firm back in the Netherlands.

1:57Um had two clients before I I joined Gardyn. So one one of them was uh the Dutch tax authority and the other one was the Dutch central bank. So, so very different style companies from from Gardyn.

2:11Uh, and then, uh, I actually met the CTO of Gardyn at a concert uh, that my wife was playing. Uh, so that's a funny story. Uh, but I met him there. We started talking about work. Um, that's great. And, uh, yeah, they had a they had a position for a data scientist, so I applied and, uh, here I am. That's

2:29really cool. That's a really cool story, Rob. Love to hear it. It's kind of funny how everyone everyone kind of snakes through their career to where they get to. Um it's it's often not a straightforward path. Um all right, cool. So, we're we're going to uh talk about kind of where things started with Gardyn and where they got to and all of

2:51the pieces in between that. And so, this is going to be a uh kind of loosely structured interview where Rob walks us through it, how they ended up with what they ended up with. um you know where things were and I'm really excited just to explore this because I think um at least for me you know this story really

3:09resonates as like this is kind of what it looks like to take something that is you know kind of raw and unformed and build the thing that actually can help you uh you know meet your business goals. So I'm I'm super super excited.

3:24Um before I turn this slide off, Rob, do you want to tell us about what Gardyn is?

3:30Yeah. Yeah. So um Gardyn is a company that makes indoor hydroponic uh gardening devices. So uh we sell a device which is really cool actually.

3:41Put it in your house. It's very small only two square ft and uh you can grow 30 plants in it uh produce or or flowers for decoration. Um and it's all man it's the smart garden. So it's managed by an app.

3:55Uh there's a pump in it, lights, cameras. Uh so we have computer vision that is watching your plants uh basically make sure uh they don't die.

4:05So essentially you just buy this device you put in your house put seeds in it that we provide and uh start growing.

4:10You don't have to have a green thumb and uh you'll save a lot of water. I have a lot of veggies. Um so I'm really excited about about this product. I think it's amazing to have this in my house. I used to kill my plants all the time. you know, the ones I've tried and grow in my

4:25house or my yard and now I can eat salads every day. So, it's great.

4:30Amazing. Amazing. And obviously, there's a lot of data uh associated with a product like that, right? Yes. Yeah, for sure. So I mean that's that's definitely the reason why I got excited about Gardyn as a company and why I wanted to join uh is because we have this this device with with sensors, cameras and it provides a very um let's say consistent

4:52growing environment for for the plants which we can uh get a lot of data from.

4:58So what makes this really cool is that we have people all over the country uh using our seeds to grow the same plants in the same you know consistent environment and we can get data on that.

5:08I think that's a gold mine uh that not a lot of other companies have something like that you know so that that's why I got really excited to you know get my hands on that data and work with it.

5:18That's super cool. Okay so let's let's jump into uh our set of questions here then. So um uh what where did things kind of start?

5:30So like what what uh what do you what do you kind of like what are you responsible for at Gardyn today?

5:38That's a big question. Um when I when I joined three years ago, I mean there was nothing. I was the first uh data like full-time data person that they hired. Yeah. So they had a contractor working uh once a month. He he would analyze some data for them. But for the most part, if anyone had a question, one of the executives would go

5:57into the production database uh and run queries in there. So it was kind of there wasn't much, let's say. So when I when I joined, one of the first things I did was uh create a copy of the MySQL at the time database that we were running.

6:12Um just just to separate analytics from from production systems because I was a little bit worried about the load impacting customers, you know. So um yeah that's where we were when I joined and uh uh the main thing I started looking at in the beginning was was just very basic stuff like membership membership data so we offer a subscription right can buy a

6:35subscription for uh you know for the service that we they don't have to but they can um and uh we just had to know like how many people are members are they renewing or not you know stuff like that very basic things yeah yeah and so I imagine like even like so so I was the environment you were walking into like

6:54CEO and the CFO of like prod credentials they're like running queries like okay yeah they had stored queries and they would run them in DB and clients like that but it was the same connection as you know it was just production it was only one thing okay so you're you're coming in there they they have a prod database that's

7:13running running the app you know doing dealing with things like membership um I assume like data collection for for like you know the actual devices is going somewhere else or is that also kind of running into the into the app? Um no so there are several other production systems that currently we pull from. So so back then it was really my focus was

7:33on that MySQL database the data that came from there later on we started connecting other sources. So we have a whole host of yeah like cloud services that we use. Okay to totally makes sense. Got it. So that but but ultimately okay so that's where we're that's where we're starting with um so so there's a there's a MySQL replica

7:53you know set up so that you can start kind of querying things and answering like the basic questions so that you guys can figure out where to focus. Um tell me a little bit about the progress from there to where you got to like you're like okay this is like breaking I need like I need to do something

8:07different. Yeah. Yeah. So I start I started by just running some queries on those on those tables in the replica.

8:15Um pretty quickly we had more complicated requests for data coming in. Uh so I started building additional tables on top of those like transformations essentially. Yeah.

8:25Throughout this whole process um I was discouraged from calling this a data warehouse uh to avoid the impression that I was building something big that had only long-term benefits. So everything was short-term like very specific use cases, very specific questions that need to be answered, specific dashboards that need to be made.

8:44um you know but even if you don't call it data warehouse when when you're answering these questions ultimately it becomes one anyway so we we were basically I I mean at some point I started running this in Kubernetes uh so I I deployed the Kubernetes cluster and I uh deployed Airflow within that Apache Airflow to orchestrate everything at that point I

9:06was just extending that MySQL replica with just additional MySQL tables that I would create uh using using Airflow just using raw SQL in there. Uh and that just kept on growing. At some point we had a pipeline of tables that were just you know depend on each other and uh you know at some point that just started

9:28taking forever. In the beginning we could run that in an hour and and produce all those tables once per day.

9:34But at the end when it started to you know break at the seams is you know when this pipeline started taking more than 24 hours. So it would overlap with the next day's run. Oh, sure. So you're like, "All right, I I guess I'll know that tomorrow." Or you'd have like a failure and like, "Okay, well, I will,

9:48you know, I guess I'll start I'll restart the job and hopefully tomorrow it will work." Yeah, sure. Oh man, that's brutal. I I think that's fair fairly common. I I spent a lot of time working on um SQL Server deployments, doing very similar things, and eventually the the pain is just so high. I never I never deployed my own uh

10:10Kubernetes cluster though. That's uh that's next level. That's pretty cool. It gave me a lot of flexibility, but also a lot of headache, I guess. Were you Were you running other stuff in there, too? Were you running like Jupyter?

10:22Okay. I I I started using it just because I wanted to run Airflow and yeah, that's the only way I knew how to how to deploy Airflow was in Kubernetes.

10:31So, yeah. Uh but then I had a Kubernetes cluster and then I was like, might as well run other stuff in there as well.

10:36So, I started running, yeah, Jupyter notebook for ad hoc stuff. Uhhuh. Uh-huh. Whenever I had to do more complicated analysis, make graphs and stuff like that, I would do it in a notebook, which I would run in there.

10:47And then uh if there was something more consistent and more permanent that we wanted to monitor like a dashboard, I would make that in uh in Plotly Dash and deploy it uh you know, NGINX in Kubernetes as well. In Kubernetes. Yeah.

11:00Of course. Of course. Kubernetes. Yeah. Yeah. All right. So, you're you're handroll you're kind of like handrolling your own your own data platform. I mean, that's pretty as just Rob, right? I mean that was pretty much just you kind of contributing to that or was there others also? No, it was just me. Nobody nobody else worked on there. There's some

11:16analysts who were uh they were full-time at Gardyn but not full-time analysts, you know, they would Yeah, sure. So those people would use the dashboards and use the output that generated. Yeah, absolutely. Okay, that makes a ton of sense to me. I'm definitely wow that is a lot uh lot to maintain, right? Because whenever you'd have an infrastructure

11:36issue, you're dealing with that instead of dealing with like delivering value, you know, on the analytics. Oh man, it's so tough. Yeah. So that's when that's definitely when uh uh I started feeling empowered to uh you know to ask for time to actually build a data warehouse and a more more sustainable scalable solution for for these things you know looking at

11:57uh buying versus building because the maintenance just started becoming like so much work and uh yeah that's when we started branching out a little bit and looking at the the next step in our tools. That makes that makes a ton of sense, right? You you build this thing, it's work, it's proving value, and now to get to the next level,

12:13it's like, okay, like you either need to hire a bunch more people or like rethink your approach. So, yeah, that makes sense. So, uh, as you kind of, you know, realized your what you had was had achieved its initial goal, but, you know, it was time to go to the next thing. What was your kind of overall

12:30philosophy in thinking about this stuff? Were you looking for a specific set of capabilities or was it kind of just like you know I just want to solve my problem that I have right now? Yeah. So yeah the latter. So definitely we wanted to make sure that this pipeline would keep running and we could keep building on it

12:49uh but then more sustainably. So not within 24 hours but within you know uh something more manageable. Um actually the first thing we did was uh we got rid of those you know Jupyter notebook and the and the Dash uh situation those were replaced first. So we we moved uh from those to Hex for the hex.tech for the um

13:14for the notebook stuff and Hashboard which back then was still called Glean uh for the for the BI. Yeah. Yeah. uh and and those guys actually the Hashboard people uh recommended me to look into MotherDuck. I was you know as a next step I was exploring how can I make my data warehouse faster because MySQL obviously wasn't a good solution for

13:35a data warehouse. I started looking through Snowflake, BigQuery and all the you know all the standard names but then they said maybe you should take a look at MotherDuck. Mhm. I I don't think they thought I was going to be like serious about that as an option because this was like really early in your guys's Yeah. Yeah. Yeah. journey, I

13:53think. Um Yeah. But yeah, we end up ended up going with MotherDuck because it was just it worked and it was really fast. So, yeah. Cool. No, that's that's really really interesting. Yeah. I mean, um so like how did you think about like fitting these pieces together, right?

14:11Obviously you had like your data ingestion which we haven't really talked about yet. Um and actually let's let's go into data ingestion. Let's just talk a little bit about that. Right. So you kind of mentioned earlier you have a bunch of other sources that are not just this MySQL database. So like what was the um you know what was the data flow?

14:29How are you thinking about um how are you thinking about getting data from wherever it was into where it needed to be? And like I want to hear a little bit more about that, you know, from a standpoint of hey, here's what we're doing in Airflow and then, you know, here's what here's what was working, here's what wasn't working. You know, it

14:47sounds like you um initial eventually landed at Dagster there. So, I'd kind of be curious to hear about that, too.

14:53Yeah. So, Airflow was working well for ingestion. Uh so, I I was just running custom Python code to to take a bunch of different sources and put them in directly in my MySQL. Okay. Um, as I started exploring MotherDuck, I I started doing several changes at the same time. So, I started uh I didn't want to maintain raw SQL anymore. Uh,

15:13because that was just too complicated managing dependencies. I wanted something, you know, more automated. I just wanted to use dbt essentially because that was like uh that was becoming much more popular very quickly and I realized it would solve a lot of the issues I was having. Um, so I moved to dbt and and MotherDuck at the same

15:32time. Uh, and then I thought, you know, h how do I deploy this? How do I orchestrate this dbt workflow? Um, and it was kind of tricky to figure out how to do that in Airflow, but then I I I knew about Dagster and I just I was kind of following like what was happening there. And uh at some point I saw this

15:51thing where you know they had some advertising about you know just take your dbt pipeline run this one command and it converts everything into a like a like a Dagster native um uh job you know

16:05that you can just run and it was sound it sounded like traditional marketing like just do this one thing it'll be super easy and I was very skeptical I was like might as well try it you know if it's just one line of code but it worked and to my surprise. These things never work, but this one time it worked.

16:22I just run this one line and everything was in Dagster and I could just run it. I was like, "This is amazing. I have to switch to Dagster right now." So, so I did and you know, I moved everything to Dagster from Air Airflow, all ingestion, all my transformation and uh yeah, that's that's kind of how that went.

16:40That's interesting. What was that process like kind of like of of changing from like tasks and jobs to like assets and graphs? Like what was that what was that like?

16:50That was um it was a lot of work, but it wasn't like it wasn't complicated. It was just a lot of work because I had to rewrite a lot of stuff. But because I went from MySQL to uh to DuckDB essentially, there was a lot of rewriting that I had to do anyway. Yeah. Yeah. Yeah. This is before

17:07ChatGPT, so I had to actually sure.

17:11So I was just rewriting all those queries, uh all those models that I had.

17:16But then the thing that made it easier was I didn't have to, you know, in Airflow you have to maintain this whole like dependency uh YAML configuration stuff, you know, like to make sure your models run in the in the correct order.

17:29I don't anymore because in dbt that's just uh because you use the references and the sources that just it knows it.

17:36So that made it that made it pretty easy um you know to to make the switch. Yeah, it just took some time. Yeah, that makes no that makes a lot of sense, right?

17:44because you do have like different um um uh SQL dialects, right? So, it's that you're already having to kind of like change change some of that nuance and the way some of that stuff works. Um okay. No, that's great. Um let's talk a little bit about um uh the MotherDuck piece of it. So, like what was

18:06the what did you find uh was hard about

18:11kind of going from MySQL to an analytical database like MotherDuck and like what did you find was easy? I'm just kind of curious kind of on your perspective there. What was hard? I I didn't think anything was particularly hard. I just No, there was a lot of stuff that was that made it easier.

18:28uh the thing that I mentioned before, but also I could just get rid of a lot of parts of my queries because I had a lot of stuff that would like create indexes or uh just my I had to do stuff

18:43to make my queries actually work like have them be efficient. Whereas in MotherDuck I could just or you know using DuckDB um I didn't have to think about it so much so I you know I took out all the uh all the index situation I didn't need that anymore right yeah and and the you know

19:02just the friendlier SQL was something I really enjoyed working with you know select star exclude Yeah that's great all the time now. Yeah me too me too.

19:14Yeah, stuff like that, you know, I I think it just made it more intuitive and and easier to work with in general.

19:20Uhuh. Uhuh. Yeah, that's a that's a that's really interesting. I mean, you know, I spent a bunch of time with SQL Server, so I I had a very similar experience, which is like, you know, uh as my as I actually get the data in the hands of my analysts, right, it's like, okay, now I got to think about all these

19:35indexes. Now I got to build them and maintain them, make sure my licensing is right. I don't know if you had that challenge on MySQL, but I definitely had that challenge on SQL Server. Um, uh, yeah. So, there's a lot of like pieces there that you just have to think about because, you know, it's designed for like this high transactional

19:52throughput and you're kind of using it, um, for these aggregates. Um, so yeah, definitely definitely interesting. Let's talk a little bit about kind of, you know, that the Hex process. Um, uh, so that was that was I I guess there's there's also kind of Hashboard in there too, but if you want to kind of talk about those two together and like what

20:10it looked like, it sounded like those were kind of already kind of ripped out as a separate step from your data warehouse migration because you had kind of Yeah. found them to be valuable.

20:17Yeah. Okay. Yeah, we switched to them uh probably about half year or maybe even a year before we started migrating to MotherDuck. So they were used with the MySQL back end for a long time. Yeah. Um and uh yeah like I said we used Hashboard for BI so I would I would create you know I I really enjoyed their

20:37semantic layer uh because uh I was the only person who was using SQL for data

20:45at the time. So I was really uh there was a lot of analysts who who wanted to like explore the data on their own like self-service but I I needed a way to make that easy for them and to provide some guard rails. So that's where the semantic layer really came in. So I I could define models in Hashboard uh with

21:05attributes and aggregate like ways to aggregate the data, ways to join the data to other models um that I could define in the back and then they could just use it and it would work and there would be documentation right there um you know as opposed to traditional BI tools where they'd have to use SQL and understand what's in the

21:22data warehouse. So that's that's why that's why we went with Hashboard to begin with and then I needed something to do just more like Python stuff like whenever I needed to do more complicated um analysis or machine learning I had to you know had to do that in a Jupyter notebook environment which which Hex provided for us and that grew into a

21:47whole new thing where you know now I'm building apps in there for people to explore data okay okay you know on their own like from many different sources that aren't even in the data warehouse. So that's been very useful for for more of that ad hoc stuff.

22:01Yeah, that um that makes that makes a lot of sense. Um I think uh so so are you did you I guess my my my question here is how much of this is like integrated into kind of like the Dagster asset graph from like an end-to-end perspective? Is it pretty much everything these days?

22:24um everything that's in the data warehouse. So all data we ingest uh and then transform. So we ingest our data from multiple different sources into a data lake where it's just stored in in US file storage. Yeah. Dagster does that through mostly and then we have some Fivetran running in the background but it's mostly Dagster. Sure. Sure. Um uh

22:45so so that's how the data gets in and then from there either using Dagster you know Python scripts or using dbt models we get it from the data lake into MotherDuck there gets transformed and then it gets exposed um that that's all Dagster. Yeah. Okay.

23:00That's very cool. Yeah. I think one one thing that's kind of really cool and one of my favorite things that you can kind of do is is what just exactly what you called out which is um you know you can drop stuff into file storage and then pick it up with dbt because DuckDB and MotherDuck can just read from from S3 or

23:16GCS or whatever you're using which is just awesome like I don't have to like think about it my data engineers can just like get that data in there and then I can just you know take it from there and handle my models in a way that's like very um uh uh just kind of like easy and and nice to handle. Um,

23:33you know, they don't have to think about the schema. I can handle the schema, right? It's just data. They're just putting files in there for me to consume, which is good and bad. But, uh, um, so like uh h uh let's bring it like kind of all together here. Like how did you kind of make sure that these components like

23:53tackling ingestion, warehouse, analytics like would integrate and like fit well together or was it just kind of like organically trying stuff or were you kind of from the beginning like okay these things need to work together really nicely?

24:06When I did that migration I had to think I thought about it for a while before I started building it and there was some back and forth with uh with the CTO in particular and and some other people were involved in the decision. So I really uh I didn't just look at MotherDuck liked it and and go with it. I

24:21really did a thorough comparison to other you know other solutions other alternatives. Yeah. Sure. Sure. Same with Dagster. Uh same with dbt. You know

24:30I I I had to make sure it all fit. I mean especially the only reason we we ended up going with MotherDuck or the only reason we could is because Hex and Hashboard already supported it. It was already compatible. And if it wouldn't have been, I don't think we would have gone with to begin with. Yeah, totally.

24:49Yeah, that makes sense. Yeah, like you're right. It's hard like this this is a value chain problem, right? Like all these pieces need to fit together and then maybe you can get good analytics out of the end, right? Um yeah. All right. Uh a personal question. How did it feel to turn off the Kubernetes cluster? Did that feel

25:08good? That was a weight off my shoulders. I think a lot of people have have thought about that and never been able to accomplish it. So, uh, congratulations on being able to get to the point where you stood it up and then you were able to turn it off and, um, that is a huge huge accomplishment. Um,

25:29so props on that. Uh, okay. So, now you kind of have this stack in place. Let's talk a little bit about like um, you know, what you saw in terms of of benefits. So it sounds like one of the big things was just like, hey, now that the jobs didn't take 24 hours anymore, you were able to like actually kind of,

25:47you know, fix things in real time. Can you talk a little about that? Yeah, there was just a much bigger focus on uh what we could do with the data instead of just babysitting pipelines and making sure, you know, that get to the end of it. Um, so so one thing we were working on at the time uh was we were moving

26:04from like current state models to time series models. So, so for example, uh we had a lot of data on like all our devices but like their their current state and we just keep changing over time you know but we wanted to look at how they change how you know historically we wanted to look at devices and what they were doing a month

26:22ago. Um so we started building we wanted to build date-spined models and that was just not possible with the amount of devices we had and the amount of days in our history uh that just did not compute in MySQL would crash all the time. I had to go in and restart the server and stuff like that. Uh so we we tried doing

26:42month-spined models in in MySQL. That's kind of the last thing we were working on back then. Um but in in MotherDuck, I mean that would run the whole pipeline ran within 10 minutes after we just moved, you know, from 24 hours. Uh so that was pretty big uh change and then we could just build on that very easily

27:03because I could easily add models and just compute, like even in development I could just run it on production data quickly and see the result. Uh so it just sped up my development cycle by a lot and uh we started getting a lot more data into the system that otherwise wouldn't have been possible. For example uh computer vision output and uh stuff

27:25people are doing in, you know, interactions in the app and uh interactions from the website, all that data is coming together now in our data warehouse. Um so that's, that really opens up, really allows us to deliver on that initial reason why I joined Gardyn. You know, I was saying earlier we had this gold mine of of information uh from

27:44our system and from the the app that people are are using to interact with it. Uh moving to MotherDuck and having this all centralized in one data warehouse where we can actually join it and just play with that data really allowed us to start delivering on um getting insights from this data that ultimately we want to uh improve the customer experience,

28:03right? We want to allow people to grow healthier plants more quickly uh you know better better tasting all that stuff. And now we can use the data that we have to actually give us insights uh in that regard. That's really cool. I mean that's like the ultimate goal of a lot of these a lot of these platform you

28:22know plays that that people go into right is like hey we we see that there's a problem over here but we have to get all this other stuff right before we can actually attack that problem. Um that's really cool. Um so it sounds like you spend a lot less time um thinking about reliability although you know obviously

28:39that's still something that you know you need to keep things running lights on doors open. Um, what else has kind of changed from like a day-to-day perspective in terms of like what you're working on? I have more time for doing actual data science and not just data engineering. That's that's definitely one of the big things. Yeah, I love

28:55that. I love that. That's a that's a very positive message for all of the temporary data engineers who have data science titles. There is another way.

29:05Yeah. Yeah, for sure. That's super funny. Uh, yeah. I mean, I think that's really cool, too, right? It's like uh sometimes the only way out is through.

29:12I've definitely experienced that and you just got to do it and then and then you can do the thing that you love to do. Um it's really cool. Um so we talked a little bit about kind of you and how your interactions are. What about for like the the team using the data? What does that look like now? What's the

29:25biggest transformation you've seen kind of with the team? Yeah. Um it's faster and they just they just have access to more. So there were there were several things that really people in the team had been waiting for for a long time.

29:39For example, uh specifically stuff regarding the devices, how healthy plants are on those devices, uh data coming from the sensors and being able to join that with uh what customers are doing, uh their their engagement with the device that we know through the app. Uh you know, since I joined, people wanted to look at that data and it just wasn't possible to join

30:02it all together in a in a in a useful way and and now it is. So we we are answering much bigger questions now than we were 3 years ago. So I think that's that's definitely had the biggest impact. That's amazing. And like um is there have you been able to take this kind of this this u this data platform

30:21you've built? Have you have you been able to take that and you know flip it to actually uh improve the customer experience as well? Yeah, for sure. I mean, we we launched a feature recently uh uh that gives the customer insight into how, you know, uh how they're caring for their device. We call it the care score, care index. Um it it

30:43pulls a bunch of data from uh uh you know, like their interactions with the device and it tells them how they're doing. Uh so the data isn't computed in the data warehouse but you know we used insights from the data warehouse to get to develop this feature and to get to a point where um you know we we we made

31:03sure that it would actually be useful for the customer. Yeah that's really cool. I mean it's an ecosystem play right? You again you have to get all the parts right and then once all the parts are right then you can get everything else um um rolling right. Um okay

31:18so that's really cool. Um, is there anything that you that you that comes to mind as like a example or story of something that really illustrates kind of the overall business impact of the new stack? Something that um, you know, other than what you've talked about already, something other than we've talked about already. Um, or you can go more in depth if you if

31:40you feel if you feel like you know really jumping into the deep end, that's totally fine, too. Yeah. Yeah. I feel like it's it's all the stuff around computer vision and and getting that output from the models. You know, we we run those models in production and uh they there's a whole separate system that that um you know we we run the

31:58computer vision on the pictures that the cameras take of the user devices every day and uh they they provide feedback to the customer you know goes through a process of we raise what we call flags which trigger communications to the customer that they can go and respond to in some way in the app. uh just just having that having access to that data

32:17and and figuring out what works and what doesn't work, being able to join that with the data we get back from the device in different ways. Um yeah that's that's really been the the biggest the biggest thing I think, you know, so that ties back to all everything I talked about. So the for example the the date-

32:33spined models play a role into this because that's how we analyze that data right now. That's where we get all this data together. So those models have just been getting wider and wider uh with with data from different sources and really allows us to zoom in on what customers are doing and what works and what doesn't work. Um yeah that's really

32:53cool. I I mean the the computer vision part is really cool. Uh you know just being able to I I'm assuming those those models are like uh you can take a picture of a plant and kind of like assess health and like if there's things that are missing in the environment or things like that. Is that is that right?

33:07Yeah, it runs uh it runs in the back end so it's not triggered by the customer.

33:10So our our models look at every device um and there's there's a bunch of different models that uh you know detect things uh and then trigger notifications for the customer. So, it's if there's something wrong with your plants, then you'll get a notification uh that tells you what to do to fix it. Uh but also positive things like if you have a new

33:30flower or if you grew a pepper or something, it tells you that. Oh, that's really cool. Hey, we see a pepper though. We see that's really cool. Uh no, I mean that's that's awesome. I mean, I think ultimately a lot of stuff I think about is like how do we make actionable insights and it sounds like you guys have been able to accomplish that

33:46which is no small feat. That's that's incredible. Um, okay. So, let's uh let's hop in a little bit to, you know, talking about uh advice and, you know, making it a little bit actionable. Um, so as you kind of reflect on this, like what's the biggest piece of advice you'd have for other data folks who are kind of in that

34:08similar scaling challenge? I would say uh probably think about the long term. So, I think it's it's easy not to uh especially when from the business you're getting a lot of questions about, you know, short-term answers to short-term questions. Uh it's it's appealing to just go and write a query that you can run on the data that

34:31you have without taking a couple of extra steps and building some models uh that you can use later on as well. So, I think, you know, it almost goes against what's being asked of you. Like, it it'll take more time and you're not being asked to build uh a long-term scalable solution from scratch, you know, to to answer

34:54questions that haven't been asked yet. But I think if you want to be scalable and if you want to continue to grow with the business, that's something that you can start thinking about already and uh just put in a little bit extra work to um to create generalizable models that allow you to answer your questions. Uh you know, and that that doesn't just

35:12apply to writing queries, but it applies to everything you do like all the infrastructure decisions that you're making. Yeah, that that makes a lot of sense, right? like how do I how do I think about this thing as like a or your role as like a system that answers questions or that can you know find insights and it's not just about you

35:30know answering a a question as a specific single ask right um no that's a I love that I love that answer um so what's next what's next

35:41sorry what's next for data at Gardyn like what are you excited about now that you have this foundation um we're starting to model customer journeys in more detail uh that's that's Yeah, that's the main thing that we're doing right now. Uh more computer vision, uh integrating more of that, you know, what what happens in that side because it's a different there's a

35:59different people working on that. Configuring the models, training the models, getting more of that data into the the data lake. Uh yeah, just extending what what we have already.

36:09That's really cool. Yeah. Okay. So, there's there's some there's some really hardcore analysis on the horizon, it sounds like. Yes, for sure. Amazing.

36:18Amazing. All right. Um, thanks Rob. That's all that uh we had kind of canned here um why don't we see if we have any questions. I'm going to pull this up here and we'll go through them here um from the audience here. Okay, so first one here is what is the average ROI for MotherDuck plus Dagster data

36:37stack? Well, I guess that's an that's a question for me. Um okay, that's a really interesting question to think about in terms of like ROI and and these data stacks. Um, you know, I think the the biggest thing is making sure that the questions you're answering matter to the business, right? Um, if they don't matter, like ROI is not going to save

36:58you. And if they do matter, ROI doesn't matter. Like broadly, like, okay, are you doing it for like a fair price? Then if then it needs to be done, you should do it. And so I kind of think about like I find like ROI questions a little bit challenging to answer kind of because I think they're a little bit they little

37:16bit indexed too much on like is this necessary and important for us or um you know or is it just nice to have and necessary and important is where we want to make sure this stuff lands. I don't know if you have any kind of thoughts on that too Rob.

37:31Yeah I mean I think the question inherently is about is it worth it right? I think if you want to be a data driven company, you're going to have some data coming in from some places.

37:42You're going to have to analyze that in some way and uh I mean, yeah, you can do that in Snowflake or BigQuery and you're going to have worse ROI because it's more expensive. Sure. Sure. Absolutely.

37:53Or you can do it in a spreadsheet and uh then you're going to have a hard time and probably more headaches. Uh I think MotherDuck is really there in between like you know. Yeah, that's yeah that that that makes a lot of sense. Yeah. I mean, I think a lot of my from like my my experience is a lot of why I ended up

38:09going away from spreadsheets and into um into databases is I actually really cared about the time series data. I wanted I didn't want to know what my model said today and then just only know, you know, know that once a month.

38:20I wanted to be able to like know every day and I wanted to be able to see what that trend looks like and I want to be able to understand how different factors influence it, right? And so that was a big that was a big uh big thing for me personally early in my career and so yeah, super super stoked about that. Um,

38:34any other questions from the audience here? We don't have anything else in the Q&A box. Happy to take in the chat as

38:45well. Give it a few more minutes. By the way, for those of you that are in Seattle, feel free to visit the Gardyn showroom. That's the MotherDuck office.

38:54Drop us a note. We have a great one. Um,

38:58doing pretty well actually in the Seattle office. Um, we actually had to move it, Rob. It was getting too much sun in the room it was in. Oh no. Yeah.

39:07Yeah. It has its own lighting. So, you know, are you guys still competing within or between offices? I think so.

39:13Yeah. Yeah. Um yeah, there's there's a

39:17couple of them, isn't there? Yeah. Um okay, looks like there's no more questions. We'll wrap this up. Um thanks everybody for joining and uh we will share this out uh with everyone who registered uh at the end. And uh Rob, thank you so much for being a MotherDuck customer and talking about, you know, the great ecosystem that we're

39:35building here and um uh laying it out for everyone. Awesome. Yeah, thank you so much for letting me talk about data.

39:42Of course. All right, try later everybody. Thanks.

FAQs

How did Gardyn migrate from MySQL to MotherDuck for their data warehouse?

Gardyn's solo data scientist, Rob, ran a MySQL replica as a data warehouse with Airflow orchestrating raw SQL transformations on Kubernetes. When the pipeline started taking over 24 hours to run and overlapping with the next day's execution, he migrated to MotherDuck with dbt and Dagster. The entire pipeline dropped from 24+ hours to about 10 minutes, and he was able to shut down the self-managed Kubernetes cluster entirely.

Why did Gardyn choose MotherDuck over Snowflake or BigQuery?

Gardyn evaluated Snowflake, BigQuery, and other alternatives but chose MotherDuck because it was fast, simple, and already compatible with their existing tools (Hex for notebooks and Hashboard for BI). Key benefits included not needing to create indexes or worry about query optimization, DuckDB's friendly SQL features like SELECT * EXCLUDE, and dramatically faster query performance that allowed development iteration directly on production data.

How does Gardyn's modern data stack work with Dagster and dbt?

Gardyn ingests data from multiple sources (operational databases, cloud services, computer vision output) into an S3 data lake using Dagster Python scripts and some Fivetran connectors. From the data lake, dbt models transform the data into MotherDuck, with Dagster orchestrating the entire workflow. The migration from Airflow to Dagster was surprisingly easy. A single command converted the dbt pipeline into Dagster-native jobs, and dbt's reference system eliminated the need for manual dependency YAML configurations.
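To illustrate why a reference system removes the need for hand-written dependency configuration, here is a minimal Python sketch. It is not dbt's or Dagster's actual implementation: it is a toy that scans SQL text for `{{ ref('...') }}` calls and derives a run order from them. The model names and SQL are hypothetical, and `find_refs` and `run_order` are helper names invented for this example.

```python
import re
from graphlib import TopologicalSorter

# Matches {{ ref('model_name') }} with single or double quotes.
REF_PATTERN = re.compile(r"\{\{\s*ref\(\s*['\"]([^'\"]+)['\"]\s*\)\s*\}\}")


def find_refs(sql):
    """Return the set of model names this SQL text refers to."""
    return set(REF_PATTERN.findall(sql))


def run_order(models):
    """Order models so that each one runs after the models it references."""
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {name: find_refs(sql) for name, sql in models.items()}
    return list(TopologicalSorter(graph).static_order())


# Hypothetical models: two staging models feed a daily model,
# which in turn feeds a health model.
models = {
    "device_health": "select * from {{ ref('device_daily') }}",
    "device_daily": (
        "select d.device_id, r.reading_date "
        "from {{ ref('stg_devices') }} d "
        "join {{ ref('stg_sensor_readings') }} r using (device_id)"
    ),
    "stg_devices": "select * from raw.devices",
    "stg_sensor_readings": "select * from raw.sensor_readings",
}

order = run_order(models)
print(order)
```

The dependencies live in the SQL itself, so adding a model means writing one more query rather than editing a separate dependency file. The relative order of the two staging models is not fixed, since neither depends on the other.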

What business insights did Gardyn unlock after moving to MotherDuck?

With MySQL, Gardyn could not build date-spined time series models because the queries would crash the server. After migrating to MotherDuck, they could join device sensor data, computer vision output, app interactions, and customer engagement data in one warehouse. This let them analyze how customers interact with their smart garden devices over time, develop a customer-facing "care score" feature, and use computer vision insights to improve plant health recommendations. That was the original data science mission that had been blocked by infrastructure limitations.
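For readers unfamiliar with the term, a date-spined model has one row per entity per day, with the last known state carried forward. The following is a minimal Python sketch of that idea, not Gardyn's actual dbt model; the device IDs, states, and the `spine_device_states` helper are hypothetical.

```python
from datetime import date, timedelta


def date_spine(start, end):
    """Return every calendar day from start to end, inclusive."""
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


def spine_device_states(changes, devices, start, end):
    """Build one row per (device, day), carrying the last known state forward.

    `changes` maps (device_id, day) -> state recorded on that day.
    Days before a device's first recorded state get None.
    """
    rows = []
    for device in devices:
        last_state = None
        for day in date_spine(start, end):
            # Use the state recorded on this day if any, else keep the last one.
            last_state = changes.get((device, day), last_state)
            rows.append((device, day, last_state))
    return rows


# Hypothetical sparse change log for two devices.
changes = {
    ("dev-1", date(2024, 1, 1)): "online",
    ("dev-1", date(2024, 1, 3)): "offline",
    ("dev-2", date(2024, 1, 2)): "online",
}

rows = spine_device_states(
    changes, ["dev-1", "dev-2"], date(2024, 1, 1), date(2024, 1, 4)
)
for row in rows:
    print(row)
```

The output size is the number of devices multiplied by the number of days, regardless of how sparse the change log is. That growth is why this kind of model gets expensive as both the device count and the history grow.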

What advice does Gardyn's data scientist have for building a scalable data platform?

Rob recommends thinking long-term even when the business demands short-term answers. Instead of writing one-off queries for each request, invest a little extra time to build generalizable models that can answer future questions too. This applies to both SQL models and infrastructure decisions. Although it may seem to go against what is being asked of you, building reusable data models is what allows a single data person to scale their impact alongside the business.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

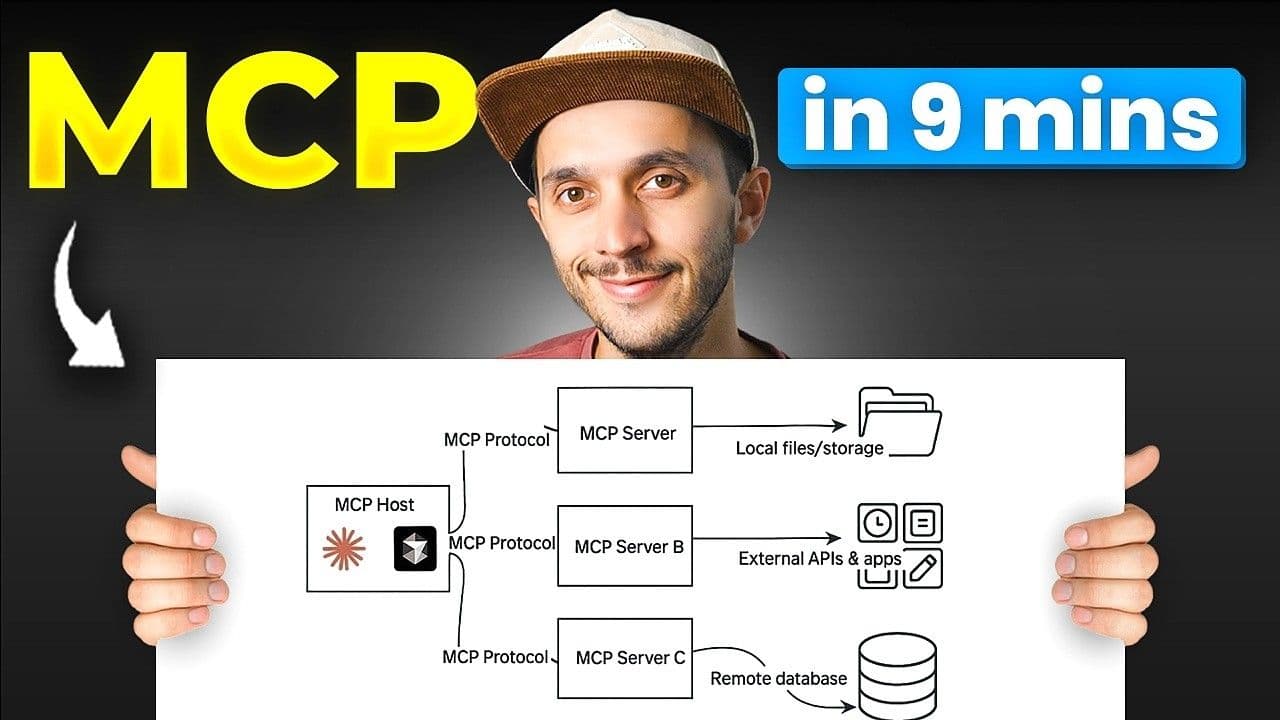

9:09

2026-02-13

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial