Why DuckDB is Revolutionizing Data Analytics

DuckDB has experienced explosive growth in popularity, with download statistics showing remarkable adoption rates, particularly in the Python ecosystem. This open-source analytical database is designed to handle everything from quick data exploration to complex data pipelines, and it's already trusted by multiple companies in production environments.

Understanding the Small and Medium Data Revolution

The tech industry has long operated under the assumption that analytics requires big data infrastructure. However, this paradigm is being challenged by three key insights:

-

Most workloads aren't big data: According to data from AWS Redshift and other cloud analytical databases, approximately 83% of users work with query sizes up to 1TB, and 94% work with data below 10TB.

-

Modern hardware is incredibly powerful: Today's single machines can handle up to 24TB of memory on AWS, making distributed systems unnecessary for most use cases.

-

Distributed systems are expensive: They require significant IO network traffic for coordination, and the development experience is considerably more complex.

DuckDB capitalizes on these realities by enabling users to work with large datasets on a single machine, whether locally on a laptop or in the cloud via a scale-up strategy.

How DuckDB Works: The In-Process Advantage

DuckDB is an open-source, in-process analytical database written in C++, designed as a single self-contained binary with all dependencies included. This architecture sets it apart from traditional databases.

Traditional Database Architecture

Databases typically fall into two categories:

-

OLTP (Online Transaction Processing): Databases like PostgreSQL and MySQL, optimized for handling transactions with small datasets. Query times typically range from 1-10 milliseconds.

-

OLAP (Online Analytical Processing): Databases like BigQuery and Snowflake, built for analytical queries processing large datasets. Query times can range from 100 milliseconds to several minutes.

Most traditional databases use a client-server architecture where the database runs as a separate process, and applications connect to it through SQL queries.

The In-Process Revolution

In-process databases run directly within the application process itself. While SQLite pioneered this approach for OLTP workloads, DuckDB introduces something new: an in-process OLAP database optimized for analytical workloads.

This design enables DuckDB to:

- Run on virtually any platform (laptops to cloud workflows)

- Integrate seamlessly with any programming language

- Execute in web browsers via WebAssembly

- Eliminate network overhead for local operations

Getting Started with DuckDB

Installation and Basic Usage

DuckDB can be installed through various methods:

- Direct binary download for CLI usage

- Package managers (Homebrew for macOS)

- Language-specific packages (Python, R, Java, etc.)

The CLI provides a powerful interface for data exploration:

Copy code

-- Simple query reading from S3

FROM 's3://bucket/path/to/file.parquet' LIMIT 5;

Key Features in Action

Friendly SQL Dialect: DuckDB extends standard SQL with productivity enhancements, such as the FROM-first syntax shown above.

Automatic File Format Detection: DuckDB automatically detects and handles various file formats including Parquet, CSV, JSON, Iceberg, and Delta Lake.

Extension System: DuckDB's functionality is modular through extensions. Core extensions (like HTTPFS for S3 access) are auto-loaded when needed, while community extensions can be installed manually.

Data Persistence and the DuckDB File Format

By default, DuckDB operates in-memory, but it offers powerful persistence options:

Creating and Managing Databases

Copy code

-- Attach or create a database

ATTACH 'mydatabase.ddb';

-- Create a table from a query

CREATE TABLE mytable AS SELECT * FROM source_data;

The DuckDB File Format

DuckDB's native file format (.ddb or .db) is:

- Self-contained (all tables and metadata in one file)

- ACID-compliant

- Highly compressed

- Optimized for analytical workloads

Exporting Data

DuckDB supports seamless data export:

Copy code

-- Export to CSV

COPY (SELECT * FROM mytable) TO 'output.csv';

Managing Secrets and Authentication

DuckDB includes a comprehensive secret management system for secure cloud access:

Copy code

-- Create temporary secret using AWS credential chain

CREATE SECRET (

TYPE S3,

PROVIDER credential_chain

);

This approach supports:

- AWS SSO authentication

- Temporary and persistent secrets

- Multiple cloud providers

- Secure credential storage

Scaling to the Cloud with MotherDuck

MotherDuck supercharges DuckDB by transforming it from a single-player to a multiplayer analytics experience. The integration is remarkably simple:

Connecting to MotherDuck

Copy code

-- Connect to MotherDuck with one command

ATTACH 'md:';

Authentication requires only a MotherDuck token, which can be set as an environment variable.

Key MotherDuck Features

Dual Execution: MotherDuck enables intelligent query execution, automatically determining whether to run computations locally or in the cloud based on data location and query requirements.

Database Sharing: Create and share cloud databases with simple commands:

Copy code

-- Create a share

CREATE SHARE myshare FROM mydatabase;

Performance Benefits: Leveraging cloud infrastructure provides:

- High-bandwidth connections to cloud storage

- Elimination of local network bottlenecks

- Seamless collaboration features

Cloud Storage Integration

MotherDuck dramatically improves performance when querying cloud storage. In benchmarks, queries that take 11 seconds locally can complete in just 2 seconds when leveraging MotherDuck's cloud infrastructure and network proximity to storage.

The Complete Analytics Toolkit

DuckDB and MotherDuck together provide:

- Simplicity: One-command installation and cloud connection

- Flexibility: Run anywhere from browsers to cloud environments

- Performance: Lightning-fast analytical queries on small to medium datasets

- Integration: Native support for numerous file formats and cloud providers

- Collaboration: Easy data sharing and team workflows

Whether you're analyzing data on your laptop, scaling computations in the cloud, or building production data pipelines, DuckDB offers a modern approach to analytics that challenges traditional big data assumptions while delivering exceptional performance and developer experience.

Transcript

0:00wgb has been skyrocketing in popularity lately with download stats going to the roof just look at this SCP from PPI and that's just from python usage and wait are you telling me a duck built this database well DB isn't just a fun name it's a powerful open source analytical database designed to handle everything from Quick data exploration to complex

0:26data pipelines and it's already trusted by multiple companies in production in this video we'll understand what makes darkb so unique we'll break down its Core Concepts show you why it's different from traditional databases and of course dive into practical examples with coat so that you also feel like you have the duck power in the palm of your

0:51hand and in the second part of this video we'll explore how you can move your dark DB workloads smoothly to the cloud with Mod which supercharges Dub's features so let's di in for intended so why dub in the past decade the tech word has often treated Big Data as the default for analytics the mantal is simple if your data is growing and is

1:15Big you scale out by using distributed systems with common Hardwares massive infrastructures and honestly uh complex tools to handle it but this assumption is increasingly being challenged for tree reasons first most workloads are in Big Data dgdb represents a counter narrative to this big data Story by focusing on small and medium data and that's the vast majority of real world

1:42use cases if you want some real numbers around what is Big Data the red shift team from adbs and other Cloud antical database reported this data for the common workload roughly we get 83% of users where the query size is up to 1 Tab and 94 4% of user below 10 terby and

2:02by the way this is a hard drive today that contains 4 tab so you get basically two of these and you cover most of companies's workloads so this led me to my second point the hardware you can get today on a single machine is powerful but it's also that our big data reference has kind of changed indeed

2:21back in the days we had to divide and conquer computes because workloads were too big for a single machine today on AWS you can get up to to 24 terab of memory and yes that's the largest machine but that's just to give you the big picture compared to what it used to be and finally the third point

2:40is that distribute system is expensive you have a lot of IO Network traffic to cordinate things and development experience is also much more painful so du DB can easily crunch data set in this range and the performance and simplicity of deck DB let you work with large data set on a single machine locally on your laptop or in the cloud via scaleup

3:03strategy tchb really Embraces a new paradigm in database architecture while delivering performance on par with traditional distributed database without the overhead it also has building integration with everything you need for data would it be reading from Json CSV par Delta Lake using itbs S3 Google cloud storage and much more it even has its own power format a self-contained

3:32efficient and acid compliant format that stores all tables and metadata in a single file with great compression so now you understand with ddb you have a complete analytics Powerhouse in a single tool so how does ddb work so ddb is an open source in process analytical database written in C++ designed as a single self contained binary with all

3:55dependencies included and that later on is often underrated because in the open source word you may get easily stuck in some spaghetti dependencies and issue with third party library but it's not the case here you get the full package so let's focus on the inprocess part and compare them to traditional databases so databases generally fall into two

4:18categories all oftp online transaction processing databases like postgress and myql these are optimized for handling transaction involving small dates or queries on a few rows and they are ideal for fast frequent operation and are often referred as the operational databases the backbone of your e-commerce your banking system or whatever application you have town of a query is typically between 1 to 10

4:48millisecond the second category is Ola online analytical processing these are databases like big query and snowflake that are built for antical queries they are great at processing large data sets Computing metrics across many columns and supporting complex analyz in query time can go here from 100 milliseconds to several minutes most traditional databases whether allp or all app use a

5:15client server architecture and in this setup the database run as a separate process and applications the clients connect to it typically through SQL queries to the server where the data is stored and computation take place in contrast inprocess database run directly within the application process itself a well-known example is SQL light allp in process database you simply include it

5:40as a library in your app and it handles transaction and queries without requiring a server so dgdb however is an inprocess olap database while sqlite is a well-known inprocess ATP database ddb introduced something new an inprocess allab database of optimiz for analytical workloads it's really kind of a new database and while it shared the Simplicity and portability of SQL light

6:08it goals and use case are different WB is designed specifically for analytics and can run on virtually any platform from laptops to cloud-based workflow so yeah Doug DB runs everywhere and that's due to its inprocess nature it can live in any programming language and exists in two different clients python are Bing Java whatever is your gem you name it

6:32you just add the WB package and you are ready to go another common way people run Deb without any language server is to the CLI you download it no matter your distribution Mac OS Linux and yes even windows and you are ready to Crunch data finally it's also worth noting like Doom ddb can really run anywhere even in

6:54the web browser to web assembly web assembly wasm runs high performance code typically written in C++ in browsers enabling apps like figma to work efficiently without native installs so it's commonly used to speed up web apps by running compile code alongside JavaScript so if you go to shell. dub.

7:15org you're going to get an example of tug DB running in the browser nothing is processed on any server because there isn't and you can shut down your Wi-Fi to check it out run a query and everything is going to work in your browser nothing is computed on the server side how cool is that and you know what else is cool liking this video

7:35and subscribing to the channel you know consider it as a gentleman's agreement if you got value from this video show some love or share it to your enemies if you didn't like it so DB extensions so the last important bit to understand about DB is its extension mechanism I mentioned earlier that it contains a lot of integration to do your data work and

7:57I like to think it as a s army knife and DB is designed with extension where each specific function like reading over par or from Excel yes you can also do that has its own specific extension some are core extensions supported by dgdb and some are because yes you can also write your own extension write and maintain by

8:20the community but and of talk let's jump into the real meat and understand the basics of ddb while finding some fun insights about hacker you know like how often do people talk about duck for this Endzone part we'll use the duck DB CLI but as a reminder you can use Doug DB with multiple programming language and it's just a library to install a popular

8:42usage of dougb is also with python and if that's your jam I prepare for you a shared collab notebook that you can click and follow along and don't worry no matter your client you are using what you are going to see is mostly SQL once you initialize the connection the rest of the code is mostly SQL I'm going to

9:02use the CLI which is great because it's easy to install no programming language to set up and it's pretty powerful to install the CLI if you are on Linux or Mac OS you can copy paste the on line installation command on the website run it to your favorite terminal and I would just download the binary and make it

9:21ready for you note that if you're on Mac OS you can also use the package manager om brew and don't worry if you are on under other operating system like Windows you can also either directly download the binary or use a package manager once your Doug vcl is installed you can just launch it by using dougb as

9:40you can see I've opened my editor which is here vs code and one workflow I like to use is basically open a SQL text file and just send the commands to the terminal that way I can get my file use all the features of my ID co-pilot auto complete and it's a really simple setup the only thing I need is basically just

10:03a shortcut command so for example let's run our first SQL query like this and as you can see I just sent this SQL line to the terminal the dgdb CLI and success on vs code if you go to your shortcuts you can use any key command to this command to send the text to the terminal and of

10:24course this kind of setup you can reproduce it in any editor or ID and by the way I made a full video on that topic if you're interesting to watch Link in the description all right let's do a real quer we're going to qu a parket file over a bucket on awss tree and for that I'm just using the from

10:43keyword and I just put a limit five and that's it I can see the content of my I new parket file with just one query so how easy and fast it is and that's the world philosophy of Doug DB to be easy to use so note that our ads S3 bucket is public here and we'll come back on the authentification methods

11:05later so a lot happen in this simple query so let's break it down into three points the first one is the from statement DB support from first statement and of course it supports standard sqls so this is the equivalent of doing select star from but DB has enrich it SQL to make it even easier to work with data that's a lot of easy so

11:28this one is just a neat example of this friendly SQL dialect to increase your productivity when writing SQL and I put a resource in the description if you want to have more example about this friendly SQL dialect all right the second point is the S3 URI scheme as I said earlier DB works with extensions and when you are creating a file over an

11:48N3 path it will detect it needs to autoload some extensions as we are not reading a local file but a file over an object story so here the https FS extension is needed which is part of the core extension maintained by the so it is installed an autoload for you in the background when we run this query and

12:09actually you can inspect what are the core extension available install and loaded so if I go over here and run from wgb extensions which is a table function I see I have a column which is loaded and installed and if I go over the httpfs I just told you about for quing over s stre you see this one is install

12:32uh loads is loaded and installed most of the time core extension are actually autoloaded for you but you might need it to install and load it manually for install if you want to use the special extension then you just use the keyword install I'm going to install the special extension and then I'm going to load it and now if I'm displaying again the

12:54table you see here it was it was installed but not loaded I can see the special extension is correctly loaded and installed another time you're going to need to install extension manually is the one provided by the community because yes you can also write your own extension so here for example if I go on the Google sheet on which enable you to

13:15read directly and write to a Google sheet you see I have to explicitly use the install keyword with the from the community not that with any open source project you install this at your own risk those are are not maintained by the dark DB team but that's roughly common sense all right coming back to our initial query which query over S3 we

13:36noted that is used from first statement it's loaded the https function behind the scene and a third point is that the format of the file is autodetected as well I haven't specified read parket function for example and there is a read parket function that I can use like this so if I run this one it's basically equivalent to the sa I have also more

13:58options on this read parket function so I can pass different parameters needed if I want to which it was not the case here so you can just forget it again Simplicity of course dougb supports multiple F format from gson CSV table format like Iceberg and Delta lak and again behind the scene is loaded and install the right extension for you

14:20finally it's worth noting that we've been working on N ss3 but WB Support also cing from Google Glass storage Cloud fair and so all right in one query we have understand that ddb has a friendly SQL syntax supercharging basic SQL it can read over s Tre or other object storage specific file without any external dependency finally he has an

14:43extension mechanism that most of the time will load and install internal dependency for you all right this is great but how do I puras the data can I just also get the ball data ready to query locally to avoid back and forth to S3 well of course ddb supports reading and writing to different file format like parking csbg and and others but it

15:05happens also that dgdb has its own file format so let's talk about it so ddb file format is an efficient acid compliant uh format that contains all tables and all beta data and I can store the result of my previous query into a table by simply doing create table iure

15:24new sample let's say and then has and my query that I just run above so now when I'm going to run this one and I can show all tables I'm going to see that I'm going to have the table ion news sample that I just created and I can CV this one again with just the from first

15:46statement and here I'm create the local table of this sample of data set but but if I exit the process I'm doing uh contrl D here of my terminal I'm not anymore in the DB process process going back again another dougb process show all tables it's gone and that's because of the nature of Doug DB of its in

16:06process everything is happening in memory and by default nothing is persisted on this so to persist the data I can create a database and a classic way to create a database file is to use the attach command so let's say I'm going to use my accur news stat and then

16:26I can use any extension file name would works but typically we use DB or ddb for

16:33ddb database so now if I do this you're going to see that because this file was not existing it will create a database file if the database exists dgdb will attach this database so that you can query all the tables and metadata that is in this database again one command to make it easy no matter if you create or

16:54attach an existing database this command is also similar in other clients if you use python that works as well note that when you use the attach command the default selected database is not the one you just attach you do have to use the use keyword with your database name uh to select this one so all the operation

17:14now is going to be by default on this database but you can also you know put all your query with the full name if I include it for example here database name that works also all right now let's recreate this table now if I do all show tables you see that I have a table in my database my acar new stats and a name of

17:33the table which is acar new simple if I exit the process run again run my attach commands I can see that my table is there this is not going to work because as I mention by default you need to use the use statement and you can carry back the table from your acur news stats database small information if you're

17:54using the CLI you can also when launching the process just specify the database you want to read so here my acur news table and therefore you're going to open a connection to this table and going to make directly available to query that works all right let's test a bit how fast is TB I'm going to create a

18:14larger table and this one is roughly 10 GB of data so the first time of course when you create the table it takes a bit of time depending on your internet connection so now I have load this larger table into the D be database and so let's do just a simple count here to check what site you can see I have H 42

18:36uh Millions Z so this data set basically contain as you can see on the file name uh data from acar news from 2016 up to January 2025 and let's do a query a bit more complex now where I'm going to extract uh the domain name to kind of like know which is the you know domain website the most shared on hacker and

18:59before reading that query I'm going to add a timer and for that I can add the timer just by timer on and now if I'm executing this query you're going to see that in less than a second I have the results over this data set and it Crees so fat that you don't have time to blame so let's attach actually another

19:18database let's call it I can use that and and on this one I'm going to create a table say top domains has and let me

19:28uh specify the full path of this one the database and table me all right so now I have another database with the stat uh containing the top domains and I'm going to run another query uh and this one is basically trying to see uh the dougb keyword so when people are talking about duck or dougb rather here um either in

19:51the title or in the text and I'm Computing over uh years and months and you can see that uh you know things have been uh growing so the data set is stopping uh beginning of January so you see we have now roughly 50 times of mention per month on acar news either in the title or in a comment right so one

20:11thing I can also do here um with uh we can persist again this table under our arker new stat so let's actually do that and let's call it D be menion has and like this I'm rerunning the query and you see it's going really fast roughly one so now I have two database basically I have my iar news uh stats contain

20:33basically the roll table and the iar new stats uh database which contains uh the computed table around the top domains and the um mention of the DB so you see the performance here is really fast because I store data in Duck DB and not only I have a persistance but performance is better and that's just the general approach for any database

20:55typically you have better performance if you use the native file format rather than an external file plus here important here I don't rely on the my internet connection my compute is Clos to my storage but of course if you want you can export those data sets directly as CSV or par so let's do that to a copy

21:16command so if I do copy and then my select queries so here let's say from I can use stats. tdb or let's say the top domains and then basically to the format I want and here I'm going to export it as a CSV and that's it actually simple as that so let me reopen my uh WB process which was still there I just hid

21:39it for a sec running this commands and um as you can see now I have my CSV file right there which contain the top domain and the count so copying again really simple of course I can specify the file format explicitly and have option if it's a CSV to you know mention the delimeter and so on but by default again

22:00dougb you know recognized by the extension the file format you want to export to and do the magic for you how easy is that an important information is that Doug can also process uh data that doesn't fit all in your memory it will spill it into this all right through this uh exercise with the attach and the

22:18database file we learned that Doug DB store the data by default in memory and it can persist on this through a DB file format that contains all table and metadata we can attach one or more database as we did to the attach commands and you can export the data directly using the copy command uh based on a select query all right let's talk

22:39now about how managing secret because the example was nice and easy because it used a public EDS S3 bucket however in the real world where we act all responsible as adults buckets should be private and secure so how do I authenticate to my cloud provider when using Doug DB dougb has a secret manager and there are multiple uh types of

23:02Secrets as you can see here and in general you have two ways of creating secret either temporary or persisted as you can see here in the whing persisted secret are stored in an encrypted binary format so the typical usage is usually temporary sequel so this is of course the format you can do for S3 you provide uh the key ID is secret and you

23:25mentioned type but in practice you don't want to create static ad Skys especially if you're running it locally but you use IM roll and if you use locally you probably use the ads CLI and use the SSO mechis so typically what you would do you use your ads CLI like this to SSO through a defined profile you

23:46authenticate and so that would create basically the relevant Secret in your ads folder then when you launch tdb you can now create a secret based on a provider credential chain and so what it does is basically fetching the relevant SSO credential on your adbs folder and I can check what is available as secrets we have another table function which is

24:11WB secrets and that's it I'm ready to query private buckets by the all again those are temporary if you want persistence you can do persistent secret but again true SSO it doesn't really matter because you have to refresh them again you know most every day or every X hour all right so far we've been running the compute locally uh on our laptops

24:35and storage can be separate and leave on a street as you've seen but for some use case you need to scale either to a bigger machine or by scaling jobs and this is where the cloud comes in and ddb can run in any process so typically you could run it in any runtime pattern runtime that you have already on your

24:53Cloud but then the question is how do you manage your storage how do you use your Doug DB database file and how to make sure there isn't any conflict when writing and how do you actually share easily this database file and if it's running on Naes then you still have this network back and forth that's where mother duck comes in it supercharge your

25:14duck DB with features that turns it from a solo mode let's say to multiplayer analytics experience and the good news is that moving from a local deck DB uh to a mod deck cloud is just one command and authenticate to mod deck is also one command and you can do that to any dougb client because anywhere you can run dgdb

25:35you can run mother do how easy is that so let's open again another ddb process I'm going to attach the database uh that I created with the top domain and the number of mention of uh DB so if I show the tables here I have two table and now let's say I want to connect to modu and

25:55move this data to the cloud so that I can and share it to other people but I can also use other bi tool or data uation tool that will use modu right to to create my dashboard because modu support a lot in the Eos data ecosystem tools and for days well I need to have my data somewhere hosted so that you

26:18know other people can create dashboards for instance so let's connect to matteru with one command and look at this it's our famous attach command so I'm just going to do attach and that's it now I'm connected to the cloud because if I do show tables I'm going to have a lot of tables because that's all my database

26:39also in the cloud let me just put a show database here and you see I have 30 database in the cloud how did that black magic attached to MD work well well the only thing you need once you log to modu and create an account is a token modu has a feature so you can go ahead and

26:59play around and once you have your account ready you can go to settings and basically you have your access token here and you can create any access token that you need so my access token is set up in my environment variable so let me quickly quit again the ddb process to show you and here I have the mod duck

27:21token variable and so that's how it's authenticated when using the CLI for instance if you don't have a mod do token you can also authenticate to a web flow so you click to a link authenticate to the web application and then you're you're good to go but best practice is to set up a mod duck token as an

27:38environment viable so yeah going back to duck DB and attaching uh the and connecting to mod is just one line and then you're ready to go and query on the cloud and so now for example if I run uh this query which is look famar it's kind of like exactly the same query that we run but it's used a database which is

27:58hosted on mod deck right so this sample of acon new data set is hosted over there so if I run this one you see that now this query is actually comput on the cloud and that's the beauty of dgdb because you have a inprocess uh dgdb database you have now a database in your client and a database in your server

28:18that can act in concert and that's what we call dual execution it's a feature from Mod deck where we're going to try to make the best out of each computer environment would it be your local or client environments and mother do Cloud so for instance you can adjoin data from your local storage with the cloud and depending on the query is going to

28:40either you know push the compute on the cloud which is the case here or locally if it's a local database and you can actually know that by uh running an explain in front of the query so if I run and explain here I can see I have uh my different operation and r C remote

28:59which is run on basically mod Dock and then I'm downloading the result if I'm attaching if I'm attaching again my local uh ddb database and I'm running same query and let's say I'm just running an explain from acur news STS dotop domains you see that here everything is happening locally now let's say I want to push back the

29:23results of my local compute to the cloud so I'm going to create a cloud database which going to be you know cloud acur news stat so this one is created on the cloud and now I can uh create a table again same semantic we saw let's say top domains has select from acur news. Top the so what is going to do here you see

29:46it went super fast because well the did said it's just a couple of rows but now I have this table available as a cloud database of course here I create a new table but you can do insert statement if you want incremental loads or you can copy a full database let's take the opportunity to explore the modu UI and

30:06the mod de UI is essentially a SQL notebook so you can have different uh notebook over there you have your list of attached database and we're going to come directly here uh with the share database so first we have the attached database and on my attach database you can see I have my cloud uh Network database and you can see I have

30:28information here about uh directly uh the data here the the sample is really small so it's not really relevant but you have the colon Explorer let me share it with you on a larger table so you see here it's contain uh all the the downloads uh from uh ddb on Python and I can see you know based on the dates the

30:50downloads uh increasing over the years uh between especially in tuta 2024 and so that's that's enable you have quick information uh about the set without doing any query now of course I can uh start writing query here and let's say the other query uh which is based on a simple uh data which is hosted on mod deck this is a shared database so we're

31:13going to understand what a share database here but I just want to uh run um this one and be doing mistake explicitly and running so this not going to work um and here we see you have fix it which going to fix by line uh your query so it detect the schema on the target table you see that's probably

31:33a group by domain that you mentioned here and already doesn't found it's URL so yes it's running boom in two click I fixed my query so this query is again a Shar database and a shared database as you can see as this little icon here which is you know I have read permission on it now coming back to my database

31:52here I can also decide to share this one and here I have a couple of information I can either make this available to my organization so anyone can access it or not and I can also make it public with uh with a link and I can create share like this and you see this command is just an attached

32:10command again so if I'm running any dug DB client and I'm connected to modac I can attach the share database just like this and start querying it and so we have a couple of uh shared database with open data set that we maintain I invite you to go to our documentation on the example data set you see we have the

32:30acar news uh database either sample or the full data set we have Stocker flow uh data with some schema and some uh squarey tent and of course here I use the UI to show you around the Modi but you can of course use any client to create a share and just do create share my name on of the share and then the

32:54database you want to share and that's it all right the last thing I would like to do again with mother duck is quering file over S3 and while this is important because yeah first if you want to qu over an object storage you need secrets so we saw how the secrets manager for ddb works now how do I query using the

33:14mon Dark Cloud compute I need to have the Secret store over there and so for that let's take back uh the command we use for dub and just add in mod deck now

33:25my secret using my SSO mechanis I'm already authenticated locally using the AWS SSO CLI I can create the secrets and all the secrets is available for modu so now I can start creating private buckets using modu but that's not the only thing fun is that I can use the netor band with between mod deck and UBS so if I

33:47run a query there I'm not anymore depending on my internet connection so let's do a small Benchmark we're going to run the same query but directly over A3 so without any intermediate step where we load the data from a street to a ddb table and we're going to first do this by just using DB without modd so

34:08let me run the timer on and then the

34:16query all right so it took uh 11 seconds for this specific query to run and now let's do the same but true mod duck still using my duck DB client but now I'm going to Leverage The Cloud compute and network band weave between my buckets and

34:40Moc and boom two seconds so hopefully you see the advantage clearly of having a storage and a compute on the cloud and a ddb client locally you can do a lot of different things optimizing your cost when you need to compute locally or l in the the cloud and the network between your blob storage if you have data out

35:02there so what we learn about mother duck is that authentication is just to one command to the attach and a Modoc token provided in your environment moving data from a local dgdb to mod deck is just one query you can just do a create table as as you would create a table locally but you target a cloud database I can

35:25create a share to easily share Cloud database I can do this from any dougb clients anywhere you can run dougb you can run mod deck I can leverage the mod deck UI to use the SQL notebook the AI fix it features or the Colin Explorer and I can leverage a cloud Network to speed up craying over uh object storage

35:46and safely secure my secrets all right let's swap up we had a lot of things to talk about why du DB is such a GameChanger for analytics whether are you crunching data on your laptop scaling up in the cloud with mod duck are just amazed that a database can run inside a browser hopefully you learn the core concept around dug DB and also

36:06around mdck and we have a lot more resource around dougb and mdck so feel free to check the links again yes in the description in the meantimes keep quacking and keep coding

36:19[Music]

FAQS

What is DuckDB and why is it different from traditional databases?

DuckDB is an open-source, in-process analytical database (OLAP) written in C++ that runs as a single self-contained binary with no external dependencies. Unlike client-server databases such as Snowflake or BigQuery, DuckDB runs directly within your application process, similar to how SQLite works for transactional data but optimized for analytical workloads. It can crunch large datasets on a single laptop, supports a friendly SQL dialect, and reads directly from CSV, JSON, Parquet, S3, and more. Get started with our DuckDB tutorial for beginners.

How do you persist data in DuckDB using database files?

By default, DuckDB runs entirely in memory and data is lost when the process exits. To persist data, use the ATTACH command to create or connect to a .db or .duckdb file. This file stores all tables and metadata in DuckDB's ACID-compliant format. You can attach multiple databases at once and switch between them with the USE statement. When launching the CLI, you can also pass the database file path directly as an argument to open it.

How do you connect DuckDB to MotherDuck cloud?

Connecting to MotherDuck from any DuckDB client takes one command: ATTACH 'md:'. This uses the MotherDuck extension to authenticate and connect to the cloud. You need a MotherDuck token set as an environment variable (motherduck_token), which you can get from the MotherDuck UI settings page. Once connected, you can query cloud databases, push local data to the cloud with CREATE TABLE statements targeting cloud databases, and share datasets with other users. Learn more in our getting started docs.

What is dual execution in MotherDuck and how does it optimize queries?

Dual execution is a MotherDuck feature where the query planner automatically decides whether to run computation locally on your DuckDB client, in the MotherDuck cloud, or both. If you query a local database, processing stays local at zero cost. If you query a cloud database, processing runs on MotherDuck's servers. You can even join local and cloud data in a single query. Use EXPLAIN in front of any query to see which operations run remotely versus locally.

How does DuckDB handle extensions and auto-loading?

DuckDB packages specific capabilities (like reading Parquet, querying S3 via HTTPFS, or spatial analysis) as separate extensions. Core extensions maintained by the DuckDB team are auto-installed and auto-loaded when needed. For example, querying an S3 path automatically loads the HTTPFS extension. You can also manually install extensions using INSTALL extension_name and LOAD extension_name, and install community-built extensions with INSTALL extension_name FROM community.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

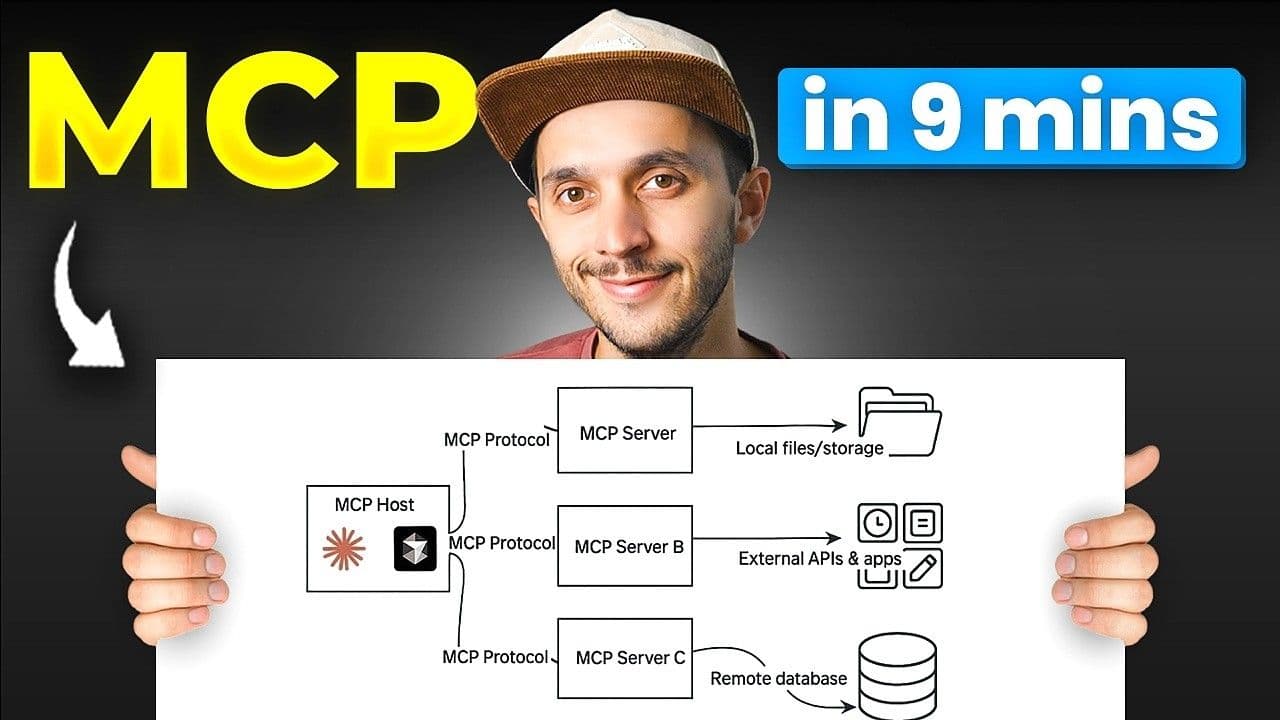

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial