From Curiosity to Impact How DoSomething Democratized Data

2025/09/10Featuring: ,Hear how DoSomething's data team escaped the enterprise data trap, achieving 20X cost reduction and transforming hours-long queries into seconds with MotherDuck.

Transcript

0:00Yo, hey everybody. Uh, good to have you here with us. Uh, I am Jacob here at Motherduct and I have Sahil here with me from Do Something. Uh, we're going to do something really cool here where we talk about self-s serve analytics with Do Something. And uh, this is going to be an interactive session. So, if you've

0:22got questions, throw them in the chat. We will get them at the end. Um, we are super super stoked to get rolling here.

0:29So I'm going to throw it over to Sahil now to uh take us away. Sahil, I'm gonna hide on the stage here. I'm still I'm still lurking.

0:38>> Sure. So hey everybody, my name is Sahil. I am the senior data engineer at a nonprofit called do somethingthing.org.

0:48Today we're going to talk about how do something saves 20x on cost for and achieved self-s serve analytics which was previously something we'd only dreamed of. Uh mainly leveraging mother duck um and thanks to our partners at there for putting together this webinar.

1:07So going to dive in with a little video that we made on our 30th anniversary.

1:30We call BS.

2:20[Music]

2:35[Music]

2:47So hopefully that gives you a little taste of what we're all about. Um, if you see at the bottom here, we have our staff. We're a New York City based 501c3 nonprofit. And the strip at the top represents the people that we are hoping to serve, mainly young people in the United States, ages 13 to 25.

3:06Our mission is to fuel young people to change the world. We spent three decades doing this. I think we're 32 years now.

3:15And do something was part of many of the activism, especially youth activism chapters of the last 32 years. Um, some were mentioned in the video. Some especially technology focused uh activism movements like trying to get uh skin color emojis onto the iPhones and Do Something had a huge part to play in that. We also were very popular during

3:37the MTV era. I don't know if any of you remember um but do something with sort of a very accessible way for young people to get involved in their communities and has from the beginning leveraged technology and sort of cutting edge marketing um whether it's social we were also one of the first nonprofits to use SMS as a sort of codebased way to

4:01interact with our our population. So, do something really has this history of being at the forefront of technology in the nonprofit space, which can tend to lag behind um sort of generally.

4:15So, about four or five years ago, we revised our strategic plan and came up with these strategic priorities. The first one is to foster a diverse and inclusive collective of young people. The second was to change the economic and political conditions that block young people from using their power. The third was to cultivate a culture of belonging and

4:37purpose within our organization and the collective of members that we serve. And I'll use that word members to mean the young people that are part of our our website. And we also want to build a sustainable organization positioned for long-term impact, which is probably the way um in which Mother Duck has helped us the most.

4:55So this is what our website looks like today. We've gone through many iterations. um do something has had many different evolutions. Um this is what you see when you land on the page and um are not logged in. We have sort of this call to action that um allows you to find your cause and start doing causes

5:13or different cause areas like climate, mental health, education. Um so these are the different ways in which young people who come to the website are prompted to to get involved. If they were to click on this call to action or the take action button here, they would be taken to this page.

5:30We have a highlighted action um called wave makers that has to do with protecting our waterways through cleaning up trash that ends up flowing into water bodies. We also have um an area for them to choose other kinds of opportunities to engage in. One is programs. Programs are what we call a collection of actions, events, and leaderships that tell a larger story.

5:52events. So, in person or online events where people, young people can get together to talk about an issue or to take action. And leadership opportunities are sort of how we envision uh people who are repeat actors on the site evolving their their membership into leading others. And we have a lot of um interesting stories of people who are once do something members

6:14going on to uh hold office, political office, going on to um host do something events of their own, start do something clubs at schools. So for us, especially now with our new strategic plan, we're really trying to evolve um the quality of membership and the depth of engagement from actions to programs, events, and leaderships.

6:35So if you were to scroll down, you would see some opportunities. We have two actions here, safe summer crew and 100 for 100. These were in association with GM around safe driving um as well as safe summer season which was also is actually the overarching program that encompasses um these two actions.

6:55Another action example is ripple effect um which is an association with Niagara bottling and has to do with um reducing the amount of pollution in our waterways. So if you were to click on that action, you'd be taken to this page. This is the sort of the get started page for a given action. You get some metadata about what is involved in

7:16the action. Is there a scholarship opportunity? Um in this case there is. What is the deadline? Do you get volunteer credit? A lot of our young people come to us because their school has a mandatory service requirement for graduation and so volunteer credit is something they're looking for and most of our opportunities do have them do offer volunteer credit and also

7:35an estimated time to completion and we'll use the time that they that they self-report um to produce their certificate for volunteer credit.

7:44We have a description of the action and some steps that will be involved. So if you were to get started and go to the first step, you would get some information. If you go to the second step, you would um get some more information, maybe watch a video, uh be taken to other resources. And finally, the third step is where uh the user is

8:04supposed to enter their their report back we call it where they report back to us what they did, how they did it, how they felt about it, um how many hours is spent, how many of their peers engaged and this is sort of their their proof of work of their engagement.

8:22Then on the back end, um our staff will go in and approve it or request more information. and if it's approved, they'll be eligible for a scholarship if there's an associated scholarship and they'll get their certificate if we offer volunteer credit for the section.

8:37So, our impact um which is relevant to tell you about do something but also to begin to tell our data story um over 8 million people have been activated through do something since 1993.

8:50We found that members are three times more likely to volunteer or advocate for social issues after they engage with do something.

8:57And there have been 650,000 actions taken by do something members um since 2018. Now within that um we have various cause areas but to highlight climate and sustainability um we had 28,000 climate and sustainability actions taken. 87% of members reported requiring skills essential essential for sustainable living and um we were able to divert 1.074 tons of toxic chemicals from

9:27landfill. So these are just some highlights very top level data that would be available on our website. But this is only one way which we use data.

9:34Data is important to us for for many reasons. uh probably foremost among them is that the the organizations that fund us have certain requirements for reporting um in order to to disperse funds and we also have an obligation to our board and to our membership to report um exactly how effective our offerings had been.

9:57So now to get into um more technical side of things our previous data system and I like I mentioned do something has been through a lot of evolutions. So in this particular version of do something that existed from 2015 2015 to 2024 there was significant data investment.

10:13Um like I said do something has has long been at the forefront of using technology as a nonprofit. We used to even call ourselves if you go to our GitHub um sort of organization description. We call ourselves a tech company for good. And so we took that startup mindset and tech forward mindset and built a a pretty large engineering

10:31team. Um, and it would they were able to collaborate with each other and sort of build a lot of novel and custom services that were at the time uh relevant and um and useful to sort of demonstrate what our system was. If you follow sort of ETL ELT, we were ELT. Um, we extracted from using Fiverr from many sources and we also had

10:58some custom scripts for ingestion. Um, we loaded all that data into a Postgress data warehouse. I'll put it in quotes because it's not it's not optimized to be a data warehouse and we'll get into that. Um, but that's the database that the previous team chose um in order to to store our analytical data and we would transform that data every

11:20night with DBT cloud. Now uh in the extraction side of things uh we were collecting from various sources including our social media ads, our social media performance and web events which is sort of something that will come up. Um we collected a lot of data but and this is something I'm I'm sure will resonate with many of you. Um

11:43the the ratio of of using that using that data compared to what was collected was very low. um especially for web events. Web events using snowplow captured pretty much everything that happened on the website, every modal open, every click, every hover. Um and that's just something that we weren't leveraging whatsoever. Um except in very small use cases that that could be

12:06achieved through simpler means, which is sort of where we'll get to. and fiverr at this volume, especially for a nonprofit, had a very high cost that was not justified by the analysis value downstream that we were able to achieve.

12:22In many ways, Fiverr was the largest single line item um within our our our

12:30data team and much larger than um the

12:34value we were we were able to extract. PO Postgress um in general is not able to handle heavy analytical queries in a reasonable time frame. There were times when I would leave a query running overnight and hope that it would finish in the morning. Um, and there are many times where I had to tell internal stakeholders that sorry, this query just

12:56can't happen as you want it. Um, because it's not tenable with our our system.

13:03And it was also expensive. Um, it might

13:07be surprising that a nonprofit has has medium-sized data at 4 terabytes. Um, but that, you know, largely due to our web event collection sort of ballooned over time.

13:20And every night our DBT cloud models which were sort of highly custom ad hoc

13:27one model per dashboard kind of thing took eight hours to run. So it run every night from from midnight to 8 am with DBT cloud which was also expensive and just very difficult to iterate on. Um you know if you want to do a a middle of the day run it would just be untenable.

13:47So I sort of already highlighted the need for change but just to summarize queries took hours if they ran at all especially the web the web event queries the cost was way too high for the value.

14:01Um our organization in reality relied only on a variety of relatively lowhanging metrics for our core work. Um none of which which were justified by our bill and non-technical team members lack direct access to insights. Um we have Tableau um and we have a variety of dashboards. First of all, they were too slow because everybody wanted live data

14:24and live data running on Postgress. You know, a query every click meant that creating and using Tableau dashboards was incredibly slow. Most of the time was just in watching the the loading spinner. Um and also it resulted in something that is also very common across the industry which is data request dependence.

14:43um every single metric that was not accessible in Tableau and Tableau um you know was just even if it was there it was too cumbersome for people to use more or less every single data question came um to the data team or to me and

15:01required sort of um manual intervention

15:06and they weren't able to answer any of their own questions. So at some point when we were rethinking our platform so do something went through an entire platform rebuild in 2024 mid 2024 and as I was taking the data side of that I stumbled onto duct DB and the sort of small data the beginnings of the small data movement

15:30and it started with exploring duct DB and realizing that it was easier to use and and um better for out of memory data sizes than pandas. And so I just sort of picked it up as my favorite nimble local analysis tool. And something Hannis, who's one of the the creators of DuckDB, mentioned is that his goal was to to

15:53minimize data fear using duct DB. And that's something especially as someone up and coming um you know sort of new to to heavy data work um was experiencing

16:06know we were evaluating whether we need to move to snowflake or data bricks or bigquery and there was I I'll tell you a lot of data fear um and the cost cost fear right what if I write a query and it just pops out with a bill that we as a nonprofit will find very difficult to manage um there was a lot of setup an

16:26infrastructure fear as to do I have the capacity to manage this infrastructure and especially for local analysis you know duct DB was just it made everything feel very comfortable and and uh user friendly and then we found out about Motherduck this was when Motherduck was still in beta I believe in February of of last year and we started talking to

16:50the folks over there and I'd like to shout out Alex Monahan who was sort our key contact in motherduck and they guided us through this process of operationalizing the benefits we got from ductb and taking it to the data warehouse level and that's something that I I kind of had hoped had ex would exist when I was playing with ductb and

17:13when motherduck came around and um it just seemed almost too good to be true but we were we were guided through this onboarding process and were able to collaborate on a lot of the use cases that we had specifically Um, and that led to us choosing Mother Duck as our our data warehouse for our rebuild.

17:31So now what does our our new data system look like? So this went into to effect June of last year and has been running since then. Um, it represents cost effective data investments. So in our old data system, I mentioned that we had significant data investment and a single data person is our is our reality now. Um, which is me. Um, like I

17:53said before, we had a 6 to eight person data team and just through the realities of our new strategic plan and the scope um the landscape of nonprofit fundraising in today's date and also just a refocusing of what we want to do as a nonprofit towards our mission um and away from sort of technological investment just for technologies sake.

18:15This is the reality in which we we find ourselves and and sort of the small data impact came at the perfect time. So what does it look like now for extraction? We still use Fiverr but we've used it only for G4 um which is Google Analytics 4.

18:32Um and that's only because we haven't been able to find a an easier way to ingest G4. If we were to do so we would be able to move off of five trend completely.

18:44There's a couple of custom ingestion jobs that I've written that are are very straightforward thanks to um a lot of duct DB quality of life um sort of an easy SQL and and that sort of thing.

18:57Now instead of loading into Postgress uh we load into mother duck and we're on their light plan and able to to get by very well at that price level and we transform using a dbt core via GitHub action. So like I said, DBT cloud took us eight hours and had its own cost structure and now DBT core although it's

19:20not apples to apples we have changed our philosophy around analytics but just to give a a headline our DBT core project takes about two to three minutes to run which falls very easily into the free GitHub actions minutes that we we receive every month from GitHub.

19:41So uh what went into these changes? We limited our sources. So we evaluated what what data do we actually need which I think is sort of key to the small data philosophy. We focused on our operational data from our operational databases and only aggregated web events. So we connect to G4 but we don't take in every single event that G4

20:03collects. We you know have sort of internally advised stakeholders that if you if you need detailed J4 analysis go to J4 and they have dashboard building tools there. We don't need to bring everything into Tableau.

20:15But if there are reports that they want to bring from G4 into Tableau or into motherduck then we're able to stream just those reports.

20:27In terms of uh loading, Fiverr at this volume has us eligible for the free tier which is was unimaginable in our in our previous iteration. Like I said, Fiverr was by far our biggest cost in the data world. And um and now we we're not on the free tier, but just in case we sort of broach that boundary, but we could be

20:48if if we just so chose. And we also were able to through this process of of limiting our sources and and using um tactical custom ingestion, we were able to make Fiverr a less critical component of our data system which you know if we need to move off of Fiverr or we want to switch to something else. Um just saved

21:09us a lot of sort of single point of failure um concern. And I mentioned that you know our transformation is is incredibly faster and and now free.

21:22So there are too many wins to name. This paradigm shift rebuild from the ground up with um sort of right sizing our data system to our actual size has come with so many improvements that they don't even really feel like improvements. They feel like a brand new world. But I'll I'll list the top three. Our costs are

21:44roughly 5% or less of what they were. And given the reality of constrained nonprofit resources, especially in this fundraising environment, reducing these costs to rights size against our downstream value, made remaining a data driven operation sustainable. Every nonprofit wants to be data driven. Um, but the reality is that the vast majority of nonprofits lack the resources to to truly be data driven.

22:10And reducing our cost has made sure, you know, going back to our strategic priorities to to have a sustainable organization. Reducing our cost is not just sort of to to pad our our bottom line, so to speak, but but really to enable us to be comfortable saying that data will always be a part of the do something story and make that commitment

22:32to our members and to our funders um and to the public. Our data system is now

22:40simple and fast, which as a single data person is incredibly valuable. I'm able to coherently develop, test, and iterate. Um, and it's not only a much simpler system, but orders of magnitude more performant. We talked about DBT runs going from eight hours to two minutes, although it's on the same models, but achieves the same purpose for us as an organization.

23:04And in general the performance of motherduck a dedicated OLAP system for the kinds of queries that um we and any organization is likely to run are just not comparable to to Postgress especially um they are just miles and orders of magnitude uh faster

23:26and I'll mention that our our mother duck costs are also um roughly 20x less

23:33less than what we were paying to AWS for our previous data system for accessibility. And this is something um we will jump into now. We had sort of a revoly moment or I had a revoly moment when I was talking to an internal stakeholder and they mentioned that they had a data question and I was sort of through my experience

24:03ready to jump in and solve that question for them and then they told me that they had already jumped into mother duck because I had given them access previously and already answered the question themselves.

24:15And I said, "Wow, I didn't know you needed SQL." They said, "I don't." And and that's something that we'll we'll show. Um and so we start to get to a world in which the buzzword or buzz

24:29phrase of self-service analytics becomes reality.

24:37So, like I started to talk about, we went from a world of, "Hey, can you pull this for me to never mind, I already got what I needed, which for us was a a dream come true without any specific training without me even knowing they were going to do so." non-technical team members were able to jump in, answer their own quick data

24:59questions. Not um nothing that was so

25:03complex that it sort of would uproot a data analyst, but but thing the sort of data requests that are sort of the the bulk of data requests. Maybe not the long tail, but things like how many X do we have? When was the last time we did Y? um a simple group buy or a minimum or a maximum

25:22and all that without knowing SQL and this led to our internal non-technical stakeholders having a clearer understanding of our usual user activity and the sort of topline metrics that we we talk about. And so when they go to when they do a webinar or when they uh talk to funders or they go do an in-person activation, they have not only

25:43the numbers at their disposal, but they have just a better sense of of what the picture is in the data.

25:51and one or two internal stakeholders specifically who are let's say uh somewhat technical but not in data specifically were able to with the help of chat GPT or um many of Mother Duck's features do even deeper dives and answer answer questions that would have taken me at least a day or two.

26:17So on that note, we're going to jump in jump into a demo that's going to talk about how can nontechnical team members explore, understand, and investigate data for themselves using using mother duck. So I will pass it over to Jacob.

26:32>> Hi Seal. Great. Okay, I'm going to share my screen here and we're just going to do a little exploration of Motherduck.

26:42Um, so this is the motherduck web UI and uh I'm just going to show a couple of things here that uh let us get into the self-s served notion which um you know lets you kind of run your data team in a in a way that lets you serve your users in a really powerful way. Um so

27:03the first thing that I have here is this notion of an attach statement. Now those of you who are like duct DB uh users may

27:12understand what this attach does but for those of you who don't um this is like a linked server in SQL server or a foreign data wrapper in uh Postgress. It lets us connect to another database in our system seamlessly. In this case Sahil made actually a public share for me so that I can reach uh I can access it

27:31between organizations inside your own org. you don't need to do it this way, but this is how he, um, uh, shared with me, which is great. Um, and so this is just a sample data set that we're going to do some exploration in. And so, uh, I run this and it gets attached and I can actually see it on the lefth hand side.

27:47I've got like a sidebar here and I have some notebooks, but I don't really care about those. What I do care about is this do something database, which is now added. And you'll see this little person icon that tells me it's a share. Now, something that's really nice about this, too, is it comes with permissions baked in. So um this is a readonly share. So

28:05if you're giving something like this to maybe an analyst, you don't need to worry about them messing up the data.

28:11They can only read it um you know if they want to create their own views, they can do it in their own database, but they cannot kind of u mess with the underlying data which is great. Um so I'm going to jump into this demo data set and we're just going to take a look at a couple things here. So what I have

28:27now on the left, let me see if I can make this a little bigger. Here we go.

28:29All right, that's fine. So this is our column explorer here on the left. And so it's just doing a bunch of calculations and aggregations for us kind of on the fly. Um I've got kind of three questions here we can just take a look at. So one question we might look at is like what kind of like active actions do we have,

28:46right? And so we can scroll through this

28:50um and we can look at the status column and we can see what we've got in there. So we've got 87 active, 26 hidden, five closed. Right?

29:00This is a very small data set, but it gives you a sense of the data very quickly, right? Um we can also check on other things. Volunteer dreaded, right?

29:07Okay. 16 of these are sourced here. 58 uh false, right? So 16 true, 58 false.

29:15And we've got a bunch of nulls in here, too, right? And so you can see my my what my percentages are here kind of as an absolute of the column. So I can very quickly kind of get an understanding of the data that I'm looking at. And then we can kind of look at um timestamps here like when are these things getting

29:30created. Let's see created at right here. Um so I can see that this data set is um from from June to July or sorry June not June it's not even June that's uh that's April April to August. And we can see kind of how many there are by bucket here. Not too many by other buckets but there's a bunch right here on this first

29:51date. Again this is just a sample data set that he'll um shared with me. So that's the first thing. The next thing we can do is um take a look at table summary which is uh here. So we can just

30:05take a look at what's actually in this data. Um and we can see what's really cool about this is um I believe the underlying source sahil is right >> in this case it's our it's our CMS but in general our our operational database is a MongoDB equivalent.

30:25>> Yeah. Okay. Okay. Got it. Got it. But what that means is we can just drop, you know, um nested things in here. Um and I

30:33can see what see what's in them kind of very easily. We're going to actually jump into like a SQL query in a minute.

30:38And of course, if I want to see my table definition, I can see that here too, right? Um and so I can kind of see what the shape is of my strrus. In this case, this is like a nested data set, right?

30:49Um which is very helpful. Um, let's go

30:53look at average scholarship amount. Again, we can do the s some more kind of quick analysis on this. Great. We've got our uh mean here at uh 7 uh 83. And then

31:07we can take a look at our end date to answer one more question, which is like, hey, what actions are open-ended at the moment? Find the end date column. Here it is. Right. Um, so we can see

31:19actually this is really interesting. 75% of this column is null. So a lot of these actions are still open. They have not been resolved yet. So like without without doing too much here, right, of writing any I have not written any SQL yet, right? I have gotten familiar with this as a user merely by interacting with this thing on the left sidebar. And

31:36of course, if I um I can query this, right? Um if I open it, oops, it's the wrong one. Let's open this one. Here we go. Uh, I can do something like from do something actions. Oops, there we go. And I run it. Um, oh, demo.

31:56I need the schema. That's my bad. So I get the data back and I can hide my left or my left hand sidebar and open up the righth hand sidebar. I get the same data set here. Where this is really helpful is on the left it's just bounded to a table. On the right it's not. And so I

32:10can say, you know, where end date uh is not null or something, right? And

32:18now I get only 30 columns back. So for ones that don't have an end date, we can then kind of like again look at like our status column and we can see, oh, ones that have end date are mostly hidden.

32:28Actually, they're not there's not very many active, right? Um that's really great in terms of just quickly exploring. Um, sometimes you want to like drill down maybe into a column. And so I'm just going to show that quickly here. Um, so we're going to do more traditional SQL query. You'll notice I skipped the word select, right? We don't

32:48need select. It's optional. Uh, by default it does select star. So now I have the exact same query.

32:54Um, I think I want to um,

32:59let's take a look at this causes column. Let's see if we can get this in a data set for So we can use this function called unnst pauses like this uh as flat right um and it comes back and then here it's on the right we can see all of our okay that actually didn't it did one

33:22level of the nesting I think I needed to do two levels on this one but um uh that's slightly more complex so we're not going to look at it at the moment but um that's kind of what it looks like to take a look at these sets of keys. Um, and you can see we went from 118 rows to

33:40202 rows, right? And why we did that is because some of these um causes have multiple rows inside them, right? So, we have this like deeply nested data structure. We're not quite quite shredding the JSON, but we're beginning to kind of look at it and explore it in a very kind of uh simple way. Now, this is um uh uh you know, a little bit

34:03exploratory with the um with the data here, but it's if you you do need to know SQL for what I was just showing you, but there's another option here, which is where we can take a look at what we call command K and we can ask natural language questions. Okay, so um I'm just going to hit command K and just

34:22copy this question in. Now, we only have one table right now. So, um, obviously

34:28we're fairly constrained here, but what's happening is when we do ask these natural language questions, the selected database up here, all of the metadata is packed into the question as well. So, we're asking this question, uh, in this case, it's using GPT4.1.

34:45Um, you can choose other models. Uh, it's kind of baked into Mother Duck's kind of backend. So, we're making these calls on your behalf. Um, you know, just build through one place. But you get the you get this action here. And now I can see okay I have I have a query. Okay great. Um this makes sense not null

35:03right count star. Okay that is that is a uh an interesting query to take a look at. This can also handle joins. We don't have a joinable data set at the moment but um uh it's very helpful. One of the things that h that works in here too for those of you who are kind of thinking about maybe AI workflows and like how do

35:18you make these querying easier is um motherduck also includes column comments in the context that it passes to the LLM. So if you want to add column comments to your underlying data set you can do that and then that'll enrich and make it much much easier to query. Um and then we can do something like this um without altering the table. Sure.

35:42Okay. Let's take a look at the action subtitles first. So, just comment this one out.

35:50Go down here, hit enter, and let's uh let's do select star from um demo.do

35:57something actions. Let's just bring our data up again. Okay. And so, we have this action subtitles. Let's see what we have here. Subtitles.

36:10Subtitle. Here we go. Okay. Um,

36:15so what we can do in this case is let's just do subtitle, which we'll do like ID. I think there's an ID, right? Okay. No, there's not.

36:25But, uh, again, because we have a kind of AI built into the UI, it came back and said, actually, it's underscore ID.

36:32Okay, great. Thank you for fixing that for me. I'm not familiar with the data set. Cool. So, we can use this prompt function and we can say something like um uh let me check prompt. You know what? Actually, what we're going to do is I'm going to do it slightly differently because I do not remember the specific syntax and I don't

36:52like reading the docs. So, I'm just going to say um add a column that uses prompt the prompt

37:01function to uh create a oneword summary

37:09of the subtitle column. Okay. So, it's going to build a um it's

37:18going to build a query for me. Um, okay. And this looks good. Uh, let's apply it and we can run it. And so this actually, what's really cool about this is it's it's making a call for each row in my data set, right? Um, and uh, it looks good. We can also do something like um, uh, sentiment ranking in here. We can

37:44also do a few a few other pieces. You know, how I like to think about these things is actually get a get a um get like a list of maybe 10 categories and then say given a list of these 10 categories, you know, categorize each object in this, right? Um I think we we've uh we have some researchers on our

38:04team kind of on the AI side and something like 40 40% of data inside your database is text. So just by putting the prompt inside it, you can do these really really kind of neat neat functions. Um so uh yeah, that that was

38:20the other piece that I wanted to show you here. There's of course vectors and kind of more advanced kind of full text search and hybrid search type stuff that you can do too, but this is just the tip of the iceberg and I wanted to show kind of what is possible for a user that may not be an expert but can just kind of

38:33like ask natural language questions. They're an expert in what the business how the business operates and if you have a nicely shaped data model, you can take a lot of ground. um pretty quickly here. All right, I'm gonna stop sharing um and we are going to hop into um Q&A next. So, um

38:53sweet. Uh I think I I have a couple of questions to tease us up while everyone uh if you have questions, we can we can take them.

39:01Um so I think you talked about this. My first question was uh how big is the data team? It's now you're you're you're a solo operator now. Is that right?

39:10>> That's right. It used to be, you know, previous generation of do something used to be six to eight people.

39:16>> Yeah. Yeah. >> But ever since I joined, it's it's just been me. >> Yeah. Yeah. Makes sense. Um h what did migration look like? you know, you showed a couple slides of like the pre like the 2024 world versus 2025 like um how did planning and execution go from like you know old stack and to new stack and then you know sunsetting

39:37old stack. >> Yeah. So migration was very comprehensive I'll say because we rebuilt the entirety of do something from the ground up. So it wasn't just moving one data system to a new data system. Do something as a whole was built from scratch again.

39:53um which in some ways made it easier because I was able to embed the sort of mother duck small data philosophy from the ground up. I didn't have to sort of make things work but even if I had had to so I didn't migrate data from from Postgress to mother duck.

40:07>> Yeah. >> Took me all of one day perhaps despite the the four terabyte size.

40:12>> Sure. >> Um and because duct DB SQL is a subset

40:17of or sorry a superset of Postgress. >> Yeah. um migrating those models was relatively easy, although we also did rebuild them from scratch just to to be more mindful of of resources. So, um I would say migration was a a big lift that was only made easier by by using duct and mother.

40:37>> Yeah. >> Yeah, that makes sense. Um, that's definitely a uh uh always a pain like you you could you can see where you want to go and it's like wow I got to do a lot of work to get there.

40:50>> Um it's really really cool that it was it was you know even possible with a very lean team.

40:55>> Um what is something you learned you know over the last year during this experience that you wish you could go back and tell the um the younger version of yourself?

41:08Good question. I think this whole idea of non-technical team members having access to the data was such an unexpected win but so huge and sort of

41:19mental model shifting that if I had known it earlier it would have been even more of a no-brainer to make this switch. Um it already was you know

41:31despite mother duck being quite young at that time just the sort of support and um technical sophistication we felt from from the mother duck team gave us a lot of confidence to to move away um from Postgress and even to eliminate alternatives like snowflake bigquery data bricks but if we had known this particularly I think it would have just

41:53been a sort of a a non-decision that would have just been very straightforward Because I think when you try to sell um data system evolutions to to the business or in our case to the executive team and the board, you you don't really win any hearts or minds by talking about performance or talking about sort of the technicals.

42:17You win on cost and sort of internal experience. And the cost story was very powerful. But I think in a lot of ways, and this is sort of where I think the magic and the energy comes around the dubd ecosystem, the magic is not only have we made it cheaper, not only have we made it faster, but it also feels

42:38better. It's like, you know, those like meme triangles where it's like you can only choose two out of the three.

42:43>> Yeah. >> Somehow with duct DB and Mother Duck, it it feels like you get all three.

42:47>> Y and that that sales story to your internal stakeholders is very powerful. >> Yeah. Yeah, that makes sense. Um, yeah, I mean, especially, you know, for technical folks, like the speed is great, but um, like what does it mean for me as a business stakeholder, I think, is what it always comes back to.

43:05And like, you know, once you understand how to build those tighter feedback loops, um, you know, it's hard to get them away from that. Um, looks like we've got a few questions here in the chat. Um, Gerald, do you want to serve these up? Do you want me to? You got it.

43:18Okay, perfect. Seal, you want to take this one? >> Sure. So I'll just read the question out loud from Patrick Garcia. When you say nontechnical users have data access, do you mean the business users are mother deck are using the mother duck interface directly now? So that is true for for four or five of the mother duck um of

43:37the of our internal team members. Um some of them on the engineering team, some of them outside um they are using the mother duck interface directly. Now none of them know SQL, so they're interacting in exactly the ways that Jacob just showed you. Um, and and I was surprised, I think many of you will experience if you make this make the the

43:58shift that the sorts of questions that get asked, 95% of them in my experience

44:05are these sorts of simple group eyes, simple statistics, um, when was the most recent X, how many users do we have in California? Um, these sorts of questions represent the bulk of the curve. And these are exactly the sorts of questions that you can answer very easily with Motherduck. And now with the command K, you can even answer deeper questions. Um

44:27but it's just it's sort of funny when you realize that um the distribution of questions and complexity is not is not normal. It is heavily skewed towards these sorts of statistical highlights.

44:42And these questions are really easy to answer in Mother Duck. So that's what they're doing for the most part. But they're also using command K and chat GBT to to formulate deeper questions.

44:52>> Yeah, that makes sense. Um, related to that also from Patrick, one more here.

44:59>> Uh, so I'll read it again. So is the self-service PI still Tableau for the end users in that case? How was the transition integration process? So we're still using Tableau. We use Tableau cloud um in the both in the previous generation and now as well. Um, I did end up sort of rebuilding all of our dashboards from scratch. Um, not because

45:18it was strictly necessary, but more so because our our data models have shifted so much and the questions that we wanted to answer as an organization um have changed. So, it was better for efficiency. They go really fast now, right? So, that's one huge thing.

45:34>> Yeah. >> Um, they are are relatively instantaneous, I would say. um to the point where people question if they are working properly because they're so used to one click equaling one minute um in in our previous generation.

45:47>> Yeah. >> So in that sense the transition has been really seamless. I will say that you know and maybe this is us an us thing maybe it's a general thing having BI doesn't mean people use BI right so you can have everything available but the use of our dashboards is still much lower than the sort of

46:13a priority assumption would be and so having motherduck column explorer table summary as that sort intermediate step

46:23gets way more use than than Tableau ever did. >> That's interesting that I I I don't think that's surprising. Uh as it's long tail, right? And the BI dashboards often are built with, you know, the center of the distribution in mind.

46:41>> Um so that's not surprising. All right, we've got a great one here, I think, uh about kind of mother duck technicals and and how you used it. Uh I'll read this one. Um but see how this question is also for you. Uh moving to motherduck did you have to worry about any design principles to make queries better or

46:55mother duck just worked out of the box? >> I'd say if anything it sort of decreased worry. One reason is the sort of friendly SQL. So there are a lot of things you can do in duct DB land that are are not possible or require much more complex SQL in outside of it.

47:16One good example is the columns operator. So if you have some columns that you want to select with with rejects, you can do that. That's that's pretty huge for one of our data models and something that when I was trying to figure it out without without using that operator was giving me a headache. I'll also say that um the performance gains

47:35means that mean that you have to you don't have to be as careful, right? When things go so fast in milliseconds or less, optimizing from five milliseconds to two milliseconds is not that important. When our models took eight hours to run and if you know sub-optimal query added another hour on top of that, that was a big problem, right? with with motherduck

48:00the performance is so so powerful even on the light plan which you know so we use some of Motheruck's least powerful instances even then um our queries are so fast that we can be much less careful about sort of detailed optimization >> yeah yeah that's a good uh I I'll add a little bit more uh uh color to that um

48:23you know one thing that is different that we frequently see people coming from Postgress um ask questions about and see I don't I don't know if this came up with with you I know Alex guided through some of the complexity there but there's a couple things that I'll call out the first one is like um don't use primary keys and

48:39you don't need to worry about indexes typically people coming from Postgress are like okay I'm going to put all my data in mother duck and then I'm going to add primary keys I'm going to add indexes and um generally those actually don't help you at all and they make your inserts slower um it's not really designed to work that way and So um uh

48:59or as like a as a super as a super fast like optimized way whereas like Postgress it's like all right I need to put an index on this so I can query it.

49:06Um on the flip side the order of your data in duct DB informs the um how the row groups are built which is kind of like the columner way that it works with data. And so, uh, especially when you're working at like with like time oriented data, um, ordering it as you insert

49:24massively massively increases, uh, ability to query it if you're going to filter on those on those timestamps. And so, those are kind of like three things that I frequently see people coming from other databases where they just like didn't quite understand that and they they maybe get stuck for a minute and say, and then we we show a couple couple

49:42little tricks and they say, "Oh, this is way easier. I didn't realize that. But it's definitely a it's definitely a mindset shift to go from uh you know e

49:51even other more traditional data warehouses um into motherduck just because it is not exactly the same. So yeah um I don't see any other questions.

50:03We're happy to take a couple more here. Um uh if you want to type type away.

50:11Uh if not, I think Oh, perfect. Let's see here. This is a great question. I'll I'll take this one, I think. Uh unless heal, you have specific specific. All right. All right. Okay. Do you have to worry about the cost implication of nontechnical users in the prompt function or that's insificant in the grand scheme of the bill? Great question. So, by default, we

50:31actually limit it pretty tight in terms of how many credits you can burn using um using AI functions. Um, you can actually turn them off in your instance also if you don't want them. Um, and if you do want to increase your credits, it is like a talk to sales conversation.

50:49Generally, it's pretty small. Um, obviously, uh, the main trick with the prompt function is like build your tables incrementally so you persist the results of the prompts. So every time you run the model like maybe using dbt you're not um uh you're not rehitting the same uh query every time right so you can like persist those results in a in a new table. Um

51:13it's not that expensive though I mean models are only getting cheaper uh in in general when you're doing that prop time functionality you're not running it across like millions of rows. Um

51:25yeah uh so I wouldn't say it's insignificant right like you do have the ability to run millions of of AI queries right like

51:35against the table with a million rows you will you will hit the open AI API a million times right um and so uh you you can of course turn that off if you want um uh but like generally you know when we're giving users not or or less technical users tables they're kind of smaller aggregated tables So, they

51:52couldn't even like, you know, generally do that. But, it's a fair question. Um,

51:58I'm going to go to the next one here from Ben. Uh, can you describe how you connected Mother Duck and DBT? I actually didn't get to this in the demo.

52:06Um, I'm just going to pull it up real quick. Uh, and share it. Let me do this.

52:13U, Ben, thanks for teeing me up, actually. Okay. Um, I'm just going to drop this repo in here real quickly just as an example if I can find the tab here.

52:28Cool. Um, all right. So, this is a a repo that has some simple inserts. Um, I have a small DBT project in here just scaffold in here um with a single model and it's actually a Python model. Um, CE and I talked about this but didn't really didn't really broach this. You can run Python in your duct DB inside

52:47your GitHub actions. Um uh just as long as you return a data frame, it can do whatever you want. And so what's really neat about this is then you can run it with a GitHub action. And um this is very similar to what Sahil is doing uh today. I don't know if you want to add any color to like the GitHub action and

53:06how you think about it. Sahil. >> Yeah, there's really not much to tell here. It's not it's not sort of a um tricky setup at all. It's very straightforward. Um, we just set up a a Python image with GitHub actions and and run it run dbt.

53:22>> Yeah, >> we're serving our docs elsewhere um just for privacy, but but yeah, it's very simple. I pipe the the logs to Slack afterwards just because >> why not?

53:34>> Yeah. Yeah, >> that's really cool. That's a good that's a good trick honestly. Um, so yeah, it is that simple. Um the profile for example looks like this. So I'm just connecting to my DB and when you use this MD colon in your path um it grabs the token from your environment and so that token is put in the environment

53:53right here in this step. Right? So um it's a pretty pretty simple way to do that. And of course you know we can go here let me go to the actions and here we can just run the workflow.

54:07It takes 23 seconds. It's probably mostly configuring things there. Um,

54:13but let's come on, dude. Let's go. Okay, it's running. So, we can kind of check in on it. We can see it's building our image. My image is So, obviously, if you're running these like really tighter and smaller, you want your image to probably be pre-built. I'm using the Ubuntu base image. Um, and so, and now if we go back to Motherduck

54:32here, and I have a query, oops, here we go. somewhere in here that just I can run and I can see here's my data 55.

54:41Yeah, that's right. 9:55. That's UTC time. I can see this just wrote in from my GitHub action. Oh, you can't see that here. Let's go here. There we go. Share this tab. Um, so you can see I just ran this query. I'll run it again. Um, here's my data in Motherduck coming from uh this GitHub action that I just ran.

54:59So, uh, that's kind of the what that looks like.

55:06It's all right. Um, well, I really appreciate everybody's questions here. Um, it's been really great to to hear more about this and kind of tell the story. Um, all right. Looks like our slides not coming up. I'll just uh I'll I'll uh I'll talk about it u manually here. So, we are throwing a conference called small data um that is um

55:29uh in San Francisco. Gerald through the uh threw the link in there. Sahil will be talking. Oh, I see the slide. A lot of really great a lot of really great talks coming up um including including from Sahil and we'd love to see you all there. Um where we talk more about, you know, the ethos of what we're building

55:46and you know what the future will look like. So really appreciate everyone's time. Uh Sahil, awesome to to hear about this and Gerald, thank you for producing.

55:56>> Thank you guys so much for having me. >> All right, bye everybody. Bye bye.

FAQS

How did DoSomething.org reduce data costs by 20x with MotherDuck?

DoSomething replaced a 4 TB Postgres data warehouse, expensive Fivetran ingestion, and 8-hour nightly dbt Cloud runs with a lean stack built on MotherDuck. By limiting data sources to only what was actually needed, switching to dbt Core on free GitHub Actions (runs in 2-3 minutes), and dropping to MotherDuck's light plan, they cut costs to roughly 5% of their previous spend. Fivetran went from their largest line item to nearly free tier eligibility.

How can non-technical team members query data without knowing SQL?

MotherDuck has several features that enable self-serve analytics without SQL knowledge. The column explorer shows distributions, counts, and statistics for every column by just clicking on a table. The table summary lets users preview nested data structures visually. And Command K is a natural language interface where users type questions in plain English, which are converted to SQL queries using GPT-4.1 with full table metadata in context.

Why did DoSomething.org choose MotherDuck over Snowflake or BigQuery?

As a nonprofit with a single data engineer, DoSomething needed a system that was cost-effective, simple to maintain, and fast. MotherDuck on its light plan delivered queries in milliseconds compared to Postgres queries that sometimes ran overnight. The dbt Core pipeline dropped from 8 hours to 2-3 minutes. The team also found an unexpected benefit: non-technical staff could answer their own data questions directly in MotherDuck, giving them true self-serve analytics.

How do you connect MotherDuck with dbt Core using GitHub Actions?

The setup is straightforward. Create a dbt project with a profile that uses the md: connection path for your MotherDuck database. Set the MotherDuck token as an environment variable in your GitHub Actions workflow, install dependencies in a Python image, and run dbt. The dbt integration picks up the token from the environment automatically. DoSomething's entire transformation pipeline runs within the free GitHub Actions minutes each month.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

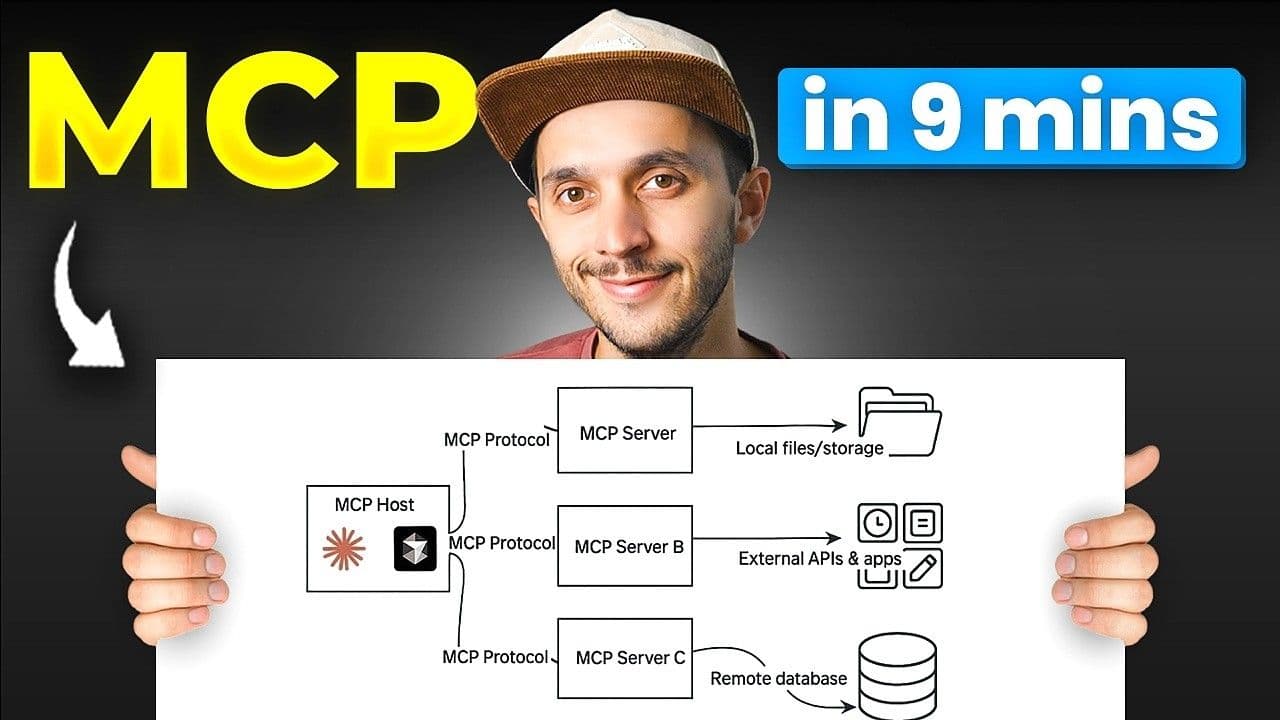

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial