TL;DR: Feeling the limits of Pandas with large datasets? This guide shows how DuckDB acts as a turbocharger for your existing workflow, not a replacement. Learn to query DataFrames with SQL, achieve 10-40x faster performance with 90% less memory, and scale from your laptop to the cloud with MotherDuck in one line of code.

If you work with data in Python, you almost certainly use and love Pandas. Its DataFrame API expresses ideas powerfully and has become the standard for data manipulation.

But as your datasets grow, you've probably started feeling the pain. Your laptop fans spin up, your Jupyter kernel dies with a MemoryError, and simple groupby operations take forever. You've hit the "DataFrame wall."

Many developers assume the next step is a massive leap to a complex distributed system like Spark. But you can break through that wall without leaving your favorite tools behind.

DuckDB and MotherDuck solve this problem by augmenting your existing workflow. In this post, we'll walk through a practical guide, based on a recent live demo, showing you how to:

- Query Pandas DataFrames and local files with the full power of SQL.

- Achieve massive performance gains—often over 10x faster and with 90% less memory.

- Build a hybrid ML pipeline that uses the best of DuckDB, Pandas, and Scikit-learn.

- Scale from local to the cloud with a single line of code.

How to Query Pandas DataFrames Directly with DuckDB SQL

DuckDB's zero-copy integration with Pandas runs on Apache Arrow. You can query a Pandas DataFrame with SQL without any costly data conversion.

We'll create a sample 500,000-row DataFrame and run a standard aggregation in both Pandas and DuckDB.

import pandas as pd
import duckdb
import numpy as np

# Create sample sales data - 500k rows
np.random.seed(42)
n_rows = 500_000

sales_data = {
    'product': np.random.choice(['Laptop', 'Mouse', 'Keyboard', 'Monitor', 'Phone', 'Tablet', 'Headphones', 'Cable'], n_rows),
    'region': np.random.choice(['North', 'South', 'East', 'West'], n_rows),
    'amount': np.random.uniform(10, 2000, n_rows).round(2),
    'customer_id': np.random.randint(0, 20000, n_rows),
    'quantity': np.random.randint(1, 10, n_rows)
}

df = pd.DataFrame(sales_data)

The standard Pandas approach is familiar:

# Pandas approach: Standard groupby aggregation
result_pandas = df.groupby('product').agg({
    'amount': ['sum', 'mean', 'count']
}).round(2)
# Time: ~0.02 seconds

Now, let's do the exact same thing with DuckDB, querying the df object directly:

# DuckDB approach: SQL query on the same DataFrame
result_duckdb = duckdb.sql("""
    SELECT
        product,
        SUM(amount) as total_sales,
        ROUND(AVG(amount), 2) as avg_sales,
        COUNT(*) as num_transactions
    FROM df  -- Notice we are querying the DataFrame 'df' directly
    GROUP BY product
    ORDER BY total_sales DESC
""").df()
# Time: ~0.01 seconds

DuckDB automatically discovers the df object in your Python environment and lets you query it as if it were a database table. For SQL fans, this exposes the full declarative power of SQL on your DataFrames.

Querying Files Directly from Disk

DuckDB can query files like CSVs and Parquet directly from disk, streaming the data instead of loading it all into memory. This works well with datasets that don't fit in RAM.

# Save to CSV for demonstration
df.to_csv('sales_data.csv', index=False)

# Query CSV directly without loading into pandas
result = duckdb.sql("""
    SELECT
        region,
        COUNT(DISTINCT customer_id) as unique_customers,
        SUM(amount) as total_revenue
    FROM 'sales_data.csv'  -- Query the file path
    GROUP BY region
    ORDER BY total_revenue DESC
""").df()
# Note: File was streamed from disk, never fully loaded into memory

DuckDB supports wildcards, so if you have a folder full of log files or monthly sales reports, you can query them all at once:

SELECT * FROM 'sales_data_*.csv'

This saves you from writing tedious Python loops to read and concatenate files.

The Performance Showdown: DuckDB vs. Pandas

Let's scale things up and see where DuckDB truly shines. We'll generate a 5-million-row dataset of e-commerce transactions and perform a few common analytical queries.

First, we generate the data and store it in a local DuckDB database file (my_analysis.db).

con = duckdb.connect('my_analysis.db')

con.sql("""
    CREATE OR REPLACE TABLE transactions AS
    SELECT
        i AS transaction_id,
        DATE '2024-01-01' + INTERVAL (CAST(RANDOM() * 365 AS INTEGER)) DAY AS transaction_date,
        -- ... additional columns
    FROM range(5000000) t(i)
""")
# Generated and wrote to disk 5M rows in 1.56 seconds
# DuckDB database size: 115.3 MB

Notice the compression: 5 million rows with 7 columns take up only 115 MB on disk.

The Pandas Approach

To analyze this data with Pandas, we first load the entire dataset into memory.

# Load into pandas from DuckDB
df = con.sql("SELECT * FROM transactions").df()
# Load time: 0.54 seconds
# Memory usage: ~1035 MB (due to Pandas' in-memory object overhead)

Now, let's run three queries: a complex group-by, a top-N aggregation, and a moving average calculation. These operations take around 1 second in total, including the initial load, and consume over 1 GB of RAM.
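The Pandas code for those three queries isn't reproduced in this post. As a rough idea of what they might look like, here is a sketch on a small synthetic DataFrame. The column names (`category`, `region`, `customer_id`, `amount`, `transaction_date`) and the data are assumptions, not the demo's actual notebook code:

```python
import numpy as np
import pandas as pd

# Small synthetic stand-in for the transactions table (assumed columns)
rng = np.random.default_rng(42)
n = 10_000
tx = pd.DataFrame({
    "transaction_date": pd.Timestamp("2024-01-01")
    + pd.to_timedelta(rng.integers(0, 365, n), unit="D"),
    "category": rng.choice(["Electronics", "Home", "Toys"], n),
    "region": rng.choice(["North", "South", "East", "West"], n),
    "customer_id": rng.integers(0, 500, n),
    "amount": rng.uniform(10, 2000, n).round(2),
})

# Query 1: group-by with several aggregates
q1 = tx.groupby(["category", "region"]).agg(
    total_sales=("amount", "sum"),
    avg_sale=("amount", "mean"),
    num_transactions=("amount", "count"),
    unique_customers=("customer_id", "nunique"),
).reset_index()

# Query 2: top 10 customers by total spend
q2 = tx.groupby("customer_id")["amount"].sum().nlargest(10)

# Query 3: moving average over 7 consecutive daily totals
# (equals a 7-day average when every day has at least one sale)
daily = tx.groupby("transaction_date")["amount"].sum().sort_index()
q3 = daily.rolling(window=7).mean()
```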

The DuckDB Approach

With DuckDB, we don't load the data. We query the 115 MB file on disk directly.

# Query 1: Sales by category and region
q1_duckdb = con.sql("""
    SELECT
        category, region,
        SUM(amount) as total_sales,
        AVG(amount) as avg_sale,
        COUNT(*) as num_transactions,
        COUNT(DISTINCT customer_id) as unique_customers
    FROM transactions
    GROUP BY category, region
""").df()

# ... (similar SQL for Query 2 and 3)

The total time for the same three queries is just 0.07 seconds, with minimal RAM usage.

The Results

| Approach | Load Time (s) | Total Query Time (s) | Total (s) | Peak RAM Usage |
|---|---|---|---|---|
| Pandas | 0.54 | 0.45 | 0.99 | ~1035 MB |
| DuckDB | N/A (streamed) | 0.07 | 0.07 | Minimal (data streamed from 115 MB file) |

DuckDB is 14x faster and uses over 90% less memory. This difference becomes even more dramatic as datasets grow.

In a test with a simulated 50-million-row dataset (by reading a Parquet file 10 times), DuckDB was over 40x faster and used 99% less memory than the Pandas equivalent, which required over 10 GB of RAM.

This isn't just about speed. DuckDB's memory efficiency lets you analyze datasets on your laptop that would be impossible with an in-memory tool like Pandas.
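Numbers like these depend heavily on hardware, so it is worth measuring on your own machine. Below is a small, hypothetical timing helper (the name `timed` is ours, not part of DuckDB or Pandas) built on `time.perf_counter`. The workload inside the `with` block is a stand-in for a real query:

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Record wall-clock seconds for the enclosed block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


timings = {}
with timed("sum_of_squares", timings):
    total = sum(i * i for i in range(1_000_000))  # stand-in for a query

print(f"sum_of_squares: {timings['sum_of_squares']:.3f}s")
```

One thing to watch when timing DuckDB from Python: `duckdb.sql(...)` returns a lazily evaluated relation, so put the call that materializes the result, such as `.df()` or `.fetchall()`, inside the timed block. Otherwise you mostly measure query construction.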

A Real-World Scenario: An ML Feature Engineering Pipeline

Performance benchmarks are useful, but let's see how this hybrid approach works in a practical, end-to-end machine learning pipeline. Our goal: predict customer churn based on 1 million transactions from 50,000 customers.

The strategy is to use the right tool for the right job.

Step 1: Heavy Lifting with DuckDB SQL

We'll use DuckDB for what it does best: fast, complex aggregations over large datasets. We can write a single SQL query to generate a rich set of features for each customer, including recency, frequency, and monetary value.

# This single query engineers 20+ features from 1M raw transactions
features = duckdb.sql("""
    WITH customer_stats AS (
        SELECT
            customer_id,
            MAX(transaction_date) AS last_purchase_date,
            DATE '2024-11-28' - MAX(transaction_date) AS days_since_last_purchase,
            COUNT(*) AS total_transactions,
            SUM(amount) AS total_spend,
            AVG(amount) AS avg_transaction_value,
            -- ... and many more aggregations
        FROM customer_transactions
        GROUP BY customer_id
    )
    SELECT
        *,
        -- ... additional calculations on the aggregated data
    FROM customer_stats
""").df()
# Engineered 20 features for 50,000 customers
# Time: 0.05s

In just 50 milliseconds, we've crunched 1 million raw transactions down to a clean, 50,000-row feature set with one row per customer.

Step 2 & 3: Preprocessing and Training with Pandas & Scikit-learn

Now that we have a smaller, manageable DataFrame (features), we can hand it off to the rich ecosystems of Pandas and Scikit-learn for the final steps.

# Step 2: Use Pandas for final type conversions and target variable creation
features['last_purchase_date'] = pd.to_datetime(features['last_purchase_date'])
# ... create the 'will_purchase_next_30d' target variable

# Step 3: Use Scikit-learn to train a Random Forest model
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# feature_columns: list of the engineered feature column names (not shown here)
X = features[feature_columns]
y = features['will_purchase_next_30d']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

model = RandomForestClassifier(n_estimators=100, max_depth=10, n_jobs=-1)
model.fit(X_train, y_train)

The Power of the Hybrid Approach

The entire pipeline, from generating 1 million rows of data to training a highly accurate model, takes under half a second (0.47 s).

| Stage | Time (seconds) | Tool Used |
|---|---|---|
| 1. Data Generation | 0.07 | DuckDB |
| 2. Feature Engineering | 0.05 | DuckDB (SQL) |
| 3. Preprocessing | 0.01 | Pandas |
| 4. Model Training | 0.34 | Scikit-learn |
| 5. TOTAL PIPELINE | 0.47 | Hybrid Stack |

We used DuckDB for the heavy data crunching, then passed a smaller, aggregated DataFrame to Pandas and Scikit-learn for their specialized APIs. This approach lets you iterate faster and, as developer advocate Jacob likes to say, "be wrong faster," which is critical for effective data science.

Scaling from Local to Cloud: How MotherDuck Works

Everything we've done so far has been on a local machine. But what happens when your data grows too large even for DuckDB's out-of-core processing, or you need to share your analysis with a team?

MotherDuck provides a path to the cloud. Connecting to a local, persistent DuckDB database looks like this:

# Connect to a local database file

local_con = duckdb.connect('my_analysis.db')

To run the exact same code against MotherDuck's serverless cloud platform, you just change the connection string:

# Connect to MotherDuck - just change the connection string!

cloud_con = duckdb.connect('md:my_db?motherduck_token=YOUR_TOKEN')

That's it. By adding md: and your token, you get serverless compute that can scale up to instances with over a terabyte of RAM, persistent shared storage, and a full data warehouse feature set.

You can develop and test your pipelines locally with DuckDB, then deploy them to MotherDuck for production-scale jobs by changing only the connection string.

Conclusion: A Faster, Simpler Data Workflow

By integrating DuckDB and MotherDuck into your Python workflow, you don't have to abandon the tools you love. You augment them, creating a hybrid stack that gives you the best of all worlds: the interactivity of Pandas, the raw power of SQL, and a path to the cloud.

You get speed, simplicity, and scale. You can iterate on your analyses faster and tackle problems that were previously out of reach for your laptop.

Ready to try it yourself? Sign up for a free MotherDuck account, grab the Jupyter Notebook from this tutorial, and see how much faster your own workflows can be.

Frequently Asked Questions

What is the difference between DuckDB and Polars?

Polars is another high-performance DataFrame library with its own powerful expression API. Like DuckDB, Polars is vectorized and very fast. The key difference is philosophy: DuckDB is an embedded analytical database that brings a powerful SQL engine to your local files and DataFrames. Polars is a Rust-powered DataFrame library designed to be a faster, more parallel alternative to the Pandas API. DuckDB also has mature out-of-core capabilities, which allow it to process datasets larger than your available RAM by spilling to disk. Many choose DuckDB for its robust SQL capabilities and out-of-core processing, while Polars is excellent for those who prefer a DataFrame-style API.

How does MotherDuck handle scaling for large datasets?

MotherDuck scales both vertically and horizontally. Vertically, you can choose from different instance sizes for your workload, up to massive machines with over a terabyte of RAM. Horizontally, you can run multiple, isolated jobs in parallel using service accounts, all sharing the same underlying storage.

What are the data size limits for DuckDB?

Locally, the main limiting factor for DuckDB isn't RAM; it's your available disk space. We've seen users process hundreds of billions of rows on a single node. RAM primarily affects speed, while the disk handles the scale.

Transcript

0:00Hello everyone. Uh, welcome to today's webinar called databased going beyond the data frame. My name is Gerald. I'm on the marketing team here at MotherDuck and I'm joined by Jacob who's one of our developer advocates. Um, a little bit of housekeeping. We are recording this. So, if you signed up for it, uh, we'll be sending out a link to you to watch it if

0:23you want to go back and watch it again, as well as we'll be posting this on our website afterwards uh, as well. Uh we'll have time for a Q&A at the end. So there should be kind of a a chat bot chat box kind of on your right side of your screen of StreamYard here and you can

0:39put uh any questions or even just say hi right now. Tell us where you're uh watching from, joining us from. Uh Jacob and I are up in right now sunny Seattle.

0:48Uh so usually it's dark and gray but we get some sun today. Um yeah. Um with that out just a quick agenda. I'm just going to do a little bit of an intro just into, you know, what MotherDuck is if you've never heard of MotherDuck before. Uh, and then we'll dive right into a demo with Jacob kind of showing

1:03us how to use MotherDuck, DuckDB, uh, and Python pandas. Um, and then we'll

1:10have like a time for a Q&A. So, uh, feel free even if you have questions during the, you know, the demo part, uh, you can pop it in the chat and if it's relevant, we'll get to it, uh, even during the middle of it, too. So, all right. Next. So, just for those of you who don't know what MotherDuck is,

1:27MotherDuck is a cloud data warehouse. We're all about making big data feel small. Um, we kind of do that in a few different ways compared to uh traditional data warehouses is uh one is we just kind of we focus on eliminating a lot of the complexity and overhead that you get with um a distributed system. uh and all that is mostly

1:46leveraged because we have DuckDB on the back end which allows us to uh really quickly uh scale up to you know we give you small instances if if you want or scale up to really large instances uh if you have really beefy queries you want to do but that allows you to remove that complexity that you normally would have

2:03in a kind of distributed um system as well as we just really want to make it quick and easy and sometimes even fun to get to insight. So a lot of what we focus on is you know how how long does it take you to go from having a question to getting an answer and not necessarily you know optimizing for let's say hey

2:22how quickly can you query a petabyte of data. A lot of it is like you know most people aren't querying a petabyte of data.

2:27It's more like hey how can how quickly can you get answers to your everyday questions that you have or your everyday um pipelines that you're running. Uh and and lastly you know we want to make it just performant and cost-efficient.

2:38Again, a lot of that is because uh we're powered uh underneath the hood by uh DuckDB. But then we kind of, you know, DuckDB typically, if you let me back up if you haven't heard of DuckDB, DuckDB is an open-source OLAP database. Uh it is uh by itself extremely performant and cost-efficient. And then MotherDuck comes

2:57in uh and kind of builds on uh uh built a data warehouse uh with you know sharing access controls um uh recovery and everything that you would expect uh in a in a data warehouse uh you know for you know kind of a multiplayer analytics solution um and in terms of you know you

3:17know a data warehouse is nothing without its ecosystem. So MotherDuck you know in our view sits kind of in the middle as the data warehouse and it connects with all of the tools in the data stack that you normally you know you work with you know most every day you know in this case today we'll be doing a lot of work

3:32with with Jupyter uh you know but uh you know we work with kind of your favorite you know ingestion transformation orchestration and BI tools as well. So with that um let me hand it over to Jacob. I'm going to hide these slides and if you want to share your Let me add your screen your computer to the stage.

3:52 >> Yeah, sure. We'll do this. Um, great. So, you can see Jupyter here in front of me. Uh, just as Gerald mentioned, uh, we do we will have time for Q&A at the end.

4:02If you have questions as we go here, just put them in the chat. We're going to catch them uh, at the end, right? So, uh, that way we can kind of like get through what we're doing here and then and then take on kind of more detailed questions about what we're up to. So, um, what am I talking about today,

4:19right? Uh, I'm Jacob at MotherDuck. Um, for those you don't know me, I'm going to talk about, uh, ways that you can use DuckDB and MotherDuck together to kind of turbocharge a lot of your data science workloads. Um, we're going to focus on Pandas since it's kind of the incumbent uh, DataFrame solution. And obviously there's lots of others that fit in the

4:39space. Um, and we're going to do some live coding here together here in Jupyter, which is uh not my favorite.

4:44I'm not a Jupyter native, so you might see some weird things in here. Just know that that is my uh me me kind of working through Jupyter. I'm more more of a Python script fan, but for the sake of this, I uh I picked up Jupyter and started working through it. Um, you know, I think I think where we really

5:02think that MotherDuck and DuckDB shines is a couple of things. Um when you think about it in the context of of pandas and maybe other kind of data workflows you're doing inside of Python I think the the first thing is you know uh it's just fast it's fast you get your answers fast you can iterate quickly you can

5:19modify it quickly you can be wrong faster right a lot of data science is like getting to wrong fast right um there's more wrong answers than there are right answers how do we how do we get there um what do we do when we have data that's bigger than our RAM right what do we do uh how do we handle that

5:35DuckDB can work on out of core joins. Uh, MotherDuck has really big instances we can run this stuff on, right? Uh, and then of course it is fairly uh cost-efficient. Um, it is not as um it is

5:48much much cheaper to run uh than something for example like Snowflake. Um, MotherDuck benches favorably versus like a Snowflake 3XL which costs $128 an hour. MotherDuck instance that competes with that is about $5 an hour. So just to give you a sense of like where the differences are there, especially when you're doing ad hoc analysis, uh you

6:10want it to be fast. Um that's where we're going to start. So let's take a look here. Um we're going to do three things.

6:18We're going to just do kind of a pandas to DuckDB to MotherDuck flow. Uh we're then going to do a little bit of exploratory data analysis. I say at scale here, it's kind of tongue-in-cheek. It's really just like a few million rows. And then we'll do like a end-to-end kind of ML feature engineering pipeline example that just takes uh

6:35takes a data set and kind of uh interchanges it seamlessly between SQL and DataFrames. [snorts] Um so here let's start. Um so the first thing I have in here is uh I'm going to do my imports right. Um uh doing some some

6:52work with pandas and uh DuckDB and numpy of course numpy if you prefer to pronounce it that way. Um, and so we are going to create our data set, right? Uh, 500,000 rows. Let's just run this. And so now you can see we have our 500,000 rows on it, right? Um, so what does it look like to kind of do this type of of

7:14activity with with pandas, right? Um, and we can just run this. Let's just see. Um, great. So it takes about four tenths,

7:24four, sorry, four hundredths of a second to run. It's pretty fast. uh you know on this 500,000 row data set. That's pretty good. Um what does it look like to run this on DuckDB? Right? So here is here's what the syntax looks like to run DuckDB's SQL. Right? So I'm referencing my DuckDB object that I've imported up

7:39here. Right? Um and we have this SQL this SQL

7:45API that we can use just to pass a string that will return as a data frame.

7:50Right? So we do .df() to return that as a data frame. The other thing that's really cool about this is we can select from Python objects in the Python uh space because we're zero copying it with Apache Arrow. So I create this DataFrame object. I call it df, right? Um

8:08and we can query it with DuckDB. It gets added to our SQL catalog. DuckDB can see it as with the name of the Python object, which is really cool. So let's run this. Okay, it's also fast.

8:21Okay, in this case, slightly slower than pandas, which is kind of funny. Um, run this a few times, it probably would show uh faster. That's very amusing to me.

8:32Uh, such is the risk of a live demo. Um, who knows? Probably because I'm like streaming video, etc. Who knows? Um, so uh that's the first thing that I just wanted to show you. This is what it looks like to kind of interact with a DataFrame uh in SQL, right? Now, one thing that's really cool is we can um

8:52let me just do this real quickly here. Um we can just query the CSVs directly on disk, right? We can also do with Parquet and JSON. In this case, I have I have CSV since that tends to be kind of the place where all of this data lands unfortunately for all of us. Um so we're going to just drop this data set into

9:11CSV right here. Um and then we will query it uh at rest. So, we'll just do that. So, you can see we rip it to CSV and then we query. Takes about a tenth of a second. We're not loading into memory, which is really cool. We're just streaming it from disk. Um, so this means we can skip it. This is really

9:26great for like maybe large parquet files or large CSVs um that that can take a long time for us to uh operate on. So, that's like really really kind of a nice ergonomic thing.

9:38The other thing that I love about this is it can also do wildcard querying. So you'll see that I just changed the file name from um sales data to star.csv. Now just because I'm in my folder, right?

9:50There's only one CSV in there. Um this means that it's going to do a intelligent file name matching. So if I've got 10 or 100 CSVs, I don't need to worry about writing a Python loop to get all the file names or uh any of any of that stuff. I just do star. It'll select all my CSVs. That again, that works for

10:07JSON, that works for parquet. It's a very nice uh set of ergonomics here so that we can identify or so that we can quickly get to the answers uh we are seeking. Um a nice nice little uh design

10:20affordance there. And the cool thing here is um uh that all of this just you

10:27know to to tie this into MotherDuck to kind of you know um show show why we care about this here is we can actually use the exact same SQL to do this uh in the cloud as well which is really awesome. So uh I'm going to create a persistent database in this case right? So I'm going to use this connect API which is

10:49different than the SQL API we've been using so far, right? Um this connect API is going to just basically create a connection object that we can use. So this connection object um we're going to create our Python or our database in in Python here. Um and then we're going to create load the data into it. Right? So

11:07this is actually going to write the physical data to disk because we're not using an in-memory database. Right? This is not like pandas. This is a physical database. So we can actually write the data to disk and then we can query it right and again return it as a data frame right and so you'll see again we're using this local con object we can

11:22use execute we can also use SQL these do the same thing um and uh we'll print

11:28them and then to connect to MotherDuck it's the exact same right so in my MotherDuck I just add this right so all I'm doing in my connection string is I'm using this md: which tells it to use the MotherDuck extension and then a database name and a token the database name is technically optional Um, so my database or my token is uh in

11:47my environment, right? That's why we imported os earlier. Um, and so my MotherDuck token is already in there.

11:53So I don't have to worry about authentication from that perspective, but it's it's there for me to use. So we can uh run this. Let's do it. Uh, let's see here. Command enter. We'll see it run. So you can see it ran locally very fast. And now MotherDuck is going. So what's happening here on the MotherDuck side is there's two things. Um the CSV

12:11is not particularly large but uh we do have to kind of go out to the network and load the data in. Um and then we are also authenticating. So it's authenticating right now and then loading the data on the MotherDuck side.

12:22And then once it's in there, we can just query it with the identical SQL syntax that we wrote up here. Okay, great. So again, it's fast once we get authenticated and the data loaded, it's fast to query it, right? Um so this is great. And let's see, we can also now see it kind of anything that can connect

12:42to my MotherDuck, we'll now see in my DB I have this sales table. Let me just pop in there real quickly. Um,

12:52let me share this tab instead. And so I can just say from Oh, you know, this is I'm logged into the wrong account. That's okay. All right, we're not going to do this right now. We'll come back we'll come back to that part of it. [laughter] That's okay. um uh that way I'm not fooling around logging in while we're uh

13:09waiting for this to go. But that's the first part here, right? So the loop the loop that we just completed is we took data from uh we generated a data in a DataFrame. We were able to query it with DuckDB as a data frame, pass it back and forth between Python objects and pandas, right? Uh, and then we were able

13:28to just write SQL against those and then write it out to CSV and then read that CSV and then of course do that same operation in MotherDuck, right? And so all of these things are very easy and um, you know, hopefully you can uh, kind of see how all of these pieces are fitting together for maybe what would

13:47look like for you. Um, I'll briefly talk about like why it's fast um, before we jump into the next thing. So, one thing that that DuckDB does that is really great kind of at the scale. Oh, amazing.

13:59Lights are now off. Um, hang on one second here. I'll be right back.

14:09Perfect. All right. Not note for the studio, Gerald. Um, anyways, uh, let's

14:15see here. Um, so it's fast because it's taking these files and it's breaking them into a bunch of chunks and then it's vectorizing the compute across it, right? So that means we can run a bunch of queries in parallel. At the scale of like 500,000 rows, we don't really see too much of a difference. But at the

14:29scale of maybe millions of rows, we'll see we'll see what that looks like to kind of operate on our data.

14:35Okay. Um so the next thing we have here is uh let's just do some like exploratory data analysis. So again, we're going to generate data. Um I'm going to connect to this data using this duckdb.connect and then I'm going to query it with the SQL API. So in this case, we're just going to generate some transactions like sales type

14:54transactions. We're going to take a date. We're going to uh have a case statement that puts it in a category, an amount, a customer ID, you know, all this kind of uh stuff in here. And we're going to do it five million times. Um and then what we do here is we we're actually executing this checkpoint. So

15:11this is just going to make sure we're writing this to the disk. So, we're going to take advantage of the fact that we have a real database here and we can actually get our data into disk and we can see like how big the data set is and just like take a look like how much data does it really take for like 5 million

15:25rows. Like how much data does it take up to to work on this, right? So, let me run this.

15:31Um, there we go. Okay, so we wrote five million rows to disk. It took 1.7 seconds. Our database size is 6.2 62 megabytes, excuse me. Um, so pretty good compression there. Uh, 13 bytes per row. Um, this is this is really great especially as we get into larger and larger data set sizes, right? Um, when you're dealing with potentially like

15:55event type data, maybe trying to do ML type prediction, it can you can have quite large data sets, right? And so figuring out how to uh keep them tight really uh really matters. So let's just see like what does it look like to do this maybe with pandas, right? So again, I can this is great. I get my data frame

16:12and then I can start doing things to uh doing things to it. So let's just run this. So uh the first thing is uh we'll let's do some analysis on it with pandas right uh we got some group bys number of unique customers some some aggregates um we've got top customers hey like what what's our customer ids with the most

16:32sales right what's get us the number the 10 largest uh and then we have like a 7-day moving average for daily revenue kind of like all the type of stuff that you'd maybe do with a transactions you know maybe an e-commerce store or something type type data set here so let's like see how long it takes and how

16:48much data used. So it took us uh about six tenths of a second to load it and it used about one gig of RAM. Um and we can see how long our queries took here. Um so we're at kind of like a 1.1 seconds here for this data set and one gig of RAM there. So like what does it look like to

17:08do the same stuff in DuckDB, right? So, so one thing you'll notice here is um this is actually this this amuses me a little bit. Uh obviously for those of you who are like pandas and DataFrame enjoyers, uh you'll notice that SQL is a little more verbose, right? Um for better or worse, uh it is definitely

17:27more verbose. Um but hopefully we can show you maybe one reason why you would consider using this type of language. Um which is that it's much faster. So our queries are doing the same thing, right?

17:37What's our sales by region? What's our top customers? you know, what's our 7-day moving average? Um, what do these things look like? Let's just run it.

17:45And again, wrong button. Okay, so we can see these queries. So, we went from we went from 1.1 seconds for all of this to uh point to a tenth of a second. It's for all intents and purposes much much faster. Also, this is a 62-meg file.

18:01We're not This is This is super quick. Now, why is it quick? Um there's a lot of things that are happening kind of behind the scenes here, but as soon as this data is in DuckDB, DuckDB adds and calculates a bunch of stats on top of it that make it really fast to run these kinds of calculations. Um and then

18:16again, it's massively massively parallelized. So let's just like take a look at like what the performance looks like. Again, I'm just kind of comparing these query times between pandas and DuckDB. And so we can kind of see DuckDB is around 10 times faster and it uses about 90% less memory, right? So this really matters. Again, not at the scale

18:33of hey, five million rows, what about 500 million rows, what about five billion rows, you know, what about those types of those types of analyses, you know, having that 10x difference in speed means you can then run that analysis maybe 10 or 100 times during a day and not uh not once, right? And that is a really big difference for handling

18:52these these types of things. Um,

18:56 So we can also take a look at what it takes to run 10 copies of this file, which is 50 million rows, right?

19:05 As I was alluding to. So let's do that. What I'm doing is actually just copying this file, because it's in my DuckDB database as a transactions table. I can just copy it to Parquet really easily.

19:18 So we just ripped it to Parquet. It's now on my local

19:26 disk as Parquet, which is great. And we can see now it's 68 megabytes.

19:32 That's a little bit bigger than the DuckDB file, right? The DuckDB file has a lot more compression tricks happening in it that make it a little tighter. But Parquet, obviously, for interoperability lets us use lots of different things. There are really great reasons to use Parquet.

19:46 So let's do a quick simulation of what it looks like to run

19:52 this. So let's do this. I'm

19:57 going to simply take this file that we have on our local disk and read the Parquet with Pandas, and we're going to do it 10 times, right? So we're going to append those reads onto our DataFrame, and then we'll run a quick aggregation on top of it.

20:15 Now, when I run this, it takes somewhere around 10 seconds. In the comments, if someone who's a Python expert and knows a little bit more than I do can help me: how the heck do I get this timing to work right? I'm trying to get the timer to work right. It's going to say 3

20:28 seconds when this completes, and it definitely takes longer than 3 seconds. Anyways, deeply amusing. [laughter] I think we need to use timeit in a slightly different way to execute the queries. Anyways, if someone can tell me how to fix this offline, I would greatly appreciate it. Anyways,

20:47 this is what we're looking at here in terms of what it takes to read this file 10 times and then do this analysis on top of it using Pandas.

20:58 So again, we take this 68-meg Parquet file, and once we read it with Pandas, we end up using somewhere around 10 gigabytes when we read it 10 times. So now let's just

21:11 take a look at what this looks like in SQL, right? This is deeply amusing to me, by the way. So here's 10 UNION ALLs, right?

21:22 This is taking that data set and just reading it 10 times. I'm manually enumerating it here. Obviously we could generate the SQL with a Python function and then just inject that SQL into our SQL function here. I didn't do that here to keep it simpler

21:40 to see, but it is kind of a funny way to just be like, hey, what does it look like to run on a bigger data set? I don't know. Union it all 10 times. It's a good trick. So yeah, this is the same thing here. Let's run it.

21:52 Did I press the wrong button? Oh, there we go. Okay, so yeah, we can see it

21:58 just ran that in 1.3 seconds. This is, I think, actual real runtime, unlike our previous one. Let me just check it again. Okay, I keep hitting the wrong button. But yeah, it's running very, very quickly. It doesn't use very much RAM. We're looking at sometimes around a 25x speedup. It uses way less memory. It says DuckDB time varies

22:15 with OS caching. I wasn't actually able to replicate that when I started using Jupyter. I was seeing that in Python, but Jupyter was not replicating that. So [snorts] anyways, note for previous me, I guess. So this is really great, right? This is where we can start to see that DuckDB

22:32 is a lot faster in these use cases, because it takes advantage of a bunch of stats and things that are already in Parquet files, and those hacks add up to really nice, fast performance that doesn't use a lot of RAM. And so we can do a lot of great stuff

22:52 here. 25x is non-trivial at this point, and using less memory is amazing. So this is kind of where we end up: 4 to 150x faster depending on caching, but a lot less memory. Basically, what this means is you take your laptop that has 32 gigs of RAM,

23:13 hopefully, maybe 16, but a lot of RAM, and now you can expand the scope of what type of analysis you can do on it before you even worry about getting it into prod. And you can again iterate and run faster. So that's that part. The last thing I want to talk about is what does it look

23:31 like to run an ML feature engineering pipeline. Again, this is all local. We're not using MotherDuck here.

23:37 We're using DuckDB. But the way that you connect those two things together is you simply change your connection string to add md: and your token, and you are logged in and you can use those things together. So this is a very simple customer churn prediction: will these customers make purchases in the next 30

23:56 days, based on a million transactions from 50,000 customers? Again, we can use SQL to just generate data for us using this range function, which is amazing.

24:07 And we're going to just put this in a table. Again, this just gets materialized on disk, which is really great. So we just created a million rows from 50K customers in a tenth of a second. And we can see what that looks like, right? Really cool stuff here in terms of just how

24:25 simple it is to get started. Okay. So once we have this, how do we do a little bit of feature engineering, right? Well, of course, me being a SQL enjoyer, I'm going to do SQL, and I'm going to do things like last purchase date and days

24:44 purchase and all these different features that might impact my model, right? So we have these

24:53 calculations in here. In terms of getting into the details, I'm not going to get hung up too much.

24:58 This is a totally fabricated pipeline example. But the last thing I want to call out is, again, what we're doing is passing it to a DataFrame at the end, right? So we do all this work in SQL, we pass it to a DataFrame, and I'll show you why that's important in a minute. So let's run

25:13 this. Okay, great. So now we get back these 20 features. It takes a tenth of a second, and we can see all this information. And again, what we're passing back is just a narrower set of data into our DataFrame, just 50,000 rows. And this is great in terms of

25:31 what we can use here for running our pipelines. So now let's take a look at doing Pandas preprocessing.

25:39 So what's really awesome about this is, because we can just pass things back and forth between DataFrame objects using Arrow behind the scenes, this all just works for us, right?

25:49 So we can do things like use these types of functions in Pandas to convert types and things like that. We can create target variables using RFM scoring, right? Was it recency, frequency, monetary or something?

26:07 I can't remember. Yeah, that's right. Hey, look at that. That's a pretty good pull for me. I used to work in retail, so RFM was something I spent a lot of time on. Anyways, so we can

26:18 take a look at these. We can clean them up, right? We use this suspiciously not-random seed in here to do that. And then we can time how long things take, just to give you a sense. Of course, because now we're working on a smaller data set, this work should be pretty

26:34 fast, right? And again, yeah, great. Very, very fast: 28 milliseconds. We've identified some really interesting points of data here in our preprocessing, and again we're seamlessly passing objects from SQL back and forth to Pandas.

26:53 Okay, now, because again we're just using DataFrames and we can pass these things together, we can now use something like sklearn on this, for example, and do some random forest stuff.
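A hedged sketch of that last hop, from a features DataFrame into scikit-learn, might look like this. The feature names, the synthetic data, and the rule used to build the target are all invented for illustration; only `train_test_split` and `RandomForestClassifier` are real scikit-learn APIs, and the demo's actual features and model settings are not shown in the transcript.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
n_customers = 500

# Hypothetical per-customer features, standing in for the SQL output.
features_df = pd.DataFrame({
    "spend_last_30d": rng.gamma(2.0, 50.0, n_customers),
    "transactions_last_30d": rng.poisson(3, n_customers),
    "days_since_last_purchase": rng.integers(0, 90, n_customers),
})

# Made-up target: noisy threshold on recent spend.
noise = rng.normal(0, 20, n_customers)
target = (features_df["spend_last_30d"] + noise > 100).astype(int)

# Split into training and test sets, then fit a random forest.
X_train, X_test, y_train, y_test = train_test_split(
    features_df, target, test_size=0.2, random_state=42
)
model = RandomForestClassifier(n_estimators=50, random_state=42)
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
importances = pd.Series(
    model.feature_importances_, index=features_df.columns
).sort_values(ascending=False)

print(f"Test accuracy: {accuracy:.2f}")
print(importances)
```

Because the target here is derived from recent spend, that feature should dominate the importances, which mirrors the kind of sanity check the speaker does on the fabricated data set.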

27:02 Here are our feature columns, right, that we defined earlier. Here are some data prep options just to make sure this all works. And we can run it. And again, we can see we've done some training. We've split our data set into a training set and a test set. That's amazing. Very smart. And then we can actually

27:25 train our model and see what things look like. So we've gone from end to end here, again passing these objects between different libraries so that we can use what we need when we need it to solve whatever problems we have at hand.

27:41 I'm not going to get too much into the model here at this point. We're into, you know, AI fabricating some of the code that I wrote here, so I'm not going to get too into the weeds. The important part to understand here is that we can pass seamlessly between objects and libraries,

27:58 and that means when we need extra horsepower we can lean back into something like MotherDuck to use it, right? And let's just look at feature importance so we can see what it looks like. Well, okay. So this is very interesting.

28:11 It probably makes sense if you think about retail sales, right? What things would predict what type of person would purchase in the next 30 days, based on

28:23 what we're looking at here? Well, spend and transactions in the last 30 days are highly predictive. I think that makes a lot of sense to me. So, good news.

28:30 Our fake data set is somewhat easy to reason about and semi-true.

28:37 So let's look at how long it took to do all this stuff, right?

28:43 Okay. We generated the data; it took a tenth of a second. We did some feature engineering, also fast. We did our preprocessing. We did our model training. Our total pipeline here is less than a second. So the takeaway really here is we can move things around

29:00 into where we need them, the right libraries for the right purpose, and now we can make things super, super fast.

29:06 Again, what Gerald mentioned earlier, that iteration speed. Look, I love that it's fast because it's nice to use things that are fast, right? It's nice to drive a fast car. It's more fun, whatever. What I care about, though, in this case is that it

29:21 means I can iterate faster. And being able to iterate faster means that I can get to my answer faster. And that's really important. Time is money, indeed. And it's very effective here. So, key takeaways.

29:34 Let's just talk about these real quickly and then we'll get into some questions. We can do zero-copy with Pandas with Apache Arrow. We can query files without loading them into memory.

29:44 We can easily flip things from local to MotherDuck with a single line change. It says 2.6 times faster. Well, that was my testing locally. That did not prove out this morning, but that's okay. For exploratory analysis, right, we're at nine to 10 times faster. It uses less RAM, right? We can stream those data sets in when

30:04 they are bigger than RAM. And the last thing is we can do our complete end-to-end pipelines really fast. Again, this only scaled up to a million rows; probably at larger scales you'll see even bigger gains. But yeah, you can take this hybrid approach, use the right tools, use the tools you

30:21 need, right, between DuckDB and Pandas and scikit-learn and all this stuff, right? So yeah, that is the overall thing here. This is how I think about using DuckDB for data science: really making that data crunching easy, and then when you really need the extra horsepower you can

30:42 fire it up over to MotherDuck, where we can run really big instances. I think our biggest instances run at more than one terabyte of RAM. And they're all serverless, so you just get billed for when you use them. It's a great way to offload those really big jobs into a

31:01 cloud platform. All right, I am going to stop sharing my screen and we can

31:09 take some questions. >> Cool. First, kind of a housekeeping question, I guess. Are you going to share the demo code?

31:18 >> What we'll do, Gerald, I think, is we will share it as part of the follow-up post-webinar. I'm not sure where we want to put it yet.

31:28 Maybe we'll throw it into the Learn section on MotherDuck. We can figure out where we want to put it.

31:33 >> So stay tuned. If you registered for it, we'll send a link to it in the follow-up email. Otherwise, it'll probably show up on our website sometime soon. Yeah, we'll definitely send it in the follow-up and then figure out how to land it somewhere else.

31:48 >> Yeah. What other questions do folks have about MotherDuck or DuckDB or how bad my data science is? Any of those things, or questions on any of that?

32:09 Oh, thanks, Joe, for the note on the Python timer. Yeah, I'll [laughter]

32:14 yeah, it's very, very amusing to me.

32:20 Yeah, I think the right way to do it from a Jupyter perspective is to use the percent sign, percent sign, time (%%time), and you can see how long the cell takes to run, which is true system time. The timer is unreliable for reasons that I was not able to understand yesterday.

32:39 Yeah.
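`%%time` is a Jupyter cell magic, so it only works inside a notebook. For plain Python scripts, one common way to measure wall-clock time is `time.perf_counter`. The helper below is a sketch of that alternative, not something shown in the webinar, and it makes no claim about why the demo's timer misreported; the `wall_clock` name and the workload are made up for illustration.

```python
import time
from contextlib import contextmanager


@contextmanager
def wall_clock(label, results):
    """Record elapsed wall-clock seconds for the enclosed block under `label`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


timings = {}

# Placeholder workload; in practice this would wrap a query or a file read.
with wall_clock("sum_of_squares", timings):
    total = sum(i * i for i in range(100_000))

for label, seconds in timings.items():
    print(f"{label}: {seconds:.4f} s")
```

Wrapping the whole operation, including any step that materializes results, helps ensure the measurement covers the work actually done.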

32:43 >> All right. Doesn't seem like we've got too many questions here. Well, that's great. You all know where to find me. Hit me up on LinkedIn. You can find me there or on Twitter/X,

32:54 Matsonj. Yeah. Oh, I see a question here. Great. You want to pull that one up, Gerald?

33:04 >> Okay. >> Awesome. So yeah, Joe's asking how scaling is set up with MotherDuck.

33:09 >> Yeah, good question. So MotherDuck scales on two dimensions, horizontally and vertically. Vertical scaling just basically means you can get larger and larger nodes. MotherDuck has five node sizes. Again, the largest one is 100-plus CPU cores and a terabyte-plus of RAM. The smaller ones, I think, are

33:32 two or four CPUs, something like that, and like 8 gigs of RAM. So you can step up as you need it. And then from a horizontal perspective, you can get as many nodes as you want. The abstraction to do that in MotherDuck is you can create service accounts for your users, and then each

33:54 job or training run or whatever you're doing can run on a separate service account, and those will all be isolated compute from each other, but they can all share the same storage, which is a really great little trick there. And so if you've got some core data set you want to

34:07 run a bunch of stuff on in parallel, you can do that. >> Lights again.

34:14 >> Let us put these lights >> on. It's fine. >> Hang on. Hang on. I feel like I'll just go wave at the light switch. We've got to fix our studio. It's very funny. Okay. So yeah, that's how scaling works across two dimensions there. Yeah.

34:31 >> Yeah. So Maria asked: the demo made MotherDuck look very easy to use. Are there any drawbacks of MotherDuck when you >> Yeah, sure, sure. I mean, I think the big thing that I would say about MotherDuck is that the way that we've

34:51 implemented SQL and scaling requires a little bit of a different way of thinking compared to

35:00 a traditional database. One thing that we see a lot of customers run into is they like to put primary keys on, let's say, a

35:11 customer ID column or something. That doesn't actually really do much in an OLAP database, and it actually makes your inserts much slower, which people find out by saying, hey, why is the performance slow on this insert? It's like, oh, you put a primary key on that. It's not designed to work that way, right?

35:29 It's designed to do the data inserts fast and then deduplicate quickly, right, which is different from an OLTP database like Postgres or SQL Server or Oracle. So I wouldn't say that's necessarily a drawback, but it's a big difference versus other databases. And then the other thing that

35:51 is specifically different about MotherDuck is that it is not a federated engine in terms of

36:00 compute. And so if you write a really big query, if you write a query that looks at a petabyte of data, MotherDuck is not something that can do that without you doing your own kind of query federation on your side.

36:11 You'd have to run that across multiple nodes, for example. Whereas BigQuery and other engines will just say, "Yep, great.

36:18 We'll bill you for that." Right? It might cost you $10,000 for the query, but they'll gladly bill you for that. So it's a little bit of a different way of thinking about your data. But we think it lines up much more closely to actual use cases for users.

36:35 >> Cool. >> We talked a lot about Pandas. How do DuckDB and MotherDuck differ from Polars?

36:44 >> Yeah. Good question. Polars is great. It's also vectorized. It's also pretty fast. The main thing that I've seen in terms of difference versus Polars is that they, at least as of the last time I looked at it, couldn't do out-of-core joins.

37:12 So if you had something that was bigger than RAM, it couldn't complete. I think also it seems like Polars and the Polars Cloud offering are moving towards GPU enablement type stuff, right? And so that's just a different approach. MotherDuck and DuckDB are very much CPU-maxing tools.

37:32 So I suspect that, especially as you get more into, not traditional, but more cutting-edge ML and AI, Polars probably has better affordances there, whereas things that are just more well-understood data processing loads, I suspect, will work better on DuckDB and MotherDuck.

37:49 But they're both very fast. No shade to Polars. I will say one thing that I have noticed: I use a lot of AI because I work with a lot of different languages and libraries, and LLMs seem to be pretty bad at writing Polars. And I think

38:08 part of that is because the API is not Pandas-compatible, and the LLM just says, oh, this is a DataFrame, it's Pandas. So it's a little bit of a

38:20 challenge from that perspective, but again it's a great piece of software. I've seen some of the stuff that Richie's done. Big fan of Polars.

38:29 >> Yep. And I did post a link in the chat to a blog that we did. I don't know how long ago it was. A little while ago, one of our other DevRel guys made >> Oh, great. Thank you. >> DuckDB versus Pandas versus Polars. There's also a YouTube video.

38:42 >> Yeah. Yeah. >> There as well. So Joe's asking, "Do we have any integration with block storage?" >> I'm not sure what that means. If you mean blob storage, MotherDuck can read directly from Azure Blob and from S3 and GCS and anything that's

39:05 S3-compatible. >> Okay. >> One key note there: that also means it has a secret manager.

39:12 So basically DuckDB handles the authentication between those platforms.

39:21 >> Okay, we'll keep working through these. What would be a selling point against maybe BigQuery, besides pricing, for kind of smaller big data?

39:34 >> Yeah. I mean, I think the big thing is that it's really fast.

39:40 And then each user gets isolated compute. Those are two things that I think

39:50 are differences versus BigQuery. The other thing is that there are also a lot of surfaces that you can integrate it with, right? I just showed Python. There's a Java surface. There's a command line. You can run it fully locally, right? One thing that we see a lot of people do is local

40:08 development versus cloud prod. So they'll do a local dev flow, get everything working, and then ship it off into the cloud to run on their prod database. And you can't really do that with traditional cloud data warehouses today. They don't have a way to do local runs. And again,

40:24 that's all about increasing your iteration speed, being able to get to wrong faster, right? That's one of my favorite framings to think about from a data science perspective: we're going to make a lot of hypotheses, and most of them are going to be wrong, and so we

40:40 want to be able to eliminate them as fast as possible. And being able to do that with faster iteration locally, I think, is key.

40:46 >> Cool. Actually, continuing on the idea of local development, Jonathan says, "As a regular user of DuckDB for local medium-sized data processing and analytics, how should I think about leveraging MotherDuck?" >> Yeah, great question. So from this perspective, we think

41:10 there are a couple of ways. If you don't want to run your own infrastructure, right, it's great. If you're running your own VMs, that's great. MotherDuck has our own layer to do that. We've figured out all the kinks to make it work. That's

41:27 a lot of the value we provide: it's just pure serverless. You just get billed for what you use, and that's one of the abstractions. The other thing is data sharing, right? My guess is that if you're using DuckDB locally, you're probably using something like Parquet on object storage to share data

41:45 across users. MotherDuck has its own authentication method and notion of users, so you can share data in a slightly more turnkey way

41:57 than, for example, IAM permissions on an object storage bucket. But yeah, I think it's really about sharing and then scaling. The other thing is we can get you really big instances for fractions of an hour. And so I think that's very helpful for when

42:12 you really do need those, and it helps you avoid having to spin up Spark, is what I would say.

42:22 >> Joe's asking what other databases are similar to DuckDB when it comes to modeling data.

42:28 >> Yeah, I mean, I think broadly you can think about DuckDB as an OLAP database, right? That means online analytical processing. There are a lot of databases in the space, like BigQuery, Snowflake, Redshift, Databricks sort of. I mean, Databricks is not exactly a database. I guess you'd say

42:50 they're a data lake, but if you squint, it's all the same. So yeah, I would say a lot of the OLAP affordances generalize across those types of databases, including DuckDB.

43:04 >> Cool. Where did I go? Okay, so Nick's asking: is it okay to use MotherDuck as a sort of one-stop shop for a step into a cloud data stack? They're not using any cloud storage solutions.

43:18 >> Yeah, great question, Nick. I mean, I think we see a lot of people using it that way, kind of greenfield. Typically what we see in that case is people are using something like Postgres or MySQL, and they have an analytical use case and it's just too

43:32 slow. DuckDB can read those, so that means MotherDuck can read them and then replicate them easily and serve the data out. Gerald showed earlier the integrations that MotherDuck has. So yes, our goal is that you can use it very much in the line of a traditional data warehouse.

43:52 And it can just be that tool in your stack, with some added goodness: you can run it locally, too, which is very neat.

44:08 >> Joe's asking, "What are some use cases for MotherDuck outside of data science?" >> Yeah, great question. I think there are two core use cases that I think about. One is traditional data warehousing, right? You've got data in a bunch of different places

44:21 and you need to move

44:26 it somewhere to conform it and then run reporting on it, maybe connect to a BI tool. The other place we see it is what I call customer-facing analytics. For those of you in data science, maybe that's where you're building a Streamlit app or R Shiny. In that case, there's a lot of

44:44 really cool stuff you can do using MotherDuck, specifically if you're building an app that is serving lots of users. MotherDuck has a notion of read scaling, so that as more users log into it and use it, more small database instances get spun up to support that, so

45:03 that users are not stepping on each other. They all get isolated compute. We actually have a really great integration with, for example, Hex on that. That's data science, I suppose. But yeah, customer-facing analytics, where you have multiple users using the same dashboard potentially at the same time, and

45:21 you need something fast for interactive analysis. >> I think just one more right now. If you guys have any other questions, feel free to pop them in the chat. It says, can we use DuckDB for processing data sets of more than 30 million rows and about 100 columns?

45:39 >> Oh, sure. Yeah. I mean,

45:43 what I would say is the limiting factor that I see these days for local compute for DuckDB is how big your hard drive is. If you've

45:56 got a 1 TB hard drive, that probably means you can analyze about 500 gigs of data, because you have

46:06 up to 500 gigs of space that you can spill to, right? So that means you can pretty much do anything, right? Realistically, what I see is much, much higher. I've queried one-terabyte data sets locally. And it really just depends on, again, how much hard drive space you have. There's

46:27 a little bit of a RAM question, how much RAM you have, but the RAM really matters mostly for speed.

46:31 The disk is there to handle all of the out-of-core spill stuff. So yeah, 30 million rows and 100 columns should be no problem. I think it's pretty robust at that scale. I've handled billions, tens or hundreds of billions of rows locally. I wouldn't say no

46:50 problem. It did take a little while. It does require a little bit of thinking cleverly about your data sometimes, but that's all possible with single-node compute these days.

47:02 >> Awesome. I think that is all of the questions we have. >> Amazing.

47:06 >> Thank you. Thank you, Jacob. Thank you, everyone, for all the great questions and for tuning in and joining us. And everyone have a great weekend.

47:15 >> All right. Thanks, everybody. >> Thank you. Bye. >> Bye.

FAQS

How much faster is DuckDB than Pandas for data analysis?

In the benchmarks shown in this video, DuckDB was about 10x faster than Pandas for exploratory data analysis on 5 million rows, while using about 90% less memory. At larger scales (50 million rows via Parquet), the speed advantage grew to around 25x. DuckDB achieves this through columnar storage, vectorized execution, parallelism, and pre-computed statistics on the data. All of this happens transparently when you write standard SQL queries.
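The 50-million-row comparison in the video was built by reading the same Parquet file ten times with UNION ALL, and the speaker notes that the SQL could be generated from Python instead of typed out by hand. A minimal sketch of that idea follows; the function names, the `transactions.parquet` path, and the `region` and `amount` columns are assumptions for illustration.

```python
def build_repeated_scan_sql(parquet_path: str, copies: int) -> str:
    """Return SQL that scans the same Parquet file `copies` times via UNION ALL."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    scans = [
        f"SELECT * FROM read_parquet('{parquet_path}')" for _ in range(copies)
    ]
    return "\nUNION ALL\n".join(scans)


def build_aggregation_sql(parquet_path: str, copies: int) -> str:
    """Wrap the repeated scan in an example aggregation (hypothetical columns)."""
    repeated = build_repeated_scan_sql(parquet_path, copies)
    return (
        "SELECT region, SUM(amount) AS total_amount\n"
        f"FROM (\n{repeated}\n) AS repeated\n"
        "GROUP BY region"
    )


sql = build_aggregation_sql("transactions.parquet", copies=10)
print(sql)
```

The resulting string can be passed to `duckdb.sql(...)` once a real file exists at that path. Only interpolate paths you control, since the string is built with plain formatting.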

Can you use DuckDB with Pandas DataFrames in the same Python workflow?

Yes. DuckDB integrates with Pandas through Apache Arrow zero-copy data sharing. You can create a Pandas DataFrame, then query it directly with DuckDB SQL by referencing the Python variable name. DuckDB automatically sees it in the SQL catalog. Results can be returned as DataFrames using .df(). This lets you use SQL for heavy aggregations and data crunching, then pass results to Pandas or scikit-learn for visualization, feature engineering, or ML model training. See our DuckDB + Python quickstart for more details.

How do you switch from local DuckDB to MotherDuck cloud in Python?

Switching from local DuckDB to MotherDuck requires changing just one line: your connection string. Instead of duckdb.connect('local.db'), you use duckdb.connect('md:database_name') with your MotherDuck token stored as an environment variable. All your SQL queries stay exactly the same. This makes it easy to develop locally with fast iteration and then move to MotherDuck when you need larger instances (up to 1+ terabyte of RAM), data sharing, or persistent cloud storage.

Can DuckDB query CSV and Parquet files without loading them into memory?

Yes. DuckDB can stream data directly from CSV, Parquet, and JSON files on disk (or on S3) without loading everything into memory first. You use SELECT * FROM 'file.csv' or SELECT * FROM '*.parquet' with wildcard patterns to query multiple files at once. This is particularly useful for large datasets that exceed your available RAM, as DuckDB will stream the data and can spill intermediate results to disk when needed.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

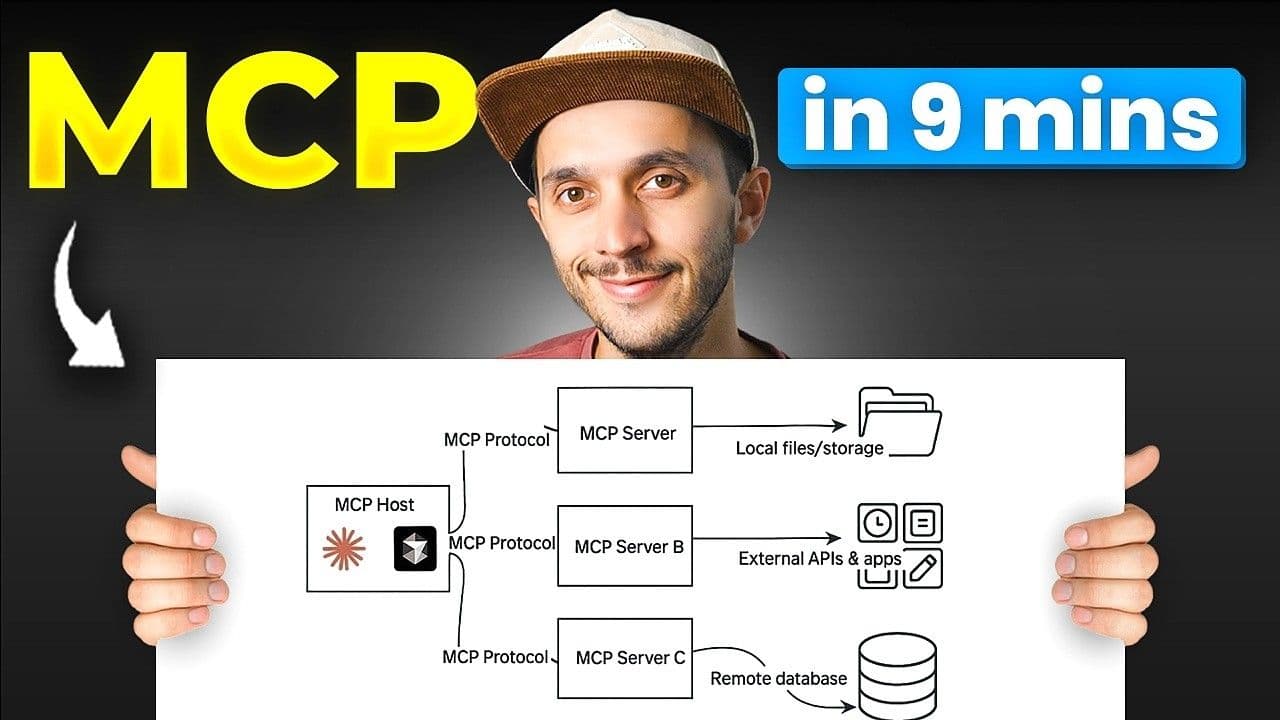

2026-02-13

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial