How to Efficiently Load Data into DuckLake with Estuary

2025/07/26Featuring: ,Introduction to DuckLake and Real-Time Data Integration

DuckLake represents a new open table format created by the developers of DuckDB. Unlike traditional data lake formats that store metadata in JSON or Avro files within blob storage, DuckLake takes a different approach by storing metadata in a relational database. This architectural decision significantly speeds up metadata transactions, making operations faster and more efficient.

Understanding MotherDuck's Cloud Data Warehouse

MotherDuck is a cloud data warehouse designed to make big data feel small. Built on top of DuckDB, it focuses on three key principles:

- Eliminating complexity from traditional distributed data warehouses

- Enabling fast insights while keeping developers in their workflow

- Leveraging DuckDB's performance for cost-efficient operations

The platform integrates seamlessly with existing data stack tools and supports standard SQL queries with enhanced features.

Estuary's Real-Time Data Integration Platform

Estuary provides a real-time data integration platform that supports both streaming and batch data movement. The platform features:

Architecture Components

- Capture connectors that extract data from source systems using change data capture (CDC)

- Collections stored in object storage (S3, GCS, or compatible systems)

- Materialization connectors that load data into destinations

Key Capabilities

- Support for hundreds of source and destination systems

- Native CDC for databases like PostgreSQL, MySQL, and Oracle

- Real-time extraction from SaaS applications like Salesforce and HubSpot

- No-code setup with managed service

Setting Up a Real-Time Pipeline to DuckLake

The process of loading data into DuckLake involves several straightforward steps:

Source Configuration

- Connect to your source database (PostgreSQL, MySQL, etc.)

- Enable change data capture to track real-time changes

- Configure schema evolution settings for automatic handling of schema changes

DuckLake Setup

- Create a DuckLake database in MotherDuck

- Configure access to your S3 bucket where data will be stored

- Set up appropriate access tokens for read/write permissions

Pipeline Configuration

- Choose sync frequency (from real-time to scheduled batches)

- Select specific fields to materialize

- Configure merge queries for maintaining latest state

Performance Optimization with MotherDuck

Instant SQL Feature

MotherDuck introduces Instant SQL, which provides query results at the speed of typing by:

- Pre-caching data for immediate feedback

- Validating SQL syntax in real-time

- Enabling rapid iteration on complex queries

Storage Trade-offs

When deciding between DuckLake and MotherDuck native storage:

DuckLake advantages:

- Open format with broader ecosystem compatibility

- Support for Spark and other compute engines

- Better suited for petabyte-scale workloads

MotherDuck storage advantages:

- 2-10x faster query performance

- Optimized for read/write throughput

- Better caching and regional performance

Scaling Considerations

MotherDuck now offers larger instance sizes (Mega and Giga) to support intensive data lake operations. These instances are comparable to Snowflake 3XL configurations and enable:

- Terabyte to petabyte-scale operations

- Complex aggregations and sorting

- Efficient medallion architecture implementations

Best Practices for Implementation

Data Architecture

- Keep raw data in DuckLake for openness and flexibility

- Move silver/gold layer data to MotherDuck storage for performance

- Use Estuary's intermediate storage for reliability and replay capabilities

Partitioning Strategy

While Estuary doesn't natively configure partitions, you can:

- Allow Estuary to create initial tables

- Use

ALTER TABLEcommands to add partitions - Subsequent writes will respect partition configuration

Error Handling and Reliability

The architecture prevents common streaming issues:

- Intermediate storage prevents message loss

- Automatic handling of destination unavailability

- Support for backfills without re-querying sources

Integration with Modern Data Stack

The combination of Estuary and MotherDuck integrates with existing tools:

- dbt support through DuckDB adapter

- AI-powered SQL writing assistance

- Automatic error detection and fixing

- Support for multiple materialization targets from single source

This architecture enables organizations to implement real-time data pipelines without the traditional complexity of streaming systems, making the difference between batch and streaming simply a configuration toggle rather than an architectural decision.

Transcript

0:00Hello everyone. Good morning, good afternoon, good evening wherever you are. Uh welcome to our webinar today on efficiently loading data into Duck Lake with Estuary. Uh we've got a great uh demo lined up for you today. I'm super excited to talk about Duck Lake. There's been a lot of excitement about Duck Lake ever since it was announced. Um a few

0:23housekeeping items. Uh for those of you who registered for this, we'll send out a link to the webinar to watch it again afterwards, as well as we'll upload it to our YouTube uh for you guys to watch afterwards. Uh we'll have time for a Q&A at the end after the demo. So feel free to uh pop any questions that you may

0:42have in the chat and we'll get to them at the end. Um and yeah, we'll get started. My name is Gerald. I'm on the marketing team here at Motherduck. I'm joined by Danny from Estuary and Jacob as well from Motherduck. Uh we'll have a quick kind of intro into uh you know, Mother Duck and Estuary and Duck Lake

1:01and then we'll hop into a demo and then again end with a little bit of Q&A. Um all right, here we go. Uh so if you haven't heard of Mother Duck yet, Motherduck is a cloud data warehouse.

1:12We're all about making big data feel small. Um we uh kind of do that by with three thing three ways. Uh one, we're eliminating complexity and overhead um from kind of traditional legacy data warehouses that are, you know, distributed, maybe overengineered. Uh and we really simplify all of that and make it uh faster and more efficient. Uh

1:34we're making it really uh fast and easy to get insights from your data. Um to also just stay in flow while you're writing code and hopefully not take you out of that flow when you're trying to, you know, write a SQL query um dive into the data, do some analysis. And then we're built on top of uh duct DB uh

1:51which is just makes it makes us very performant and costefficient. Uh and you know in terms of where we fit in the data stack you know we are that kind of cloud data warehouse that sits you know in our view in in the middle.

2:03Uh and we really integrate with all the tools that you hopefully you know and love and use already today. Uh and then a quick intro also then into you know what is Duck Lake? We'll dive in a little bit more into this. Uh but hopefully you've heard about Duck Lake.

2:18Uh but ducklake is a new opent format uh from the creators of ductb uh it is despite the name duck lake it is actually not uh a requirement that you use duct db uh it is a completely uh open uh separate standard uh but its main difference is that uh instead of having uh metadata in the blob storage

2:39on either JSON or avo files you we're taking that out and putting that into basically a relational database which speeds uh those transactions on metadata makes it faster and easier. Uh and then you can still use you know ductb spark or whatever else you want to to run the compute on top of that data. And with that let me now hand over to Danny

3:02for kind of an overview on estuary.

3:08>> Oh hang on. There we go. >> Awesome. Cool. Uh let me know if you can see the introducing SR info slide please JRO.

3:20>> Yep. >> And hear me? It's all okay. Okay. All good. Cool. So uh hi everyone. Uh nice to meet you all. My name is Denny. I I currently do data and marketing at Estury and I'll be talking a little bit about the company the product and then giving you a proper end to end demonstration on how you can actually

3:37set up a real-time pipeline that loads data from a posgress database using change data capture straight into duck lake and uh we won't be doing any coding this is completely uh managed no code service so um a little bit about estur itself is is a real-time data integration platform. Uh and I say real time because that's our primary use

4:01case, but it's totally not limited to uh real-time use cases. We uh actually can do batch daily data integration, data movement as well. We support hundreds of systems um both for sources and for destinations as well.

4:18uh we support most of your popular operational data warehouses. We change data capture like posgress, MySQL, Oracle and uh also in addition to those we can extract data from real time or in real time from systems like Salesforce, HubSpot or uh Netswuite as well. And on

4:39the destination side, we we integrate with uh pretty much all of the popular data warehouses like Snowflake, data bricks uh but also Kafka related or Kafka compatible tools as well using our

4:53Kafka uh compatible API. Um one of the

4:57databases or data warehouses that we integrate with uh is motherduck uh natively. Uh and that's what we are here to talk about. Uh so in the next slide

5:09uh just wanted to give a quick overview of how the architecture uh looks like of estster itself. So estster is not a pointto-point replication system. Uh it's made up of three main components namely capture connectors uh collections in the middle and materialization connectors uh at the end. So capture connectors are the components responsible for extracting the data from

5:35your data sources. This is where all the change data capture happens that reads the binary log of a postgress database and in real time and manages everything related to that. Uh these capture connectors don't uh push data into any

5:53destination yet. Uh first they actually save the results in an object storage. This object storage is uh can be managed by Sure itself but most of our customers actually bring their own uh bucket uh which can be S3 or GCS or or anything like that anything compatible and that's where this data this change log data is saved first from there uh you can either

6:17implement transformations uh on on all of this data uh the historical data that is saved and the incoming data at the same time or start materializing them into your destination.

6:28materialization can happen in two main ways. One is u u delta materialization

6:35and the other one is u actually executing the the merge queries. So if you just want to see the raw change results uh we can just push those into uh any data warehouse. But if you want us to actually execute the merge queries and roll them up into their latest state uh for every record then we can also do

6:52that for you. So with that said, I'll jump into uh a demo of the the platform and uh show you how easy it is to actually uh get started. So after you

7:06sign up uh for free, uh this is this is what you will be greeted at. This is how the dashboard looks like. As I mentioned, we have sources, collections, and destinations. Um in in the sources uh pane you can see a list of connectors that I currently have in this account.

7:23But I can just very quickly show you how the list of connectors looks like um when you want to create something new.

7:29So as I as I mentioned earlier we support uh hundreds of uh databases and SAS tools. As you can see here in the top of this list, um most of the popular operational databases are here. And uh in this little description under the name, you can see how real time uh the connection is. Uh there are some

7:49databases or data sources that don't support actual real-time data extraction, but uh we do our best still to to get as close to uh near real time as possible. So all the databases are there but if I search for ads you can see that we have connectors for all the ads or the popular ad stuff as well or

8:08or marketing data as I mentioned uh netswuite or salesforce are some of the more popular ones as well but we also do uh change data capture from snowflake uh for example. So if you want to do some kind of reverse ETL uh reverse ETL style workload or if you want to offload queries from Snowflake or BigQuery into

8:32something like Mo for example which is a fairly a common use case lately um then it's definitely uh a good tool for that as well.

8:42So for this demo I have a posgress

8:46database already running here uh in in Google cloud actually and uh this one is being uh constantly filled with uh data just so I can show you how real time the system is. So in this overview you can see some statistics about uh the amount of data being written uh into this database uh both uh in terms of data

9:09volume and in terms of uh documents being written every hour and uh I can

9:15very quickly show you how the configuration uh looks like. If I go into this edit page uh all the documentation that you need to configure a connector is is integrated into the application. in this right side panel.

9:28So if you get stuck, you can just uh consult it and then uh see what steps are required in the source system to set up the connection. So u as expected all you need is just uh uh the address of the database and the user that you want to use for connecting. Um we also support SSH tunnels for more secure

9:49environments but also uh if you have real strict networking requirements we are actually able to deploy the connectors itself the whole data plane into your uh private network. Um in

10:03addition to those all of the connectors support uh end to end schema evolution uh that you can configure here um based on your requirements. Uh by default all of these are turned on and we the platform actually does its best to follow up any schema changes that happen in the source like adding new columns, removing uh new removing columns,

10:24changing data types, all that stuff. Uh it can automatically uh handle and follow up within the destination database.

10:35Um yeah and if I jump back into this page

10:44uh as I mentioned the second step after uh after the connector the capture connector starts extracting data is that it writes everything into uh collections.

10:57So collections are basically JSON documents uh in in a data lake in a in a bucket in an S3 bucket that you control.

11:06So you have control over the retention period. You can you have control over the the granularity of the access uh and you can also use your own like cloud uh credits and discounts. So uh once or

11:20this this actual connector that I showed you that is extracting data from posgress is saving uh um data into or

11:29extracting data from one table called shipments and saving everything into this uh collection called shipments here. So I can show you a very quick preview here of how the data looks like.

11:40And uh as a reminder these are the row change records. So you will see this operation uh field here with a U uh

11:50value which means that it was an update and uh not as opposed to being a create or a delete. So this is what a record look like just so you have an idea of what the data uh looks like that we're going to deal with in this demo. It's a randomized realistic looking uh shipment data uh with locations and uh some time

12:10stamps. So uh as I mentioned this collection is being constantly filled up by the connector and once uh you decide if you want to implement a transformation here or go straight to the data warehouse uh then you would head over to this destinations uh panel here. Similarly to

12:31how you would create a new connector, you can create a new material materialization connector here.

12:37And uh similarly we have a huge list of uh services that we actually support as I mentioned iceberg snowflake um all the all the popular ones are here including motherduck where we can actually stream data in real time as well. So I already have a mother connector ready uh to be used for this demo.

13:00And if I jump into editing it very quickly just so I can show you how how it's structured. Um exactly the same idea as with the capture connectors. We also have a documentation page embedded here in the right with a nice video as well uh to show you how to configure it. Um but all we need is a service token for the

13:25mother duck uh instance that we're using the database uh the database that we want to load data in. As you can see this is not an ordinary mother database.

13:35This is a ducklake uh database but uh everything else is in terms of configuring the connector is exactly the same uh as if you were configuring a normal mother connector. So there's no there's no extra uh things needed to be done at all because it's a duck connector.

13:55The data uh gets saved in in a necessary bucket that we control or in this case I control as well that I will show you after uh I'm done with the the connector as well. So another cool thing that you can configure here uh is the sync schedule. Uh, as I mentioned, the platform is ready to do real time data

14:16integration, but it's not limited to it at all. Currently, it's set to 30 seconds just so I can demonstrate the real timeiness aspect of it, but you can set it to even lower or even higher. And uh, you can also configure um, more granular frequencies. For example, you can set it to be real time during working hours, but outside of working

14:37hours, you can just do an update every four hours or something like that. This is useful if you want to save uh costs on the data warehouse side for like compute.

14:49Um this is where you are able to select

14:54uh exactly which fields you want to be um want to materialize in your destination. Uh you don't have to load all of the fields.

15:02uh to to if you if you don't want to use them. So uh yeah that's the basic configuration of a connector and as I mentioned this is a duck lake connector.

15:13So if I jump into S3,

15:17I will be able to show you that uh we have this bucket here called mother duck lake demo and this one uh inside the public prefix

15:29and shipments prefix obviously is continuously receiving uh data. As you can see, the latest time stamp is uh 1 p.m. uh 16, which is uh by time too. So

15:45definitely uh receiving data in in real time, which is uh great because I was praying for the demo goods.

15:54Um, so the way you would set this up on the mother duck side is that first of all you have to create a ducklake database and Jacob will talk a little bit more about what that actually entails or how it looks like under the hood maybe. But from our perspective or what I had to do when I was setting up

16:13the integration is that I just created a new database and configured it to have access to this bucket that I just showed you in S3. So that's where all the data actually lives and this bucket is actually my bucket and not something that uh mother duck owns. Uh I also have

16:30to configure a access token uh for this bucket. So duck lake or motherduck itself is able to read data from that bucket and write data into that bucket.

16:42So if I if I run this query to select the the number of uh records that actually arrive in this uh shipments uh shipments table which is in uh where

16:57is this shipments dclick public shipments you can see that now it's around 45,793

17:06and if I run it again then hopefully

17:10it's uh more. Um, yeah. So, this is

17:15because the the delay or the sync frequent sync frequency is set to 30 minutes or 30 seconds, sorry. So, um, what you would expect is that Sury will actually write the latest data into the clake every 30 seconds. So, if I keep refreshing, you can see that the number increases. uh which means that our pipeline actually works and it is

17:40loading data into uh ducklake

17:44and I can show you what the the table looks like very quickly before I hand it over to Jacob. So you can see that these records are the same structure that I showed you in the collection in the S3 collection but obviously structured uh as as a mother duck table.

18:03And um yeah that's everything I wanted to say about S3 and how you can load data into ducklake. And with that I will hand it over to Jacob to talk a little bit more about ducklake itself.

18:23All right, I'm here. Perfect. Uh, hey everybody. Um, so I have my screen shared here and uh I have added this

18:34data that uh Danny was just showing me here in um in my database. Now how this

18:41works with motherduck is so Danny owns this database. Oops, just my mic. Um, and I actually can can attach that database with a share. So here you can see uh I have share attached that share um to my mother duck instance. So I can query it and then again I just hit control enter and I query the data. So um okay, slower

19:04than I expect. It's probably booting up my instance. Oops. Um while that's running, let me just show you quickly what this looks like on the side. So, um, you can see I have the same data, the shipments duck lake table, and I can see did I get logged out? Okay, let's refresh.

19:30Love a live demo. Okay, let's make sure we can query this. Come on, you can do it. Oh dear. Okay, there we go. Perfect. All right, logged in. We're good. Um, okay. So, let me go back down to here. So, you can see that you there's a little icon here like with a person on it. This means it's a share.

19:50So, this is the data that uh Danny shared with me. And again, I can see it um you know, just from a summary perspective. This this all is um shown here. And like like Danny showed you, uh we can also preview it etc. and take a look at this information. So, that's really cool. So we have this data coming

20:09in and let's do some queries on it in in motherduck just to kind of see what's you know what what information can we learn about it. So I am going to um

20:21first thing I'm going to do is I can actually write my select after my from.

20:26Um why this is important is because duck DB has very nice SQL that makes a little bit more sense from a writing perspective. And so, um, I can just say city like this, right? And run it and I'll get my city back. Um,

20:41and, uh, that's that's pretty cool. Um, and we can, you know, do Okay, how about account star? Um, oh, that didn't work.

20:49I need to add a group by. Okay, group by all. Group by all. Okay. And so now I can see uh what this is looking like uh when I when I have this and I can add let's do an order by order by count star descending. So let's see what cities are most occurring most in the data set. Um

21:11and you'll notice as I'm querying this my queries are getting faster. Um, so what's happening from a from a back-end perspective is when I start this up and I just run this query, that's a cold query, right? And so it's hydrating the cache with this first query. Then subsequent queries just simply reuse the cache, right? So we already have all the

21:29data loaded into our caching layer in Motherduck. And so now subsequent queries are fast. But there is, you know, uh, when you're using something like Ducklake, a little bit of a cold start um, uh, when you're querying it the first time. But you'll notice, you know, subsequent queries once that data sets in there is very fast. But can we

21:45make it faster, right? Like can we make that experience better? Um let's see. So

21:52what I want to show you next is something that's called instant SQL. And so this is something we have been working on uh and it's available now in both the um mother duck uh UI as well as

22:04the duck dbli. So I am going to just type in my from. So from public do uh

22:12shipments I believe and then you'll see that it's up here it says caching. So it's caching that data. So now that it has this data kind of loaded here in my cache I can actually get results back at the speed of typing. So I can just say select city and I get the results back instantly. So imagine this sitting on a

22:33on a you know terabyte terabytes of of a table right? Right. Well, now I can kind of get my SQL query all working right on a on this in instance SQL and then run it against the final table in a really fast way. Right? So I can say let's do city, let's do uh count star, you know,

22:50okay, now it's telling me immediately I'm missing my group by. Let's give this a name as occurrences um group by all. And immediately again I get the results back which is really cool. Now again this is just operating on a preview. when I actually run the query, I will get something back slightly different. Um, order by occurrences descending. Of course, it

23:10lets you reference your alias here. I don't have to simply use my countdown here. Um, let's see. So, one thing we can do that's really cool is we could do like um what's the let's let me look at what date is in here. I think there's like a delivery date or something.

23:27Uh, we can use created at sure. So, we'll do like um

23:36let's do created at and then let's

23:41change that type, right? Uh as

23:45great date. Sure. Um and now we can kind of see what this looks like again. And again, as we work on this, if we have issues, we see them immediately as we type on this. We see all this working.

23:56Um but yeah uh so that's all uh in there as well. Um uh one thing we can do on these um is if we put in maybe like some calculations um let's just do I think can I do minus one? Let's see. Perfect. So you can see as I like just do the calculation it's kind of like Excel, right? I can just

24:20like subtract days off of it. And like for date functions, this is great because uh I often forget between my

24:28different SQL syntaxes how to how to manipulate them, right? And so uh I can just validate quickly. Okay, this is one two, right? I this this won't work, but this will work, right? Uh I don't need to do like date add a date add function or whatever. I can just you know do this I think actually I think I can also do

24:46like interval like one week or something. Sure. Right. So I can also, you know, just just as I as I type in here, I get real-time feedback in my data. So this is really cool. Um, uh, so I'm gonna take that out and we'll just leave that

25:03one there. So that's instance SQL and we want to actually run the whole thing. We just hit command enter and it runs the whole query. And now we have uh have our data in here. Okay, so that's one part of it. Um now uh another thing we can do is because it's just a database in motherduck we can actually take the data

25:22that is in our data lake and move it into a motherduck table. And why would we want to do this? Well the reality is that uh motherduck table is going to be faster to query um than than your data lake. And that's just because we're not we're making different trade-offs in our own storage format versus parquet which

25:41is an open storage format. Right? So, Parquet is making trade-offs for openness and interoperability. We're making trade-offs in our format that are for speed and, you know, read and write throughput. And so, those are just different trade-offs. And so, it's faster to use Mother Duck. Um, right now it's about two times faster to query from Mother Duck um than from from Duck

25:59Lake at kind of at worst. And in general it's probably about 5 to 10x uh difference in speed in terms of uh reading when you are reading from parquet versus reading from our local storage. Obviously that that number it can be kind of all over the place in terms of the long tail. Um if you are in a different region than uh

26:20US East1 uh you're not going to see the same uh you're not going to see the same uh query performance that you'll see that I just showed. You'll see something a little bit slower. Um, so let's bring that data into mother duck storage. So I can just do the same thing from we'll do I'll actually do it this way. We'll do

26:37it properly. Uh, create table um, shipments copy uh, as and I can just do from public dot I got to put this in the right. Let's do my DB.

26:54That's my motherduck database. So mother duck supports three-part names. If I don't if I don't pass a schema name, it'll just use main by default, but I'm going to leave that out. I'll do shipments. And then uh it will import the data. So, let's hit command enter.

27:09Cool. So, you can see it wrote all four uh 48 um 48,000 rows uh across into

27:16mother duck in about two seconds. And now I can do, you know, normal normal calculations. Let me just turn this off.

27:24Um I can do like from my DB shipments copy right and now I'm now I'm

27:33querying my DB instead of querying um uh

27:38querying ducklake right so instead of hitting S3 this is hitting our native native format um so that's really cool and again where this really lets you do it is like if you think about like you know your medallion type architecture maybe you want to keep your raw data in your data lake and you want to move maybe silver

27:53or into mother ducks so it's faster to query and you can spin up multiple instances against it and things like that. Um, speaking of which, in order to support kind of these data lake operations, we've, um, recognized that we need bigger instances. And so, uh, last week we announced larger instances in mother duck. And so, we initially had

28:13pulse, standard, and jumbo, and we've added mega and giga. Um, just to give you a sense of scale here, uh, we kind of, uh, think about or a giga is roughly the same amount of hardware as a snowflake 3XL. And so where this is really helpful is if you're working on maybe let's say terabyte uh or maybe

28:30even pabyte operations on top of your data lake maybe you need to sort it maybe you need to do a big aggregations um those megas and gigas are very very very helpful for those types of workloads um especially if you have a data lake that you need to get data into your silver or your gold layer. Um okay

28:50so that's copying data into motherduck storage. I've got one more thing for you in mother duck, which is of course we can use AI because everyone has to have an AI demo. I will say um I love the way that we're thinking about this. We're letting you kind of use it to write SQL in natural language. We also have

29:06something called fix it which is very helpful. Let me show you what that looks like. So I'll just do select star from um public shipments. And I'm just going to take the s off, right? I know I misspelled it, but I'm going to run it like this. And it's going to tell me, hey, I have an error. And then it will

29:21suggest the fix. So I can just hit accept and run. And now I query that table again. Right. So that is really cool. Um come on. You can do it. Great.

29:35Um where is this bucket, Danny? Is this in east one? >> I think so. Yeah.

29:41>> Or maybe it's an east. >> All right. All right. Anyways. Uh it's all good. Thank you. Um so yeah, you can see uh I'm curing his bucket. Oh, I didn't mention this earlier. Um, when I did attach the share, right? Uh, I can't see his database if I don't have a secret. So, as part of our, you know,

29:58setup for this demo, Danny, uh, helpfully provisioned a secret for me so that, uh, I can access just this bucket.

30:06Um, so that is in here. Um,

30:11all right. So, uh, let's now use AI to write a query. Um, let's just see here.

30:16What do we got? Delivery name. Um,

30:21let's see if there's something like um what day did I have the most orders? So, I'm just going to clear clear this out and I'm just going to say uh oops ah

30:32here. Let's hit control K and I'm just going to say what day had the most orders in public doshments. I'm going to do a full calendar. I think it has this in in context, but I'm not giving it uh if I gave it like the query select star, it would it would tell me. But anyways, all right, let's see. Created at date order

30:53by from this table ordering. Oh, sweet. Look at this. This is actually very specific to what we wanted. Okay, so we can see the 29th of April had the most orders. Let's just like expand this out a little bit to see if our AI is smart enough. Yeah. Okay, good. They did give us the right answer. Um, so you can

31:11quickly use this. Um uh do we have products in this table? Let me see. I can't remember. Uh priority. Okay, let's look at priority. Um I can just say uh

31:26add priority or something and alias it. Alias it with a nice name. Sometimes it

31:34makes jokes. Um let's see. Like it'll say like nice priority of Okay. Well, there you go. Um anyway, so there we go.

31:42And now we can run it. No. Okay.

31:47Oh yeah, makes sense. Got has to fix the group eye. Right. So when I when I highlighted that change and did command K, it only made a change to this line, right? But in SQL, you need to often make multiple changes, especially in your grouping. So there's two ways to do this, right? You can use group by all or

32:01it actually just detected here in the the AI fixer. Hey, we need to we need to fix this. So there we go. Um so slightly different result actually if we look at priority status. So, um, there were, uh, more orders with with non-priority status here on, uh, May 1st we're on April 29th, which is other top number.

32:21Okay, I've been talking a lot here. Um,

32:25uh, thanks so much for letting me show, uh, the demo here. I think we can hop into questions now.

32:34>> Sounds good. Let me just get this view clean. There you go. Thank you. Uh, thanks Danny. Thanks, Jacob, for the demo time. Now, let's hop over to Q&A.

32:43And if you guys have any other questions, feel free to pop it in the chat and we'll get through them. Um,

32:51let's see here. Have one from John about uh uh about estroy. Are there any cost implications between the real time and batch connectors on Suary?

33:02>> So cost implications in terms of cost of est platform itself. No, because the pricing is only based on the volume of data that you move through. Um, it's mostly a cost implication on the destination system. So, if you load data into Snowflake for example in real time, then you need to have a data warehouse uh running uh 247. But if you load it uh

33:30every few hours, then you can obviously pause that warehouse. Uh, as for extracting the data, you can do that in real time. That's what we usually recommend to to extract the data as as real time as possible and but we save save those collections in the middle so you can batch the the loading on the other side.

33:49>> Thank you. Uh another question about SQA uh for the SQL server connector. Does it do CDC?

33:58>> Yes, it does CDC and we also have a batch version of it for those SQL servers that don't have CDC enabled. But yeah, the default one does support CDC.

34:08>> Cool. >> Uh, keep going on the story questions. Uh, you know, migrating from MySQL. Are there any special configs needed for Oracle hosted MySQL or just this self-hosted instructions are fine?

34:23>> So, as as far as I know, the Oracle hosted MySQL is is just a simple MySQL hosted in Oracle's cloud, right? And in that case, there shouldn't be any issues using the the basic MySQL connector.

34:37>> Got it. Cool. Uh, okay. And now

34:42let's go with maybe a more kind of generic high level question about Mother Duck and Duck Lake. You know, like I guess you know, what are the pros and cons for using uh Ducklake in S3 versus having Motherduck hosted Ducklake?

34:56>> Yeah, such a good question. Um so uh I I think the question is actually mother duck storage format versus duck lake um here and and really the trade-offs are uh uh speed versus openness. So, uh, Ducklake obviously is is more open, um, and it can be hosted in your own S3 bucket or any S3 compatible storage by the way, which

35:21includes GCS, um, and min.io and many, many others. Um, uh, versus, you know, uh, speed in the mother duck, uh, format. So, um,

35:34you know, I think really that's where the trade-off comes down to. Um from a cost perspective, we're still trying to figure out, you know, what the cost should be for uh handling in particular our own storage uh or like or like motherduck managed uh S3 in that case.

35:51But um we we you know I think really

35:55it's really about you know speed versus openness. Cool. Um

36:03I'm trying to remember what this was in reference to, but can this connect to BigQuery and create a parket files on GCE VMs?

36:10>> That was during that was during uh the estuary demo. So I think that is a question for us.

36:14>> I think so. >> Yeah. >> Yeah. Yeah. I think so. So it can connect to BigQuery both as a source and the destination. So we are able to extract data from BigQuery and load data into BigQuery as well. uh it cannot create parket files on GC VMs. Uh we can

36:32create parket files in any kind of cloud storage though but yeah not VMs. >> Uh we'll keep going with a few more SQ questions. Uh how would you compare SQC with the VZM?

36:47>> I think the biggest difference is that we don't use the VZM or any kind of Kafka under the hood at all. uh everything every one of our connectors uh are written by our team uh the CDC ones and uh the biggest difference I would say is that uh the team who builds them learned a lot about uh DBZ's

37:10mistakes and uh they they try to try to keep those in mind than when they were developing most of the connectors. Um the code is uh all available on GitHub if you if you want to take a look yourself and see how it compares to to DBZM. But I would say in terms of functionality we can do everything that

37:31DBZM does. Um most of the times we handle edge cases uh even better uh like with toast fields for Postgress for example. uh we handle schema evolution uh better uh as far as I know although I I haven't been up to date with how DBZM does it in the past few humans but uh I I think we do that better and honestly

37:56it it's uh different on a connector by connector basis I would say so if you have a specific connector in mind then uh we can we can do a deeper dive into the differences >> um estro has three steps you know capture, transform, materialize, uh, you know, can it maybe via dbt? Are you breaking with ELT back to ETL logic?

38:23>> So, uh, kind of. Yeah, I mean, ELT and ETL are are never been really that separate things, but yeah, we can we can do both. So, uh, we can extract data and, uh, just put it in your in your data warehouse where you can do whatever you want with it. uh uh but we can also do transformations uh in between and

38:43these transformations are not just uh stateless inflight transformations because we have that storage layer. So you can actually do uh time window aggregates and all kinds of uh funky stuff. Um you can do it in SQL and uh TypeScript currently you can't use dbt though for now at least but that's something that we are toying around with

39:05uh the idea at least. >> Cool. Uh, now over to Jacob. We had a couple questions kind of along this vein, but like when could you should you consider using Duck Lake versus just regular Mother Duck?

39:17>> Yeah, good question. I mean, I I I

39:21from my perspective, you know, again, it's about openness. Um, you know, Ducklake is an open format. Uh, mother duck is uh funding uh development of a

39:31spark connector for it, for example. duck db is just the reference implementation and we have implemented that inside of mother duck right um so

39:41uh early innings there but um you know I think uh the first part is openness you know and speed and I will say that um when you do need kind of those really big distributed workloads we're talking like pabyte scale um we expect ducklake to to fit in there right um mother duck indeed um is kind of uh all based on

40:02ductb Right. And so from a scaling perspective, um there's just different considerations um and and trade-offs uh as it relates to, you know, how much data you can read at once. And so uh you know, I think that that perspective will be much more clear once we have more uh connectors uh tied into Duck Lake, right? More things can write into it um

40:24uh and read it and read from it. uh once that occurs um I think there'll be a very clear different you know positioning in terms of okay you know you still need your spark jobs great run those with duck lake and then use mother duct for the most part to read it

40:40>> great thank you um oh this was a question that came up during jacob your demo love mother duck and we're using it for their startup uh why is there no intellisense on table names >> we're working on it it's actually really hard. Uh this is the short answer. Um but we're definitely working on it uh as

41:00part of um what we're thinking about here. Uh so that you can do for example, you know, give a table an alias and then do a dot what you know um a dot whatever and it'll list the tables. We're definitely thinking about um uh how how to how to improve that is up

41:20on the list for our friends um at uh

41:30Okay, let me see. I lost track of where I was here. Uh oh. Does does the S4A to mother duck hosted ducklake sync automatically handle partitions or is there an option to set uh somewhere to set that?

41:43>> Yeah, actually Danny and I talked about this uh the other day. Um there so so I guess I'll I'll first go to Danny. Is there an estuary native way to do partitions today with Ducklake?

41:55>> So currently no. the you can't configure partitions in the connector itself and I think the workar around would be to pre-create the tables uh with partitions configured in in duckling and then use those as destination when you when you set up your esttory connector.

42:12>> Yes, exactly right. So uh if you define so you can create the table on the uh well actually estry will create the table for you and then uh on the mother side you'd have to write an alter table to add a partition and then any subsequent writes would use the partition.

42:29>> Yeah, exactly. >> Great. Um let's see here. Oh uh DBT uh seems to be pushing everyone from core to dbt fusion. Uh are we going to have uh DPT fusion connector?

42:46>> Great question. Um we are working on it. I have a call with the with the core team over there like or I guess the fusion team. No longer the core team, the fusion team uh next week actually.

42:57So we are we are actively pursuing that. Um there's a few ways that we can think about this. Um uh you know one of them is is native.

43:08There's also potentially a a play here where we could do something with Postgress and um that would just be

43:16fully supported on on both sides as well. That would be very that could be very interesting because it would also let us bridge for people who are historically on DBT core. Um so so we'll

43:28see kind of where that where that goes forward. Um we are definitely it DBT is an important integration for us. We spend a lot of time and resources supporting the dbt ductb adapter and we are actively pursuing uh fusion.

43:44>> Cool. Thank you. Um Danny, what's the

43:48advantage of STO versus native CDC?

43:54>> Um well that's a that's a good question and I see that there's a second part to it. So for instance, GCP has native streaming with data stream. I think that's what it's called. Um from postgress to bitquery. I think the biggest advantage with Sury is that you get access to way more things. Uh most of the times it's also cheaper than than

44:16alternatives. Um because of the fact that uh we we don't use disks at all.

44:22It's all object storage based and we can keep the cost low. Uh but I think the biggest advantage is that you get access to hundreds of connectors and not just Postgress to BigQuery. So in case you want to switch or you want to add more sources uh then you don't have to integrate another tool. If you want to

44:37integrate SAS sources or other databases or or migrate from data warehousers or anything like that, there's no block in like if you were to use a specialized dedicated surface service for uh posgress to bequery. specifically for GCP's data stream from what I heard uh development there is like painfully slow and I I think that's one of the uh

45:00things that uh people who actually switch from data stream to to Sury complain about that there's like some issues that come up and it takes a lot of time because obviously Google moves a bit slower and uh yeah it's just a bit longer to fix those.

45:16>> Cool. Thank you. Um I think that made it

45:20through all of the existing questions.

45:24Uh again yes uh we will uh this recording will be available on YouTube later and if you signed up for the webinar we'll send out a link for you to access it. Otherwise you can just go to motherduck's YouTube channel. Um, nope.

45:38Another one came in from Stephen. Says, uh, S3's batch syncs also require the intermediate S3 blob storage step.

45:47>> So, we can provide that storage for you and you don't necessarily have to set up your own. Uh, but it's recommended. So it's it's one of the ways that if you have that storage in the middle and uh you have your pipeline running for a few months and for some reason you want to do a back fill in your destination for

46:06example then you can use this storage to back fill immediately in your destination and you don't have to start querying everything again from your source database. So it has a lot of advantages and it just doesn't make uh it doesn't just make the make the architecture uh simpler. uh you can also use this intermediate S3 storage to

46:26materialize data into multiple destinations at the same time. So if you want to take data from your database into mother duck, Kafka, Snowflake, data bricks, whatever, then you can do it with just a one to many pipeline and you can extract the data once and materialize uh into multiple places. So I would always recommend setting it up.

46:46Uh but we we can provide you uh a storage uh bucket by default. >> I I would also just add on to that like uh in my experience of of scaling these um the dur uh often your

47:04target uh or sorry yeah your target if there's any unavailability issues and you're not persisting that intermediately sometimes you can miss messages. And so what you really want to make sure you're avoiding is a scenario where you get out of sync and you don't know, right? And so I think um estuaries architecture in the way that it's built

47:23this way um helps avoid those types of scenarios. um you know obviously like streaming technology has improved a lot over the last you know 10 years but as someone who I I have some scars from alternative

47:38uh implementations using things like I don't know rabbit MQ like 10 years ago and uh really hating my life and having to do like you know full reyncs and missing messages and things that just happen in those scenarios. So I think there's there's also the architecture has evolved from uh a lot of the pain of you know the the last few years but yes

48:00uh it's very interesting uh that implementation choice. >> Cool. Uh and that wraps up all the questions. Uh, as we close up, maybe uh, Jacob, Denny, I let you guys leave everyone with maybe one last, I don't know, word of advice or suggestions when it comes to, you know, Estoria, Mother Duck, Duck Lake.

48:22>> Um, yeah, I think what Jacob just touched on now that, uh, streaming is thought of as this hard and complicated thing and, uh, I think we we are trying to prove that. One of our mission is to to prove that that it's not the case anymore. Uh the difference between streaming and batch is is really just a

48:41toggle. Now uh if you want your data in real time then you set it to uh one end and if you want your data uh every few hours then you just uh set it to the other end and and that's it. Uh from the users perspective there's there's really shouldn't be any difference in terms of complexity and esture is is a platform

48:59that that enables that uh for you today. So yeah, you can go give it a try and uh yeah, thank you so much. Yeah. Uh obviously we're super excited about what Estster has built um in terms of in terms of integrating with us and as and uh you know the main thing that uh I that I am uh thinking about in context

49:20of this is you know to us ducklake is just another database you interact with it the same way. And so uh you know it's a very very easy way to get into the uh

49:32get into get into using a data lake. Sorry, I'm laughing about the Apache Beam comment. Um, I'll leave it there.

49:40Um, but yeah, uh, very very excited uh, just to see how how folks use and uh, and abuse uh, Duck Lake in the future.

49:50Looks like Gerald has uh, dropped us, so I will wrap up. Um, thank thanks everybody uh, so much. We will um uh we

49:59will share this as Gerald said and thank you for your time and we're we're super excited to share what we've been working on with you all. All right, bye.

FAQS

How do you load data into DuckLake using Estuary in real time?

Estuary captures data from sources like Postgres using change data capture and saves it into intermediate S3 collections. From there, a materialization connector streams the data into a DuckLake database hosted on MotherDuck. The sync frequency can be as low as 30 seconds, and configuring the DuckLake connector requires only a MotherDuck service token and the database name. No extra steps are needed compared to a regular MotherDuck connector.

What is DuckLake and how does it differ from regular MotherDuck storage?

DuckLake is a new open table format from the creators of DuckDB that stores metadata in a relational database rather than as files in blob storage, making metadata operations faster. The main trade-off versus MotherDuck's native storage is openness versus speed: DuckLake data lives in your own S3 bucket in Parquet format and can be queried by DuckDB, Spark, or other engines, while MotherDuck's native format is roughly 2-10x faster for reads but is proprietary.

What is MotherDuck's Instant SQL feature and how does it work?

Instant SQL is a feature in MotherDuck's UI and the DuckDB CLI that returns query results at the speed of typing. It works by caching a data preview locally, then rewriting and executing queries against the cache on every keystroke. This lets you build SQL queries iteratively and see results right away without hitting the run button, making it much faster to explore and debug queries on large datasets.

How does Estuary's architecture differ from point-to-point replication tools like Debezium?

Unlike Debezium, which requires Kafka infrastructure, Estuary uses a three-component architecture: capture connectors extract data from sources, collections store the change data in S3 object storage that you control, and materialization connectors load data into destinations. This intermediate storage layer enables backfills without re-querying sources, allows materializing into multiple destinations at the same time, and means you can toggle between real-time and batch loading without changing your pipeline.

Can you share DuckLake databases between MotherDuck users?

Yes. DuckLake databases can be shared between MotherDuck users using the share and attach mechanism. The database owner creates a share, and other users attach it to their MotherDuck instance. To access the underlying S3 data, the receiving user needs a secret with credentials for the bucket. The share is read-only by default, so the receiving user can query the data but cannot modify the original database.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

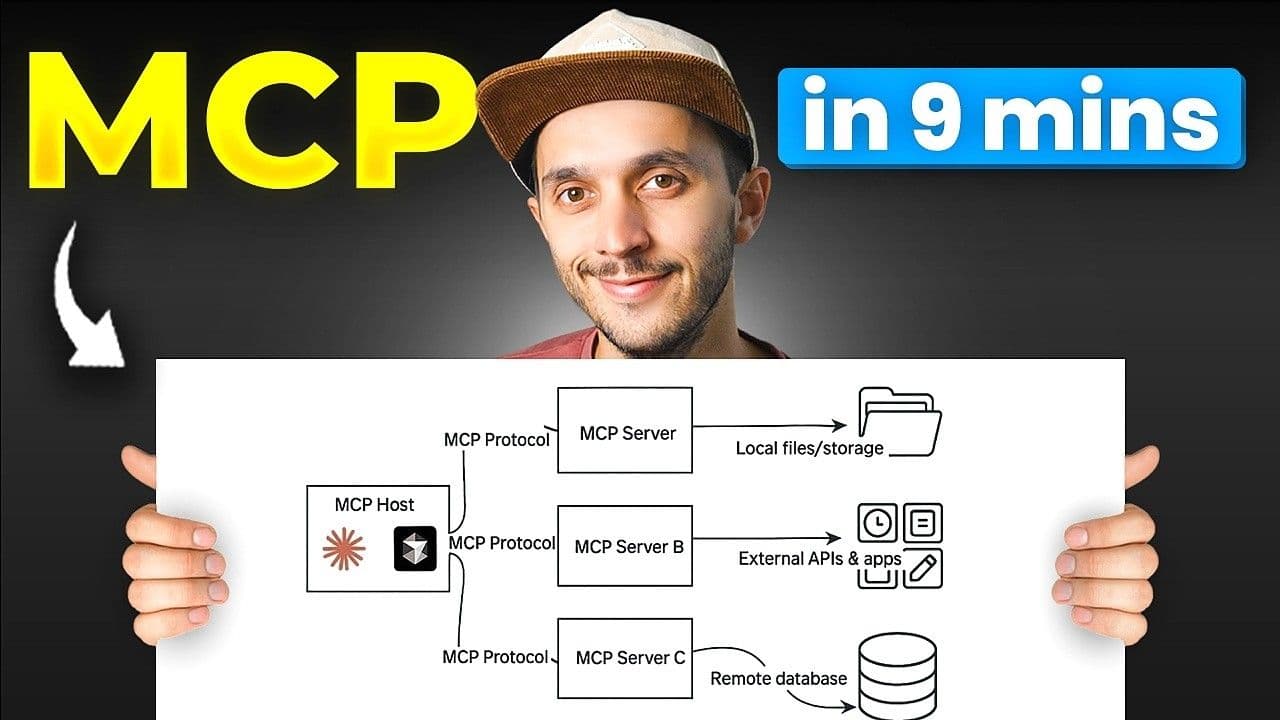

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial