Speed-Querying StackOverflow data with DuckDB ft. Michael Hunger

2023/11/27Featuring:Explore StackOverflow's vast data with Michael Hunger from Neo4j using DuckDB and MotherDuck. A highlight from the DuckDB Meetup in Berlin Nov 2023. Michael is also the co-author of the book 'DuckDB in action,’ published by Manning.

Transcript

0:05you know we all have been using SE overflow all our lives speciically right uh to help us with coding questions until a co-pilot came along um so I want to talk a little bit about what would it take to actually take all of St overflow data and analyze it into in inside of Dr B I'm Michael hunger I'm with NE forj

0:24I'm leading product Innovation and developer product strategy at NE forj but uh as part of product Innovation can play around with a lot of Technologies including drb uh thank you Hest and uh it's a really great Tool uh to quickly get uh to uh quickly extract information out of data on your local machine and even if your data is as big as Tech

0:46overflow you can still get answers in a few seconds or milliseconds so let's let's see how to do that um so we already did the show of hands as such but we didn't ask who has actually used mother duck before one two three so I think I hope after the talk there will be some more folks that have used uh mck so um as uh M said

1:08we are writing dub in action it's almost done we have two chapters to go uh and publication will be around one Z release of dub so next spring and uh there's an

1:21also discount code for the book and if you want to review the book you can find me later and I have also 100% discounts for the full book if you're willing to write us an honest I don't want to get good preview I want to have an honest review so everyone wants to come see me later thank you to H and and mark for uh

1:39offering to write the forward really great and uh so actually publish is en and mck has a free ebook available so you see the cards just grab one of these cards and you can get your free ebook copy from mother duck so enough promo uh so we all know copy and pasting from s Flor is what we do right and if you want

1:59to there's even an a keyboard from from CID Williams uh that you can use for command C command V or control c v really easy that's our life and you know s flow looks like this right but who has actually ever thought about what's the infrastructure running behind the scenes right so there needs to be some database

2:18or something right SQL Server exactly there's a sequel server actually it's years almost yeah it's it's like three instances or so of SQL Server it's really tiny uh but it's also not a lot of data as you will see right so okay that's what we usually see that's even a dctb question and we could imagine it looks

2:38like this right so like ANS running behind the scenes so that's uh uh dolly for you um so but what stof also has is a rest API so you can basically just run this uh API request over here and then you get basically drb questions um up to 100 to per page and you can basically get to Json and then consume this but of

3:00course for all of Stu overflow and rest Epi will not cut it right so we need to have something else okay fortunately uh stfl is a very open uh company and so for ever actually

3:14they have been publishing on the internet archive their whole data dump of the whole stock exchange site right which is really great it's a creative commment license it's updated quarterly nowadays it used to be monthly and it's huge and it's slow right because internet archive needs money so if you have money to donate then please also donate to the internet archives and it

3:34took me multiple days actually to download the data so don't do this at home we have something better for you and it's a really weird XML export format from SQL Server so uh if I had one wish if anyone wants to build IND dctb extensions for read XML like read XML I I would be really grateful uh for

3:54that um so you can find it there and we want to look at this St St overflow data also all the other St exchanges as well there right everything from I don't know movies to medicine to bio viruses you know whatever you can build uh on on stle Flor but you want to look at these like these are the largest data senses

4:12well you see already the uh the post one is Post history is 33 gabes post that's 20 gabt and and so on so it's some Nicea it's compressed okay let's Analyze This induct me so you don't need to download it from the internet archive anymore so I've done this for you they also compress it with seven zip which you

4:31have to install uh on your computer it's really weird uh you have to extract it to CS3 out of this weird XML format uh and then so there I don't so I've written a tool long time ago which I've used but there's also XML extraction tools and JQ that you can use for uh we really need an G XML function in uh

4:51activated for bgrade and then query and input is C3 and then uh do some Eda and then we export this stuff to pet let's see how this goes okay now the live demo bit uh so we have dctb 092 here and a bunch of

5:09um files I have also um oh right so we

5:15have also data model right so we have basically users post text and posts can be answers and questions and there's also votes and comments and badges and lots of other stuff but we will focus on the the users and the posts uh today as such and a little bit on the text as well um so if you want to code along uh I put

5:34it all into giup gist so all the SQ queries in giup gist so you can basically go ahead and and use them as such otherwise I will just steal and copy and paste from the uh giup gist so that you don't can don't have to watch me typing uh statements uh if you want to read more about this we've also done

5:51a bunch of blog post about this on on mdck as well and so let's get going so

5:57we have our duct TB uh running not

6:01yet uh test. DB can you everyone read it from from the back okay so smallest file is the text file so how big is the text file is 65,000 text in SE flow right so like everything from the most popular Tech which is what any guesses Java

6:22JavaScript JavaScript okay let's see because of course we can uh get this information uh you can also use this cribe to see what's actually in the in the CSV so because this is a CSV without headers you don't have actually colum names but it uh shows this here but we can basically uh read this data really quickly from the CSV and

6:43just see that actually it's uh JavaScript 24 million 24 million questions 21 million questions for Python and then it's 18 million questions for uh Java right oh no 1.8

6:58million sorry um so okay that's the text right and then if you want to import this then we would basically just uh you use read CSV and load in the uh the file and we can provide column names and Order detect and actually because it's a SQL Ser export it's actually uh show tables tables so we have now the

7:25text table and if you do select console from yes yeah I'll fix it so 65,000 um

7:37texts in our table cool and then of course you can do the same with the other tables like users and post so this is the users table which takes a little bit longer uh to load let's see how much data is in there so it it takes a few seconds we can actually do timer on so we can see how

7:57long the stuff takes select con star from users and so it's 19 million users on Flor so this dump is from May so there might be a few more now but uh but it's actually quite nice to have all of the Overflow on my computer right so running I can don't have to go to the website anymore I can just ask questions

8:19directly so we could can do the same with the post table which takes a little bit longer um but while you're doing this now you can all do all kinds of Analytics right so because now you have all of St overflow on your computer imported in dctb and then now you B can kick yourself out and do whatever kind

8:38of analytics you want to do which I find really really nice because now I don't have to spin up you know an red shift cluster and software cluster or something like that to do this and so I can just run this uh directly on my own machine so I think this takes about 30 seconds to load the uh CSV actually I

8:56wanted to show you how long it takes to load the CSV uh while you're waiting for this I can actually show you the defile sizes so the um um that's the wrong file size I want to show you this one so it's uh 3 gabes compressed CSV 5 GB commands 600 million

9:16600 megabytes users compressed right uh uncompressed you can actually check how big this is uncompressed let's see so like the users uh

9:35so it's 1.9 gigabytes uncompressed for the for the users CSV and meanwhile we have imported it it took 46 seconds to import the the posts and there are

9:52um 58 million posts in uh questions and answers right it's all in the same table because it's BAS basically post typee post type ID one is a question post type ID 2 is is an answer beautiful relational database design uh of course right okay now we got our data in in the database now we can basically do first

10:15few questions does anyone know who's a very prolific answer on Stick overflow so one of the like fav yeah let's see so John SK was the first one of the first for users learn of so they have all like reputation 1.3 million comma I'm not sure how you are I'm at 40,000 or something that but still far away

10:39from from those right but what kind of analytics can we do uh we can for instance say um can we see how much they um oh no let's first look at posts so what we can do at the posts here is just to do an yearly analytics from on on on a post right so give me basically all

10:57the years and then how many posts in the year what was the average view count and the maximum answer count on the post table right so and remember this is kind of running through the 58 million post table here right and it runs in 01 second which is really nice it shows that my old M1 MEC is still up to the

11:17task right and uh before we go deeper into into things what we can do now is basically we can take these tables and Export them to pet because uh no one wants to do CSV anymore these days right and so basically and it takes few seconds to export this users table uh to pet and then I can use the P

11:39files later on actually I had this other

11:43query where was this I think it's coming down there somewhere um um so or we can actually look in the in the book can just steal one of the Cs in the book which is basically bar that's what

11:59my bar here so here's a nice query which gives me uh let's see so we get the users and

12:07reputation and then we say what is the reputation per time frame right so since they were last uh since they were created user so how many points per day did they collect basically right what's their run rate any way right and then we can say how many days do are these and we put this into an comment expression

12:26and then we want to actually use the bar function to make it look nice and then we get no name column describe users I think I have two

12:37different data models so it's a display name

12:43right [Music] uh display name and created at was creation date I think that was the

12:57other uh creation can probably alas it as well

13:03right

13:09uh sh yeah there's a bunch of them I think

13:16three that's what you get when you copy stuff from some random books don't do that creation date and there might be another one there's another one creation date and then now I did mess it so let me just copy this into or fix it here uh

13:41[Music] creation we just need to remember to undo that before the goes out otherwise you all watch the addition of an oh yeah thank you for feedback right exactly so display name and then down here as well uh display we could have used aliases of

14:05course so so so it's what worth it right

14:09so uh so you see uh basically the reputation of the people the Run rate and that's basically the bar chart for the Run rate so uh John SK has actually a really high run rate but Gordon Lov is actually higher as such right and bar is really nice because you can visualize in line uh uh your values so the base the

14:30base value and then the max value and then how many columns with you want to use which is really nice right okay so we exported the data already to pet so that it's easier somewhere else to get this data into dctb again right uh so uh to do this we

14:47actually open actually we can have a look at uh what our test database how big it actually turned out to be so it's 4 4.2 GB of test data that we ported into

15:00interb plus compression of course right so what we can do now is uh I have already an account at MDU uh so I can basically go to my MDU uh UI

15:14here and you already see that I have a bunch of tables somewhere behind those things and in the notebook we have also some data so I have already my login and I exported my MDU token as as an environment variable so I can basic Bally just say dbmd colon and then it basically connects to Badu and if I do

15:35uh show databases it shows me all the databases that I have there you see I've done a lot of stti overflow databases right so uh and then we can basically create a database uh Berlin Meetup or something like that and then uh if I do show databases again it should show me B meet up and then we can also say uh you use building

16:00meet up and then uh when I now do uh show tables there should be no tables in this database uh but what we can do is

16:10uh We've also uploaded these pet files to ns3 bucket so we can basically just read them from3 uh into into duct but we don't need to stress uh DTS villain here but you can just do it on in the cloud directly so I can basically just say uh timer on and then let's see how long it takes to

16:31import this pet file into into the database yeah so it's uh 10 seconds uh to load the p f from S3 into into M do

16:49uh as such okay right so now we have our users table here and now here we actually have to write so I just had the local uh import was not using the right things so I can basic go back and uh now this should uh show us all did again right so we can do the same for the

17:08other tables really quick

17:15so uh we can try this actually uh yeah

17:20there is some optimization on the query site from other like do from the client right is pushing back to the cloud and we have have the B we about net for between S3 and mod de that's why it's super fast so we can actually use directly our users P

17:39file I mean p fortunately does also a lot of push down predicates and and and and filtering so the pet format supports lots of metadata filtering so if you do counts down pet file it actually doesn't read the file right and and if you do uh select only some columns and then because it's column no forward it only

17:56pushes it's them and then because you have for each each segment better data what's in the segment it can also select which segments to read in the first place so if you replace our users table here uh with an with the pet file so then we can actually see how long this takes uh to to run

18:21so so that this now inputs all the uh data sets into into our database so if we uh is it automatically refreshed it's not automatically refresh it's already refreshing when you do something the mother UI then it automatically refreshes it um but it would be nice if there was like like some background thing that uh refreshes stuff

18:44file come on so it's also there's also some locking with the metadata C going on where is blocked basically while you're creating new tables into the uh metadata uh catalog so you see now we have the comments table which is pretty big uh and the users table so we can also just say here uh shouldn't it be use this database it

19:08should be a use right M that's really interesting so uh if you say uh yeah that's what I tried no but it doesn't have use for some reason probably again the metadata catalog uh [Music] okay uh now we can do a SEL count star from uh

19:39comments and I think this is all they're keeping it busy so now important the votes the badges the Texs post links uh so basically we using up all the mck uh infrastructure storage now because you have now imported basically all of stick overflow into into this right and so you see commment is actually 88 million if I got this right

20:0788 million comments on soflow right but we wanted to see how long it actually takes uh to run this on a pet file and actually it's it's only two seconds on a pet file right so which is really nice uh from that perspective can also see the pet file itself is [Music] um let see

20:30H so the the users pet file is 700 megabytes so it's also because it's columna compressed really nicely right S3 bucket they're both in the US uh yeah maybe where did you create it I I trust I trust midi that he created the bucket in the right uh the right Zone it's American company so it's in I think it's in Us

20:57East or something that right um so but something that mother duck also added which is really nice is uh there was uh till till d dman uh did some GPT plus sequal experiments I don't know who of you has read his blog post and so Jordan put this actually into mauck so we can now run AI powered

21:20queries on MDU and this is actually H this is a lot of fun because it's probabilistic and it's always whenever you run the stuff it it returns different things so it's actually perfect for an for a talk right uh because you actually want to have very reliable uh and uh things right so we can call Prompt schema so it basically

21:40sends the table definitions to gbt 35 and you get an verbal description of what is this database actually about let's see what it does it takes I think 3 to 10 seconds so this is really boring right so I could have seen that by just looking at the tables but if I run this the second time it will actually uh do a

21:58other different response and sometimes it actually says this is like looks looks like a QA site and so on but it's as I said it's very probabilistic okay right um what can we what else can we do we can also uh ask uh questions uh so for instance prompt query will actually generate the SQL query and run

22:18the SQL query as well kol right so don't worry uh so what are the most popular tags so it gets the schema again from the database plus our question and then basically the prompt says write a SQL statement or return only a SQL statement that would answer this question given the schema as such right and it did it

22:38right so you want to see the SQL exactly so unfortunately this this pragma doesn't return the SQL but you can basically have it ask uh for the SQL itself and then uh it uh basically

22:55Returns the SQL as such time is now the time is busy the open open AI GPT apis take so many seconds it's not the runtime of of Dr B right so don't don't worry so and this is actually looks actually quite nice right but we can also do more complicated stuff so for instance we can also say something like

23:15what what question has the highest score and what are its other attributes right so so which is already a little bit more involved in terms of the question right could be some of your colleagues coming to you as a as a data person and say can you answer this question right and then in the future they don't need to come to

23:32you anymore they leave you alone because they can just talk to uh to to uh Dr B

23:38right so yeah so that's a good question right and then you can do things like uh which five questions have the most comments and what's the post title and title count so you actually want to see kind of do a join as well and and and and things like that so I should probably have done mode line uh to actually show

23:57this better and the the model itself is a is a cloud faced service or so uh uh the

24:06model is just a regular gbt model it it's not it's not fine tuned or trained or anything it's basically just uses a custom prompt then in the prompt you provide the database schema and you provide the user question I'm not sure if there's some few short examples as well uh as such but because you can use it with any database the F shot examples

24:24don't help you much right so I think it's basically just the schema plus the user question and then I mean there's so much sequel in the internet on the

24:35internet so actually nice here we saw the uh uh deadline exceeded so it took more than but we also know that open ey has some problems right now as you all heard right so so oh yeah it could be a but duck uh thing right so basically also use some other model like Llama Or exactly yeah so code llama is is quite okay you can

25:03also run local models with llama for instance as well and uh you can even find tune so for instance if you have a data set where you have for instance a lot of example queries and questions from users you can also find tun a model like like llama or so uh to basically then um provide you uh dedicated queries

25:20for this model they also say hey I have these ETS and if someone says you know engineer they actually mean a developer and and stuff like that so it can also fix up naming and and and and stuff like this right so what's also really nice is uh there it also is able to fix up your queries right so we always as you as you

25:40saw just with me you always mistype stuff right so you can also say fix this query right uh because I uh did

25:49something wrong in this query so let's see and it's actually me miss the group by you you know I always hated group ey when I was still doing SQL on a daily basis because I always forgot to add group ey but fortunately dub has group ey all so you don't need to do it as such but if you

26:08miss your group bu it basically goes and and fixes and adds your group ey at the end and then you can also do something like this I have a join here in my um in my query where I actually intentionally use wrong the wrong uh fields and it basically fixes here the user ID to own user ID because it the schema

26:28information as well and so it can fix also your so it's kind of a little bit like co-pilot um I mean this is more fun than for real right don't give me wrong so but what's actually for real is the next bit which is creating shares and sharing data sets in for M right so if you can take any

26:45database and if I want to say I want to create a share from our Berlin Meetup uh from our Berlin meup database and uh Berlin Meetup what day is today 20th of November right uh um

27:011120 and so it will go ahead and create a read only uh uh share or you get only snapshot uh from our from our database which you then can hand to other people and they can run queries on on your data as such so I can basically just do dctb and share URL and then I can get access

27:19to all of this stuff which you can actually try and otherwise you can also use attach command where you say attach share name and then you have it Al available inside of your session as well so it basically does a snapshot copy of the 4 gabes and then access it

27:38y me sharing yeah that's up to you right so you don't want to share sensible data right uh I I'm actually not sure if there's any like masking or other other things in there yet um probably not yet

27:56right so but that could be an option where they say I want to mask sensible data for instance like I don't know Emil addresses or uh people person names or or things like that could actually be shared uh could actually be must just to explain the context if you have like open data sets because you see there was

28:12quite some cleaning to be bound from the roaded ass set B stack Overflow now day to reach out we happy to your help to make the word a better data set and then we host it uh basically on on both AAS Ro parket and um mother deck as a share datab base so that we put it in our F

28:33data set we have a couple of them there so if you have multiple shares then you can basically also say list shares and it will uh show you and here we have our burin Meetup uh share right so if I now send this for instance to M he can take this and just run the queries on it

28:51right so imagine we were just in our uh dub um sh I here and uh just use the share then it doesn't work because it's um not

29:04so yeah I thought you could I thought you could actually use it directly but and may have weird Behavior because you're the H and you

29:16best and see s right

29:22S4 he was full show tables

29:30right now I basically pass the share to someone else and they can use it as such right um and I think that's what I wanted to show in the live demo um we

29:42looked at that the pet files are much smaller than the CSV file the XML uh compressed XML files which is really nice and that the uh information is there we looked at the AI queries if you want to see the block post feel free and otherwise I think that's it uh any

30:01[Applause] [Music]

30:12questions

FAQS

How fast can DuckDB query the entire Stack Overflow dataset locally?

DuckDB can query the complete Stack Overflow dataset, 58 million posts, 19 million users, and 88 million comments, on a local laptop with sub-second query times. Loading the posts CSV takes about 46 seconds. Once imported, analytical queries across all 58 million posts run in roughly 0.1 seconds. This eliminates the need to spin up a Redshift or Snowflake cluster for exploratory data analysis.

How do you export Stack Overflow data to Parquet format with DuckDB?

You can export DuckDB tables to Parquet format with a simple command that takes just a few seconds. The Parquet files are significantly smaller than the original compressed CSV files. For example, the users file goes from 1.9 GB uncompressed CSV to 700 MB in Parquet. Parquet's columnar compression also means faster query performance, since DuckDB can use push-down predicates and read only the needed columns and segments.

How can you share DuckDB datasets using MotherDuck?

MotherDuck lets you upload a local DuckDB database to the cloud and create shareable snapshots. You connect to MotherDuck by exporting your token as an environment variable, then import data from S3 Parquet files directly into MotherDuck. Using the CREATE SHARE command, you can create read-only snapshots that other users can attach and query. You can get started with MotherDuck's free tier and access sample datasets immediately.

Can you use AI-powered SQL queries in MotherDuck with DuckDB?

Yes, MotherDuck has AI-powered query features through pragma commands. The prompt_schema function sends your table definitions to a language model and returns a verbal description of what your database contains. The prompt_query function generates and executes SQL queries from natural language questions. There is also a query-fixing feature that can automatically correct SQL errors like missing GROUP BY clauses or wrong join fields based on the schema information.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

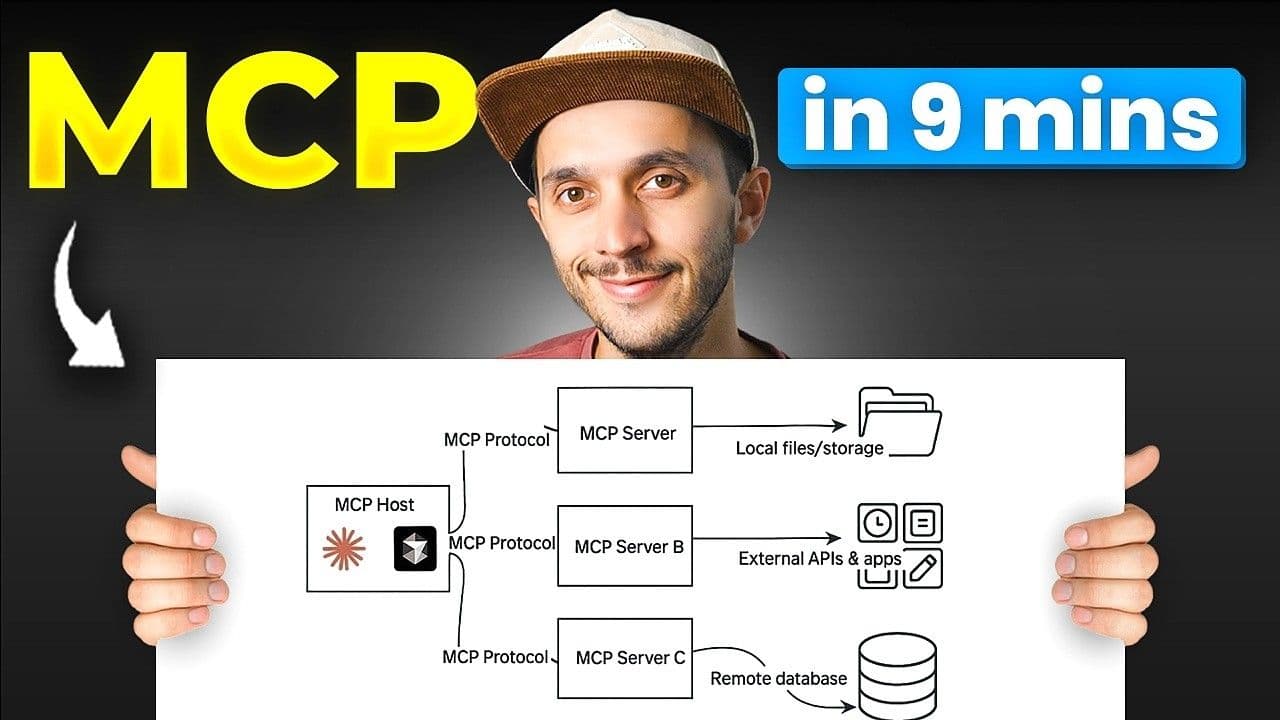

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial