Stay in Flow with MotherDuck's Instant SQL

2025/05/21Featuring: , ,What is MotherDuck?

MotherDuck is a cloud data warehouse built on top of and powered by DuckDB, focused on making big data feel small. Unlike traditional big data systems that emerged in the early 2000s with technologies like Hadoop and Spark, MotherDuck takes a different approach by recognizing two key changes in the modern data landscape:

- Modern hardware is significantly more powerful: Today's laptops have multiple cores and substantial RAM, with some EC2 instances offering hundreds of cores and terabytes of memory

- Most queries aren't actually "big data": Analysis shows that 99% of queries on systems like Redshift and Snowflake can fit on a single large node, with most under a gigabyte

MotherDuck's architecture is built on three core pillars:

- Effortless and serverless: Pay only for what you use without managing resources or clusters

- Dedicated instances: Each user gets their own "duckling" (DuckDB instance) to avoid noisy neighbor problems

- Dual execution query engine: Run queries locally, in the cloud, or combine both for optimal performance

The Problem with Traditional SQL Workflows

Traditional SQL development follows a frustrating pattern that breaks flow state:

- Write your query

- Hit the run button

- Wait for results

- Debug errors

- Repeat

This write-wait-debug cycle can happen hundreds or thousands of times when developing new queries. Each wait forces a context switch that exhausts mental energy and disrupts concentration. As Hamilton, a front-end engineer at MotherDuck, explains, this interaction model hasn't fundamentally changed since the 1970s.

The lack of observability tools in SQL makes debugging particularly challenging:

- CTE debugging: Common Table Expressions are notoriously difficult to debug, requiring manual commenting and isolation

- Complex expressions: Breaking apart column expressions to identify issues requires writing more SQL

- No immediate feedback: Unlike modern development environments, SQL lacks real-time validation and preview capabilities

Introducing Instant SQL

Instant SQL transforms SQL development by providing immediate feedback on every keystroke, similar to how digital audio workstations (DAWs) work in music production. The system is guided by Brett Victor's principle: "Creators need an immediate connection to what they create."

Key Features

Real-time Query Execution

- Results update with every keystroke (50-100ms latency)

- Powered by DuckDB's local execution capabilities

- Smart caching and query rewriting for performance

Advanced Observability

- Click on any column to decompose complex expressions

- Navigate through CTEs with instant result previews

- Parser-aware syntax highlighting showing query structure

AI Integration

- Context-aware suggestions based on cursor position

- Real-time preview of AI-generated changes

- Semantic understanding of query intent

Technical Implementation

Instant SQL leverages several technical innovations:

Parser-Powered Intelligence

DuckDB exposes its query parser through SQL, allowing Instant SQL to:

- Generate abstract syntax trees (AST)

- Identify expression boundaries and table references

- Create a semantic path through the query based on cursor position

Intelligent Caching Strategy

The system automatically:

- Parses queries to identify table references

- Builds a directed acyclic graph (DAG) of dependencies

- Creates optimized caches for interactive modeling

- Rewrites queries to use cached data

Dual Execution with MotherDuck

For large datasets, MotherDuck's architecture enables:

- Server-side scanning and filtering of massive tables

- Local caching of relevant subsets

- Seamless coordination between cloud and local execution

Practical Applications

Local File Exploration

Query local files directly without uploading to the cloud:

Copy code

SELECT * FROM 'path/to/file.parquet'

Cross-Database Queries

Combine data from multiple sources:

- MotherDuck tables

- PostgreSQL replicas

- Local files

- S3 object storage

Interactive Data Modeling

- Modify CTEs and see downstream impacts immediately

- Test transformations without full query execution

- Debug complex joins and aggregations in real-time

Performance at Scale

Instant SQL scales effectively through:

- Smart sampling: For terabyte-scale data, it samples intelligently

- Filtered caching: WHERE clauses execute server-side to minimize data transfer

- Incremental updates: Only affected parts of the query are re-executed

The system works with datasets ranging from local CSV files to 100+ billion row tables in MotherDuck, adapting its caching strategy based on data size and query complexity.

Getting Started

To try Instant SQL:

- Sign up for a MotherDuck account at motherduck.com

- Run

duckdb -ifor local-only exploration without an account - Join the community at slack.motherduck.com for support and feedback

Instant SQL represents a fundamental shift in SQL development, transforming a traditionally passive, wait-heavy process into an active, immediate experience that keeps developers in flow state while writing complex analytical queries.

Transcript

0:01Hello everyone. Welcome to our webinar

0:05on staying in flow with instant SQL. Uh my name is Gerald. I'll be your kind of host today. I'm joined by Hamilton uh front engineer at Motherdoc and Jacob Madson who is a developer advocate at Motherdoc. Uh quick uh housekeeping items. Uh we'll be recording this session. So, we'll send out a link um if you want to watch it again or if you

0:29need to drop off uh and you want to, you know, catch back up on it. Um and if you

0:36have any questions, feel free to pop them in the chat. Uh we'll have a Q&A time for Q&A uh after uh after the the demo. Uh quick agenda. I will do a short introduction on just what is mother duck. Uh and then we'll hand it over to Hamilton to give uh his kind of presentation on his inspiration for

0:57instance SQL. Uh and then we'll uh add in Jacob for a demo uh of instance SQL and then again time for Q&A at the end.

1:08With that, I'll go ahead and get going with uh just uh introduction to you know what Motherduck is if you haven't heard of Motherduck before. Um, Motherduct is a a cloud data warehouse built uh and powered by uh built on top of and powered by uh DuckDB. And we're really focus on uh making big data feel small.

1:28Um uh and really to kind of understand where motherduck came from, we actually had to kind of go back to the beginning of the big data era. um when uh you know you know early 2000s we had things like Hadoop and Spark come on the scene uh to handle all these massive amounts of of of data that we were generating and and

1:48everyone said hey you know you're going to get great insights from all this data that you have but in order to get all these insights and and and value from this data we need to create these massive uh cloud data warehouses um but the the and it was great you know you you were able to handle this you know

2:05these massive data sizes lots of throughput but uh there was also a lot of hidden costs that came alongside with that. So you had to um make lots of round trips to the cloud. You had to kind of split your work across multiple nodes and you had to bring all that data back together and all of that introduced

2:21a lot of you know latency a lot of complexity and a lot of overhead. Uh but then if we skip forward forward a few years um to you know I don't know five or even so so years ago a couple things have changed from the the introduction the beginning of the big data era um two the main things that have changed is one

2:41just hardware is significantly more powerful uh you know the laptops or computers back in 2006 were were you know only had maybe you know one core and a couple gigs of RAM versus now you know laptops this MacBook Pro that I'm using for the webinar has I think about 20 cores and you know 30 I don't know lots a lot of RAM and then

3:04you can even scale up into these you know large EC2 instances that have hundreds of cores and terabytes of RAM.

3:11Uh and so the other thing that has changed also is that you know while we were kind of told maybe hey you're going to be running you know queries on pabytes of data uh and we need to build these systems and optimize for this massive massive amounts of data that like Google or you know Netflix or Facebook has uh the reality is that the

3:29most queries that that people are running uh are are not big data they're more you know normal size data um and and able to fit in these kind of on these machines that we you know that uh we have that are more powerful. Uh this is a a quote from or tweet from George Frasier from Fiverr. He did an analysis

3:48of queries on Redshift and Snowflake and really is showing that you know 99% of of queries on these systems can really fit in a single large node. They're you know under a terabyte. Most of them most of them are even under a gigabyte. So um you know with those kind of two truths in mind um you know mother duck kind of

4:08takes the approach of like hey what what if we built a cloud data warehouse um you know instead of building it for these massive amounts of big data what if we build it for kind of the 99%.

4:20uh and um you know if we built a system with those things in mind I think one of the first things that we'll think about is like hey how do we eliminate this kind of distributed overhead that really ends up being unnecessary in a world where you can handle most of your workloads on a single node. Um the

4:36second thing is like instead of again building these massive complex systems that are optimized for Googleized data, what if you just make it really easy for you to get business value out of the the data that you have making it really you know easy for both technical people and non-technical stakeholders to to query data to get answers and and that brings

4:55rise to things like instant SQL right and so when we focus on uh really the user experience uh and and generating you know business value um we build tools tools to make people's lives easier. Uh and lastly, we're going to build on, you know, the reality of the hardware and and the the the technology that that is kind of modern, right? And

5:17so that that brings us into, you know, duct, right, which is the the OLAP open- source database that Motherduck is built on top of. It's extremely fast, extremely efficient, and lightweight. Uh again we're also making taking advantage of these you know modern processors that have you know multiple cores and can we're able to parallelize a lot of the

5:38work. Um and you know that kind of come

5:42comes into fruition in kind of three pillars that we have in our our architecture. Uh you know the first is that it is we call effortless and serverless. So it's it is you you only pay for what you use. you're not, you know, worrying about managing resources, um, spinning up clusters and whatnot.

5:59Um, you know, DUTDB is extremely fast and efficient. You can spin things up really spin things up and down, you know, in sub 100 milliseconds. Um secondly, instead of you know I guess scaling out in the horizontally in the normal sense that you think of in terms of splitting a single query across hundreds or you know dozens or hundreds

6:18of nodes uh we really think of uh enabling kind of each user or each um account to have their own dedicated we call duckling which is basically an instance of duct DB. So you can scale up uh on these big bigger systems if you need to have you know you know more powerful processing or you can use what

6:37we have what called pulse instances for maybe more regular workloads. Um and you're also because you're on your own instance you're not worrying about you know noisy neighbor problems fighting for resources. Uh you know maybe if someone's running a super you know intensive query uh you're not stuck behind them. Uh and then lastly uh we have what's called the dual query dual

6:57execution query engine. Hamilton will get into this a little bit in in in his talk. Um but a lot of it is I mean unlocked by uh the power of ductb being uh super lightweight and embeddible. Um you can run queries you know super fast locally on your machine. You can also run queries in the cloud and motherduck

7:17or you can combine the two or you can combine it from you know maybe you have data in S3 someplace else and you want to you want to combine them motherduck uh will create a a query plan that that is kind of optimized for minimizing data movement u making uh your queries fast and and efficient. So I think that is

7:35the last of my slides. So with that let me hand it over to Hamilton.

7:41Alrighty. Thank

7:45you. So, uh, today we're going to talk about SQL. Um, but really we're talking about

7:54software that might require mastery but,

7:59um, encourages a flow state and is fun

8:03to use, kind of like playing an instrument. Well, um, so first I want to talk about recording studios. Um, in my previous life, I was a musician and um, I had the privilege of working with artists big and small uh, in recording studios all around um, the United States. And uh, I loved it. It was some of the happiest and most fun years of my

8:27life. And um, at the center of the

8:31recording studio is this piece of software that there's arrows pointing to it called the digital audio workstation or DAW. Um, and the the point of the DAW

8:41is to orchestrate all of the input signals from the recording studio, whether it's microphones or equipment into recorded audio tracks, digital audio tracks, and then also helps you mix those audio tracks together to make a semiinished final product, a song or a piece of music. And working in the DAW is kind of amazing actually. Um, every action you

9:06take is met with an instantaneous preview as you work. You can solo individual tracks. You can loop little bits of audio. Um, you can tweak plugins

9:17like EQ or reverb or digital software instruments and immediately hear a preview of the thing you're working on as you go. Um, it's really fun actually.

9:29Um, and because it's really fun to to play, it's actually really fun to get good at this because the software is designed to put you into a flow state. Um, so a number of years ago, I moved from music into uh software and I

9:48began focusing on building analytical data tools. And something that I've missed since I've joined this new industry is that feeling of immediiacy.

9:58It was so great working in a doll and um knowing that as soon as I tweaked something I could immediately tell if I got it right. And so I've been chasing that ever since. Uh there's this quote by Father John Kulkin that's very popular um well-known people often attributed to Marshall McLuhan but I think he said it first which is we shape

10:17our tools and thereafter our tools shape us. Now, after years of working in something like a digital audio workstation, it's sort of changed the way my brain works and my expectations for the tools that I'm using. So, now let's let's talk about SQL. Um, of all the ancient programming languages, um, SQL has had kind of the most interesting history and has managed

10:43to succeed wildly in spite of what is probably decades, and I really mean decades of people criticizing the language itself. SQL has endured and in fact actually thrived despite these uh, language flaws and it's the reason why many of us have our careers in data today as well. And partially this is because database engines have always met

11:07the technical needs of the moment. Um when the internet became a thing um the idea of big data and distributed computing uh kind of became in vogue and databases met that need pretty directly and now we have this new moment in AI where there are new pressures on databases to to evolve and to meet those needs. And so databases have also pushed

11:29SQL as a language in interesting directions. The language itself has expanded and attempted to accommodate more and more use cases that it was never originally designed to use. And that's all wonderful, but the interaction model of SQL has basically not changed since the 70s. You still write your query, hit the run button, and wait for results. Now, as somebody

11:52who comes from the musical world where everything feels instantaneous and immediate and and feels really good, um it's kind of a jarring experience actually writing SQL um for me because it breaks flow, right? I have to hit that run button and wait. It's pretty hard to debug. Um there's no way for me to like solo individual parts of the

12:12query and understand what's going on and ultimately simply does not spark joy for me. So, let's walk through a few examples. The first writing a new ad hoc query. So everybody's been in this position. You're faced with a blank SQL editor and you start typing a query, some new ad hoc analytical query. So you'll write out your query, then hit

12:34the run button. You get your first error. You think, ah, okay, simple mistake. I can fix that. Hit the run button, get another error, fix that, and then finally hit that run button. And then you wait and then finally you get some kind of result set. Wonderful. Now your brain has moved from debugging mode into analytical mode. Right? The first thing

12:58you notice is this column looks a little funky. Probably got something wrong in the query. Turns out you accidentally reverse create a date and close date. So you fix that. Click run.

13:09Wait, and then finally get your result set back. And you do this over and over again. Something like this. You write, you wait, and then you debug in a loop over and over. And writing and debugging are awesome, right? They're like very active analytical activities that really require your brain power. But waiting is not, right? Waiting is passive. Um,

13:31every time you ask your brain to wait, you're asking it to spin a plate on a stick in your head and keep your attention. Um, and that's fine if you're only running a few queries a day, but when you're writing a new ad hoc query, you're doing this like hundreds or even thousands of times a day, right? Um, and

13:48all of that weight time certainly compounds to a lot of wasted time, but even worse, it exhausts your brain. You know, we were simply not evolved to wait for SQL queries to run.

14:02Now, the um a lot of modern OLAP engines

14:06have done a great job of shrinking that wait time, making the engines so fast that you're not really waiting that long. But really, any attempt at context switch might give my poor brain just enough time to switch gears and head to social media and then I've lost my train of thought. So, that's just writing new queries. You also have the problem of

14:26dealing with queries that are already written, right? So, imagine this situation. you've uh inherited this big query with a bunch of CTE from a colleague and they ask you to fix some column at the very very end and you might try to diligently look through every part of the query to try to understand what exactly is going on but

14:44at a certain point you might just give up and comment out whole parts of the query just to isolate the individual CTE. Now, CTE are awesome to write, but they're so hard to debug. And in fact, they're so hard to debug that the popular BI tool Hex has a whole blog post about how you shouldn't actually use them. Now, Hex has this really cool

15:04bit of functionality where you can like chain SQL queries in cells and then it sort of figures out things for you.

15:11That's awesome if you use Hex, but it makes me kind of sad because I don't think there's anything inherently wrong with CTE. we just lack good tooling to actually debug them. So let's talk about another debugging problem. Now imagine you have this query and you get this, right? Some column with a bunch of nulls and you're trying to understand what's going on

15:32here. Then you look at the query and you realize, ah, this complex column expression that took me forever to write um has something going on here. And the problem is you're going to have to break apart every part of this expression to figure out if it's your query logic or if it's the underlying data that's causing all of the nulls. Um, of all the

15:50popular programming languages, SQL seems to be the only one that almost completely lacks debugging tools. Right?

15:56It seems like the solution to this problem is always just to write more SQL. So that's that's it for humans, right? Let's talk about AI. We're in this kind of interesting moment right now where AI is changing workflows across the board. So in your SQL editor, you might have the ability to highlight some text and get a suggestion through a

16:17prompt and your course of action is to accept the suggestion, then run the query and then actually debug the output there. Um, and that's kind of terrifying, right? We're essentially asking this uh asking for this non-deterministic output to be like shoved into our extremely fragile unobservable system. And I think that most people that actually write SQL for

16:39a living are terrified of AI for this particular reason, right? Um and I think it shows that there's a problem here where um we can't we don't have the tooling to make the most of this moment in AI with SQL. So um the problems extend well beyond just human interaction.

16:58So, good luck with the vibe coding, right? So, I'm picking on SQL, but I love SQL and I really want us to do better with it. Uh, and the question is, can we do better? Um, and to do better,

17:12it means we need some sort of rubric about what better looks like. Um, and so for this, I turn to Brett Victor. Um, he's a well-known researcher who gave a talk about 12 years ago called inventing on principle. And in his talk, he states his thesis for what makes good software. And he says this, "Creators need an immediate connection to what

17:33they create." And what I mean by that is when you're making something, if you make a change or you make a decision, you need to see the effect of that immediately. There can't be a delay and there can't be anything hidden. So that's great. It sounds like we have a rubric, right?

17:52Um, DAWs, digital audio workstations have this in spades, right? I know everything I do. I get an immediate feedback loop on on the result and I can also solo tracks and loop things and really isolate. So nothing is hidden and there's no delay. And that makes sense to me because um the DAW has to model a

18:13real world recording studio and recording studios are all analog signals moving at the speed of sound and the speed of light which means that everything has to be instantaneous. So it's really quite easy when your software has to model a physical system to achieve this result. Now the problem is it's easy to just because we're doing something kind of abstract like

18:33transforming data assume that we don't need physicality in our data tools. And so I ask you why hasn't anyone actually tried this with SQL yet? Why is why hasn't anyone created a system where there's no delay and nothing is hidden? Now um let's take each one of these and figure out the reason why and see if if there there's a way forward.

18:56First, let's talk about delays. When I think of a SQL system that has no delays, I think of this, right? You get a result set per keystroke. I'm running a lot of queries and my result set is updating as I type. That is about as close to a no delay system as you're going to get. And so, in practice, what

19:16this means is I need to get a result set every 50 to 100 milliseconds. So, that's great. So, now we have a latency requirement um that is potentially easy to meet. Now the problem is most database engines if they're running in a data center you're going to pay some sort of network cost and that's definitely more than our latency

19:34requirements plus computation. So this automatically rules out a lot of um popular database systems whether they're analytical or transactional. So what we need is a is a database engine that sits on our computer runs locally is has great SQL and is very expressive.

19:53And there's one database that I can think of that fits that bill really well, of course, which is duct DB. Um, DB is fast, right? And it's local.

20:01Everyone here probably knows what DB is, but just to kind of set the playing field, it's an inprocess analytical database. It's kind of like the SQLite of databases, but I think that does some disservice to it. Um, most important thing is that it's very flexible and very powerful. And we know it's powerful when we run queries, right? The first

20:21thing people notice is that queries run faster than you can respond a lot of the time. So this is obviously a very simple almost like trivially simple query. Um and I could easily if all my queries look like this I could run DB on every single keystroke and get my result sets. Now the problem is real world

20:40queries usually have more data and more logic. And so here's an example with um a few CTE and a join. And this here is still incredibly fast, right? I think in absolute terms, duct DB just like kind of blows it blows everything out of the water. But unfortunately for our latency requirements, for that interaction speed, it's still too slow. And so we

21:02have to take a more drastic action. Now um the question is can we take this query and rewrite it somehow so that we can get the latency that we actually want. This is kind of where things get really interesting, right? uh duct db actually exposes a way to parse the query in SQL. This is a scalar function

21:22that is just sort of like hidden inside the JSON extension which which is a um standard extension in ductb. And when you run this you get this which is a serialized JSON representation of the abstract syntax tree which tells you all of the semantics of your query. Now, because we have this um it means we may be able to

21:43actually rewrite our query to essentially run something smaller or faster or um something just a lot simpler. So, um that's necessary actually because not every duct DB query is fast, right? If you uh many of you know you can query object storage with duct db and this is itself requiring a network uh round trip, right?

22:07um because this is not sitting locally. And so if you combine all these pieces, the parser rewriting and then essentially kind of running the rewritten query, you get this. The top part is the original query. The middle part is a rewritten cacheed version of the query and the bottom we are replacing the from statement with our cache table. And that's pretty awesome

22:30because it will get us more into this range. Very very exciting. So we know that duct DB um has the capacity to do

22:39the things that we actually want it to do. And unfortunately it works great for these kind of simple queries, right? Um but in reality queries often have group buys and wear clauses. And there's a lot more we need to do when we're actually um scanning the table originally to generate our cache. And this is where mother duck actually comes into the

22:58picture. Um can I cache a relevant subset of a big remote source? Can I sample it? Is the remote source structured in a way to get what I want fast? Well, Motherduck databases actually are perfect for this. So, Motherduck has um in its architecture a principle called dual execution. The idea is that you have your local version

23:19of DB and then you have another copy of DuctTDB running on a very powerful server in Motherduct's cloud and that um

23:29database engine also has access to potentially much larger duct DB database that you care about and we can actually split up the caching step in such a way that we can use the big beefy motherduck server to do the scanning and selection of rows out of because of a wear clause and bring that cache back into local

23:49duct db cache locally where we can model the data in real time. So uh mother duck is kind of the missing piece of the puzzle for doing this in in a reasonable scale. So and that works really well. So for instance if a mother duck table 62 billion rows um I certainly don't want to pull down that entire uh table and

24:13database into my local cache. which will take a while to actually pull the whole thing, but I can actually help create the cache using um serverside mother duck to actually essentially pull the rows that I actually need. So I can do interactive modeling locally and that's sort of how the whole whole system could work in practice. So that's it for

24:33delays, right? We can combine duct DBs, its parser, its runtime guarantees and motherduck along with dual execution to actually build a system that scales pretty effortlessly where there's not a delay. Awesome. So, let's move on.

24:48There's there's two requirements to Brett Victor's inventing in principle. The other is there can't be anything hidden. So, we saw that SQL has a lot of observability problems. what would it mean to break apart those those problems and expose the underlying data in an interesting way.

25:07So um my background is in data visualization um and there's this well-known researcher named Ben Schneiderman who wrote this paper called the eyes habit and in that paper he cites his visual information seeking mantra. This is by the way how the the paper opens. He actually opens with a mantra um which is this overview first zoom and filter then details on demand.

25:30Overview first zoom and filter then details on demand. Now, if we were in person, I would like make everyone chant this so you can become part of the data visualization cult. Um, but that doesn't really work well over live stream. And so, what I would um suggest is that after this, you go turn off the light in

25:48the room you're in, lay down on the floor and chant overview first, zoom in, filter, then details on demand, and then that will turn you into a world-class database expert. So, what does it mean to apply these principles um to SQL? Uh what does it mean to get an overview? What does it mean to zoom in and filter? What does it mean to get

26:06details on demand? Well, um what do we have in the SQL query? We have a cursor, right? The cursor is probably the best proxy for the user's attention in the query. Um so the question is, can we use the cursor position and then everything else to figure out where the user is semantically and then help them do

26:26interesting things to help them understand it better? Well, we have a few things at our disposal. So, obviously, we have the ability to get the abstract syntax tree.

26:36We we talked about that before when we were talking about delays, but embedded in this is just the bare minimum information we need, which is the start location of expressions and table references. So, if you pair that with the tokenizer, you have the ability to do something kind of cool. You can find the boundaries of every expression node

26:55and every table reference. And then from there, it's not that much harder to find that the start and ends of every clause in the query. And then from there, you can find the entire boundaries of a select node and so on and so forth, which gives you something really awesome, a path through the abstract syntax tree that tells you exactly where

27:14the cursor is. Um, and so this kind of parser powered query intelligence is actually quite novel in the world of SQL. Um, and you can do a lot of really cool things with it. So I want to talk through maybe just a couple of examples. Um the first here's a simple video that's showing um parser aware syntax highlighting. On the right you

27:35see the path through the a right you could see how this is a bunch of joins altogether. Turns out that's actually like a very one-sided join tree. Um you can see the entire expression light up when you um highlight certain parts of it. It's really cool. The main point is just to show that we have this information and it's encoded well and we

27:55can do interesting things with it. So here's a really goofy example. This is just hitting a button that shuffles all of the columns in the select list interactively, preserving the formatting above and below. Um, obviously this is not useful, but it shows that you can actually use um this path element to essentially rewrite the query in interesting ways and especially in ways

28:17that are kind of user consumable. Let's talk about some more interesting things. So, we've got um a couple of column expressions and I know that I'm in a column expression. And because I know that I and I know that it doesn't have an alias, I'm able to actually use a simple AI model to generate an alias for

28:34these columns. Kind of begins to show you some of the power of having this stuff at your disposal. Here's a similar example um where I can turn my existing select node into a C to a C a CTE and then use an AI model to name it and then keep modeling as I go. So really the sky's is

28:53the limit with these kind of parser powered features. And because of this path generation, we have some really powerful tools to expose things that are hidden. So anyway, a lot of ingredients in this soup. Um, we have ways to reduce the delay to nothing. We have ways to potentially explore things that are hidden and expose them. So again, where

29:18does that actually leave us? Well, um, gives us a system where users can write SQL in a

29:27flow state, uh, that's easy to debug and may require mastery, but is actually fun to play, kind of like playing an instrument. So, all of these pieces together form instant SQL.

29:47Um, and now I'd like to invite Jacob Matson onto the digital stage and we're going to show you a few

30:00examples. All right. So, give me one second here. Fantastic. So, forget what we're doing today. I guess we're going to query the wellknown New York City taxi data set. Indeed. Um, and we're going to do something interesting. I'm not sure. As is often the case when we're writing a new hot dog query, we're just going to start typing and see where

30:19it goes. Now, as Jacob types, you can see things are happening. Essentially, every keystroke, we have an event loop that um parses the query, analyzes it, and then figures out what to cache so that we can actually rewrite our query so that it feels instantaneous. And so, he's selecting some columns and getting some parts together here. Um it's a

30:42really kind of awesome and um delightful experience indeed. Let's add this one more thing here. Sure.

30:52[Music]

30:56Uh all right. Where do you want to go from here? Awesome. Okay. So, you see we have a bunch of simple columns. Um we have a couple of functions, but we also have this fair amount divided by trip distance as cost per mile. Um that's great. I can we make another one that's cost per kilometer because as everyone

31:12on the call knows Jacob is Canadian and he doesn't even know what a mile is. So gonna help him out here. Fine.

31:21Awesome. So as you can see as he's typing basically um

31:28you need a com. So that's wonderful. So we've got cost per mile, cost per kilometer. Now Jacob, can you click on cost per kilometer in the result set? So first thing we get SQL lacks observability tools. If you have a column expression and you click on the header in instance SQL, it breaks apart the expression and shows you the next level. So Jacob can

31:49actually break this down and show the entire flow of data through the system which is really really awesome. Now Jacob, can you just like mess with that 0.62 and just like type other stuff there? Oops. Yeah, let's try type some numbers, shall we? As you can see, he's typing and everything in the entire expression tree of data is updating in

32:08real time as he does it. So, not only does this real timeness apply just to the top level uh columns, but also to all of the child expressions as well.

32:17And this is really cool. This is about as close, I think, as anyone's gotten to essentially turning a SQL query into a big Excel function that outputs essentially like a list of expression trees. It's like a very powerful idea and it really makes it easy to do all sorts of things from you know working on complex um column expressions to

32:39debugging um regular expressions which is a real pain and pain pain uh if you've ever done it professionally.

32:47Awesome. Okay, so where should we go from here, Jacob? Let's put this in a CTE. Um great. Yes. And and then do some aggregates. Yeah, perfect. We're all good little data engineers, so we need to wrap our stuff in CTE to keep it going.

33:03Okay, awesome. Okay, fantastic. So, what are we interested in? Let's get average. Average average cost per kilometer.

33:14Cost per kilometer uh as average. Can we ask the median? Because Yeah. Yeah. I'm a statistician, so medians are always better than averages. And Jacob's a finance guy, so how dare you. Uh, let's add pickup hour in here too, right? Yes. Okay.

33:33Yep. One of those. Great. Exactly. Okay. Okay. Anyway, great. This is classic example of why we use medians. As you can see, Jacob has a not a number for pickup zero and then infinity for 23.

33:45Those are valid duct DB numbers. So, we need to go fix that. So, let's click back into the CTE above. Yep. And when you click on it, notice that the result set just changed. Wherever my cursor is, whatever subquery it's in, um, it will visualize the data at that select node.

34:03We've worked out all of the dependencies so that you can actually make edits in real time in any CTE and see the update in its in its child. So, let's let's do that. Let's like fix let's fix this problem issue, isn't it? I think trip trip distance there's some zeros. Let's just check.

34:20Okay. Yep. Yep. So, we'll just remove those. Okay. Okay. And let's see if our averages are fixed. Beautiful. Look at those simple nice averages, real numbers. Um, fantastic. So, um, that's

34:34sort of the second major piece of, um, the observability story for instance SQL, right? Um, not only can we decompose expressions and see the flow of data through them, but we can also click anywhere and actually see that data. So if you've ever done data engineering seriously, you know that writing ad hoc queries can get really verbose and there's a lot of CTE and

34:54this just makes it a real joy to actually debug them quickly. Absolutely. Hamilton, do we want to talk about how you know the the number of rows in this data set?

35:04Um we can. Yeah, sure. Do we want to do anything else with this first? Sure.

35:09Let's do that. That's fine. We can add another. Let's um let's actually see. Let's actually see if we can split this on weekday versus weekend. So, I'm just going to add another CTE.

35:20So, we'll do

35:24that. This is this is a fun part because I get to write a case statement while

35:31everyone loves writing a case.

35:36Uh pickup day is in. Okay.

35:43Saturday, Sunday, then weekend. How about spell right?

35:51Else week day end as day part. Oh, day part. There we

36:00go. Nice. Day part. Hey, well done. Good. Good job. Round of applause for Jacob first. Thank you. Speed running a case statement live. Um, and what are we gonna do with this? I think that like I think what we want to show here is you can you can drill into it, right? So, just like we did this earlier, we can

36:17click on it and we can actually look at the specific bits in here. So, we can see see at what it's comparing, right?

36:23We can see it's looking at the actual pickup day, right? Friday, and saying is is it in these two false, right? So we're immediately we immediately can kind of see again get getting that you know um uh kind of Excel type

36:38experience in there. So that's really I guess we need the the star. We need all the other rows from that one as well.

36:44Yeah, let's get that. Yeah, let's do that. And then we'll and we update that to Perfect.

36:51And we'll just change this to pick up pickup day. Okay, cool. Is it pickup day? Oh yeah. Oh, you know, we want day actually. Daytime. You're right. You're right. Wonderful. Okay. Some good debugging right there.

37:05Yeah, super fast. Um, so this is really, really awesome. Um, and um, we basically

37:12modeled this kind of query with multiple CTEs and we never hit the run button.

37:17Just to make it clear, we never once actually needed to run the full query to figure out what's going on. But I think it's important to remember that instance SQL is really a complement to running your query. It's not a complete replacement because ultimately like you you want to actually run the query and see the results. That's the whole that's

37:33the whole point of analytics, right? So I think at this point we could probably just run the query and see the actual results. There we go. So this actually runs it on the full data set and returns pretty quickly, right? 233 milliseconds.

37:46That's fantastic. Um but there's also network and other things involved there. We can't actually run that at keystroke speeds and certainly not with the same kind of ergonomics around breaking up the query and doing interesting things that that we care about. Yep. Awesome.

37:59So that's kind of instant SQL in a nutshell. What else should we show them?

38:03I think um let's just do a quick, you know, it's not just limited to to working on mother duck data, right? We can actually do this, too.

38:15Oh, awesome. Okay. So, we're going to qu Yeah, let's just hop into here. All right. We'll we'll write this from scratch, but we'll use instant SQL, right? So, we'll do let's do select one just to clear it. All right. So,

38:33uh we attach this Postgress service request data. Same same data set but in Postgress. And by the way, he just typed in we just got a result set. So this is quering Postgress with instant SQL and getting results as he goes. Let's just let's just riff a little bit like Yeah, sure. We can just do like agency count star as count, right? And

38:57we can do group by, right? Wonderful. Awesome. So this is like the kind of the coolest way to actually explore a Postgress replica because now you can just kind of type as soon as you attach Postgress into duct uh to duct DB, you could just work with it. And I think the point of this is that instance SQL

39:15actually works fairly well with almost any external data source. Um whether it's Postgress or MySQL or BigQuery or stuff in object storage, it's essentially the same model, right? We're caching something remotely and then we are after that cache is there, we're rewriting everything to point to our local DB cache of that thing. So it's super cool. Um, now the problem is it's

39:40not always easy to scan a massive data set to get what you want. So, do we have anything else we could show people?

39:46Yeah, let me just remember the table name. I have the foursquare data set which is about a 100 million rows. So, let me just pop in here and remember what the what the uh Okay, here it is. We'll use this one. I'm going to do this.

40:01Okay. Yeah. Nice. So from FSQOS places

40:07and we will just full screen this again. Yeah. How many rows are in this one? Uh let's just let's we can you know let's just do this. We can I mean I guess we can show the table looks. There we go.

40:17Okay. Well here there we go. 100 million. Wow. 100 million. Okay. Awesome. And so this works really great with mother duck tables as well and actually works even more naturally I think with mother duck because of dual execution. Generally speaking what we can do is break up where the scanning actually happens when we're getting the cache which means that we can do a

40:38better job of getting rows that we actually care about. And so this is I think mother duck's kind of unique place with this technology. Um, it's really only possible to make this work elegantly with dual execution in mother duck. So awesome. Um, what else do we

40:57want to show? Um, I mean I think because you know one thing that's kind of sneaky in here is I'm actually using the duct DB UI. So we can actually select from our local file system also, right? So just just like we were showing earlier, uh I can just do, you know, I just reference it like this and I say I think

41:16service request.park is in there. Let's see if it samples it for me. Perfect. Okay. So So I get I get all the web UI.

41:25This is kind of like a nice little, you know, uh shout out to what you guys are doing on the UI team, Hamilton. But I get all the, you know, I get all of this um all of this goodness um running locally. And then of course I can run it this way. And now I get all of the

41:38awesome column explorer stuff and it's super fast because I get to use all my cores to do this, you know, really fun uh analysis. So So it's so sick. It's like also just a way to explore data files on totally totally. Uh yeah, I

41:57think that's all I had. Did is there anything else you wanted to hit on? I think we could show them some of the kind of cool prompting. Oh yeah. Yeah.

42:04Yeah. We got some regax we can do, right? Yeah. Yeah. Okay, let's do that.

42:09Um, complaint type. Okay, let's see if we can like simplify these a little bit.

42:14So, we're going to turn on instance equal again. Okay. And let's remove everything to the left of the dash here. So, we're going to do this, and this will hop us into a nice little AI um affordance um that is going to let us do something really fun, which is use

42:49regex. Nice. Let's hope the demo gods smile upon us. Awesome. Okay, so this feature what it does is it makes a suggestion to your SQL just like we talked about before. But if you look down it actually

43:07applies it applies this change interactively to instant SQL which means that it's actually really easy to trust the um suggestions that your AI AI assistant makes for you. That's really really cool. It's very special.

43:25Um, and this means that pretty much all AI features in motherduck are about to get really, really good with instance SQL as a result. And really the sky's is the limit when we give the um when we are able to add into the context window deep semantic information plus samples of data plus virtually anything that you need to

43:44know about the query. Um, it really gives the model unprecedented control and understanding of what you're trying to do and it just makes the results way better as well.

43:54Yep, indeed. And we I'll show this one real quick, too, because I never remember the syntax. So, I can be like, I don't know, return the hash, right?

44:02Hash this column. This is my This is my trick for never reading the docs, right?

44:07There we go. All right. Anyways, that's great. There's always like a mental block between like actually just taking the first word of your prompt and just using that as a function.

44:19Um, all right. Fantastic. So, I think that basically concludes it for the demo and um my screen. I'm gonna

44:29remove myself off.

44:37Excellent. All right. Are we ready for Hamilton? Do you have are we ready for Q&A or do you have something else? I I forget. No, that that's pretty much it.

44:43That's that's in SQL in a nutshell and yeah, I'd love to answer any questions people have. Yeah. Yeah. Let me um actually Jacob, I'm going to pull you back here to the stage too so you can help answer questions. That works. Um yeah, thanks for putting questions. If anyone else has questions uh you know, put them in the chat and we'll make our

45:00way through them. Um I think one clarification that someone had asked is basically uh is Motherduck the same as DuckDB? Jacob, you want to give that?

45:10You want to answer that? Yeah, sure. I answered it in the chat, but um no, Motherduck and Duck DB Labs are separate companies. Obviously, we have a a close relationship with them. Um they are uh uh you know on point for the open source duct DB project and then we get to the fun the fun job of extending that um to

45:27run on the cloud. Uh there's this one uh uh does uh instance SQL already support goto definition example controlclick on cost per kilometer?

45:41Does not but it's definitely on the road map. It's on the road map. Okay, great.

45:45Cool. Um, and next one is I think we kind of talked about this a little bit, but uh, would Instant SQL still work on a very large data set? In other words, what is the max data set size that Instant SQL could work for? I, this is my favorite question actually because one of the cool things about how we designed this

46:04is it kind of scales infinitely. Um, but it also depends on a bunch of factors. So for instance, if I've got a terabyte of data that I'm trying to query in S3 in parquet, I may be able to scan like just the very top of it to generate a cache. But if I'm trying I'm adding a wear clause and I want really

46:24want to actually you know get more data there. Um it's going to be very slow to cache that data. And this is kind of one of the places where motherduck actually I think uh really shines right. So if I have a you know 100 billion row table in motherduck and I have a wear clause and I'm really interested in modeling kind

46:41of a small subset of the data in my ad hoc query um we can mother duck will pretty uh seamlessly split up um the

46:50scan of that data so that it runs server side on your duckling and then once it's got the things that it cares about ships it back to the to the local duct DB and then caches it there in a in a temp table. Uh, and because it does that, it actually means that you get the best of

47:05both worlds. You're using motherduck for the thing that it's really, really good at, and then you're using local duck DB for the things that makes it really special, which is local fast computation. And the cool thing is as a user, you don't have to make that choice at all. You just have to start quering a mother duck database and um, instant SQL

47:21does the rest for you. Cool. Uh I think this next question is kind of us all building off on that is you know how does it figure out the cache assuming it's a highly filtered data is there a transparent way that it does this.

47:35Um transparent is an interesting uh word. Um so the way that it figures out the cache is um relatively conceptually straightforward. So we parse the query and then we look at all of the different table references in every node and then we basically build a directive asyical graph of that and then from there um we figure out what we can cache

47:56intelligently through the DAG so that it makes it easy to click through different CTE and subqueries and kind of um have fast access to data. Um and there this

48:06model gets a lot more complex when you think about filtered queries with wear clauses and also group eyes as well. So there's some other things that we have to do to make that work. Uh making joins work is actually kind of an interesting problem as well. We had there's a lot of things we have to do to actually make

48:20modeling joins work really effectively. So um because there's all sorts of things that happen when you're actually trying to join data. There's there's whole classes of binder errors that only exist in joining. And so we actually have tricks for a number of different um um contexts in which you're trying to model your data. Um but ultimately the parser

48:40is the thing that guides the caching and without this ability to generate the path we wouldn't know exactly where what what we need to actually show cache and thus show to the user. So you notice when we click on that CTE um in in your select query um we have to figure out do we have that already cached? If we don't

48:58how do we cache it after we cach it how do we rewrite the query to actually point to that. Um so these are all the the bits and pieces that kind of power the instance machinery.

49:08Cool. Um, this is maybe a little bit of a two-part question, but um, you know, is one is is Instant SQL open source?

49:16Uh, and then, you know, I wanted to check if this is doable in other database engines as well. Um, Instant SQL is not currently open source. Um, doing this in another database engine would require you to probably pull out the internals and figure out a lot of very tricky things. Um, I think DuckDB is probably the best suited database for

49:38this sort of thing just because it actually does a good job of exposing some of its internals in a in a different way than other other database engines. But ultimately, um, it's extremely complex to do this. Um, it is probably the single most complicated technical project I've worked in my entire career in data. Um, and to say

49:58that I've even hit the bottom of the well, um, would be disingenuous. like we have not even gone to the depths of of how to make this work. Um, and I think so I think it's very possible that one could in theory do this in another database engine, but I think it would require you to run database engines on both your machine

50:17and also where the data lives at the same time and to elegantly coordinate between the two in a way that makes it possible to do what you want to do. Um, so that's sort of my very high level like go for it. I I've actually tried to use cursor to write parts of instance SQL and I'm finding because we're kind

50:36of at the frontier of a lot of things. It really turns out the foundation models don't understand how to proceed across the board. It really requires um human thinking to get this part right.

50:47Yeah. Great. Um here you go. Never used uh mother duck the kind of local files exploration. Does that require uploading uh the file to the cloud? I think Jacob, you could answer that one. Yep, sure.

51:01Um, so no uh there there's kind of two parts to uh how it works. Um when you

51:09are logged into motherduck and you are running um running the duct DB UI for example, um that is executing locally on local files and that actually all of those queries stay local. They don't they don't hit the cloud at all, right?

51:24When you're hit when you're running local files. Now, what that lets you do because you're running to Hamilton's point earlier the the same database locally and in the cloud that means you can like join that data that you're using locally to to data that's in the cloud kind of trivially in a single single statement. But no, uh you can

51:41keep all of your data locally um uh in order and and query it and explore it with all those great affordances that we were showing um without having to put it in mother duck. Okay, great.

51:55Um, I think those are all the questions that have come in so far. If anyone else has any questions, we'll give it a little bit uh for you to ask your last questions. Otherwise, we'll end it in just a bit. So, um, while we wait for that, yeah, if you want to try out, you know, motherduck instant SQL, uh, you know, you can

52:15either go to motherdoc.com, sign up for an account, you can also just run duct db-i, uh, and, uh, that will kind of give you a a a local, we'll call the ductb local UI. Uh, you don't even need a mother duck account to do that. Uh, so you can do everything kind of again there just purely locally and you still get pretty

52:34much everything that you would get with a mother duck account. the a couple things that you I think you just don't get some of the AI features um without um without a a paid account or without a trial. Um and yes, as Jacob said, um you know, join us on Slack. Uh we have slack.mmotherduck.com. Uh always looking

52:52for, you know, feedback. Uh if you're enjoying instance, maybe frustrations, uh you want to stay up to date on, you know, what we're working on. Uh you know, we all hang out there a lot. Uh and really appreciate um you know, people trying it out. uh people giving us your feedback uh and and sharing your thoughts. Uh let me look we have a

53:12couple one uh I know it says Austo asks what about visuals? Um not sure Austo if

53:21you have uh more if you want to expand on that or if you want to talk about data. Yeah, I I assume that's a question around visualization.

53:33Yeah. So, um, Motherduck has this feature called the column explorer. Um, and, um, it gives you, um, all of your distributions and tons of diagnostics about any table or result set. Um, and this actually this feature exists in the duct DB UI as well. Um, so you're able to um, basically use the column explorer with the duct DB UI on your local files

53:56uh, in local mother local DB databases. And it works really well actually with mother duck because you have a really beefy database engine on the other side crunching a lot of data to give you distributions.

54:09Y uh okay I think we're kind of going down this thread in terms of you know is Mother Duck strictly a warehouse which needs a BI tool downstream or do we also want to be the BI layer? Oh definitely Mother Duck's goal is to be a data warehouse. Um the tools that I think I am maybe most interested in building are

54:29not those at the end of the analysis pipeline like you might get in a BI tool but actually those in the beginning of the pipeline. I think that um first mile problems uh are some of the hardest problems to actually solve in data analysis. Last mile problems I think BI tools do a great job and you have other

54:45requirements like governance and things like that. I'm interested in helping people clean up the mess of their data primarily. Yeah, that's why we have both the column explorer and also why we have instance SQL. Instance SQL is really designed to be a helpful modeling and prototyping tool um for for your new ad hoc queries. Yeah, that that's right. Um

55:03I'll just add on to that like you know that that we appreciate as kind of practitioners um how messy this stuff is. And so all of the affordances we're thinking about here are like how to make it easier to build the thing that's really valuable for your business or your company or your friends or yourself, right? Um it's

55:22not it's not like oh like is this a is this a BI tool or is this a warehouse?

55:26Like obviously like we need to think about those things too in terms of how we bring it to market, but it's it it really I think like at our core we want to make this stuff uh awesome to use and hopefully that is uh uh reflected in what we're building here. Yep.

55:43Yeah, I guess maybe continuing on that is um you know Vic saying you know there's a lot of modeling in the last mile quil layer that BI and you know warehouse need to be tightly coupled.

55:54Yeah, I mean you know that's a really interesting question and I think what we've seen uh in terms of how the market is shaping out in ter and how people are buying is that they are kind of keeping them separated.

56:07Um, we will see. I mean, uh, obviously

56:12Tableau, which is probably the biggest standalone BI tool outside of PowerBI, which is doesn't I don't know. I I wouldn't call it PowerBI standalone.

56:20It's kind of, you know, you use it if you're in the Microsoft ecosystem. Um, you know, both of those both of those tools have databases, you know, that are baked into them, right? Tableau paid, I think, a big number for Hyper, which was kind of a precursor to DUTDB. Um and

56:38um you know you do you need OLAP in there? Yes. Um how how will all of these pieces fit together? You know anyone anyone's guess for the future. Um you know I think we're again our perspective here is uh build something build something awesome make the experience great to use. Make it so that you can do the

56:58thing you do do your job easier and better. Um and you know the the the shape of how the future of work will look is you know obviously changing every day as AI continues to advance too. So um yeah very very good question.

57:15Cool. Um with that I think I think we're good. Uh there is one request which I agree with which is um you know uh feature request uh ductb UI dark theme.

57:28Um yeah, one day we'll get that. Thank you. Nope. Nope. Awesome. Cool. With with that uh I think we're right at time, so I will end it here. Thank you everyone for coming uh and participating. Thank you for all the great questions. Uh and yes, if you want want to try out instant SQL, try out Motherduck uh motherduck.com or duckdui.

57:55Thanks everyone. Thank you so much. Bye. Take care.

FAQS

What is MotherDuck's Instant SQL and how does it work?

Instant SQL is a MotherDuck feature that returns query results on every keystroke as you type, removing the traditional write-run-wait-debug loop. It works by parsing the SQL abstract syntax tree, caching a relevant subset of data locally using DuckDB, and rewriting queries to run against the local cache in under 50-100 milliseconds. For large remote datasets, MotherDuck's dual execution engine scans and filters data server-side before shipping only the needed rows to your local DuckDB cache.

How does MotherDuck's dual execution engine improve query performance?

MotherDuck's dual execution engine splits work between a local DuckDB instance on your machine and a DuckDB server in the cloud. For Instant SQL, the cloud server handles scanning large tables and applying WHERE clause filters, then ships only the matching rows to your local DuckDB for interactive modeling. This means you can interactively explore tables with billions of rows at keystroke speed. The heavy scanning happens server-side while lightweight computation runs locally with zero network latency.

How does Instant SQL help debug CTEs and complex SQL queries?

Instant SQL uses the cursor position to determine which CTE or subquery you are editing and automatically displays the data at that level. You can click between CTEs to see intermediate results in real time without commenting out parts of the query. For column expressions, clicking a column header decomposes it into its sub-expressions, showing the full flow of data through calculations, similar to tracing formulas in Excel. This makes debugging complex queries much faster than running partial queries manually.

Can Instant SQL work with external data sources like Postgres?

Yes, Instant SQL works with external data sources including Postgres, MySQL, BigQuery, and data in object storage like S3. When you attach an external database to DuckDB, Instant SQL caches the relevant data locally and then rewrites queries to point to the local cache, giving you the same keystroke-speed feedback. The same caching model applies regardless of the source, though MotherDuck databases benefit most from the dual execution architecture for efficient server-side scanning.

Do you need a MotherDuck account to use Instant SQL?

No, you can use Instant SQL locally without a MotherDuck account by running duckdb -ui from the DuckDB CLI, which opens the DuckDB local UI with Instant SQL, the column explorer, and other features. A MotherDuck account adds dual execution for querying large cloud-hosted datasets, AI-powered features like Command K for natural language SQL generation, and the ability to combine local and cloud data in a single query.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

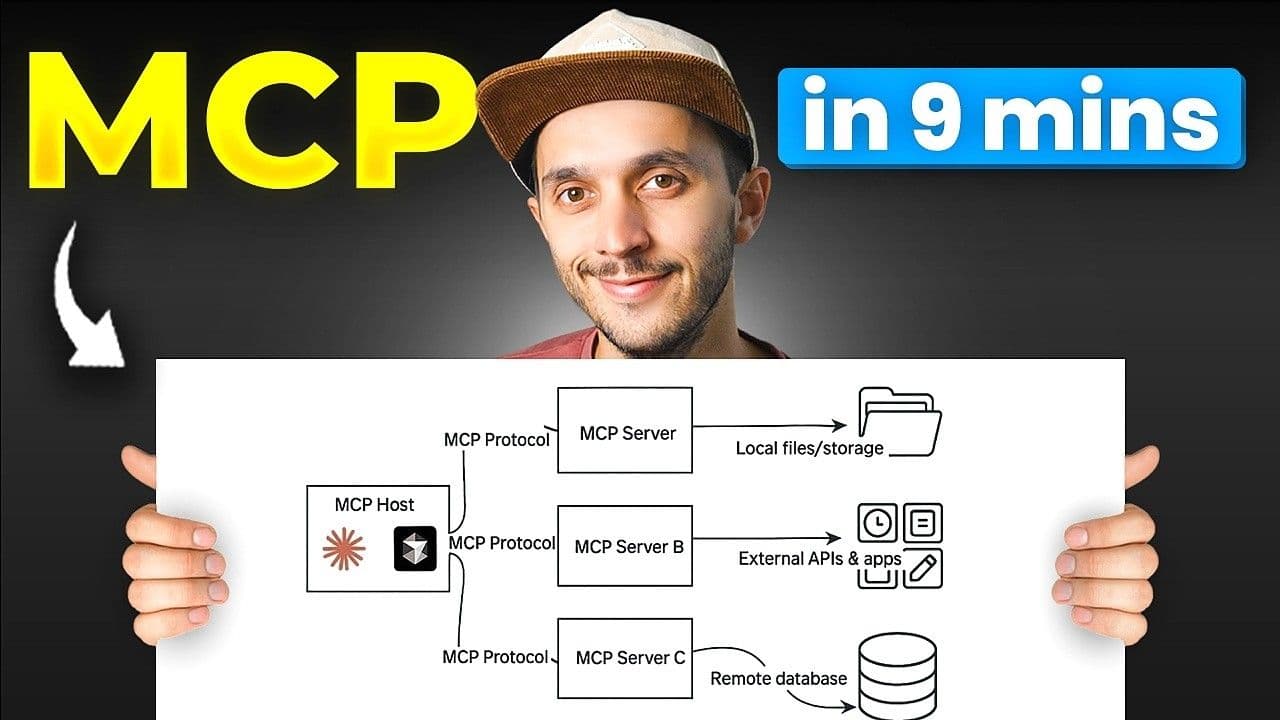

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial