Understanding DuckLake: A Table Format with a Modern Architecture

2025/06/05Featuring:The Evolution from Databases to Table Formats

Modern data engineering has undergone a significant transformation in how analytical data is stored and processed. Traditional OLAP databases once handled both storage and compute, but this approach led to two major challenges: vendor lock-in through proprietary formats and the inability to scale storage independently from compute.

This limitation gave birth to the data lake architecture, where analytical data is stored as files (primarily in columnar formats like Parquet) on object storage systems such as AWS S3, Google Cloud Storage, or Azure Blob Storage. This decoupling allows any compute engine - Apache Spark, Trino, or DuckDB - to query the same data.

The Table Format Revolution

While storing data as Parquet files on blob storage provides flexibility, it sacrifices essential database features:

- No atomicity: Parquet files are immutable, requiring complete rewrites for updates

- No schema evolution: Adding or removing columns requires manual tracking

- No time travel: Querying historical states of data becomes complex

Table formats like Apache Iceberg and Delta Lake emerged to bridge this gap. They add a metadata layer on top of file formats, enabling:

- Metadata tracking (typically in JSON or Avro format)

- Snapshot isolation and time travel capabilities

- Schema evolution support

- Partition pruning optimization

However, these solutions introduce new complexities. They generate numerous small metadata files that are expensive to read over networks, and often require external catalogs like Unity Catalog or AWS Glue to track table locations and versions.

DuckLake: A Fresh Approach to Table Formats

DuckLake represents a fundamental rethink of table format architecture. Despite its name, DuckLake is not tied to DuckDB - it's an open standard for managing large tables on blob storage.

The Key Innovation: Database-Backed Metadata

Unlike Iceberg or Delta Lake, which store metadata as files on blob storage, DuckLake stores metadata in a relational database. This can be:

- DuckDB (ideal for local development)

- SQLite

- PostgreSQL (typical for production)

- MySQL

This architectural decision leverages what relational databases do best: handle small, frequent updates with transactional guarantees. Since metadata operations (tracking versions, handling deletes, updating schemas) are exactly this type of workload, a transactional database is the perfect fit.

Performance Benefits

The metadata typically represents less than 1/100,000th of the actual data size. By storing it in a database, DuckLake eliminates the overhead of scanning dozens of metadata files on blob storage. A single SQL query can resolve all metadata operations - current snapshots, file lists, and more - dramatically reducing the round trips required for basic operations.

DuckLake in Practice

Architecture Overview

DuckLake maintains a clear separation of concerns:

- Metadata: Stored in SQL tables within a relational database

- Data: Stored as Parquet files on blob storage (S3, Azure, GCS)

Key Features

DuckLake supports all the features expected from a modern lakehouse format:

- ACID transactions across multiple tables

- Full schema evolution with column additions and updates

- Snapshot isolation and time travel queries

- Efficient metadata management through SQL

Practical Implementation

Setting up DuckLake requires three components:

- Data storage: A blob storage bucket (e.g., AWS S3) with read/write access

- Metadata storage: A PostgreSQL or MySQL database (services like Supabase work well)

- Compute engine: DuckDB or any compatible query engine

When creating a DuckLake table, the system automatically generates metadata tables in the specified database while storing the actual data as Parquet files in the designated blob storage location. Updates to tables create new Parquet files and deletion markers, maintaining immutability while providing a mutable interface.

The Future of Table Formats

DuckLake's approach solves many of the metadata management challenges that plague current table formats. By leveraging proven relational database technology for metadata while maintaining open file formats for data, it offers a pragmatic solution to the complexities of modern data lakes.

While still in its early stages, DuckLake shows promise for organizations looking to simplify their data lake architecture without sacrificing the flexibility and scalability that made data lakes popular in the first place. As the ecosystem matures and more compute engines add support, DuckLake could become a compelling alternative to established formats like Iceberg and Delta Lake.

Transcript

0:00Forget about iceberg and delta lake. Duck lake might be faster. DuckDB just introduced a new table formats named Duck Lake. And if you work with data, you've probably heard about the table wars with iceberg versus delta lake over the past few years. No, you haven't.

0:17Well, all right. I'll do a quick recap and then we'll dive into the lake. Duck Lake to be precise. So, whether you're new to table formats or an iceberg expert, this video is for you. My mission is to make it easy to understand and get started with ducklick. But first a little bit of context. So to understand table formats, we need to

0:36start with the file formats like bar and avo. But before that, why we as developers should even care about file format? Aren't databases supposed to handle storage for us? Well, yes.

0:48Originally database were used for data injuring and they still are. But there were two main challenges with old traditional OLAP database. First is the standard vendor locking. Data was often stored in a proprietary format and making export or migration really painful. Second, traditional database weren't always built to scale storage independently from compute. And that's where decoupling compute from storage

1:13started to make sense. Instead of relying on a database and giant to store everything, engineers started to storing analytical data as file mainly in open colonar formats like park on object storage like AWS S3, Google cloud storage or Azure blob storage. These formats are designed for heavy analytical queries like aggregations across billions of rows. So this architecture is what we call data ro

1:40park file on blob storage queried by any computing giant of your choice like apache spark task or of course ddb. But this freedom of separating storage and computes comes with a trade-off. Indeed you lose database like guarantees no atomicity. You cannot just update a park file in place because they are immutable. You often have to rewite the

2:02entire file. No schema evolution. It's hard to or remove columns without manually tracking change and no time travel. You cannot easily query the state of data as of yesterday. So that's where table formats come in. They sit on top of file format like park and add database like features. So you have metadata tracking usually in JSON or avo

2:23snapshot isolation and time travel schema evolution partition printing. as whole features are stored as separate metadata files in the same blob storage system. However, that creates a new set of problems. You generate lots of small metadata files which are expensive to read over network like AWSS3. You often need anyway an external catalog like Unity or AWS Glue to tell your query

2:50where the root folder of that table is and what version exists. So while table format brows huge improvement, they also introduce overhead and complexity, especially around metadata management.

3:01So enter duck lake, a brand new table format developed by the creators of DuckDB. Yes, it's yet another table format, but Duck Lake brings a fresh take with its architecture. So first of all, Duck Lake is not tied to Duck DB despite the name. Duck Lake is not a duck DB specific thing as you said.

3:20Yeah, it's it's a standard. It's a standard. It's a convention of how do we manage large tables on glob stores that are stored in formats like parket um in a sane way using a database. So yeah, it's true that as of today the easiest way to use ducklake is through ductb but it's not a technical requirement for

3:42ducklake. Second, unlike iceberg or data link where metadata is stored as file on the blob storage click store metadata in a relational database. Yes. So now you understand why the initial context was useful. We kind of are coming back to the database to some extent. So that catalog database can be either duct DB great for local use or playground SQLite

4:05but typically Postgress or MySQL. And you may ask why use a transactional database for metadata? Because these systems are designed to handle small and frequent updates with transactional guarantees and metadata operations like tracking version and link deletes, updating schema are small but frequent and transactional database are just a great fit for that. Also the metadata is tiny often less than 1 100,000 of the

4:34actual size of the data. So storing it in a database avoids all the overheads of scanning dozen of metadata files on blob storage. But as for the metadata, the data itself is still stored like other table formats as park on the blob storage of your choice. So you may have understood already based on my previous example, but because of this

4:54architecture, the click can be really fast. So let's say you want to query a nice bird table. Here are roughly the operation. You query the catalog to find the S3 path. You scan S3 to get the current version. You download the GSON Afro metadata file. You load the file list. Finally, you can scan the park file. And if you are just updating or

5:14reading a single row, that's a lot of round trips. Duck plague flips the script. The meta data is in a database or a single SQL query can resolve everything. Current snapshots, file list, etc. And then you can directly query your data. So no more chasing down thousands of file just to do basic operation. Duck Lake support nearly

5:34everything you would expect from a modern lakehouse stable format. AC transaction across multiple tables full schema evolution snapshot isolation and time travel full list on the website. So in short ducklake architecture is metadata store in SQL tables on DDB SQLite per realistically on postgress or my SQL and data still in park file on the blob story. So dake is not just yet

5:59another table format. It reinks the metadata layer entirely. All right. Now that we've covered the backstory of tables, formats and introduce ducklake.

6:09Let's get practical. To run the next demo, you'll need three components where you store your data, where you store the meta data, and the computing giant. And of course, here we'll use the local duct DB. So for data, we'll use AWS SS3. You need a bucket where we have read, write access to this one. for meta data will

6:27set up a poser SQL database hosted on superbase. Superbase is a great option if you want to off this posest. It has full managed posgress database capabilities and you can set it up in one minute and they have a generous fleet here. Plus they have an amazing UI with a tons of feature that we'll use for ducklake exploration. Once you

6:48create an account, you can create a project and retrieve your connection parameters IPv4 compatible there. For the compute, aka the ductb clients. I'm going to use the ductb CLI. You can install it with one command script or through a package manager like if you are on Mac OS. You can check the link in the description to have full

7:09installation information. All right. The first thing we want to do because we're going to use AWS S3 is that I'm going to use the AWS uh CLI to log to SSO. So I'm

7:20just going to do AWS SSO logging. So that would refresh my AWS credential. Now that it's done, I have a couple of SQL operation. And so I'm going to launch the local uh Doug DB client using the CLI. And here I'm just basically having a SQL file and sending those SQL operation to the terminal down using a

7:42specific shortcut. First thing I'm going to do is create uh a secret based on the credial chain of AWS S3 I just refreshed. And then I'm going to create a posgress uh secret based on the parameters and password I just created in superbase. Default database name is posgress. So I'm just going to create this uh secrets and now I can install uh

8:08ducklake and the pos extension. So if you're not aware duct db as a mechanism of duct db extension you can actually explore uh all the duct db extension using uh this command and and those are the core extension that dugb support.

8:23There is also the community extensions but this is part of the core extension as you can see and now it is installed.

8:30is going to be autoloaded when I'm triggering uh this command. So you don't need to load it manually. So how about this command? What does it do? The attach command is like attaching another database. Could be a duck DB database.

8:44It could be a posgress database or a MySQL database. And here we are attaching a duck lake. So using a posgress database with a DB name posgress. And I'm naming it medio data lake and I'm giving a data path. So this is where the actual data is going to be stored. So this can be a local file path

9:05or it can be uh an S3 path an Azure uh path. So here I create a bucket with a given prefix duck click where I have read and write operation. And before running uh this touch command we can explore uh you know what is inside this uh actual uh folder and you can see there is nothing there is no park file

9:27uh yet there I'm using the club to list basically all the file that are on this packet I do have access so as I'm getting a result so that seems working all right so let's create the attach command and something interesting to mention is that because I create a duck db secrets using the duct DB secrets manager. And by the way, I didn't leak

9:49anything. This database no longer exists when you're watching this video. Well tried. Basically, Doug DDB knows which secrets to pick up when I attach the posgress database. So, let's go for it.

10:00So, what's happening behind the scene is that Doug DB has created multiple metadata table. So we can actually look into the superbase UI and you can go to table editor and you see now I have a tons of tables here. all the specification of this table. Uh you can find on uh the documentation website but as you can see I have for example

10:23information about snapshot duck uh duck schema duck metadata that's the one I wanted to uh show you where you can actually see the data path and ducklick table which uh should be empty because we have no table yet in our database. So now I can use the media duck click you know database and I'm going to load uh a

10:45CSV file. So this CSV file uh is about ambient air quality data and just to show you uh so this is hosted on another S3 bucket you have uh a couple of information basically uh those information uh PM10 and PM25 are like measure for air quality in a given uh city. So, and what I'm going to do is basically create a duckling

11:11table based on this uh CSV file. So, let's go. So, this data set is you know roughly small. There's just 40,000 uh rows. So, now what I'm expected is that I should have metadata in the posgress database and I should have data itself as park file in the given uh location I give it which is u this one. So let's

11:35actually check that we can do now against a blob command and I should have one or more park file. Here I just have one parket file because the data is relatively small. You can expect multiple parker file if your data is really large. You can also uh inspect all the meta data tables directly from the duck db client. By the way you see

11:55they're all underscore ducklake metadata something. And so this is the table that we see. But just for convenient let's use again the web UI of um superbase.

12:04And so now if I go to dclick table now I have an entry of the dictable uh the table that I just created. I have also some information on the snapshot. Now you see just uh I just see the updates because now we have a new version of the uh duck click with one table added. You have also specific comments if you want

12:25to see actual snapshot which actually query those uh those uh table. Um and you see I have basically roughly the same information I just show you. You have also what is interesting some column uh statistic with like mean max value. So these are the matrix for error quality that I just talked before PN 10 and PN 25. So this metadata also could

12:47be interesting for various things to query directly the metadata with just a SQL and you can also actually query that from WB directly. All right, let's do another operation now. So what we're going to do is basically create an ISO 2 uh country code. So you know country code can have either ISO tree. So tree letter that's one convention or two uh

13:10letter that's another convention. So I'm just going to create a new colonizer 2. And now that it's done, I'm going to update basically uh for the country uh Germany which is D EU uh the ISO codes

13:23to for the column just created. So let's do that. So now I have updated successfully uh my table. So if I do simple from where the ISO 2 the new column created equal DE I should have all the row with uh Germany. So DU. All right. Now let's inspect against the park file. What would you expect?

13:44Because park file are immutable. So we should see more park file. So we have other park file and we have also delete park file. So if you look at this delete park file actually what you're going to get is basically information about the file path and a position and the p is basically the row position of the data

14:04that needs to be deleted. Of course when I'm doing just a query um from there I'm getting the latest test snapshot of my table that so that means also I can do travel time. So here what I'm doing because I show you that you can inspect the snapshot just like this and let's say I want to inspect uh ISO 2 when it

14:25was version one. It's not working because ISO 2 was not existing in the version one. There was just ISO tree. I created that column after and as you can see on the version two. So if I do this I'm going to get actually all the column which are null and if I had it a condition of where it is not null I'm

14:45just going to get zero because it's on the version tree that I actually updated this column. So now I should get some data indeed with the update I did before. With this modio, I hope you understand all the different concept about metadata, the data store, schema evolution, update in time travel. There is much more. But that's it for this

15:06endzon today. Let me know in the comments what you think, especially if you are already an iceberg or delta lake user. I would love to hear how you think it compares. Duck Lake is still very early in its life cycle, which means it's a great time to get involved if there is a feature you need. Now it's

15:25the moment to give feedback because the DDB team is actively listening. In the meantime, take care of your data lake and I'll see you in the next one.

15:34[Music]

FAQS

How does DuckLake differ from Apache Iceberg and Delta Lake?

DuckLake stores its metadata in a relational database (such as Postgres, MySQL, or DuckDB) instead of as JSON or Avro files in blob storage. This removes the overhead of scanning thousands of small metadata files over the network. A single SQL query can resolve the current snapshot, file list, and schema, whereas Iceberg requires multiple S3 round trips to query the catalog, scan for versions, download metadata, and load file lists before reading any data.

What are the components needed to set up DuckLake?

DuckLake needs three components: (1) data storage for the Parquet files, such as AWS S3 or any S3-compatible storage; (2) a metadata database like Postgres, MySQL, SQLite, or DuckDB to store the catalog tables; and (3) a compute engine like the DuckDB CLI to run queries. You attach a DuckLake database in DuckDB using the ATTACH command, specifying both the metadata database connection and the S3 data path.

Does DuckLake support time travel and schema evolution?

Yes, DuckLake supports both as part of its ACID transaction guarantees. Each change creates a new snapshot version in the metadata database. You can query historical versions of your table by specifying a snapshot version, and the system correctly handles schema changes across versions. For example, a column added in version 2 will return NULL when querying version 1. Schema evolution operations like adding or removing columns are fully supported.

Why does DuckLake store metadata in a relational database instead of blob storage?

Metadata operations like tracking versions, recording deletes, and updating schemas are small but frequent and need transactional guarantees, which is exactly what relational databases are built for. The metadata is typically less than 1/100,000th the size of the actual data, so storing it in a database avoids the overhead of scanning dozens of files on blob storage. This architecture makes operations like snapshots, file listing, and schema queries resolvable in a single SQL query.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

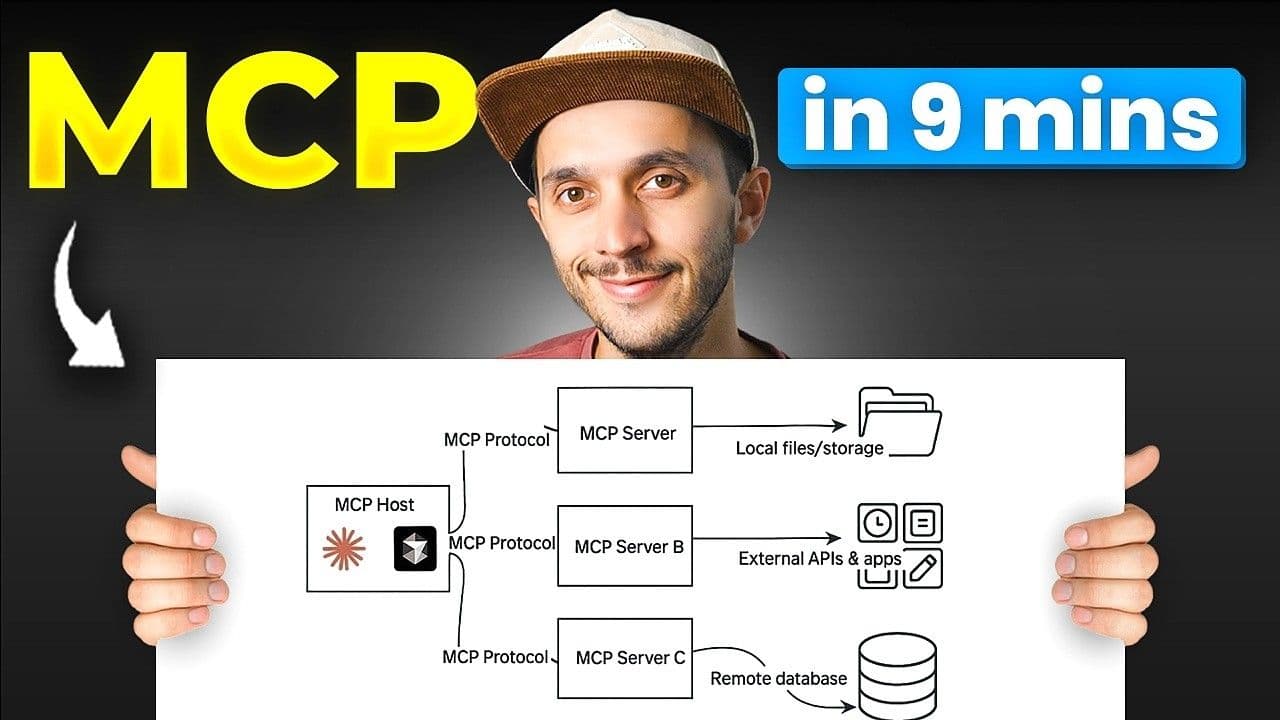

9:09

2026-02-13

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial