Leveraging AI for Creative Content at BuzzFeed

BuzzFeed's data team, led by Gilad, has integrated large language models and generative image capabilities into its products and toolkits, often pairing them with smaller, more focused in-house data sets. The goal is to enhance creative work rather than replace anyone's job: the team uses these advances to build tools and experiences that would not be possible otherwise, letting readers participate more deeply in BuzzFeed's media experiences.

Text Understanding Through Embeddings

The Challenge of Content Classification

Understanding what content is truly about goes beyond simple named entity recognition. BuzzFeed publishes diverse content that often defies traditional categorization, such as an article about an Indiana woman who brought her pet raccoon to a fire station fearing it had overdosed on marijuana. Stories like this fall outside standard topic taxonomies such as "sports" or "entertainment", so classifying them requires a representation that captures the specific story, angle, or narrative.

From Universal Sentence Encoders to Modern Embeddings

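BuzzFeed moved from Google's Universal Sentence Encoder and DistilBERT (a distilled version of BERT that retains about 97% of BERT's language-understanding performance while being about 40% smaller and 60% faster) to modern embedding approaches. It now uses Nomic embeddings, a comparatively small model with 124 million parameters, to create a dense vector representation of each article from its title, publication date, CMS tags, text, and other metadata.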

These embeddings serve as foundational infrastructure for:

- Content clustering and similarity detection

- Recommendation systems

- Trend analysis and business intelligence

- Understanding audience consumption patterns
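The talk describes building each article's embedding from its title, publication date, CMS tags, text, and extra metadata, then finding related items with "simple vector math." The sketch below shows one way that could look, assuming cosine similarity as that vector math. The `Article` fields, the input layout, and the helper names are hypothetical; the call to the embedding model itself is omitted, and the tiny 3-dimensional vectors in the usage example stand in for real model output, which is much longer.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Article:
    # Hypothetical article record; field names are illustrative, not BuzzFeed's CMS schema.
    title: str
    published: str
    tags: list[str]
    text: str
    metadata: dict[str, str] = field(default_factory=dict)


def embedding_input(article: Article) -> str:
    """Concatenate the fields mentioned in the talk into one string for an embedding model."""
    meta = "; ".join(f"{k}: {v}" for k, v in sorted(article.metadata.items()))
    return "\n".join([
        f"Title: {article.title}",
        f"Published: {article.published}",
        f"Tags: {', '.join(article.tags)}",
        f"Metadata: {meta}",
        article.text,
    ])


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either vector is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query: list[float], library: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k library items whose vectors are most cosine-similar to the query."""
    scored = [(item_id, cosine(query, vec)) for item_id, vec in library.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Made-up toy vectors standing in for real article embeddings.
    library = {
        "raccoon-fire-station": [0.9, 0.1, 0.0],
        "weird-medical-trend": [0.8, 0.3, 0.1],
        "nba-trade-rumors": [0.0, 0.2, 0.9],
    }
    print(nearest([0.85, 0.2, 0.05], library, k=2))
```

A production system with a large library would typically swap the linear scan in `nearest` for an approximate nearest-neighbor index, but the similarity idea is the same.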

AI-Powered Visual Content Creation

The Viral Barbie Dreamhouse Experiment

BuzzFeed's editorial team began experimenting with Midjourney to create images relevant to trending topics. Sarah, a content creator, researched and crafted prompts representing each U.S. state to generate "Barbie's Dreamhouse in Every State." The post went viral organically on Instagram and TikTok, with one TikTok video alone garnering over 13 million views.

Building Custom Image Generators

Following early successes, BuzzFeed developed in-house capabilities using:

- Stable Diffusion XL (SDXL) models

- Low-Rank Adaptation (LoRA) for efficient fine-tuning

- APIs like Replicate for model hosting

This technology powers interactive generators including:

- Shrek Generator: Allows users to "Shrek-ify" anyone

- Moo Deng Generator: Creates images of the viral baby hippo in various scenarios

- Mormon Wives Generator: Uses a face-swap model to place users into content themed on Hulu's The Secret Lives of Mormon Wives, with ChatGPT-written captions

- Medieval Pet Generator: Transforms pet photos into medieval artwork

- AI Emoji Contest: Enables users to create custom emojis
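LoRA, listed above, keeps a pretrained weight matrix W frozen and learns a low-rank update B·A, so the effective weight becomes W + (alpha / r)·B·A. The plain-Python sketch below illustrates that mechanism and why only a small fraction of parameters is trained. It is a toy on tiny matrices, not how SDXL fine-tuning is actually run (that would go through an existing training library), and the helper names, `alpha`, `rank`, and initialization scale are illustrative assumptions.

```python
import random


def matmul(x: list[list[float]], y: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product of x (n x m) and y (m x p)."""
    return [[sum(x[i][t] * y[t][j] for t in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def init_lora(d: int, k: int, rank: int, seed: int = 0):
    """A starts small and random, B starts at zero, so W' == W before any training."""
    rng = random.Random(seed)
    a = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(rank)]  # r x k
    b = [[0.0] * rank for _ in range(d)]                                 # d x r
    return b, a


def lora_weight(w, b, a, alpha: float, rank: int):
    """Effective weight W' = W + (alpha / r) * B @ A; the frozen W is never modified."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d: int, k: int, rank: int) -> dict[str, int]:
    """Full fine-tuning updates d*k weights; LoRA trains only B (d x r) and A (r x k)."""
    return {"full": d * k, "lora": rank * (d + k)}


if __name__ == "__main__":
    # For one 1024 x 1024 layer with rank 8, LoRA trains about 1.6% as many weights.
    print(trainable_params(1024, 1024, 8))  # {'full': 1048576, 'lora': 16384}
```

Only `a` and `b` would receive gradient updates during fine-tuning; because they are small, a separate adapter can be trained for each style or subject (a Shrek look, a medieval-painting look) on top of the same base model.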

Data-Driven Content Optimization

Historical A/B Testing Data as Training Material

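BuzzFeed has collected years of A/B testing data from its Bayesian testing system. The system is built into the CMS: writers supply a couple of headlines and image styles, every permutation is tested, and the winning combination is published to the broader audience. This historical dataset includes: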

- Multiple headline variants

- Performance metrics (click-through rates)

- Winning combinations
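The talk does not say how BuzzFeed's Bayesian system decides a winner. A common Bayesian approach, assumed here, gives each variant's click-through rate a Beta posterior and estimates by sampling the probability that each variant is best; a test that clears a confidence threshold can then be stored as one labeled training example (the candidate headlines plus the index of the winner). `Variant`, `prob_best`, `to_training_example`, the Beta(1, 1) prior, and the 0.95 threshold are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Variant:
    # Hypothetical record of one tested headline and its observed results.
    headline: str
    clicks: int
    impressions: int


def prob_best(variants: list[Variant], draws: int = 20_000, seed: int = 0) -> list[float]:
    """Monte Carlo estimate of P(variant i has the highest CTR) under Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = [0] * len(variants)
    for _ in range(draws):
        # Sample a plausible CTR for each variant from its Beta posterior.
        samples = [rng.betavariate(1 + v.clicks, 1 + v.impressions - v.clicks) for v in variants]
        wins[samples.index(max(samples))] += 1
    return [w / draws for w in wins]


def to_training_example(variants: list[Variant], threshold: float = 0.95):
    """Turn one resolved test into (headlines, winner_index), or None if inconclusive."""
    probs = prob_best(variants)
    best = max(range(len(variants)), key=lambda i: probs[i])
    if probs[best] < threshold:
        return None
    return [v.headline for v in variants], best


if __name__ == "__main__":
    test = [Variant("Headline A", 120, 2000), Variant("Headline B", 60, 2000)]
    print(prob_best(test))
    print(to_training_example(test))
```

Dropping inconclusive tests is one possible design choice; it keeps noisy near-ties out of the training labels at the cost of using less data.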

AI-Powered Headline Generation

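The team trained a model on this data using Hugging Face's Accelerate and Transformers libraries, framed as a multiclass classification problem: given the headline embeddings for a set of candidates, it outputs the probability that each headline is the winner. The workflow involves: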

- Generating 16 candidate headlines using Claude

- Running headlines through the trained model

- Using a "Battle Royale" style competition in which headlines compete in groups of four and each group's winner advances to a final round

- Identifying the predicted best-performing headline
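The bracket above can be sketched in a few lines. The trained model is not public, so `predict` is a hypothetical stand-in: any function that takes a group of headlines and returns one score (logit) per headline, mirroring the multiclass setup described in the talk. Softmax turns the scores into win probabilities, the most likely headline in each group advances, and rounds repeat until one remains. Generating the 16 candidates with Claude is not shown, and the toy predictor in the usage example is for demonstration only.

```python
import math
from typing import Callable, Sequence

# Hypothetical model interface: a group of headlines in, one logit per headline out.
Predictor = Callable[[Sequence[str]], Sequence[float]]


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def group_winner(group: Sequence[str], predict: Predictor) -> str:
    """Score the group jointly, convert to win probabilities, keep the most likely winner."""
    probs = softmax(predict(group))
    return group[probs.index(max(probs))]


def battle_royale(candidates: Sequence[str], predict: Predictor, group_size: int = 4) -> str:
    """Run bracket rounds of `group_size` headlines until a single winner remains."""
    if not candidates:
        raise ValueError("need at least one candidate headline")
    pool = list(candidates)
    while len(pool) > 1:
        groups = [pool[i:i + group_size] for i in range(0, len(pool), group_size)]
        pool = [group_winner(g, predict) for g in groups]
    return pool[0]


if __name__ == "__main__":
    headlines = [f"Candidate headline {i}" for i in range(16)]
    # Toy predictor for demonstration only: it simply prefers shorter headlines.
    toy_predict = lambda group: [-float(len(h)) for h in group]
    print(battle_royale(headlines, toy_predict))
```

With 16 candidates and groups of four, this runs two rounds (16 to 4, then 4 to 1), matching the structure described in the talk.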

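This approach combines BuzzFeed's unique historical data with large language model capabilities. It produces a far more diverse set of headline options than writers could reasonably write by hand, lets the model pick the one predicted to perform best, and helps writers learn new approaches to headlines.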

The Future of AI in Media

BuzzFeed views current AI applications as the beginning of a significant transformation in how media is created and consumed. Gilad argues that most companies are using these advances to make "subpar versions of the same static, unimaginative things," and compares the moment to early television, when broadcasters initially aired recordings of plays rather than experimenting with the medium itself. BuzzFeed instead aims to discover what is uniquely possible in this new medium.

The team's approach emphasizes:

- Continuous learning and testing

- Relentless iteration

- Finding unique applications for AI technology

- Creating interactive experiences that engage audiences

Early experiments have been encouraging: users have engaged actively with these AI-powered interactive formats (the Shrek generator alone drew thousands of submissions), suggesting a strong audience appetite for experiences that blend human creativity with AI capabilities.

Transcript

0:00 [Music]

0:16 I'm Gilad. I lead the data team at BuzzFeed: the core platform, the analytics, and the machine learning teams. Increasingly we're leveraging large language models and generative image capabilities, and we've integrated a number of them into our products and toolkits. So I want to showcase some of those examples. I'm

0:37 also going to cover how we integrate some of our editorial point of view into these models. Many times we leverage smaller, more focused data sets in those use cases. So a lot of what we think about is how we harness AI for creative purposes: how do we use these

0:59 advances in technology to build tools that we couldn't build otherwise, to create experiences that we couldn't create otherwise? I'm hoping to show you some of these examples. These capabilities don't replace anyone; we're not trying to replace anyone's job, but we're enhancing the way we operate. And we're

1:23 really excited by the capabilities that we have right now with some of these advances to have our readers participate more deeply in the conversation and in the media experiences that we create. So I'll show you some of the new content formats that we've built over the past couple of months, and I'll talk

1:44 about how these advances also serve as foundational infrastructure across a number of ranking and recommender systems. Okay, so at its core, what BuzzFeed is: we make content. We make a lot of content, we make different kinds of content, we have a range of brands that we have built and operate, and we publish everywhere

2:06 on the internet. We have pretty large websites and apps, but we're very active on platforms like TikTok, Pinterest, Facebook, Instagram, you name it. We make entertainment content, so funny, enlightening, positive content. We make content that inspires audiences to take various actions, like interacting on the site; lots of people take quizzes and polls. We are one of the

2:33 largest shopping e-commerce sites and traffic drivers to Amazon, for example, so a lot of shopping interactions happen on BuzzFeed. And then we have a pretty large newsroom under HuffPost, where we publish content that informs people around the world. We monetize our content in different ways. I'm not going to touch on that in this talk, but there's

2:54 advertising, there's subscription, there's e-commerce; there's lots of data science and analytics work on that front that's really interesting and important. But I do want to refocus and really talk about how data science and machine learning fit into this picture and into how we publish content. So a core problem that we

3:15 often focus on is understanding what a piece of content is about. Now, named entities and noun phrases can only take us so far. So why do we care about this? Ultimately, what we're often trying to do is match between our content (we have a ton of content) and users (we have a lot of users

3:34 coming to our site). And how do we do that? The better we can represent what our content is about, the better we can understand what users care about. We look at various implicit data from how users navigate our websites, and the better we can cluster, rank, and identify similar content from our large

3:58 library of content. Now, why is it difficult? You may say, okay, isn't this a solved problem? You just take named entities: this article is about Angelina Jolie. Well, it's more complicated than that, because there are certain taxonomies that we use and publish under, like sports content or entertainment

4:16 content, but a lot of our content is more nuanced than that. It's about a specific story or a specific angle or narrative. How do you classify things like this? This is a real article that we published: "An Indiana woman brought her pet raccoon to a fire station early Friday because she

4:34 was afraid that it had overdosed on marijuana, officials say." What is that topic? We don't have that topic; it doesn't exist in our catalog. But we do publish a lot of content like this. There are various weird medical things spreading on the internet, and we verify them, and they're interesting for audiences, so we

4:53 want to write about them. So some of the advances that we found with word embeddings, and with extracting different pieces from LLMs, enable us to find other content that's similar to this. A lot of what we do is think

5:15 about representation: how do we take a piece of text and condense it into a core thing that best represents that text? I can't overstate how important these shifts and capabilities have been, and how much they've unlocked for us over the past two years, I would say. The better we can represent text, the better

5:36 we can cluster content, find similar content, and recommend content to users, and the better insights and BI we have; our analysts can talk about trends and what audiences are consuming in a better way. So there are a lot of benefits to improving on this front. Now, the old way was using the Universal Sentence Encoder. This was a pre-trained model

5:59 by Google. It was a much smaller data set, but at the time it was the largest available. At the time we also used DistilBERT, which was a smaller, more efficient version of the BERT model, which was groundbreaking at the time and used the Transformer architecture.

6:18 DistilBERT, interestingly, was 60% smaller than BERT but maintained 97% of the performance. So we're constantly trying to find smaller models that we can work with, as they're cheaper, easier, faster, more performant. Now, the new approach involves embeddings. So what are embeddings? They're effectively dense, low-dimensional vector representations of

6:44 data. We often use them for text, though it could be other forms of data. Think of it as a form of compressed knowledge: you take a large data set and distill it to the core while maintaining the semantic understanding of what this text is about. You can also think of it as

7:02 one of the hidden layers from a deep neural network. If you train a neural network on a lot of text and extract one of the layers, those are your dense vectors that represent the text. Now, we use this in many ways.

7:17 As we publish an article, we create a dense vector representation of it. We use the Nomic embeddings, for example; they're considered small, with only 124 million parameters. We use these vectors to represent an item that we publish, and then very easily, with simple vector math, we can

7:40 identify the items that are closest to this article. So it's a

7:47 really interesting and quite straightforward way to maintain the semantic understanding of what a piece of content is about. Now, this is just an example of the inputs that we use in some cases when we generate the embeddings. We put in the title of the article, we put in when it was

8:09 published, some tags from the CMS, some text, and additional metadata that we think will be useful as a way to represent what this text is. So a lot more than just the title of the

8:23 article. Okay, so on the text front, that serves as a foundational capability that powers a lot of what we've been building over the past year. Now, on the image side, we've been through this really interesting journey leveraging the advances to create new interactive formats. It started with our

8:45 editorial team playing around with Midjourney. They said, "Ah, this is cool, you can create images that are relevant to the conversation happening on the internet. Our audience may be interested in this; let's see what we could do with it." They tried a few different formats and ultimately landed on this

9:07 list post about Barbie's Dreamhouse in every state. BuzzFeed publishes a lot of identity-based content, and it's really, really successful; Millennials and Gen Z on our website really love this content. And so Sarah published this list, "Barbie's Dreamhouse in Every State." You'd think it's a

9:29 fairly easy list to create, you just generate these images in Midjourney, but actually she spent a lot of time researching every state and putting together a prompt that really represents what a state is about, and put together this list. It went completely viral organically, first on Instagram, and

9:52 then a TikToker covered it and their video got over 13 million views. She created a series out of it. Others saw it and created their own versions. We saw massive spikes in Google traffic. The whole internet was lighting up, and people were sharing and

10:13 responding and interacting with this content, and that was a very clear signal for our teams that there's something here about creating these images in a way that is responsive to the day-to-day events we're covering. We were covering Barbie and the movie that had just come out,

10:31 and it's clear that our readers also want to interact and engage with this. So fast-forward a little bit and we get to Shrek. After seeing a few early successes with formats based on generative image capabilities, we started to look into ways in which we

10:52 can better integrate this into our toolkit, our internal tools. It's great to use Midjourney, but we wanted to make it easier and we wanted a lot more control over the output. So we started looking into various diffusion-based approaches and landed on one, which I'll talk about in a little bit, which

11:14 involves fine-tuning a Stable Diffusion XL model. This is an example of the kind of output that you can create from this kind of model. We made this Shrek generator. Again, you could go to the website; there's a blurb about

11:35 something that was happening at the time relating to Shrek, and then every user could just say who they want to Shrek-ify. Very simple, and we got thousands and thousands of responses. A lot of these are user-created Shreks, and there are thousands more that I could show you. Now, underlying that

11:56 capability, what powers it is effectively a way to fine-tune a Stable Diffusion model. SDXL, which is Stable Diffusion XL, is an advanced version of the original Stable Diffusion model that generates higher-quality images. And using an approach called LoRA, low-rank approximation, we can effectively fine-tune this large model.

12:25 What ends up happening is that the majority of the parameters in the model stay static; they're not being touched. But a handful of them are tuned to fit the style or the object

12:38 that is being fine-tuned. In this case, if you fine-tune a model with these images of a dog, you can create different styles of the object. So depending on the inputs to the model, you can focus it in specific directions, which was really interesting for our editorial team, who

13:01 thought they could do a lot with a capability like this, especially as they understand what's moving audiences and what's being covered right now. If they could create a format that leverages these capabilities, they thought they could really interest and excite audiences. So you're creating

13:23 these models that adapt to new tasks, and you can generate images in a new style or based on new data. Initially we built this in-house, and eventually, as more services launched, we leveraged some third-party ecosystem services. We love

13:43 Replicate, and their API is pretty well documented, so many of our models today live on Replicate. And our editorial team is having so much fun. This is just from yesterday. Who's heard about Moo Deng, the baby hippo? You must have seen the baby hippo. No? Yeah? Okay. So, a super cute

14:05 baby hippo in a zoo in northern Thailand just went viral on the internet a few days ago. Using the new Flux model, we can create baby

14:18 Moo Deng as the president, or making their debut performance on Dancing with the Stars. And we have our audiences creating all these really fun, funny visuals of Moo Deng,

14:35 a phenomenon that's going viral on the internet. The other thing that our team launched yesterday was this Mormon Wives

14:46 generator, in response to a lot of coverage that they've been publishing about the Hulu show The Secret Lives of Mormon Wives. It uses a face-swap model: you can upload a picture of yourself or anyone and Mormon-wife-ify yourself. I love these

15:11 quotes that we added. We integrated ChatGPT to create some of these blurbs: "Sip rum discreetly from a hidden flask at PTA meetings." I think I would totally be that person. So these are just two examples of ways in which we create experiences that help our

15:31 audiences participate in what's happening in our coverage, and we think that's really powerful. We're seeing a lot of meaningful responses from users. Here's just another very quick example: we created a medieval pet generator. Put in a picture of your pet and we make a medieval version of it. And

15:53 we hosted this huge AI emoji generator contest, with all these new emojis created by audiences. So those are just a few examples of interactive formats that leverage these advances, relate to something we're covering, get huge traction with audiences, and are very easy to implement. Any one of these generators could be

16:17 spun up really quickly. The final use case I want to talk about is, I think, really interesting: leveraging, again, a smaller data set that we have in-house to perform a really meaningful task, one that we care about a lot. We think a lot about how we

16:38 package content, since it's so core to what we do. We create content, but we want to package it appropriately so that it reaches the right users wherever we publish it. On our website and in apps, we've had this Bayesian A/B testing system that has been running for years, that effectively

16:59 takes a few variants of title and image and runs very

17:06 fast tests to see which is the best-performing variation of the headline and images. This capability is built into our CMS. When our writers are about to publish a piece of content, they write two headlines, maybe pick two styles of images, and then all the permutations run and the A/B test

17:28 resolves, and the winning headline and image are then published to a broader audience. And we have all this data from all these A/B tests over many years. And so

17:45 what we did is train a model based on this historical data set of all these candidates and their results, because we had the results in the form of click-through rates (CTR). So we take this data set and use it to train a model that can predict a headline that

18:06 would likely outperform another headline. The input is the headline embedding; the output is some probability that a headline is the winner. In this case we train the model using Hugging Face Accelerate via the Transformers library; it's just simple, optimized training. We set the

18:32 objective function up as a multiclass classification problem, and then we have this trained model: given a set of titles, it can predict which headline will perform best. Then what we do is go to a large language model, in this case Claude, and generate 16 candidate headlines based on a

18:56 prompt that we developed, and then we run these headlines through this model. We do it Battle Royale style: four compete against each other, then the winners of each section compete against each other, and we effectively get the winning headline. What's interesting about this approach

19:16 is that we use historical data that is unique to us, and we get a lot more diversity in headline options. It's also a tool that can help writers learn different approaches to headlines, since it would be a lot to ask of them to write 16 different

19:35 variations. So finally, I strongly believe that we're at the start of a really significant transformation in how media is created and consumed. Most companies are using these advances to make subpar versions of the same static, unimaginative things, and I think many are misunderstanding the capabilities that they enable.

20:01 It reminds me of the way early broadcasters, when TV technology came out, initially broadcast recordings of plays rather than experimenting with the medium itself. It feels a little bit like that: we're at a stage right now where there's this new medium with all these really interesting possibilities, but folks are

20:22 sticking to the same known formats. So our goal at BuzzFeed is really to learn, test relentlessly, and iterate until we find the unique, interesting things that could be done with this medium. And our early experiments have proved that our users are here for it, so we are also

20:43 here for it. Thank you so much. [Music]

FAQs

How does BuzzFeed use embeddings and LLMs for content recommendation?

BuzzFeed uses dense vector embeddings (specifically Nomic embeddings with 124 million parameters) to represent each article as a compressed semantic vector. It feeds in the title, publication date, tags, article text, and additional metadata to generate these embeddings. With simple vector math, it can identify similar content, cluster articles by topic, and power recommendation systems. This was a big improvement over its previous approach using the Universal Sentence Encoder and DistilBERT.

How did BuzzFeed build their Shrek and custom image generators using AI?

BuzzFeed fine-tunes Stable Diffusion XL models using LoRA (Low-Rank Adaptation), which trains a small number of additional parameters while keeping the majority of the model's weights frozen. This lets it quickly adapt the model to generate images in specific styles or featuring specific objects. Many of these models are hosted on Replicate, and new generators, like the Shrek-ifier or the medieval pet portrait tool, can be spun up rapidly in response to trending cultural moments.

How does BuzzFeed use AI to optimize article headlines?

BuzzFeed trained a model on years of historical A/B testing data (headline candidates paired with click-through rate results) to predict which headline will outperform others. They then use Claude to generate 16 candidate headlines, run them through the prediction model in a bracket-style tournament, and surface the winning headline. This gives them much more diversity in headline options than asking writers to manually create multiple variations.

Related Videos

1:00:10

2026-02-25

Shareable visualizations built by your favorite agent

You know the pattern: someone asks a question, you write a query, share the results — and a week later, the same question comes back. Watch this webinar to see how MotherDuck is rethinking how questions become answers, with AI agents that build and share interactive data visualizations straight from live queries.

Webinar

AI ML and LLMs

MotherDuck Features

9:09

2026-02-13

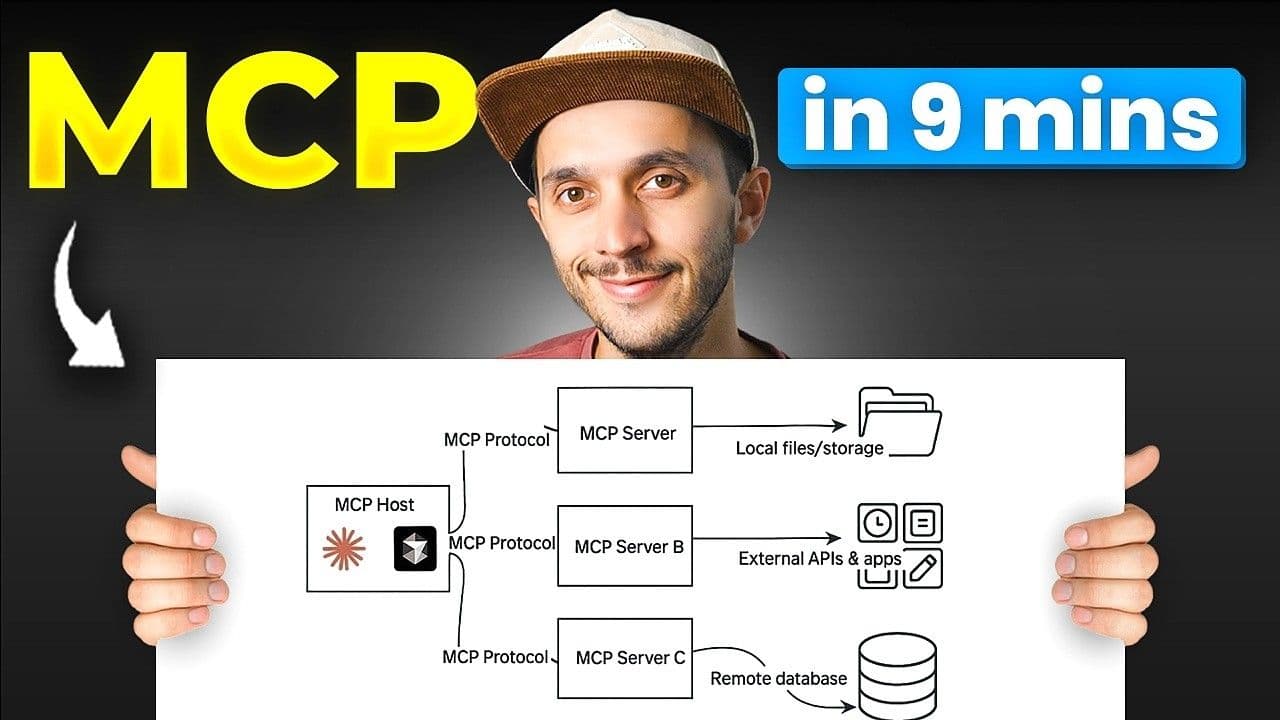

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial