Building an Unstructured Data Pipeline: ETL with MotherDuck & Unstructured.io

2025/02/20 - 8 min read

BYKey Takeaways

- Unified Storage: Learn how to consolidate PDFs, docs, and HTML into MotherDuck alongside your structured analytics data.

- Simplified ETL: See how Unstructured.io handles the heavy lifting of parsing, chunking, and metadata extraction.

- In-Process AI: Discover how to generate vector embeddings using MotherDuck's native SQL functions without external API calls.

- RAG Readiness: Prepare your data architecture for AI agents and customer-facing analytics.

LLMs have extensive abilities to process data across multiple modalities. This has elevated the potential for using unstructured data in novel ways to deliver business insights. Advancements in AI have propelled the use of data sources like PDFs, text files, and HTML pages to build AI applications, and having a reliable way to store and retrieve unstructured data is now an essential capability for modern data pipelines and business applications. Unstructured.io provides a robust solution for transforming raw, unstructured data into structured data.

This blog post introduces a powerful new integration between MotherDuck and Unstructured.io that paves the way for ingesting unstructured data into MotherDuck to make unstructured data analytics and RAG application development a breeze.

What are the Challenges of Unstructured Data ETL & Why Use Unstructured.io for RAG Applications?

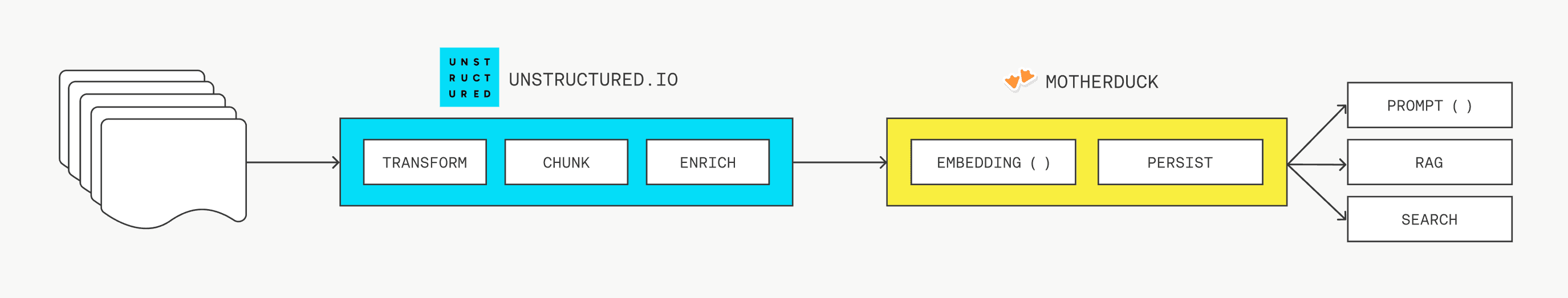

The Anatomy of a Modern Unstructured Pipeline

Before diving into the code, it is helpful to understand the data flow in a modern AI stack. Unlike traditional ETL which maps columns to columns, unstructured ETL involves:

- Ingestion: Reading raw files from S3, local drives, or Google Drive.

- Partitioning: Breaking documents down into logical elements (titles, list items, narrative text).

- Chunking: Grouping text into token-sized windows appropriate for LLMs.

- Loading: Moving this structured JSON representation into MotherDuck.

- Vectorization: Converting text chunks into embeddings for semantic search.

This guide focuses on automating steps 1 through 4 with Unstructured.io, and executing step 5 natively within MotherDuck.

Handling unstructured data for AI applications may pose several challenges, from inconsistent data formats to wrangling and keeping track of valuable metadata. Building a RAG system that processes multiple file types while maintaining a structured format for retrieval is complex, often requiring custom parsing and pre-processing. Additionally, integrating data from different sources like cloud storage, databases, and local files can be difficult without a standardized approach.

Unstructured.io addresses these issues by simplifying the Extract, Transform, and Load (ETL) process for unstructured data. Its framework converts diverse document formats into structured JSON while preserving the metadata, ensuring that critical information remains intact throughout your pipeline. In addition, Unstructured.io provides built-in chunking strategies and robust mechanisms for batch processing and handling incremental updates. By providing built-in connectors to various data sources, Unstructured.io streamlines data preparation and reduces the complexity of working with unstructured content in AI workflows.

Why Use MotherDuck for Unstructured Data Analytics and AI Workloads?

Building AI applications with unstructured data can become unwieldy and cumbersome, especially when integrating multiple data sources. MotherDuck, the efficient, in-process cloud data warehouse for analytics, streamlines this workflow by consolidating the storage of scattered information from both structured and unstructured sources into a single, accessible location, effectively eliminating data silos. Powered by DuckDB's blazing fast query engine and purpose built for analytics, it enables high-performance queries across numerical and textual data. For startups building AI agents, managing a separate vector database (like Pinecone) alongside a data warehouse adds unnecessary infrastructure overhead. MotherDuck allows you to treat your data warehouse as a vector store, simplifying your stack.

With its built-in AI integration, MotherDuck enhances text analysis using its prompt() function, allowing seamless processing of unstructured content. Additionally, vector search and full-text search capabilities provide advanced retrieval mechanisms, enabling AI applications to build richer contextual models. By using metadata-preserving pipelines, developers can further enhance data filtering, searchability, and structured-unstructured data integration within their data workflows.

Tutorial: How to Ingest Unstructured Data into MotherDuck Using Unstructured.io

To use Unstructured.io's MotherDuck destination connector, you will need the following:

- A MotherDuck account and access token.

- A database and a schema within your MotherDuck account.

- A table with the appropriate schema to store your processed data.

The Unstructured.io connector does not automatically create a database, schema, or table for data ingestion into MotherDuck. Instead, these must be set up manually before configuring the connector to load data correctly. By default, Unstructured.io uses the schema name main and the table name elements unless specified otherwise.

To ensure maximum compatibility with Unstructured.io, the following table schema can be used as a reference:

Copy code

CREATE TABLE elements (

id VARCHAR,

element_id VARCHAR,

text TEXT,

embeddings FLOAT[],

type VARCHAR,

system VARCHAR,

layout_width DECIMAL,

layout_height DECIMAL,

points TEXT,

url TEXT,

version VARCHAR,

date_created INTEGER,

date_modified INTEGER,

date_processed DOUBLE,

permissions_data TEXT,

record_locator TEXT,

category_depth INTEGER,

parent_id VARCHAR,

attached_filename VARCHAR,

filetype VARCHAR,

last_modified TIMESTAMP,

file_directory VARCHAR,

filename VARCHAR,

languages VARCHAR[],

page_number VARCHAR,

links TEXT,

page_name VARCHAR,

link_urls VARCHAR[],

link_texts VARCHAR[],

sent_from VARCHAR[],

sent_to VARCHAR[],

subject VARCHAR,

section VARCHAR,

header_footer_type VARCHAR,

emphasized_text_contents VARCHAR[],

emphasized_text_tags VARCHAR[],

text_as_html TEXT,

regex_metadata TEXT,

detection_class_prob DECIMAL

);

How to Build an Unstructured Data Pipeline for AI and RAG with MotherDuck

Unstructured.io provides a Python framework to orchestrate your ETL pipeline and a no-code interface for building data pipelines for unstructured data.

Learn more about the newly released MotherDuck connector here to get started.

First, install the MotherDuck connector and its dependencies using the following command:

Copy code

pip install "unstructured-ingest[motherduck]"

You will need the following environment variables:

MOTHERDUCK_MD_TOKEN- The access token for the target MotherDuck account, represented bymd_tokenin the Python client.MOTHERDUCK_DATABASE- The name of the target database in the account, represented bydatabasein the Python client.MOTHERDUCK_DB_SCHEMA- The name of the target schema in the database, represented bydb_schemain the Python client.MOTHERDUCK_TABLE- The name of the target table in the schema, represented bytablein the Python client.UNSTRUCTURED_API_KEY- Your Unstructured API key value. Follow these instructions to get your API key.UNSTRUCTURED_API_URL- Your Unstructured API URL.

Now let's use the Unstructured Python SDK to build the pipeline. An example pipeline is provided using the local source connector, which can help you load all the unstructured documents present in your local folder into MotherDuck. In practice, the source connector can be any of the ones supported by Unstructured.io.

Create an example pipeline using local documents

The pipeline below ingests local documents (PDFs) from a specified folder, utilizing the default document chunker.

This example pipeline can be used to process a collection of documents, including PDFs, Word files, and more, before storing them in MotherDuck for retrieval-augmented generation (RAG) applications:

Copy code

import os

from unstructured_ingest.v2.pipeline.pipeline import Pipeline

from unstructured_ingest.v2.interfaces import ProcessorConfig

from unstructured_ingest.v2.processes.connectors.duckdb.motherduck import (

MotherDuckAccessConfig,

MotherDuckConnectionConfig,

MotherDuckUploadStagerConfig,

MotherDuckUploaderConfig

)

from unstructured_ingest.v2.processes.connectors.local import (

LocalIndexerConfig,

LocalConnectionConfig,

LocalDownloaderConfig

)

from unstructured_ingest.v2.processes.partitioner import PartitionerConfig

from unstructured_ingest.v2.processes.chunker import ChunkerConfig

# Chunking and embedding are optional.

if __name__ == "__main__":

Pipeline.from_configs(

context=ProcessorConfig(),

indexer_config=LocalIndexerConfig(input_path=os.getenv("LOCAL_FILE_INPUT_DIR")),

downloader_config=LocalDownloaderConfig(),

source_connection_config=LocalConnectionConfig(),

partitioner_config=PartitionerConfig(

partition_by_api=True,

api_key=os.getenv("UNSTRUCTURED_API_KEY"),

partition_endpoint=os.getenv("UNSTRUCTURED_API_URL"),

additional_partition_args={

"split_pdf_page": True,

"split_pdf_allow_failed": True,

"split_pdf_concurrency_level": 15

}

),

chunker_config=ChunkerConfig(chunking_strategy="by_title"),

destination_connection_config=MotherDuckConnectionConfig( access_config=MotherDuckAccessConfig(md_token=os.getenv("MOTHERDUCK_MD_TOKEN")),

database=os.getenv("MOTHERDUCK_DATABASE"),

db_schema=os.getenv("MOTHERDUCK_DB_SCHEMA"),

table=os.getenv("MOTHERDUCK_TABLE")

),

stager_config=MotherDuckUploadStagerConfig(),

uploader_config=MotherDuckUploaderConfig(batch_size=50)

).run()

How to Generate Text Embeddings in MotherDuck: Preparing for Vector Search

Generating embeddings usually requires moving data out to an external API (like OpenAI) and writing it back. MotherDuck allows you to run this in-process or via native integrations, significantly reducing latency and complexity for your data pipeline. The query below demonstrates generating embeddings for your text chunks in a single SQL command:

Copy code

UPDATE unstructured_data.main.elements SET embeddings = embedding(text);

MotherDuck currently supports OpenAI’s text-embedding-3-small (512 dimensions) and text-embedding-3-large (1024 dimensions) for embedding generation.

With these capabilities, complete RAG applications can be built within MotherDuck that integrate vector search, full-text search, and hybrid retrieval into a single cloud data warehouse environment.

How to Query and Validate Unstructured Data in MotherDuck

Now that your pipeline is set up, you can run it to check the ingestion output in MotherDuck’s web UI.

Here’s an example SQL query we used to view some of the fields:

Copy code

SELECT id, element_id, "text", embeddings, "type", date_created,

date_modified, date_processed, permissions_data, record_locator,

filetype, last_modified, file_directory, filename, languages, page_number

FROM elements;

Building AI Use Cases on MotherDuck

Building AI applications or analytics pipelines on unstructured data comes with challenges such as inconsistent formats, and inefficient retrieval processes. Unstructured.io addresses these challenges by transforming raw, unstructured content into structured formats while preserving metadata, ensuring consistency across workflows. However, structured and unstructured data often remain siloed, making comprehensive analysis difficult. By integrating with MotherDuck, developers can consolidate and query across structured and unstructured data within a single data store, enriching data models with better context.

Applications relying on both data types, benefit from fast analytical querying on structured data and keyword (Full Text Search) and embedding-based vector search (Cosine similarity) on unstructured data.

Whether you're optimizing a RAG system or handling large-scale AI applications, using Unstructured.io and MotherDuck together provides a powerful solution for maximizing the value of unstructured data. Streamlining data pipelines from ingestion to retrieval enhances scalability and efficiency in AI application development and enables you to build future-proofed data pipelines.

TABLE OF CONTENTS

Start using MotherDuck now!

FAQS

What is an unstructured data pipeline?

An unstructured data pipeline is a process that extracts raw data (like PDFs, HTML, or images), transforms it into a structured format (JSON or SQL tables), and loads it into a data warehouse or vector database for analysis and AI retrieval.

Can MotherDuck handle vector embeddings?

Yes, MotherDuck supports vector embeddings directly within the database. You can generate embeddings using SQL functions like embedding() and perform vector similarity searches using cosine similarity for RAG applications.

How does Unstructured.io integrate with MotherDuck?

Unstructured.io provides a dedicated destination connector for MotherDuck. It handles the ingestion, partitioning, and chunking of files, automatically mapping them to a MotherDuck table schema optimized for hybrid search.