With this summer edition, you'll have a roadmap for your vacation time to learn the basics of being a full-stack data engineer. Fill your knowledge gaps, refresh the basics, or learn with a curated list and path towards a full-time data engineer.

After covering the essential toolkit in Part 1 (essential tools for your machine) and Part 2 (infrastructure and DevOps), this article teaches you how and in what order to learn these skills. The roadmap provides a structured path to level up during the slower summer months.

The roadmap is organized into 3 weeks that you can learn at your own pace and time availability:

- Week 1: Foundation (SQL, Git, Linux basics)

- Week 2: Core Engineering (Python, Cloud, Data Modeling)

- Week 3: Advanced Topics (Streaming, Data Quality, DevOps)

How to use this guide: Each section contains curated resources (articles, videos, tutorials) for that topic. Click on the links that interest you most. It's meant as a guided roadmap to learn the fundamentals of a "full stack" data engineer.

TIP: Learning at Your Own Pace While structured as a three-week program, everyone learns differently. Pick what's most relevant to your goals and skip sections you won't need immediately or in the near-term future. Consistency matters more than speed. Sometimes we forget how far 30 minutes a day can take us. And no, after three weeks, you won't know everything you need to know, but you'll be able to understand the problems and identify potential angles to solve them.Week 1: Foundation and Core Skills

Let's get started with building your technical foundation skills for data engineering.

You can learn the foundational skills in many ways: there are bootcamps, courses, blogs, YouTube videos, hands-on projects, and many more ways to learn them (free and paid ones), including the more advanced skills.

SQL Foundations

Probably the most important skill of any data engineer, at any level, whether they are closer to the business or more technical, is SQL—the language of data. You can descriptively explain what you want from your data much more precisely than natural language through LLM workflows. That's why it will always be a core skill. For example, in the English language, you won't specify the partitions or the exact date range (including or excluding the current month). There are many questions that you need to define in your WHERE statement or in the SELECT, which you would miss otherwise.

To get started with SQL until you master it, you can follow this roadmap below:

- Start with understanding SQL.

- Database design principles, from relational database basics to key concepts for beginners. Learn DDL (

ALTER,CREATE), DML (INSERT,UPDATE,DELETE), and relational theory by Edgar F. Codd, who invented the theoretical basis for relational databases. - Advanced SQL queries, such as Window functions for performing advanced aggregations without additional subqueries within the current query. Or, CTEs are a powerful syntax that allows for better readability, creating aliases for sub-queries, and even recursion is possible.

- ACID properties and transactions within databases such as Postgres, MySQL, and DuckDB.

- Learn the differences between OLTP vs. OLAP with a beginner's guide. Also, check out an explainer of What is OLAP?

- dbt core (tutorial and SQLMesh (tutorial: frameworks to encapsulate SQL into a structure that can be versioned, tested, and run in order, including well-documented lineage as a web page.

Version Control

If you use SQL, very quickly you'll want to work with coworkers and want to version it so as not to lose essential changes or to roll back added bugs.

Therefore, you need version control. This short chapter gives you some starting points for the most common one.

- What is version control - a visual guide to version control.

- The tool, Git fundamentals.

- GitHub/GitLab Collaboration: Learn about platforms like GitHub and GitLab for hosting Git repositories and for sharing and collaborating with others. Main features include Pull Requests and Issues for communicating your changes in a structured way.

- Learn the different git workflows. Also, check out git worktree. Although it's a bit advanced, it's good to know it's there, especially if you need to work on different branches simultaneously without constantly stashing or committing your unfinished changes before switching to another branch.

There are many more helpful topics, such as GitHub Actions/Pipelines for CI/CD or basic automation (uploading documents to a website, checking grammar automatically before publishing, etc.). However, for the first week, let's keep it simple and move on to the next chapter: Linux and scripting.

Environment Setup, Linux Fundamentals & Basic Scripting

Set up your development environment and master essential Linux skills for data engineering. This depends on your operating system of choice, too, but most data engineering tasks are typically run on servers. In almost all cases, they are executed on Unix-based systems. That's why Linux fundamentals are key to elevating your data engineering skills.

Below are the resources and roadmap to learn about these topics:

- Bash scripting essentials, starting with the basics of bash scripting, including variables, commands, inputs/outputs, and debugging. Alternatively, use this course with an interactive command line in the browser: Linux command line basics (Paid).

- Package managers (Apt, yum, Homebrew, Wget): How to Use Package Managers in Linux? (APT and YUM) and Homebrew for macOS

- SSH and remote connections: Connecting to a remote server and fixing a DAG or updating a script on the fly.

- Development environment setup: Simple yet powerful dev setups: MacOS setup with pyenv, docker, uv, VSCode, Linux (Omakub, Omarchy) and Windows Setup for data scientist.

- Cron jobs and scheduling: Basic automation scripts without the need for a heavy tool.

Congratulations, this wraps up week one. If you have watched, experimented, and taken notes, you now possess the fundamentals of data engineering and, frankly, any engineering or technical job. Give yourself some time to ponder and review, and then proceed to week two below.

Week 2: Core Data Engineering

Week two is all about the essential data concepts, primarily established principles for manipulating and architecting data flows for data engineering tasks.

Data Modeling & Warehousing

To avoid creating independent SQL queries and persistent data tables without connected data sets, we need to model our data with a more holistic approach.

This is where the concepts of so-called data modeling and the long-standing term data warehousing originate. The sole purpose of these is to organize data optimized for consumption, whereas data in Postgres and other operational databases is optimized for storage.

This chapter will teach you and point you to key knowledge to prepare you to model enterprise workloads.

- Data modeling is a significant one, and somewhat underappreciated these days. However, with the rise of AI and automation, it hasn't been more critical to learn.

- Dimensional modeling with a star schema.

- Snowflake schema vs star schema: Understanding when to use normalized vs denormalized dimension tables.

- Data normalization: 1NF, 2NF, 3NF principles for reducing data redundancy

- Fact tables vs dimension tables: Understanding measures, metrics, and descriptive attributes.

- Granularity is a key concept to understand, so your facts will not suffer from too low detail that is slow, or too high-level detail that loses crucial information when drilling down in a dashboard.

- Data warehouse design methodologies:

- Kimball methodology: Bottom-up, business process-focused approach.

- Inmon methodology: Top-down, enterprise data model approach.

- Data Vault 2.0: An approach with hubs, links, and satellites for agility and scalability.

- Advanced modeling concepts:

- Slowly changing dimensions: Handling changes in dimension data over time.

- Bridge tables and many-to-many relationships: Managing complex relationships in dimensional models.

Python for Data Engineering & Workflow Orchestration

After SQL, Python is the next most important language to learn. While it's beneficial to have deep knowledge about SQL, and you only need preliminary Linux skills to get around a server and run some commands from the command line, Python is the utility language of data. It's the glue code that connects everything you can't achieve with SQL, most notably working with external systems and orchestrating your data workflows with Python libraries and frameworks.

Orchestration and other more modern tools help you automate and organize, as well as version your data tasks and pipelines.

- Starting with a Python general introduction.

- DataFrame and data manipulation with Pandas, Polars and DuckDB. Navigating the Dataframe Landscape and DuckDB vs Pandas vs Polars for Python Developers, Video Format

- Python libraries for Data validation with Pydantic or Data Testing with pytest.

- Utilitarian Python knowledge. Connecting to any API quickly with FastAPI.

- Workflow orchestration is almost as important as the Python language itself. Apache Airflow is the biggest name. You learn about task dependencies and scheduling, as well as how orchestration and integration of data tools and stacks work through workflow management. Also, check out related DAG design patterns for guidance on designing pipelines that are easy to maintain and separate business logic from technical logic in an organized and conventional manner.

Cloud Platforms Introduction

Getting to know major cloud platform providers can save you a significant amount of time and enhance your employability because you know how to work around permissions, the services provided, and how to automate specific tasks. Ensure you select the right provider based on your location and primary use, or the company you prefer to work for.

- Introduction to AWS, Azure, or Google Cloud. Vital is permission management, such as security and IAM basics, on all platforms.

- Dedicated data services: MotherDuck, BigQuery, Fabric, hosted Airflow (Azure & AWS).

- Object Storage or blob storage setup on all platforms.

Depending on where your resume positions you, you'll do different work. But some sort of analytics through business intelligence (BI) is always involved. Visualizing your data and showing it in a way that makes sense immediately is hard; that's where BI tools and data visualization come into play.

- Introduction to BI tools and using notebooks. Others are Jupyter Notebooks, Hex, DeepNote, and many more. Check Jupyter notebooks for analytics, which is a super helpful toolkit for data analysis and iteration.

- Metrics and KPI design with metrics layers and semantics.

- Data visualization best practices. Tools like color management and a high-level grammar of interactive graphics help understand data presentation. Hichert SUCCESS Rules is another great option, although it is only available in German. Check also [Data Visualization with Hex/Preset and DuckDB/MotherDuck](https://www.youtube.com/watch?v=F9yHuAO50PQ&t=2s).

- Self-service analytics enables business people to serve themselves.

This concludes Week Two. You're ready to tackle the advanced topics in Week three.

Week 3: Advanced Topics

This final week focuses on advanced topics, including data quality and streaming. This last part of the data engineering roadmap focuses on cost optimization, data quality, event-driven approaches, DevOps learnings, and advanced data quality and observability.

Some of these topics are rarer approaches and should be avoided initially, but there's a time when you need any of them.

Stream Processing & Event-Driven Data

Event-driven approaches or integrating your data as a stream, end-to-end from source to your analytics, is sometimes a must and business-critical, especially for ad-tech or sports, where you need live results that are as up-to-date as possible.

Understanding stream processing fundamentals is especially beneficial for validating users' requests for real-time data insights, as they will often ask for it, but it's not always necessary.

- Event-driven architecture and design practices: How do they differ from batch loads? Key players in this category are Apache Kafka and Flink.

- Real-time analytics patterns: Change Data Capture (CDC) and the difference in propagating that stream compared to batch. See Postgres change data capture possibilities.

Data Quality & Testing

Implementing robust data quality frameworks and testing strategies is crucial for maintaining a stable data platform. Most often, it's quick to set up a data platform, or a stack to extract analytics from your data, but doing it stably and with high data quality is an entirely different job. The tools in this chapter will help you with that.

- Great Expectations and other data quality frameworks.

- Unit testing for data pipelines with Dagster (docs: How to test your data and pipelines in an automated fashion.

- Data lineage and governance: How to get the lineage of your data flow.

- A Beginner’s Guide for Observability. Be sure to learn about Data Contracts, a concept for defining data interfaces between data and business teams.

- Metadata Management: Data discovery with data catalogs, ratings of datasets to know which ones are actively used and of good quality. Check also the Schema registry management to handle metadata.

Cost Optimization & Resource Management

Most of the time, especially if you use cloud solutions, the price to pay for these services is relatively high. Therefore, stopping the creation of a heavy temp table on an hourly basis can save a significant amount of costs. Consequently, it's crucial to debug heavy SQL queries or wasted orchestration tasks, including orphaned ones that aren't connected to any upstream datasets or that aren't in use.

Stacks that don't run in the cloud are optimized differently. Here, you don't pay for cloud services, but to run your own. That's why you optimize for team members and tasks. As data engineering tasks are elaborate, spending time on the right tasks can save a lot of money, too.

In the past, it was referred to as performance tuning. At that time, we were optimizing for speed, which remains the case today. Similarly, if you maximize performance, you also improve cost efficiency at the same time, as it runs for shorter periods. Over time, this can result in significant savings.

- Cloud cost monitoring and optimization: Tools to monitor the cost and usage of data engineering tasks.

- Performance Tuning: Indexing, partitioning strategies, and caching mechanisms are important components, as is query optimization for better efficiency and lower cost.

- Storage tiering and lifecycle management

Infrastructure as Code & DevOps

Infrastructure management and deploying new software in an automated fashion typically occurs through Infrastructure as Code (IaC) using Kubernetes or a similar platform. That's why it's good to have preliminary knowledge about these tools and when to use them.

- Docker containerization is a good start; here's a beginner's guide.

- Kubernetes and Terraform basics.

- Monitoring and logging explained.

- Advanced CI/CD for deploying entire data stacks and data platforms.

That's it. This is a three-week roadmap with numerous courses and links to help you learn data engineering. Let's take a break and dive into the final part, observing what we've learned throughout these three weeks.

Congratulations, You've Learned the Essentials of Data Engineering

This roadmap provides the foundation, but data engineering is a field that requires continuous learning. Stay curious, build projects, and connect with the community. The skills you've developed here will serve as your starting point into more specialized areas as you grow in your career.

A quick recap of what you have learned. By the end of this 3-week roadmap, you should have learned a lot, especially the key components of data engineering. With a little bit of picking and choosing, it should have been fun to engage in new, interesting, and potentially unknown topics.

By Week 1, you learned how to write SQL to query the data you want, and some additional functions that SQL provides that you didn't know before. You know how to safely version control your SQL statements and collaborate with others on them. And you have some basic Linux skills.

After Week 2, you can navigate and use a cloud-based data warehouse on one of the major cloud providers of your choice. You learned different ways to model your data and its flow, as well as which Python libraries and helper frameworks are available.

Week 3 enables you to understand basic analytics skills and present data to clients. You know how to implement the glue code between SQL and run it on Linux using workflow orchestration tools. You have a rough idea of what real-time data workloads look like and how they differ from batch workloads. You should have an understanding of how to package production-ready code for deploying scalable data stacks using DevOps tools and methodologies. You have heard and seen various approaches to architecting an enterprise data platform.

What's Next?

All of it will help you build your portfolio and land your dream data engineering role. Each week builds upon the previous, creating a comprehensive learning experience that mirrors real-world data engineering challenges.

Throughout the entire process, it's beneficial to build your online portfolio, where you showcase your data engineering learnings, Git projects, website, and links to hackathons you participated in, among other things that demonstrate your motivation. Above all, sharing is also fun; people will reach out to you after reading your content, especially if they learn from it too.

Remember to take your time learning new concepts. If you give yourself time to digest, you learn more easily, you'll be able to recall specific terms better, and it's easier to connect the knowledge—this is how our brains learn.

Consistency is key. Dedicate 1-2 hours daily for a couple of weeks, and you'll be amazed at what compounding and consistent learning can achieve.

I hope you enjoyed this write-up. If so, you may also find the essential toolkit article for data engineers, available in Part 1 and Part 2, or check an End-To-End Data Engineering Project with Python and DuckDB.

If you want more? Check out the Mastering Essentials resources by MotherDuck, or follow their YouTube channel for additional resources. If you like DuckDB and need a cost-efficient data warehouse or data engine, check out MotherDuck for free.

Further in-depth content can be found and learned through bootcamps, events, and courses. Please don't give up; it's a lot to take in when you start. Begin with the fundamentals as guided in this roadmap, and also follow your interests. It's better to learn something that might not be suitable right now, but because you are passionate about it, learning comes much more easily. And over time, that knowledge may be put to use at a crucial moment later on.

TABLE OF CONTENTS

Start using MotherDuck now!

FAQS

What skills should I learn to become a data engineer?

- SQL (essential for querying and managing data)

- Python (for data pipelines and transformations)

- Cloud platforms (AWS, GCP, or Azure)

- Data warehousing concepts (star schema, normalization, ACID transactions)

- Orchestration tools (Airflow, Prefect, or Dagster)

- Version control with Git

Why is SQL the most important skill for data engineering?

SQL is fundamental because it's the universal language for data manipulation, supported by virtually every database and data warehouse. Understanding advanced concepts like window functions, CTEs, and query optimization is essential since much of a data engineer's work involves transforming and aggregating data at scale.

What is the difference between a star schema and snowflake schema?

A star schema denormalizes dimension tables for faster query performance, making it ideal for most data warehouse use cases. A snowflake schema normalizes dimensions into multiple related tables, reducing storage but requiring more complex joins. Star schemas are generally preferred for OLAP workloads due to simpler queries and better performance.

What Python skills should data engineers learn?

- Pandas and Polars for data manipulation

- Object-oriented programming fundamentals

- Working with APIs and JSON data

- Writing efficient, reusable code

- Testing and debugging data pipelines

- Integration with SQL databases and cloud services

Why should data engineers learn cloud platforms?

Modern data infrastructure runs primarily on cloud platforms like AWS, GCP, and Azure. Data engineers need to understand cloud storage (S3, GCS), compute services, and managed data services because most production data pipelines leverage these services for scalability, reliability, and cost efficiency.

What is a data orchestrator and why do data engineers need one?

A data orchestrator like Airflow, Prefect, or Dagster manages the scheduling, dependencies, and execution of data pipelines. Data engineers need orchestrators to ensure pipelines run reliably at scale, handle failures gracefully, maintain data freshness, and provide visibility into pipeline health and performance.

PREVIOUS POSTS

2025/07/17 - Ryan Boyd

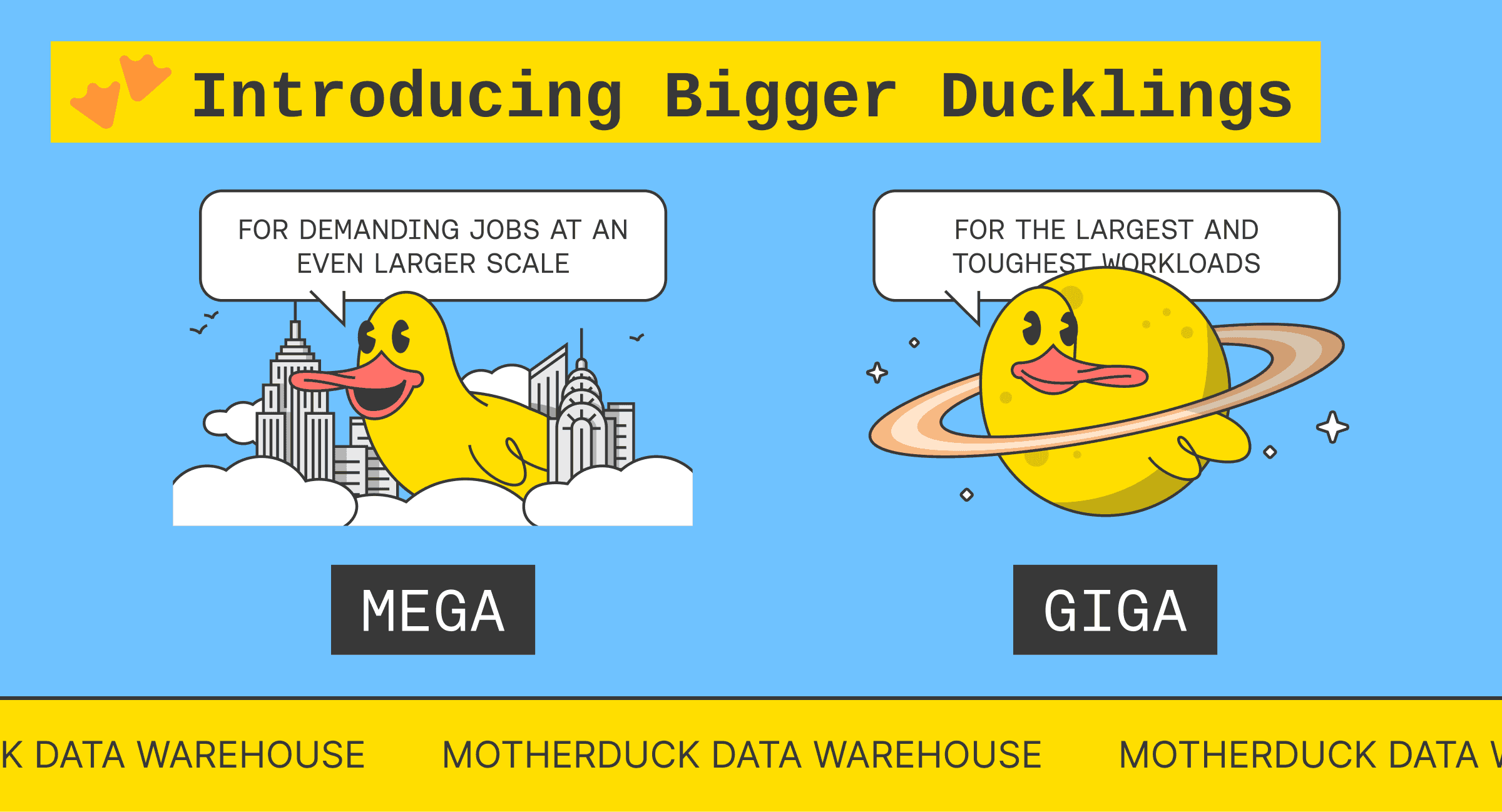

Introducing Mega and Giga Ducklings: Scaling Up, Way Up

New MotherDuck instance sizes allow data warehousing users more flexibility for complex queries and transformations. Need more compute to scale up? Megas and Gigas will help!

2025/07/17 - Ryan Boyd

Small Data SF Returns November 4-5, 2025: First Speakers Announced

Conference with two days of practical innovation on data and AI: workshops and talks from industry leaders, including Benn Stancil, Joe Reis, Adi Polak, George Fraser, Jordan Tigani, Holden Karau, Ravin Kumar, Sam Alexander and more!