A duck in the hand is worth two in the cloud

2024/11/08Featuring: , Guen PrawiroatmodjoData professionals often find themselves in the "uncanny valley of data." Their datasets, ranging from 10 to 200 gigabytes, are too large for single-machine tools like Pandas, leading to slow performance and out-of-memory errors. Yet, these datasets are not so massive that they justify the cost and complexity of a distributed big data cluster. This gap has historically forced analysts into awkward workflows or onto expensive, over-provisioned infrastructure. However, the landscape of data processing is changing, driven by a "Data Singularity"—the point where modern single-node hardware, with its exponential growth in CPU cores and dramatically lower storage costs, is now powerful enough to handle workloads that once required large clusters. This shift creates an opportunity for a new analytics architecture that combines the power of a single machine with the scale of the cloud.

This article explores a practical demonstration of this new paradigm. Using a 160GB dataset of 1.7 billion bird observations, MotherDuck software engineer Gwen shows how to interactively explore, prepare, and visualize this massive dataset directly from a Jupyter notebook, blending local and cloud computation into a seamless, high-performance workflow.

The Modern Stack for Laptop-Scale Analytics

The foundation for this powerful analytical experience is DuckDB, a single-node, in-process OLAP query engine designed to take full advantage of modern hardware. Its columnar architecture and automatic multithreading capabilities allow a single query to saturate all available CPU cores on a machine. It also provides excellent support for reading various file formats, including Parquet and CSV, directly from local or remote storage like S3.

MotherDuck extends DuckDB's capabilities into a modern cloud data warehouse. It is not a traditional warehouse that requires moving all work to the cloud. Instead, it operates on a seamless, hybrid execution model. MotherDuck’s extension for DuckDB allows computation to occur both locally on the user's machine and in the MotherDuck cloud. The system intelligently routes work to the most efficient location. Heavy computation against large, cloud-resident datasets is pushed to MotherDuck’s backend, while subsequent processing on smaller, filtered results can run locally. This platform makes the transition completely seamless to the user, who simply executes SQL commands without needing to specify where they should run.

Prepare a 160GB Dataset for Interactive Analysis

The demonstration uses the eBird dataset from the Cornell Lab of Ornithology, a remarkable citizen science project containing 1.7 billion global bird observations. In its raw, compressed text format, the dataset is approximately 160GB.

The one-time data loading process is a perfect example of DuckDB's efficiency. The workflow begins with a CREATE TABLE AS SELECT (CTAS) statement. This single SQL command instructs MotherDuck to ingest the data by using DuckDB's powerful read_csv function, which can read the compressed text file directly without needing a manual decompression step. During this ingestion, light transformations, such as casting data to the correct types, are applied. This process efficiently converts and stores the raw text data in MotherDuck's optimized cloud format, making it available for fast, interactive querying from any connected client.

Explore Billions of Rows Interactively from a Notebook

With the data loaded, the analysis can begin directly from a familiar Python environment. Connecting to MotherDuck is as simple as providing a motherduck: connection string to the DuckDB client. Once connected, the entire 1.7 billion row table is ready for exploration.

The initial queries confirm the scale of the data and the performance of the system. A simple COUNT(*) query confirms the 1.7 billion row count. A subsequent query to find the most frequently observed birds in Washington State in 2023 runs against the entire dataset and completes in just 14 milliseconds. This incredible speed, with the user's laptop not even breaking a sweat, showcases the power of executing the heavy lifting on the MotherDuck cloud backend.

Visualize Geospatial Migration Patterns with H3

The analysis then turns to a more complex task: visualizing the migration patterns of the Snow Goose. This workflow perfectly illustrates the hybrid model in action.

First, a query is executed in MotherDuck to filter the 1.7 billion observations down to only those for the Snow Goose in the last year. This is the heavy-lifting step that leverages the power of the cloud. The resulting, much smaller dataset of around 100,000 rows is then pulled down into a local temporary table within the user's DuckDB instance.

With the relevant data now on the local machine, the H3 geospatial library is used to prepare it for visualization. H3, a standard created by Uber, divides the world into hexagonal cells. By converting the latitude and longitude points into H3 cell IDs at a specific resolution, the data is effectively downsampled. This local aggregation step makes rendering a complex migration map in a tool like Plotly fast and efficient, as the browser only needs to handle the aggregated data points rather than every single observation.

Accelerate Discovery with Natural Language and AI

While fast queries on raw data are powerful, the next level of analytical acceleration involves reducing the time it takes to formulate the right questions and enrich the results. To this end, MotherDuck integrates AI capabilities directly into its SQL dialect.

First, the prompt_query function enables natural language-to-SQL generation. Instead of manually writing a complex query to find a bird species with a long migration path, the analyst can simply ask a question in plain English, such as finding birds that winter in Mexico and summer in Canada. The system automatically generates the corresponding SQL, saving significant time and effort, especially for users less comfortable with complex SQL syntax.

The second pattern involves using a large language model (LLM) for data enrichment. In the demonstration, the goal is to identify which of the most-observed birds in Washington are duck species. This manual research, a process that could otherwise take half a day, is now automated. A SQL query first retrieves the top 100 most common bird names. The query's results, a list of bird names, are then passed directly to the built-in prompt function, which asks the LLM to identify the ducks. The entire process takes about 10 seconds, turning hours of manual work into a single, efficient query.

Bridging the Uncanny Valley of Data

The ability to analyze 1.7 billion rows from a laptop is more than a technical curiosity; it represents a fundamental shift in how data analysis can be done. The combination of DuckDB's single-node performance and MotherDuck's hybrid cloud architecture empowers individual analysts to tackle large-scale data problems that were previously out of reach without specialized infrastructure.

This approach closes the gap in the "uncanny valley of data," making large-scale analytics more accessible, interactive, and cost-effective. By intelligently blending local and cloud resources, data professionals can stay in their preferred environments, like Jupyter, while harnessing the power needed to explore and derive insights from massive datasets.

Transcript

0:07are we ready to go yes okay we're going to go hey everybody um I'm Jacob here

0:13and uh I'm going to talk about a duck in the hand is worth two in the cloud and how we do data preparation analytics uh with duck B so quick quick set of intros here I'm Jacob I'm uh working devil at mother duck and uh I also have my colleague Gwen come up here in a little bit and uh do a demo for us so it's

0:32going to be a lot of fun she's software engineer um so I think what I want to do to start here is really just talk about how we got to where we did today and this is kind of the Big Data story um if you look at this dot this is around 2005 and uh our friends at Google did us

0:53a all the big favor and started writing about the thoughts they were on into like Google file system like M produce like big table and something that was happening at this time is if you look at this trend I don't think we even need like a linear regression we can just do this right um this is clock frequency

1:12there was certain assumptions made about architecture around this time um uh that led to design decisions made uh in the software that was produced to handle big data and what happened is the trend actually didn't hold um our our CPU frequency was flat

1:30so what we did instead um in order to you know hold up Mo's law is uh we started scaling the number of cores also around that same point in time that CPU frequency kind of flattened out and so now we have logical cores increasing uh again mors law continues to stay true kind of in a strange way a

1:50way that we've never seen before um at the same time storage uh got a lot cheaper um from 2005 to today it went

2:00you know down in price from $2 per gigabyte to uh a Cent and if uh some

2:06colleagues at AWS are to be believed the actual price for gigabyte realize there is much much lower than this price so um

2:15we also have this happening too which is storage getting faster okay so what this means is things that used to take a lot of time and need to run out a bunch of nodes because we need to run a bunch of hard drives in parallel can now just run on a single hard drive and as it turns out that means we

2:29buil really big nodes I pulled this off Vantage sh the other day um this is the biggest nodes uh available in AWS today

2:39um I think they're actually were first built to run sap Hana um which is coincidentally in memory analytics um yeah we have like 32 uh terabytes of RAM and almost 900 vcpus on these things so like pretty pretty big pretty big single modes um so we have an observation here right number of cores goes away Up Storage price goes way

3:02down if you know these things are true or you notice these things being true what kind of software would you build right um You probably wouldn't build software that needed to run on a bunch of nodes so I'm going to introduce duct TB so uh this is a single node in process

3:21olap query engine um it's very efficient it has a lot of um Innovation placed in the way that it automatically multi-threads so uh what this means really is that uh you can saturate your node with a single query for those of you that are familiar kind of with database Primitives that's actually really hard to do with like postgress or

3:44SQL Server um by Design By the way you want space for transactions um dtb saturates on a single query um it also has some really nice uh features and support around reading files in a bunch of different places in bunch of different formats geospatial data frames I know it's kind of come up in a few other talks so I w't

4:05go into too much detail um but that's there's some really nice ergonomics there um and again it's all in SQL so no switching languages right a SQL you can select star out of an S3 bucket for example so what we're doing at mother duck is rethinking what data warehousing workloads look like for the real data workloads that you have um there's no

4:27distributed overhead we are aggressively sing single node um because of that uh we find it easier to accelerate business value because we can tie across all of the parts uh of the kind of problem stack in SQL and of course because it's powered by duct TB we get all of the fun stuff that they nerd snipe each other on

4:48um so what does this mean for us I stole this from uh from a slide that Hest the founder of duv put together at posit conference earlier this year which is we are approaching the data singularity we our Hardware now exceeds the amount of data that we can produce on a daily basis so we have reached the data sing

5:08Singularity that's where we are at and so uh from here we're going to jump into a demo that Gwen is going to take us through cool thank you Jacob all right so if you want to code along with us or look at this later uh this is so you all we can find the code so what I'm going

5:24to show you today is um is how to do a large uh data set out how to process a large data set on your laptop so you're probably familiar with petas right so if your petas data frame is like 10 megabytes or something it's really well behave it's very nice actually very expressive beautiful it works great um

5:42but once you've get to like 10 gigs above things start to get a little awkward um you know it should work it looks like it works but it doesn't quite feel right so that's what I call the uh The Uncanny Valley of of data there's this uh this kind of area kind of in between where you go from small data or

6:01tiny data to Big Data it's kind of like you know it's not quite clear what tool to use so as Jacob mentioned computers have gotten more and more powerful single single nodes are actually quite performant most people have like a pretty powerful laptop with you know over 10 cores and uh you know 30 or more gigs of RAM so you know why can't we do

6:24like 100 gigs or like 10 gigs like comfortably on a laptop so I'll show you you a data set that is procured by the Cornell lab of ornithology uh you know

6:37any any birders any birders yes birders right so there's an app called Merlin that you can download if you see a bird in the wild you can identify it and and log it and it's going to end up in this database so you can download it it's called EBD it's a citizen science project so everything is available you

6:56just have to sign you know you're not going to use it to hunt Rams yeah front

7:02front of location like the breeding location you're supposed to you know use it for for personal re so um so if you you know if you you you're interested you can actually just go and download it right now it's about 160 G gigs of uh compressed data tx. gz file uh so what I did is I downloaded that file uh and I'm

7:21going to show you now how to do some filtering and like on my laptop without having to you know go to like a cluster or a large expensive uh data warehouse or or post installation somewhere um and then I'm going to do some interactive prepping and pulling it into a data frame turning into a nice small data

7:41frame that I can visualize um so just all you need to to run this demo is duct DB um I li of course you need plotly too there are a few other to to Wrangle the data all you need is duct DV uh the way you connect to a duct DV file is like this dub.

7:58connect uh and then you put the path of your your file so it's similar to SQL light you just connect to local database if you want to connect to mother duck which is uh the cloud version of of of dotb you can actually use uh this mother dock MD colon uh connection string um so I'm going to show you that right now

8:18here um let me just see is the font size

8:22good for everybody yes all right so um just

8:28screen this so what I did is I downloaded this file when you go to ebird.org and you download the data it gives you AAR archive file so I I unarchive this and it turns into this txa gz file um it took me about 5 hours to download this this was just uh like on a pretty fast internet connection um and then I uh

8:51loaded it into mother duck so what I did here is I ran duct DB just in the in the command line um this is all just I did this before the workshop I'm not going to show this L because this takes about an hour so that's one one thing you need to know about about databases right so

9:06once you've loaded your data into the database all the queries after that are going to be super fast so this but this is a an inial low step you're going to need to do so what I did here is I just did what we call a c task create table as EBD that's that's what this database called eer eer Data uh and then I took a

9:23subset of some of the columns in there so there's a global unique identifier which is kind of like if a person submits an observation in Marland for example the common name of the bird the scientific name of the bird subspecies information and then here I'm doing a little bit of data light light data cleanup so those of you are data

9:38Engineers who know that this is never what it looks like there's lots more uh cleanup to do usually on your data but you know we're lucky here that eird has done a lot of that work for us uh but observation count sometimes it has an X and sometimes it has a number in it uh because it's a txt file right there's no

9:54type check so now I'm loading into a database I'm actually going to cast this to an integer uh if it's an X I'm going to say okay it's zero birs zero OBS um

10:05then I'm going to get the country at latitude and longitude which I'm going to use for my visualization and the observation date U then dctb has uh like what Jacob mentioned duct has some really really amazing ergonomics for reading different types of files so there's this function called read CSV um which I can use to directly read this

10:23compress txt file so I actually you know I was getting ready to start like unzipping this and be like okay do I have enough space you know on my hard drive actually don't have to do that I can just read it directly from from the file and it's actually faster too so that's very convenient okay so I did

10:39this take about an hour now it's all in mother duck so mother duck all you need to connect to mother duck is a mother du token you're going to get that from the app if you go to app to.mm mother.com settings um it lets you create a token you can set the lifetime of the token you can have it expire in a day expire

10:56in a year never expire up to you um if you use it from python typically what I do is I uh create an environment variable called mother dark token um I use a library called M for that so I just create a file that's called in there I put I literally put this text mother. token equals and then I pie a

11:14token there you can also you know if you're on Windows you can like export it to your environment variables uh before you start Jupiter um but yeah those you're familiar how that works so okay so now I'm going to go ahead and connect to mother duck um so I import duct TB and then again like the previous slide

11:32all I need to do is connect to my du. eir so I already created that database so that's what I did here actually so let me just here create database Bird that's what I ran before forgot to add that um and then that's has my data so I'm going to connect to that so now for this demo in particular I created this

11:52connection wrapper um so um there are a lot of people who are usually only in the like the p Pyon ecosystem we're not super familiar with SQL so I'm going to just basically show you some some nice convenience functions but I actually CU of a sh of hands who you are uh pretty comfortable with SQL use it in your

12:11day-to-day job okay that's actually most people here um who of you mostly use like data frame libraries like um pandas

12:21poers okay great so there's actually a lot of lot of SQL experts here so let's let's do that okay so I just I just created a query here under tables um I can actually just show you what it does here is just select database schema name from show all tables um and that is just running so I'm seeing what database

12:43I'm in uh what the name is and then I made another convenience called describe so what that calls is it just calls describe EBD um it gives me so I actually I can also just now that I know everybody in a SQL here um I can just do this SA thing uh easy peasy there's also a really nice uh uh uh uh Jupiter uh Magic

13:07command you can use it's called Magic D.B so then you type percent percent dql

13:13and then you can start typing SQL directly in Jupiter um so yeah so again so here are all those all those columns I loaded so yeah all the types look good great um so let's actually see how big this data set is because I know it's like 160 gigs right so let's just do a count star so here I'm using the deductive

13:31relational API that's all built in um looks like it's about 1.7 billion rows so that's a lot of bird observations and that's from all of history for the entire planet right okay so now now you know typically what I do now I want to kind of see an example of the table so let's get a subset of some of the some

13:49of the columns here so okay there's a lot of cute bird names out we repl wingspan board game yes okay you're my people yeah I this just fun there's so many birds there's 10,000 Birds in here they all that cute names um so basically what you can see so there's United Kingdom Colombia you know got 2024 and

14:10it keeps going so okay now we kind of know what the shape of data is so let's start so so Jak and I live in Seattle that's where our HQ is mother duck um and there's a lot of birds in Washington state and sometimes I look up and I'm like hey what is what is this bird so

14:23I'm just going to use um this database and see what are the five most frequent observed bird species in Washington state so for this I'm going to use the pisar API again if you're comfortable with SQL you can just run a SQL query here uh the pisar API is under dub.

14:42experimental um and um it lets you use a data frame uh like libr like language so again I create a data frame I pass in the relational uh uh expression for the for the EVD table here to create like a spark wrapper data frame and and I can do this kind of chain you know like filter. groupy doag

15:04Etc and I'm going to first filter I'm just going to see for last year because you know all of time you know I I'm not super interested in that it's going to be slow as well we see United States state Washington I want to group by the common name and then I want to get the sum of the observation count and I want

15:21to just take the top five and here you go so the most commonly observed bird in Washington state is the snow goose all right so those kind of white geese that That honk and and fly in uh in in groups

15:35flops um so this very handy so hey there's another cool thing that we support a mother duck and that is natural language to SQL U translation so I've made this this convenience function called prompt query uh which you can see here what it does it just runs pragma prompt query so again it's a very very simple SQL command uh that I can run and

15:57then I can pass a sentence here say get me the top five common names of total observation C of birds in Washington state in 2023 it's going to be a little bit slower than than just writing the pure SQL because it needs to actually call the language model but yeah under the hood so this this prompt SQL

16:13convenience method it it uses the uh the uh prompt query U or prompt um function table function and it lets me actually inspect what is the SQL that's being generated so it's more or less what I can write this myself right but if you have more you know or if you have users or colleagues in your space that are not

16:32the most comfortable in Sequel this is a really nice tool to be able to offer them right okay so now I know it's the snow goose so let's look at all the observations of snow geese last year sorted by month so again here's my query I'm again using spark data frame API but again use whatever you're comfortable

16:47with and a filter by the observation day being last year and then for the snow goose that's the common name and then I'm going to now create a new column called month I'm going to Alias Alias set month because I want to see month by month where these birds have been migrating so I'm going to run this oh

17:04it's already done so that's one thing that that Jacob told me is when he is duck TV for the first time he couldn't believe it was so fast he was like did actually my Cay actually run so I I was like I just had the same experience it's 14 milliseconds right um and this is almost this giant two 1.7 billion row

17:23data set that's pretty cool right my my laptop is not even sweating this is all running in the cloud dark TV and mother okay now I'm going to store this in a temp table so temp tables are a feature in DCTV um they are basically the same as creating a table in your memory data in your inmemory database which just

17:40like a convenient shorthand let me again just let you peek under the curtain here to show you what what is the actual um GE 2 temp table it just runs a create orl temp table okay it's a littleit hard to see I'm just going to type in here um mark down because it's easier

18:01um so it's create or replace temp table word OBS as and then here I put my query right so I'm going to do that and I can here because it's a python relation IP I can just pass that relation in here so I created a relation here so that's what the uh the pisar exteral API does it

18:23just creates a result which I can get the actual relation so I just wrun this relation here that's going to give me back that table there you go so this is a kind of a so kind of a lazy loaded preview of the data so I canect so like does everything look good um anyway I'm going to go and and create that temp

18:44table now and I'm going to go and inspect that so again com. table again it's the relational API it's going to give me a lazy lazy preview so again so these are all the snowgies all the observations in the entire world in the last year um and then I'm going to see how many RADS this that so that's that's

18:59pretty reasonable right it's like 100,000 rows that's comfortable so now I'm going to go and visualize these results I'm going to use the H3 uh uh Library so those you're familiar with geospatial data H3 is a library created by or like a standard created by Uber it uh divides the world map into hexagons I can pick uh what

19:22what kind of resolution I want to use so high is very fine so very small hexagons and zero is very large and course so I'm going to use six because that's sort of the the right uh sort of uh um resolution that I want and I'm going to go and down sample my data just so my plot is a little bit faster right

19:39because I'm going to use plot Le and every single point um is going to have some amplitude and it's going of do a lot of computation here in the browser with JavaScript um so U yeah so what I'm doing here is I'm converting the latitude and longitude to a cell ID which is again it's splitting up the

19:56world into hexagon with this uh number six which is like seems arbitr that's the the resolution I'm using and then I'm going to convert that back into latitude longitude and now I'm going to go and plot that so this is like again this is a very well behaved happy pandas data frame and I go plot that and here

20:15we go so these are all the snowgies me zoom out again a little bit uh I made a little animation here so it will go by months so as you can see now uh snow is migrating up north in the summer and they're coming coming back so this is pretty cool right this is just from people uh open data set people in in the

20:34US just seeing a snow goose and being like okay I'm going to put the in eird so thanks to those folks burgers for their service all right so the next thing I'm going to do is I think this is pretty cool right I can see the Gees like moving I'm going to see if there are any other birds in the eer database

20:50that travel further distances let's let's look at some birds that mostly spent their time in Mexico during the winter and in Canada during the summer so I'm going to be lazy and I'm just going to go ask my AI um model to generate sequel for me okay it's the semi palmated Senpai bird okay sounds like a really cute bird um I think it's

21:16here yeah Cornel you know orology department has so this is the bird looks very little but it's mighty um I'm going to go and I created the function get observation which just runs everything that I just showed um it it filters down the data it gets all the bird observations for this particular species this year and then

21:35I'm going to go and pl it on my open street map again there we go so in the winter they're all in South America so let's let's run my little animation again and there we go they go all the way up to the North and then they come back down and now let's let's see cuz I'm not an orthologist so I know nothing

21:52so I'm going to go look and it's actually correct they are indeed they're hanging out here at the coasts South America then they migrate through Cal us up to the north of Canada where they go for breathing so it's pretty cool let me just look at it again cuz it's very satisfying okay Birds okay so uh

22:17lastly we love ducks and mother duck and a duck we just love Ducks so one thing that mother duck also supports is a chat GP or ch GPT for 40 model interface so

22:31again let me let me show you what that looks like in SQL so it's just select prompt so is pure SQL uh that you can run to generate natural language so I'm just going to ask what is the scientific name for a duck actually there are a lot of here it says depending on the specific species duck refers to Broad or

22:51water file in the family an the Mard is

22:55very common so hey one interesting thing is LM have information in them they're maybe not a database in the same sense that you can you know look things up but hey maybe we can do like a like a join on this data you know maybe can use the data that's in the llm join it with our data from our database and see if we can

23:13get something interesting so here I'm going to do this this is now my promps right it's going to say give me the top 100 common names observation year for Washington State 2023 by a total number of observations then aggregate them into a single column in this form so going to just do column name and value aggregate all the rows and then Preen this prompt

23:33here so I'm going to use natural language uh uh to SQL generation to create a query that's going to give me data then I'm going to go paret back into a prompt into a string which I'm then going to feed back into my llm right so I'm here I'm using a combination of my text to SQL and my my

23:53actual um gbt model so I'm going to run that again this is looks like last time around it's about 6 seconds so definitely faster than I can run than I can create sequel so here you go so this is my prop now right so here user asked the following question which top 10 duck species were observed most

24:12frequently please answer the question given the additional information here is the additional information so this is coming from eird right so eird knows I'm going to assume that Chad GPT was not trained on eird but now I'm giving it some info from eird okay so I'm going to go do that so I'm using uh here right I'm using a CTE so with prompt Tex as

24:33that thing I just showed you select and then that prompt table function that we support in mother deck uh from prompt text and then I'm going to just fetch the first result and I'm going to print thater takes about 5 Seconds to run and here we go um I have to admit I

24:53actually did this without llms before and it was a pain right like I spent half a day like uh uh uh grabbing like

25:02pages from Wikipedia about all the duck species in the world it it just took me like a lot of time and this took me like 10 seconds like the LM already knows everything in Wikipedia almost um so instead of me having to create a whole new table with you know all these different duck species and to join that

25:19on the scientific name and that was actually a big pain because there's apparently I'm not an AR apologist right so just stop me if I'm wrong but there's apparently multiple uh authorities who have different opinions about what scientific names for ducks should be so I had like I had like three columns with different you know scientific names and

25:36then I had to do a join on all three of those to make sure I didn't miss anything it was a pain this took me 10 seconds love it very excited about this so yeah so the American region so just for for funsies I'm going to see what is the migration behavior of that bird I'm going to use my uh that that function I

25:53created took one second bam here we go

25:59the American WID where is it going of course it's going north that's where birs going or in the win sorry anyway that's it so that's the demo I wanted to show you this we're very excited about um how you can use duct B and mother duck uh to interactively explore large data set that don't quite fit on your laptop but

26:23still use your laptop for computation and uh congratulations you are now holding a duck that easy thank you all so

26:37much got spot if you can reach out to us uh Jae and I are both available on our mother du Community slack so please come chat with us and we've got 10 minutes for questions any

26:55questions um I I was just curious about how the uh like llm gen component works

27:02so I just like taking a quick look at this and like um I guess it's like a plugin that you have for duck DB and it was inspired by but like I was curious like did you do anything specific for duck DB's cql generation or is it just kind of more did General SQL generation work pretty well and like do you ever

27:19run into errors with the SQL Generation Um so yeah so we didn't explain this but um mother duck Works in any duct environment so wherever you can duct DB you can mother duck so the way this works is uh duct is a really beautiful framework for extensions so we created an extension called mother duck that gets automatically installed and

27:39loaded in your duck DB installation your duct is when you connect to one of those MD colon uh here like this MD colon and

27:48then for example myor DB is like your default database but you get um the proms that I just showed you um which is

27:57oh snap yeah plot Le crashes sadly okay

28:03so this here uh all the way at the ends this select prompt where did we go here is actually down here here this

28:17this is a table function right that gets loaded into your duct TB instance when the mother duck extension loads and it gets evaluated on the mother duck surface so we run a service that actually has our model running and that evaluates that prods now to ask your to answer your question about like did you run into any challenges right uh text

28:40text to SQL I think uh Jacob you want to answer that question yeah sure I can answer that question um so uh we actually do train a model um that's available on hugging face for doing natural language sequel um uh we're working on training actually the next version of that um we do keep marks up there too uh gp4 is marginally better

29:02than the model we've trained um as an example so um but we trained it probably about uh a year ago so we definitely do track that we track that for accuracy um it's pretty good because it's trained specifically for SQL specifically for our dialect of SQL um but you know obviously uh I think our point of view

29:21is not that natural language to equel replaces the analyst is that it helps kind of bridge the gap in terms of problem solving and so if you can help accelerate you know what you're thinking about as a business user with natural language and then kind of partner with you know your data scientist or your data analyst team um we think that's a

29:38very strong pattern for helping kind of data team scale I'm just trying to understand a little bit better where the computation is happening or the data is in the demo did you upload the data to the cloud or to mother and that's why was yeah I'm going to repeat the question just for the um could you repeat the question

29:59yeah no I can repeat it I can repeat it so I think the question was like where is the computation happening where's the data um and is that is that fair where is that okay great you want to take that going or you want me to okay okay so uh in this case uh it's the answer is a

30:15little bit of both so uh dub uh as we've implemented with a mother extension has dual execution so there's some execution happening locally some happening in the cloud in this case most of the data is in the cloud uh the kind of First Step that we did is we downloaded that data set um actually using an ec2 instance uh

30:34because it's so large and um kind of a slow down though and so we just did that and then and then uh it's as simple as running that select query that that g show to make it available in mother duck right um here let me go can I switch over to that so we'll go to the very top

30:50here okay so this statement right here

30:54took that took that file that was on kind of ec2 instance and it put that into other so when we're running these queries it can we can uh we can join kind of combination of the of the cloud and local data in this case most of the I think all the data was actually in the cloud because the promp function runs in

31:11the cloud also but it's returning I mean it's in this in this demo um uh it's effectively the duck interaction piece is kind of like a thin client and then the panda data frame stuff is happening all over yeah so yeah so what what basically Jacob was saying the the first part is in the cloud so this initial

31:31query here where I just look at get the

31:35uh sorry it's this one get all observations for a particular bird in a particular year that's happening on mother duck then I pull that down save it into a temporary local local table and then I do this postprocessing where I do the down sampling for uh for the plotting so this is a really good question because it's so seamless right

31:56like you don't even like you just do condu SQL and it's like it's so seamless that you always don't know anymore what is running locally on in a cloud um but that's the power of our platform is we make it very um easy to go back and forth between cloud and local and it basically does everything for you it

32:13figures out for you what is faster to run locally and what is faster to run in Cloud we can take one more

32:23question can you speak a little bit about the schema inference at the beginning sure yeah yeah so this is a tabular file it's a a.txt file it's basically a CSP right it's just common separated values um and uh yeah it's automatically inferring the types here I can re CSV does State optional input arguments so uh you can actually

32:52explicitly does that work yeah yeah you can explicitly specify what the types are um but yeah in this file I just

33:08it okay that all thank you that was fun cool thank you

FAQS

How can DuckDB and MotherDuck handle large datasets that do not fit in laptop memory?

DuckDB and MotherDuck use a dual execution model where heavy queries run in MotherDuck's cloud while lighter post-processing happens locally. In the demo, a 1.7 billion-row bird observation dataset was loaded into MotherDuck, queried in the cloud in milliseconds, and results were pulled down to a local temp table for visualization with Plotly. This split between cloud and local execution lets you work with datasets far larger than your laptop's RAM.

How does DuckDB handle CSV files with messy data types?

DuckDB's read_csv function includes automatic schema inference and can read compressed files directly (like .txt.gz) without extracting them first. For columns with mixed types, you can cast values during import, for example converting an observation count column that contains both numbers and 'X' strings to integers. DuckDB's CSV reader is specifically designed to handle the common edge cases that make CSV wrangling painful in other tools.

Can you combine LLM responses with DuckDB database queries?

Yes. MotherDuck has a prompt SQL function that calls GPT-4o directly from SQL. You can generate a natural language query about your data, have it return results, then concatenate those results into a prompt for the LLM to answer questions requiring external knowledge. In the demo, this identified duck species from the eBird database by joining database observations with the LLM's knowledge of scientific classifications, a task that previously took half a day of manual Wikipedia research.

What is MotherDuck's natural language to SQL feature?

MotherDuck provides a prompt_query pragma that translates natural language questions into SQL queries and executes them against your database. It works by sending your database schema and the user's question to a language model, which generates the appropriate SQL. While slightly slower than writing SQL directly (about 6 seconds), it is especially useful for colleagues who are less comfortable with SQL, letting them explore data independently.

Related Videos

9:09

2026-02-13

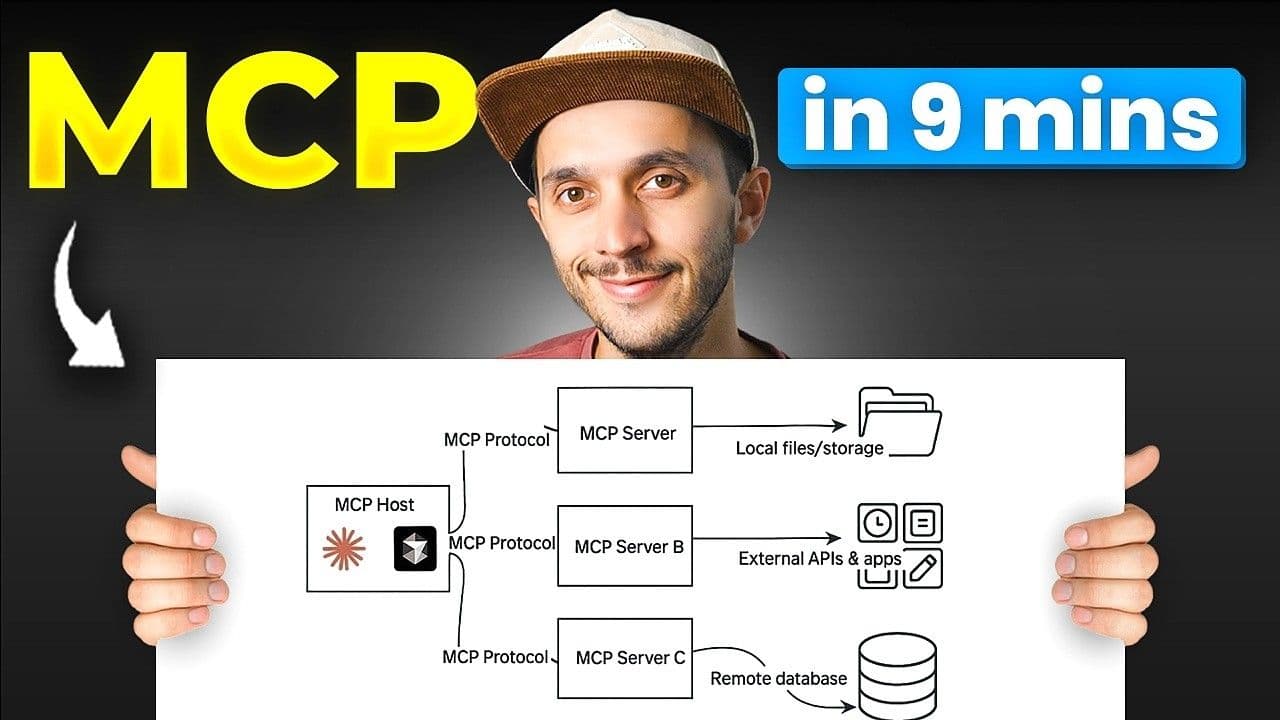

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs