AI Powered BI: Can LLMs REALLY Generate Your Dashboards? ft. Michael Driscoll

2025/05/20
Featuring: Michael Driscoll

The Evolution of Business Intelligence: From Clicks to Code

Business Intelligence is experiencing a fundamental shift from traditional drag-and-drop interfaces to code-based approaches, accelerated by the rise of large language models (LLMs). This transformation promises to make data analytics more accessible while maintaining the precision and version control benefits that come with code-based solutions.

Understanding BI as Code

BI as code follows the same principles that revolutionized infrastructure management with infrastructure as code. Instead of configuring dashboards through complex user interfaces, the entire BI stack—data sources, models, semantic layers, and dashboards—exists as declarative code artifacts in a GitHub repository.

This approach offers several advantages:

- Version control: Every change is tracked and reversible

- Collaboration: Teams can review and contribute through standard development workflows

- Expressiveness: Code provides far more flexibility than any UI could offer

- AI compatibility: LLMs excel at generating and modifying code
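Here is a minimal sketch of what "BI as code" can look like in practice. A small metrics definition is kept as declarative data and compiled to SQL. The `Measure` and `MetricsView` structures, their field names, and the `compile_sql` helper are hypothetical illustrations, not any tool's actual file format. The point is that the definition is plain, diffable data that can be versioned and reviewed like any other code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    name: str
    expression: str  # SQL aggregate expression, e.g. "SUM(price)"


@dataclass(frozen=True)
class MetricsView:
    """Hypothetical metrics definition; real BI-as-code tools define their own schema."""
    table: str
    dimensions: tuple[str, ...]
    measures: tuple[Measure, ...]


def compile_sql(view: MetricsView, dimension: str) -> str:
    """Render all measures grouped by one dimension as a single SQL query."""
    if dimension not in view.dimensions:
        raise ValueError(f"unknown dimension: {dimension}")
    select_measures = ", ".join(f"{m.expression} AS {m.name}" for m in view.measures)
    return (
        f"SELECT {dimension}, {select_measures} "
        f"FROM {view.table} GROUP BY {dimension} ORDER BY {dimension}"
    )


# The definition is plain data: it can be diffed, reviewed, and versioned in Git.
orders = MetricsView(
    table="orders",
    dimensions=("country", "channel"),
    measures=(
        Measure("revenue", "SUM(price)"),
        Measure("order_count", "COUNT(*)"),
    ),
)

print(compile_sql(orders, "country"))
```

Because the dashboard query is derived from the definition, changing a measure is a one-line code change that shows up in review.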

The Role of LLMs in Modern BI Workflows

Large language models are particularly well suited to BI-as-code frameworks. A few years ago low-code and no-code interfaces were the trend; now English is becoming the universal interface. LLMs can take natural-language prompts and generate the code needed to build metrics, dashboards, and analyses.

Schema-First AI Generation

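One effective approach is to send an LLM only the schema of a dataset (column names and data types) rather than the data itself. This method:

- Keeps row-level data out of the prompt, which addresses most privacy and security concerns

- Keeps requests small and fast: in the session's demo, metrics for a Hacker News Parquet file of almost four million rows (close to 1 GB) were generated from the schema alone

- Still provides enough context for useful metric generation

- Allows the LLM to infer meaningful metrics from column names and data types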
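A minimal sketch of the schema-first idea follows. It assumes a dataset described only by column names and types; the `posts` schema is a hypothetical example loosely modeled on Hacker News fields. `build_schema_prompt` shows what would be sent to a model: names and types, no rows. `suggest_metrics` is a simple rule-based stand-in for the LLM's inference, not how any particular product does it. It proposes a row count plus SUM and AVG for numeric columns, and skips identifier columns that are numeric but not additive.

```python
NUMERIC_TYPES = {"INTEGER", "BIGINT", "DOUBLE", "DECIMAL", "FLOAT"}


def build_schema_prompt(table: str, schema: dict[str, str]) -> str:
    """Describe only column names and types; no data values are included."""
    columns = "\n".join(f"- {name}: {dtype}" for name, dtype in schema.items())
    return (
        f"Table `{table}` has these columns:\n{columns}\n"
        "Suggest a good set of metrics for analyzing this dataset."
    )


def suggest_metrics(table: str, schema: dict[str, str]) -> list[str]:
    """Rule-based stand-in for LLM metric inference from the schema alone."""
    metrics = [f"COUNT(*) AS total_{table}"]
    for name, dtype in schema.items():
        if dtype.upper() not in NUMERIC_TYPES:
            continue
        if name == "id" or name.endswith("_id"):
            continue  # identifiers are numeric, but summing them is meaningless
        metrics.append(f"SUM({name}) AS total_{name}")
        metrics.append(f"AVG({name}) AS avg_{name}")
    return metrics


hn_schema = {"id": "BIGINT", "title": "VARCHAR", "score": "INTEGER", "time": "TIMESTAMP"}
print(build_schema_prompt("posts", hn_schema))
print(suggest_metrics("posts", hn_schema))
```

An LLM replaces the hand-written rules with a world model (for example, knowing that revenue is probably a sum of price). The input is the same, though: structure, not rows.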

English vs SQL: Finding the Right Balance

The debate between using natural language versus SQL for analytics reveals interesting nuances:

When English Works Better

- Simple data transformations

- Date truncations and timezone conversions

- Basic aggregations

- Initial exploration of datasets

When SQL Excels

- Complex window functions

- Precise time-based calculations

- Multi-step algorithms

- Production-grade queries requiring exact specifications
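The sketch below shows the kind of precision SQL makes explicit. It computes a trailing three-day average of daily revenue with a window function. It uses Python's built-in `sqlite3` module as a stand-in engine so it runs without extra packages; the `OVER (... ROWS BETWEEN 2 PRECEDING AND CURRENT ROW)` clause is standard SQL that DuckDB also accepts. The revenue figures are made-up toy values.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE daily_revenue (day TEXT PRIMARY KEY, revenue REAL)")
conn.executemany(
    "INSERT INTO daily_revenue VALUES (?, ?)",
    [
        ("2025-05-15", 100.0),
        ("2025-05-16", 200.0),
        ("2025-05-17", 300.0),
        ("2025-05-18", 400.0),
    ],
)

# The frame clause states exactly which rows enter each average:
# the current row plus up to two preceding rows, ordered by day.
rows = conn.execute(
    """
    SELECT day,
           AVG(revenue) OVER (
               ORDER BY day
               ROWS BETWEEN 2 PRECEDING AND CURRENT ROW
           ) AS trailing_3d_avg
    FROM daily_revenue
    ORDER BY day
    """
).fetchall()

for day, avg in rows:
    print(day, avg)
```

Note that `ROWS` counts rows, not calendar days. If a day were missing from the table, the frame would silently reach further back in time. That is exactly the kind of distinction an English request like "a three-day average" leaves open.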

The Time Complexity Challenge

A compelling example involves specifying time ranges. Asking for "revenue over the last three days" in English seems straightforward, but raises multiple questions:

- Should it include today's partial data?

- Should it truncate to complete hours?

- When comparing periods, should partial days be handled consistently?

These nuances demonstrate why code-based approaches maintain their value even as natural language interfaces improve.
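The interpretations above can be made explicit in a few lines of code. In this sketch, "the last three days" is a half-open `[start, end)` window, and each question from the list becomes a parameter. The function names are hypothetical. A fixed UTC-7 offset stands in for US Pacific daylight time, an assumption that avoids depending on installed time-zone data.

```python
from datetime import datetime, timedelta, timezone

# Assumption: a fixed UTC-7 offset stands in for US Pacific daylight time.
PACIFIC = timezone(timedelta(hours=-7), "PDT")


def last_n_days(now: datetime, n: int, include_today: bool, truncate_to_hour: bool = True):
    """Return a half-open [start, end) window for "the last n days".

    include_today=False -> n complete days ending at midnight today.
    include_today=True  -> today (partial) plus the n - 1 days before it,
                           ending at the start of the current hour if truncate_to_hour.
    """
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    if not include_today:
        return midnight - timedelta(days=n), midnight
    end = now.replace(minute=0, second=0, microsecond=0) if truncate_to_hour else now
    return midnight - timedelta(days=n - 1), end


def previous_period(start: datetime, end: datetime):
    """Comparison window of the same length immediately before [start, end)."""
    length = end - start
    return start - length, start


now = datetime(2025, 5, 20, 8, 37, tzinfo=PACIFIC)

complete = last_n_days(now, 3, include_today=False)
partial = last_n_days(now, 3, include_today=True)
print("complete days:", complete, complete[1] - complete[0])
print("with partial today:", partial, partial[1] - partial[0])
print("previous, same length:", previous_period(*partial))
```

At 8:37 a.m., the partial window covers 2 days and 8 hours. Comparing it with the previous three complete calendar days would pit unequal durations against each other, whereas `previous_period` keeps the lengths equal. Which choice is right is a business decision; the code simply forces it to be made explicitly.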

Local Development with DuckDB

DuckDB has emerged as a powerful engine for local BI development. By embedding DuckDB directly into BI tools, developers can:

- Work with multi-million-row datasets on their local machines

- Get instant feedback on metric changes

- Avoid cloud processing costs during development

- Maintain complete data privacy

The combination of DuckDB's performance and BI as code principles creates a development experience similar to modern web development—write code, see immediate results, iterate quickly.
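The write-run-iterate loop can be sketched with any in-process engine. The example below uses Python's built-in `sqlite3` as a stand-in for embedded DuckDB, an assumption made so it runs without extra packages. With the `duckdb` package, `duckdb.connect()` gives a comparable in-process connection that can also query Parquet files directly. The `orders` table, its columns, and the `evaluate` helper are hypothetical. A metric is just a SQL expression, so changing it and re-running happens inside the process, with no cloud round trip.

```python
import sqlite3


def load_sample(conn: sqlite3.Connection) -> None:
    """Create a small local table standing in for a multi-million-row dataset."""
    conn.execute("CREATE TABLE orders (country TEXT, price REAL, refunded INTEGER)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [("US", 120.0, 0), ("US", 80.0, 1), ("FR", 50.0, 0), ("FR", 70.0, 0)],
    )


def evaluate(conn: sqlite3.Connection, metric_sql: str) -> dict[str, float]:
    """Evaluate one metric expression per country, entirely in-process."""
    query = f"SELECT country, {metric_sql} FROM orders GROUP BY country ORDER BY country"
    return dict(conn.execute(query).fetchall())


conn = sqlite3.connect(":memory:")
load_sample(conn)

# First draft of the revenue metric.
print(evaluate(conn, "SUM(price)"))
# Iterate: exclude refunded orders and re-run immediately.
print(evaluate(conn, "SUM(CASE WHEN refunded = 0 THEN price ELSE 0 END)"))
```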

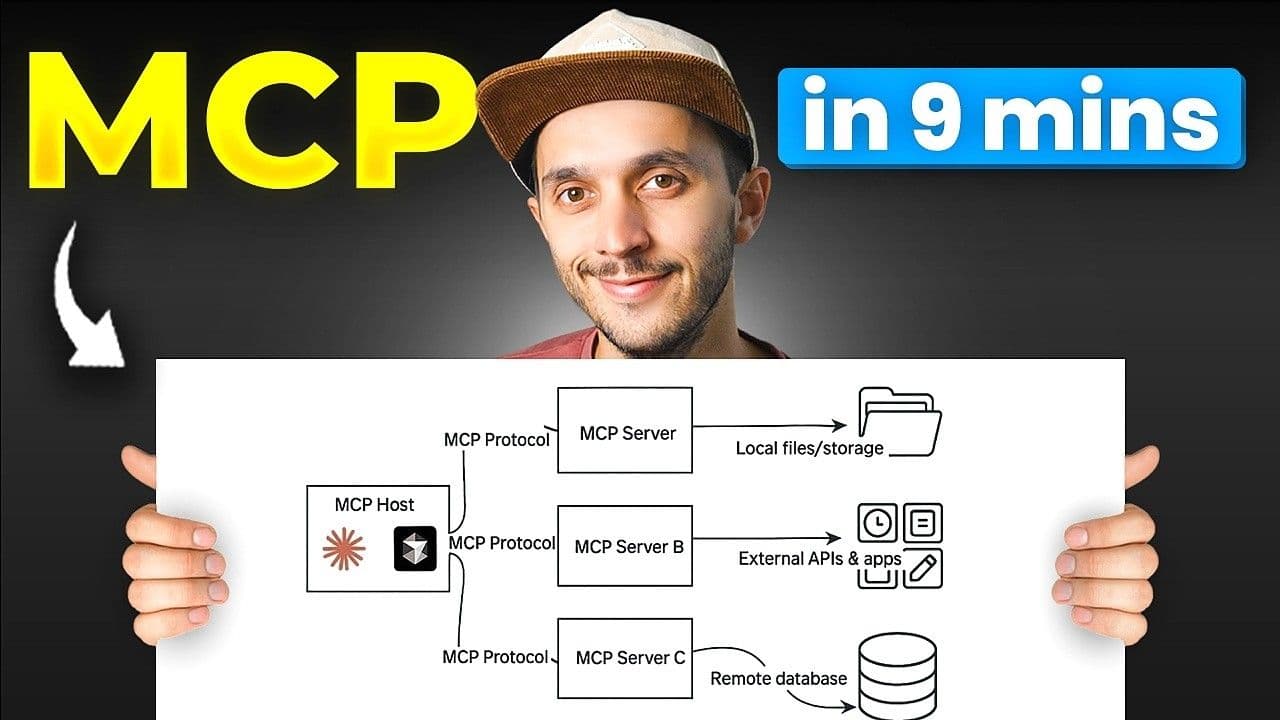

MCP Servers: Bridging AI and Data

The Model Context Protocol (MCP) is an open protocol for connecting AI assistants to tools and data. Instead of developing a separate integration for each service, an MCP server exposes a common interface that allows AI assistants to:

- Query databases directly

- Access real-time data

- Generate insights without manual data export

- Maintain context across multiple queries

Local MCP Implementation

Running MCP servers locally with tools like DuckDB offers unique advantages:

- Data privacy: the raw data stays on your machine, although query results the assistant reads are sent to the model if it is cloud-hosted

- Instant query execution

- Direct access to local datasets

- No cloud infrastructure required
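The sketch below shows the message handling inside a local MCP-style server. It follows MCP's JSON-RPC 2.0 framing for the `tools/list` and `tools/call` methods, but it is not the official MCP SDK. The transport (stdio), the initialization handshake, and most error handling are omitted. The `query` tool is hypothetical, and `sqlite3` stands in for DuckDB so the sketch runs with the standard library only.

```python
import json
import sqlite3

# Local, in-process database (sqlite3 stands in for DuckDB in this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE commits (author TEXT, lines_changed INTEGER)")
conn.executemany(
    "INSERT INTO commits VALUES (?, ?)",
    [("alice", 10), ("bob", 5), ("alice", 7)],
)

# Hypothetical tool definition advertised to the AI assistant.
QUERY_TOOL = {
    "name": "query",
    "description": "Run a SQL query against the local database.",
    "inputSchema": {
        "type": "object",
        "properties": {"sql": {"type": "string"}},
        "required": ["sql"],
    },
}


def error_response(request_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(message: str) -> str:
    """Handle one JSON-RPC request for tools/list or tools/call."""
    request = json.loads(message)
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [QUERY_TOOL]}
    elif method == "tools/call":
        params = request["params"]
        if params["name"] != QUERY_TOOL["name"]:
            return error_response(request_id, -32602, f"Unknown tool: {params['name']}")
        rows = conn.execute(params["arguments"]["sql"]).fetchall()
        # Query results travel back as text content for the model to read.
        result = {"content": [{"type": "text", "text": json.dumps(rows)}]}
    else:
        return error_response(request_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "query",
        "arguments": {
            "sql": "SELECT author, SUM(lines_changed) FROM commits "
                   "GROUP BY author ORDER BY author"
        },
    },
}
print(handle(json.dumps(request)))
```

A real server should restrict such a tool to read-only access and cap result sizes. Also, the rows returned in `content` are what reaches the model, so "local" describes where queries run, not necessarily where results end up.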

Practical Implementation: From Data to Dashboard

A typical AI-assisted BI-as-code workflow runs through these steps:

- Data Import: Connect to data sources (S3, local files, databases)

- AI-Generated Metrics: LLMs analyze schemas and suggest relevant metrics

- Code Generation: Create YAML configurations for metrics and dashboards

- Instant Preview: See results immediately with local processing

- Iterative Refinement: Use natural language to adjust and improve
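The code-generation step amounts to serializing the chosen metrics into a file that lives in the repository. This minimal sketch emits YAML by hand so it needs only the standard library. The keys (`type`, `model`, `timeseries`, `dimensions`, `measures`) are illustrative and loosely modeled on metrics-view files. The model and column names are hypothetical, so check your tool's documentation for its actual schema.

```python
import json


def render_metrics_yaml(model: str, timeseries: str, dimensions: list[str],
                        measures: dict[str, str]) -> str:
    """Render a metrics definition as YAML text using an illustrative key layout."""
    lines = [
        "type: metrics_view",
        f"model: {model}",
        f"timeseries: {timeseries}",
        "dimensions:",
    ]
    lines += [f"  - column: {dim}" for dim in dimensions]
    lines.append("measures:")
    for name, expression in measures.items():
        lines.append(f"  - name: {name}")
        # json.dumps yields a double-quoted string, which is also a valid YAML scalar.
        lines.append(f"    expression: {json.dumps(expression)}")
    return "\n".join(lines) + "\n"


# Hypothetical model and columns; measures as an LLM might suggest them from a schema.
yaml_text = render_metrics_yaml(
    model="hacker_news",
    timeseries="time",
    dimensions=["type", "by"],
    measures={"total_posts": "COUNT(*)", "average_score": "AVG(score)"},
)
print(yaml_text, end="")
```

Emitting a file rather than calling an API keeps the generated result reviewable. The next iteration, whether edited by hand or by prompt, shows up as a diff.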

Real-World Example: GitHub Analytics

Analyzing GitHub commit data showcases the power of this approach:

- Identify top contributors across multiple usernames

- Analyze code changes by file type and category

- Generate insights about project focus areas

- Complete complex analyses in minutes rather than hours
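Two of the analyses above can be sketched in a few lines over toy data. The first merges several usernames into one contributor via an alias table; the second totals changes by file extension. The commit records and the alias table are made up for illustration. In practice the alias mapping is the kind of metadata a person has to supply.

```python
from collections import Counter
from pathlib import PurePosixPath

# Toy commit records, made up for illustration: (username, file path, lines changed).
COMMITS = [
    ("jdoe", "src/server.go", 120),
    ("jdoe-work", "web/App.svelte", 40),
    ("asmith", "src/metrics.go", 60),
    ("asmith", "docs/README.md", 15),
    ("jdoe", "docs/install.md", 5),
]

# Hypothetical alias table mapping secondary usernames to one person.
ALIASES = {"jdoe-work": "jdoe"}


def top_contributors(commits):
    """Sum lines changed per person after merging aliased usernames."""
    totals = Counter()
    for author, _path, lines in commits:
        totals[ALIASES.get(author, author)] += lines
    return totals.most_common()


def changes_by_file_type(commits):
    """Sum lines changed per file extension."""
    totals = Counter()
    for _author, path, lines in commits:
        totals[PurePosixPath(path).suffix or "(none)"] += lines
    return totals.most_common()


print(top_contributors(COMMITS))
print(changes_by_file_type(COMMITS))
```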

The Future of BI: Notebooks, Dashboards, or Both?

The evolution of BI interfaces suggests multiple modalities will coexist:

Traditional Dashboards

- Best for monitoring key metrics

- Daily business operations

- Executive reporting

- Standardized views

Notebook-Style Interfaces

- Ideal for exploratory analysis

- Root cause investigations

- Iterative questioning

- Sharing analytical narratives

Challenges and Considerations

Despite the excitement around AI-powered BI, several challenges remain:

Technical Hurdles

- LLMs perform better with established coding patterns

- Innovation in interface design may be constrained by AI training data

- Documentation quality directly impacts AI effectiveness

Human Factors

- Metadata often exists in people's heads, not systems

- Business logic requires human understanding

- Quality control remains essential

The Augmentation Perspective

Rather than replacement, AI in BI represents augmentation. Data professionals who embrace these tools will find their capabilities enhanced, not diminished. The technology handles routine tasks while humans provide context, validation, and strategic thinking.

Best Practices for AI-Powered BI

To maximize success with AI-powered BI:

- Follow conventions: Use established patterns that LLMs recognize

- Document thoroughly: Quality documentation improves AI performance

- Start with schemas: Let AI infer from structure before accessing data

- Maintain human oversight: Verify generated code and results

- Iterate quickly: Leverage local processing for rapid development
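One cheap form of human oversight is to reject generated metric expressions that reference columns the schema does not contain, a typical symptom of a hallucinated field. This is a minimal heuristic sketch. It tokenizes with a regular expression rather than a real SQL parser, matches column names case-sensitively, and uses a keyword allowlist that is an assumption you would extend. It catches invented column names but says nothing about whether a metric is semantically right; that still needs a person.

```python
import re

# Illustrative allowlist of SQL keywords and functions; extend it for your dialect.
ALLOWED_WORDS = {
    "SUM", "AVG", "COUNT", "MIN", "MAX", "DISTINCT",
    "CASE", "WHEN", "THEN", "ELSE", "END", "AND", "OR", "NOT", "NULL",
}
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")
STRING_LITERAL = re.compile(r"'(?:[^']|'')*'")


def unknown_identifiers(expression: str, columns: set[str]) -> set[str]:
    """Return names in an expression that are neither allowed keywords nor known columns."""
    # Blank out single-quoted literals so their contents are not mistaken for names.
    without_strings = STRING_LITERAL.sub("''", expression)
    names = set(IDENTIFIER.findall(without_strings))
    return {n for n in names if n.upper() not in ALLOWED_WORDS and n not in columns}


columns = {"country", "price", "refunded"}
print(unknown_identifiers("SUM(price)", columns))
print(unknown_identifiers("SUM(revenue)", columns))  # hallucinated column
print(unknown_identifiers("SUM(CASE WHEN refunded = 0 THEN price ELSE 0 END)", columns))
```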

Looking Ahead

The convergence of BI as code, powerful local databases like DuckDB, and sophisticated LLMs creates unprecedented opportunities for data analysis. As these technologies mature, we can expect:

- More sophisticated metric generation

- Better handling of complex business logic

- Seamless integration between exploration and production

- Continued importance of human expertise in context and validation

The future of business intelligence isn't about choosing between humans or AI, dashboards or notebooks, clicks or code. It's about combining these elements to create more powerful, flexible, and accessible analytics experiences for everyone.

Transcript

0:00 Do you think BI as code will grow, specifically now that AI is there to generate code and is kind of the interface for GenBI in the end? There's nothing worse than being given the keys to a data warehouse and being told, "Hey, go figure out what's going on here." So LLMs are not that

0:19 different, really, from a junior data engineer. Do you think English is easier than SQL for expressing analytics needs?

0:26 There are all these nuances in the English language on something as simple as expressing time. That's where SQL, and any code, can be quite precise. Maybe the LLMs are going to find their own language, right? And then we mention the real data as

0:45 context 13. Wow. Yeah. Where do you think the technology might fail? Mike, how are you doing?

0:55 Doing great, Mehdi. Thanks for having me. So, Mike, you've been doing a lot of things in data; can you give us a quick intro about your background and your current role at Rill Data?

1:12 Sure. Well, every time I tell my background, the number of years I've been working in data seems to grow, but I really got my start as a computational biologist. I was pretty active in the R community back in the day, which is one of the reasons I'm a huge fan of DuckDB.

1:33 Hannes, DuckDB's creator, was also, I think, an early user of the open

1:40 source R statistical language. That was in the 2000s. I worked in big data, moved to San Francisco, started a consultancy in big data and data science, and ultimately started a business called Metamarkets, which we sold to Snapchat. We had built a large-scale analytics platform there; we invented a database called Druid, Apache Druid. And Rill,

2:06 the company I've been running and leading with a talented team for the last few years, came out of Snapchat. We spun the team and technology out of Snap, and we had the realization that there are some really powerful real-time analytics databases out there beyond Druid, one of them being DuckDB. And so we're just tremendously excited to

2:30 be using DuckDB at the core of Rill. We use some other databases as well, and we're really trying to

2:40 build faster dashboards. We think that fast databases deserve fast dashboards, and that's what we've been working on for the past few years; we've got dozens of customers and millions of dollars of revenue to show for it. More than anything, I think we're just having a great time. The data community has always been, I think,

3:00 just a great community to be a part of. So it's been fun building in public, building in the open. Like MotherDuck and the DuckDB community, we've been very public about what we've built, and we've had a lot of fun interacting with that community.

3:18 Nice. So you've been marketing, or defining, yourself as BI as code, and I feel this is a

3:28 term that's still pretty new. I wrote a blog post about it a couple of months after I joined MotherDuck, two years ago already, and it resonated with quite a lot of people, but I feel that two years later it's still a new term. Can you remind us of the definition, and where do

3:48 you think it matters, or doesn't, in the data ecosystem? Well, I think at the heart of it, the core idea is very similar to what we saw with infrastructure as code, right? That has really revolutionized how people parameterize servers and do deployments. I think we've

4:10 seen Vercel obviously following that wave, and they've frankly got apps as code these days. I think BI as code is just in that same vein, where the idea is that your entire BI stack can be made up of just a GitHub repo,

4:30 a set of declarative code artifacts that define the data sources, the data models, the semantic layer (in our case we call it the metrics layer), and then the dashboards. So that's at the heart of it. The one thing we've stumbled into that's relevant to this discussion today is that a lot of people were trying to

4:50 embrace low-code or no-code interfaces. That was a bit of a thing a few years back. But with AI, of course, the comment is that English is the universal language, and LLMs are

5:09 incredibly good at taking English prompts and turning them into computer code. So what we found is that building Rill projects has become even easier

5:21 because of the power of things like Claude and GPT-4o. We can actually generate the BI as code with an LLM.

5:31 AIs are really good at interfacing with X-as-code frameworks anyway. So what do you think... So

5:41 BI as code is mostly about bringing in software engineering best practices, I feel, compared to classic BI, where you're kind of vendor-locked within a specific ecosystem, a drag-and-drop UI. Do you think BI as code will grow, specifically now

6:08 that AI is there to generate code and is kind of the interface for GenBI in the end?

6:17 Absolutely. I think today the dominant players in BI are BI as clicks. Many of us have experienced these kinds of bloated interfaces, right? And the truth is that when it comes to something like data visualization, data visualizations are creative artifacts. They're as varied and as unique as the

6:41 kind of image you might generate with DALL-E, and so the idea that you

6:47 can click your way to exactly the visualization that you want to show your executive team... It's very hard to build a UI that covers the entropy, the space, that exists for data visualization in particular, which is really at the core, I think, of a lot of

7:08 BI tools. So absolutely, when you replace BI as clicks with BI as code, and then replace the interface with an AI prompt, it's far more expressive. Code is so much more expressive than any UI could ever be. So I think, I mean, look,

7:28 we're seeing AIs remake a lot of interfaces, but certainly I think BI is absolutely ripe for disruption by these prompt interfaces. Yeah. And do

7:40 you think that LLMs are going to solve the metadata problem? Specifically, I want to create a metric for revenue, but the problem is that I'm not sure which table to use. It feels like this problem is really hard to solve. What are your thoughts on that?

8:04 Right. So I think the reason why a lot of text-to-SQL approaches have fallen a bit flat... Now, of course, it's still early, so a lot of things fall flat in the early innings of AI. So I want to caveat that and say things are getting better fast, right? It

8:21 was not long ago that Will Smith eating spaghetti looked like true AI slop, quite literally, in that video, and now we can see Will Smith eating spaghetti looking quite refined, I think. But I will say the great thing about LLMs is... to your point, take

8:45 a metric like revenue. There may not actually be a revenue column anywhere in the 200 database tables that you've got.

8:53 There might be an orders table with a price column, and I do think the LLM is likely to know that revenue is going to be the sum of price, and it might even know to exclude, let's say, refunds. But I think that

9:08 a lot of approaches in AI are about guiding the model a bit and narrowing the scope of what the LLM is working on. And so our view is that LLMs do much better with a curated layer that sits on top of those, let's say, 200 tables in your data

9:28 warehouse, and that is the semantic layer, or the metrics layer. LLMs can help you build that metrics layer because they have a good world model; they know revenue is probably a sum of price. They can infer a good set of metrics, which I think you'll show off in a few minutes. So I think

9:45 they can address it, but they're not perfect. Look, I think the analogy is this: if you're a data engineer (and I used to be a consultant in data engineering), there's nothing worse than being given the keys to a data warehouse and being told, "Hey, go figure out what's going on here with

10:05 our business." Even the smartest data engineer can struggle when facing a mess of undocumented tables.

10:14 So LLMs are not that different, really, from a junior data engineer if they're not given more context. Yeah. And I think it's good to step back a bit: you still need to understand all the various bits, and sometimes, no matter how smart the LLM is,

10:34 if it's not connected to an Excel sheet of your customers somewhere, or that kind of thing... That's sometimes the role of data engineers, a Sherlock role, right? I think if

10:52 you don't give access to those resources, it's pretty hard to find out. I think Databricks did a product in that vein

11:03 (I don't remember the name; it's been out for a while, and I'm not sure where it is now), but I think the whole premise was that you ask a question and it has different connections to your CRM and your information to be able to build the metric. So yeah,

11:24 human interaction still matters a lot, at least for now and hopefully for longer. So LLMs are generating a lot of code, and there's all this vibe that LLMs are going to kill programmers. I don't really want to get into that debate; I'd rather narrow it down. Do you

11:45 think English is easier than SQL for expressing analytics needs? What are your thoughts on that?

11:54 Well, I think there are some good analogies when you think about the world of law, right?

12:01 In law there's always this tension between precision and clarity. If you've ever read the indemnification clauses in a contract, that legalese can be overwhelming to look at, but it's code. It's legal code. And so I do think it really depends on the task at hand. I think if you're doing simple

12:25 tasks, English is probably easier than SQL, if you just don't remember the syntax for date truncation.

12:37 You just say, "Hey, can you truncate this timestamp?" or "Convert a UTC timestamp to a time zone." That's easier to express in English. But when you get into having to describe an algorithm quite precisely, like a window function, it turns out that code can be quite precise. If we think

12:57 about it, mathematics has spent hundreds of years, since the time of Descartes, thinking about mathematical notation, right? And so I do think that, at heart, SQL and other languages can be really good at providing a level of precision that the English language is just not able to achieve. So I would say it just depends on

13:22 the use case. The truth is, for probably 80% of the use cases, English is going to be sufficient, but it's going to be those narrower use cases that require more expertise in expressing code.

13:37 Yeah, based on my experience, I feel like if I use English to

13:43 adapt the code or transform the data, it's easier. But I don't want to just say, "Calculate the top domains that have been sharing my blog," for example, when I have stats, right?

13:59 It needs to parse a certain field, and

14:03 maybe that field is not named correctly, right? That's the reality. So maybe it's going to hallucinate or give me something off. But if I know what the data looks like, I can say, "Can you infer and do a calculation on the top domain?" So it's

14:22 in between a high-level question and SQL generation.

14:30 I feel there's a sweet spot there. I'll

14:34 give you an example that we've spent a lot of time on directly at Rill, and this is specifying time, right? In English, it's very easy to say to someone, "Hey, could you give me the last three days? I want to know what the revenue for my company was over the last three days." How much revenue

14:49 did I make in the last three days? Well, that sounds simple enough, but then when you double-click in, you're like, okay, do you want the last three complete days, or do you want to include today, which might be partial? Okay, you say, "I want to include today." Okay, great.

15:05 You want to include today. Do you want to include the current hour, or do you want to truncate at, say, 8 a.m. Pacific, so that we don't have a partial hour involved? You say, "Okay, I want to include 8 a.m. Pacific." And then if I said I want to compare the last three days of

15:19 revenue to the previous three days, now you have the question: should I compare three complete days to two days and eight hours? So there are all these nuances in the English language on something as simple as expressing time, and that's where SQL, and any code, can be quite precise.

15:40 We've actually invented our own dialect for that sort of precision, which we hope we can use an LLM to interact with. Okay, I see. We're going to do a small demo with Rill and the generated dashboard. By the way, I'm curious, in the audience, if

15:58 you've been using BI-as-code tools and generating dashboards with LLMs, what's your workflow? Are you using a tool like Cursor, or the tool itself directly? But do you think data companies still need to build their own UI, or should they optimize to integrate with existing IDEs or directly with embedded chat like

16:23 Claude or ChatGPT? To that extent, you wrote a post on

16:32 LinkedIn. Let me share it quickly

16:36 with the audience. It was about

16:41 the acquisition of Neon by Databricks, saying that a lot of usage is actually coming from LLMs and AI. So do you want to optimize for the user asking an LLM and the LLM doing the work, or do you want to optimize for the human user experience in the UI? What do you

17:05 think? I'll put the post up while you give your thoughts. The LinkedIn post you shared... I guess I've been posting so much I don't remember exactly which post that was. Well, I

17:21 mean, I think you and I were just chatting about... okay, this is... ah yes, this is about the... Well, you

17:31 know, I think we're all recognizing... Before we started the show, Mehdi, you and I were talking about llms.txt, right? And we all recognize that it's probably going to be agents that are reading our documentation, even more so than search engines, which use robots.txt, and probably more so than humans,

17:53 right? So I

17:58 think that we do need to start conforming our tools, anticipating that agents are going to be significant users of those tools. I do think we're thoughtful about this as we start to design the shape of any BI-as-code application, or frankly

18:19 any kind of X-as-code framework. I think

18:23 it is instructive to think about following precedent, because of something I've noticed when I use Cursor to generate a Rill project. Rill has borrowed a lot of ideas from folks like Vercel, folks like HashiCorp, the world of Node.js projects and TypeScript, and so our naming conventions

18:45 often follow those conventions. But when we have actually come up with our own ideas, like, instead of calling it main, we'll call it, I don't know, index-something, violating

19:01 those precedents actually confuses the LLM. So LLMs are much better at following established precedent in the coding styles and coding principles that have come before.

19:13 And if you are going to violate precedent and innovate in an area, you have to be really clear about that in your documentation. You have to teach the LLM.

19:24 And again, I think it's not that different from teaching developers, right? There's that great quote from Erik Bernhardsson, the founder of Modal Labs: "I don't want to learn your garbage query language." One of the reasons why SQL is so popular is

19:45 because people have been writing SQL ever since E. F. Codd came up with it. So I would just say, if you're thinking about building for folks who are starting new projects, I do think it's very wise to think about how you can be friendly to

20:05 Claude or GPT Codex (I guess they've got Codex now, which came out last week) and make it easier for the LLM to build your projects. You were mentioning that LLMs like to follow convention. So do you think it's going to be harder to innovate and

20:23 get out of the box,

20:27 at least in terms of the interface, which is the code?

20:35 I mean, I do think we end up with... Murray Gell-Mann is the Nobel laureate physicist who talks about frozen accidents in history, right? In evolution, something happens, and every mammal, including us, has some weird physiology because of a frozen accident in our

20:55 evolutionary past. With the QWERTY keyboard, we've seen time and again that people have tried to build a better, more efficient keyboard layout, and it never works, right? People wanted to create Esperanto, a better language than English. So I do think that AI could end up deepening

21:16 the grooves for a set of languages and conventions that already exist, that have thousands of answers on Stack Overflow and tons and tons of repos on GitHub for the LLMs to learn from. I hope that doesn't mean PHP ends up being the dominant language, but certainly

21:38 it does bode well for SQL, right? Everyone wants to replace SQL with something better. I think that in the age of LLMs, overcoming SQL... yeah, it's very hard to escape that kind of gravitational force. Maybe the LLMs are going to find their own language, right? And that will be the

22:02 thing that would be curious. That's true, that's true. If they find their own and then they start writing it, of course they're going to write a lot more of it, a lot faster than any of us. Yeah. So let's take a small... I have a couple more question topics, but let's just

22:18 take a small hands-on break and go over the demo you did at DuckCon. So what I'm going to do here is basically go through the flow of installing Rill. I'm going to share my screen here. So if you go to Rill

22:41 Data, you have a one-line installation script. And by the way, it's super nice that you were able to influence the DuckDB folks, because I wanted to have an installation script. I don't know if it's a coincidence, but it was probably influenced: right after DuckCon, they put it on their website. So you can also install DuckDB

23:00 with a one-line script. Anyway, if I run this, hopefully it works; everything is live. I'm going to install into my current directory for now, and I think I actually already installed it here. I just created a folder. And so Rill is mostly a CLI based on

23:23 Go, right? Is that correct? That's right, primarily Golang. The runtime is Golang. And that's the beauty of it: you just need a binary and then you can run stuff, and the rest is mostly configuration and SQL files, I guess. Is that correct? That's right. We call that scraml sometimes because it's

23:45 a combination of SQL and YAML. Interesting. So you're somewhere between a SQL and a YAML engineer: a scraml engineer. Scramble engineer. So

24:00 someone just asked in the

24:04 show, "Will there be a recording?" Yes, you can register for the Luma event, and there will

24:15 be a recording sent to you. I'll share the link right after. So if we add the data, if we add S3, what is

24:25 working behind the scenes? Are you doing a custom connector, or do you... Yeah, it's a great question. When we started Rill, there was an internal discussion: should we use... DuckDB's got great connectivity, let's just use DuckDB's S3 reader. It was not as mature as it is now, and so we initially actually built

24:49 our own S3 reader. But you'll see now

24:53 what we do now: if you add Amazon S3, we actually just generate the DuckDB code

25:03 that reads from S3. So bit by bit, we're replacing some of our native connectors that we wrote in Golang, which sometimes did almost too-clever things behind the scenes.

25:15 We're now just leaning into the DuckDB ecosystem. Certainly now, with the emergence of community extensions, I think that's going to get even more powerful.

25:27 So in general, like a lot of tools,

25:31 we're embedding DuckDB into the Rill binary, and you're running it locally on your machine. One of the nice things we can lean on here, too, is that if you have implicit credentials, like if you've set up your AWS access or you're running on a VPN and you're able to access

25:51 locations that you wouldn't otherwise be able to access, Rill is able to see that data. And of course, because we're not running it through Rill Cloud, you have a private connection from Rill to that S3 bucket that only you have access to.

26:09 Yeah. So it's also a question of better integration with your cloud product, because here, just to mention it first, everything is running locally. Okay, I actually have a small test I want to try out, with a Parquet file

26:28 of one year of Hacker News data. So it's

26:33 kind of large; I think it's almost one gigabyte, so we'll wait a bit.

26:37 We'll see how fast my internet connection is, because I need to download it first. So there's a first step that builds the model, and then there's this button for generating metrics with AI. Okay, it's done.

26:54 So what's the difference between building the models and building the dashboard with AI? So for Rill, we wanted to give people the opportunity to get right into a dashboard experience, so we let them skip the step of building a model. But unlike a lot of BI tools,

27:18 Rill does not build its dashboards on top of tables; we build them on top of the semantic layer. And so when you've imported this source that has, I guess, a few million... how many rows does that source have? Yeah, let me zoom in. That's much better. Okay, so you've got... Yeah.

27:36 Three, almost four million. Almost four million. Yeah. So to help folks

27:43 on their data-to-dashboard journey, what Rill is going to offer... I would say you could just click generate dashboard with AI. When you click that, it's going to look at the schema of that Hacker News Parquet file.

28:00 And then it's actually going to just infer. By the way, every time we run these demos, it's making a call to GPT-4o. We're going to make that swappable for folks; we've had some requests to make it call other models. But it's going to infer what it thinks... The prompt is essentially, "Given this dataset, what do you think are a

28:19 good set of metrics for analyzing this dataset?" And GPT-4o just decides: let's look at total posts and average score. I wouldn't say max score is that interesting. Yeah, minimum score is not interesting. But what is interesting is that this was really fast because we only send the schema, right? I guess...

28:41 That's right. That's right. Yeah, that's the interesting thing. I've also done a demo with MCP; we're going to talk about it right away. But I think when people think about data workflows and AI, they think, "Oh, I have all my data

28:59 sitting somewhere, so it's really hard to include AI, especially with sensitive data." But actually it's much less sensitive if you only send the schema, and it's much faster, and I think you get

29:15 most of the context. Based on what you've learned, do you also need to send samples of data? Can you elaborate a bit on that? I think we want to do that. For now, we're respecting the privacy concerns of any customer that may not

29:32 know that there's a GPT-4o call. Some people are a little antsy about sending their own data, so we only send the header, the schema. But once we have pluggable LLM calls in Rill, I think we will move towards giving people the option of sending a little bit of

29:52 data, let's say 100 or 1,000 rows. I think that will give the LLM a better chance to understand what's interesting about it. But to your point, we definitely do not need to ship a gigabyte of data.
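A minimal sketch of the schema-first idea, assuming a hypothetical `build_metrics_prompt` helper: column names and types always go into the prompt, while sample rows are opt-in and capped (the option Mike describes as future work). The Hacker News-style columns are assumptions, not the demo file's actual schema, and no LLM is called here.

```python
from typing import Sequence


def build_metrics_prompt(
    schema: Sequence[tuple[str, str]],
    sample_rows: Sequence[Sequence[object]] = (),
    max_samples: int = 100,
) -> str:
    """Build an LLM prompt from a table schema (hypothetical helper).

    Only column names and types are always included; sample rows are
    optional and capped at max_samples, so the full dataset never leaves
    the machine.
    """
    lines = ["Given a dataset with the following columns:"]
    for name, dtype in schema:
        lines.append(f"- {name}: {dtype}")
    if sample_rows:
        lines.append("Sample rows:")
        for row in sample_rows[:max_samples]:
            lines.append(", ".join(str(v) for v in row))
    lines.append("Propose a good set of metrics (measures and dimensions) for analyzing it.")
    return "\n".join(lines)


# Assumed Hacker News-like schema; the real file's columns may differ.
schema = [("id", "BIGINT"), ("title", "VARCHAR"), ("score", "INTEGER"), ("time", "TIMESTAMP")]
print(build_metrics_prompt(schema))
```

The prompt size grows with the number of columns and the sample cap, not with the row count, which is why a one-gigabyte file still produces a fast call.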

30:04 That's frankly too much context for an LLM anyway. So if you look here, what Rill did is... you've got that source on the left.

30:13 On the left-hand side there, you've got your BI-as-code. That's just your file structure.

30:18 You've got your source YAML. You've got your metrics YAML. If you click on that metrics YAML, you'll see what we built. You clicked there, and it did it. Yeah.

30:26 Okay. This is our metrics layer view. You can see it's inferred some aggregate measures and dimensions. Yeah.

30:32 Again, you can edit this in the UI. But again, it's code: if you click to the left, you can actually see the underlying YAML there.
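To make the metrics-layer idea concrete, here is a hypothetical Python sketch (not Rill's YAML schema or its query compiler) in which a metrics view is a set of named dimensions and measures, and dashboard queries are compiled from those names instead of being written against the raw table. The table name `hacker_news`, the `type` column, and the measure expressions are assumptions modeled on the metrics GPT-4o proposed in the demo (total posts, average score).

```python
from dataclasses import dataclass, field


@dataclass
class MetricsView:
    """Hypothetical metrics view: named dimensions and measures over one table."""
    table: str
    dimensions: dict[str, str] = field(default_factory=dict)  # name -> column expression
    measures: dict[str, str] = field(default_factory=dict)    # name -> aggregate expression

    def to_sql(self, dims: list[str], measures: list[str]) -> str:
        # Callers ask for metrics by name; the view supplies the SQL behind them.
        select = [f"{self.dimensions[d]} AS {d}" for d in dims]
        select += [f"{self.measures[m]} AS {m}" for m in measures]
        sql = f"SELECT {', '.join(select)} FROM {self.table}"
        if dims:
            sql += " GROUP BY " + ", ".join(str(i + 1) for i in range(len(dims)))
        return sql


hn = MetricsView(
    table="hacker_news",
    dimensions={"post_type": "type"},
    measures={"total_posts": "COUNT(*)", "average_score": "AVG(score)"},
)
print(hn.to_sql(["post_type"], ["total_posts", "average_score"]))
# SELECT type AS post_type, COUNT(*) AS total_posts, AVG(score) AS average_score FROM hacker_news GROUP BY 1
```

Because the definitions live in one place, editing a measure (in the UI or in the YAML) changes every query that references it by name.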

30:40 Yeah, let's actually open it in Cursor, just to see

30:48 what exactly has been generated over there. So we have

30:55 basically the same file that I can see in the UI, right? So we have those YAML files. Exactly. And then I

31:07 can work from here, and that's also fine. But what do your users do? That's related to the question: where do you see people more comfortable?

31:19 Absolutely. I would say some of the most common things we see (it's not as relevant for this dataset, but I'll see if I can come up with some similar examples) are formatting rules. One of the things we use under the covers at Rill is the

31:35 D3 format library. And we all know that currency formatting can be a pain in the neck. Rather than clicking around to figure out how to format your currency string and change it from, let's say, US dollars to euros, you can just prompt Cursor: "Hey Cursor, update the

31:58 revenue to be expressed in euros instead of USD." Cursor is smart enough to read our documentation, recognize that we're using D3 format, and actually update the formatting for the revenue measure in the YAML.
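d3-format's specifier syntax is modeled on Python's format-specification mini-language, so Python's built-in `format` can illustrate what a currency change amounts to. This is an illustration under stated assumptions, not Rill's formatter: the `CURRENCY_SYMBOLS` table and `format_currency` helper are hypothetical, and real euro formatting (decimal commas, symbol placement) depends on locale, which d3 handles through locale definitions.

```python
# Hypothetical lookup table; real apps would take symbols from a locale definition.
CURRENCY_SYMBOLS = {"USD": "$", "EUR": "€"}


def format_currency(value: float, currency: str = "USD") -> str:
    """Apply a ',.2f' spec (thousands separator, two decimals) and prefix the symbol.

    Locale rules such as decimal commas are deliberately ignored here.
    """
    symbol = CURRENCY_SYMBOLS[currency]
    sign = "-" if value < 0 else ""
    return f"{sign}{symbol}{format(abs(value), ',.2f')}"


print(format_currency(1234567.891))         # $1,234,567.89
print(format_currency(1234567.891, "EUR"))  # €1,234,567.89
```

The point of the prompt in the demo is that the model only has to change a small, declarative piece of configuration like this, rather than the query logic.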

32:12 If you click on... you're looking at the dashboard. If you click on metrics, that's probably the one that has more. In the metrics folder there. Yeah, right there. This is really doing the heavy lifting for Rill; this is where you're going to define all of these. So one thing you might do is, like, you

32:30 might say, "Hey Cursor, can you give me, let's say, the..." I'm kind of

32:39 looking at the data here, but like, "Can you give me the average..." Do we have

32:44 a total ranking? What's the descendants measure? Is that the depth of the...? I think one interesting query would be the mentions of a given technology in the posts. Sure, we can try that. So: "Can you give me the number of mentions on all posts

33:09 of DuckDB?" Okay, let's see what it's going to do. While it's doing that: we were talking about docs.

33:20 I've been advocating for llms.txt docs. So for people who don't know, llms.txt is, as

33:30 you mentioned, a new standard to make content friendlier for LLMs to ingest. And if you go there, both MotherDuck and DuckDB have

33:46 links, you see, to their full docs as llms.txt. If you click on this, you see it's the DuckDB Labs documentation: you have a full Markdown version of the whole docs. And what you can do in Cursor afterwards is just go to Cursor

34:06 Settings... not sure it's in Profile. It's Cursor Settings, sorry. And if you go, I

34:20 think, to Features, you get Docs, and you see here you can add docs. So let's add the Rill Data docs. What is your...? It's docs.rilldata.com. Yeah, docs.rilldata.com. Yep. So if the website has an llms.txt, this is where you fill in the full URL, right? If there was one. For example, that's the to-do for

34:45 Mike after the session. I've already Slacked my team and said, "Get on it, guys." Wow. It's going to be done in... it's 25 minutes before the livestream. Anyway, what also works is just passing the root website. And what it's going to do... so if I name it "Rill Data", just to

35:09 avoid any confusion. And now it's going to index it, you see, and I can reference it within Cursor. It's funny, because I have an MCP server here, and it's trying to query the data directly, which it doesn't have access to. So that's also an interesting experience: I have

35:34 that MCP server, but I don't want it to use it. "I don't want you to use the MCP." I

35:42 think... I'm not sure if I can say "DuckDB MCP." "I just want you to edit the YAML file based

35:50 on..." And so now I can do this, and then we

35:55 mention the Rill Data docs as context.
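llms.txt, as proposed at llmstxt.org, is a Markdown file with an H1 title, an optional blockquote summary, and H2 sections containing link lists of the form `- [name](url): notes`. A minimal sketch, assuming that layout, that extracts the sections and links an indexer such as Cursor's docs feature might follow. It is not Cursor's implementation, and the sample file and example.com URLs are made up.

```python
import re

# Matches list items like "- [Title](https://...): optional notes".
LINK = re.compile(r"^\s*-\s*\[([^\]]+)\]\(([^)\s]+)\)(?::\s*(.*))?$")


def parse_llms_txt(text: str) -> dict:
    """Parse an llms.txt-style document into title, summary, and sectioned links."""
    result = {"title": None, "summary": None, "sections": {}}
    section = None
    for line in text.splitlines():
        if line.startswith("# ") and result["title"] is None:
            result["title"] = line[2:].strip()
        elif line.startswith("> ") and result["summary"] is None:
            result["summary"] = line[2:].strip()
        elif line.startswith("## "):
            section = line[3:].strip()
            result["sections"][section] = []
        elif section is not None and (m := LINK.match(line)):
            title, url, notes = m.groups()
            result["sections"][section].append({"title": title, "url": url, "notes": notes or ""})
    return result


sample = """# Example Docs
> Documentation for a hypothetical tool.

## Guides
- [Quickstart](https://example.com/quickstart.md): Install and run
- [Metrics](https://example.com/metrics.md)
"""
doc = parse_llms_txt(sample)
print(doc["title"], [link["url"] for link in doc["sections"]["Guides"]])
```

Because the format is plain Markdown with predictable structure, a tool can fetch one file and know exactly which pages to index.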

36:00 So let's see what it's going to do. All right. It already seems much cleaner, based on what it's done. So this is what it suggests.
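The measure Cursor proposed is not shown in the transcript. As an illustration of what "number of mentions of DuckDB across all posts" could mean, here is a Python sketch that counts posts whose title matches a case-insensitive pattern; in SQL, a measure like this is commonly written as a filtered count such as `COUNT(*) FILTER (WHERE title ILIKE '%duckdb%')`. The `title` field and the spelling variants in the pattern are assumptions.

```python
import re
from typing import Iterable, Optional

# Assumed spelling variants: "DuckDB", "duckdb", "Duck DB".
DUCKDB_PATTERN = re.compile(r"\bduck\s?db\b", re.IGNORECASE)


def count_mentions(titles: Iterable[Optional[str]], pattern=DUCKDB_PATTERN) -> int:
    """Count posts that mention the term at least once (not total occurrences)."""
    return sum(1 for t in titles if t and pattern.search(t))


# Made-up titles; None stands in for posts without a title (e.g. comments).
posts = ["Show HN: DuckDB 1.2", "Why I use duckdb", "Duck DB vs SQLite", "Postgres tips", None]
print(count_mentions(posts))  # 3
```

Whether to count posts or occurrences, and which spellings to accept, are exactly the kinds of decisions worth pinning down in the metric definition rather than leaving to each prompt.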

36:14 Yeah, that's exactly right. Okay, that looks pretty good. Let's see if it actually works. That, of course, is the... Yeah. And so now, if I go to the UI, is that the best place to see

36:28 where I should look? You should look in metrics and see if it actually added this. It should have. Did you accept the edit? Okay, it's there, right? All right, it's there. Now go to the dashboard and see.

36:42 Let's see if it actually... it should be at the bottom. Okay, go to "All." I can filter this one.

36:55 Yeah. Okay. Can you see DuckDB mentions? Wow. There we go. Okay. By the way, click on DuckDB mentions. Click on the one... that'll bring up a bigger graph. All right. And now you can actually do a comparison.

37:09 We're looking at the last 12 months. Let's do a year-over-year comparison; there's a toggle up on the right. Wait, I only have data from 2024 and 2025. Okay, so that's over the months. So I think this was the DuckDB UI... I can tell you the feature: this was Instant SQL.
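A year-over-year comparison needs a matching prior period, which is why the toggle is of limited use when the data only covers 2024 and 2025. A minimal sketch, assuming monthly totals keyed by `(year, month)` with made-up values, that returns `None` when the prior-year month is missing instead of inventing a comparison:

```python
from typing import Optional


def yoy_change(totals: dict[tuple[int, int], float], year: int, month: int) -> Optional[float]:
    """Fractional change vs. the same month one year earlier, or None if unavailable."""
    current = totals.get((year, month))
    prior = totals.get((year - 1, month))
    if current is None or prior is None or prior == 0:
        return None
    return (current - prior) / prior


monthly_mentions = {(2024, 3): 40.0, (2025, 3): 50.0, (2025, 4): 55.0}  # made-up values
print(yoy_change(monthly_mentions, 2025, 3))  # 0.25
print(yoy_change(monthly_mentions, 2025, 4))  # None: no April 2024 data
```

Returning `None` rather than zero keeps "no comparison possible" distinct from "no change."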

37:31 Yeah, this one. No, actually that was last year. Sorry, I'm mixing things up. But it's probably due to a major release or specific features. So, this is interesting. What we've done so far, just to recap, is that we started from the CLI, right?

37:54 So I have my Rill process here, which is running, and I have a UI, and it generated some files for me, which are mostly YAML files, right? That's how I configure my metrics. I specified the entry point for the data: it was a public S3 bucket with

38:21 one Parquet file for this data. Then what we tried is to use Cursor and prompting. We first asked for the number of mentions of DuckDB across all posts. Fun fact: it tried to use the DuckDB MCP server, which is a tool to run

38:39 the query directly from the agent. But I didn't want that, so I specified "I just want you to edit the file," and I referenced the Rill Data documentation that I had added to Cursor, and it was all good. Then I can directly inspect the result. It also feels like BI development

39:01 is now much closer to developing a web interface, right? You write a bit of HTML and you get instant feedback. That's... yes.

39:13 Does that resonate with you? Absolutely. I think one of the key elements here is that, because DuckDB is the engine under the hood, each time you make a change to your metrics definition, if you wanted to refine the DuckDB mentions metric, for example, you see the result instantly, right? If this were running on

39:33 Snowflake or BigQuery, you wouldn't have that same feedback loop. I mean, it's all running locally, in fact, right? Yes. Your Apple silicon is just crunching through that DuckDB database, and the experience of that local development loop is pretty joyful, right? Yeah. What do

39:53 you... So we've already seen a bit. Maybe you want to dive into a demo focused more on MCP? And just before you prepare your screen, I can give a quick intro for people who don't know. Basically MCP... I have a video

40:12 about that on the MotherDuck YouTube channel too. So if you go

40:19 and search for "MotherDuck MCP," you're going to see my beautiful face with another cap on YouTube. But basically I just wanted to steal some assets from my past videos.

40:36 And basically, what's happening here with MCP, which was developed by Anthropic: it's a common way

40:46 for AI to talk to different services. Instead of developing, every time, an integration or API for Slack or a given service, or here, for example, DuckDB, you have a common interface, so people can just add the DuckDB MCP server within Cursor, and then Cursor can run queries using

41:08 DuckDB. That's roughly how it works. Your MCP server can run on a remote server: for example, one popular MCP server nowadays is Supabase, which is hosted Postgres. People can say, "Create a table for this specific asset," and it's going to connect to the remote server and

41:32 create the DDL for you. You don't even need to go and connect to Supabase yourself once you've done the configuration. But the beauty of it with DuckDB is that, because everything runs locally, you can run the MCP server locally too. So DuckDB

41:47 is local, and the whole workflow looks like this: instead of

41:54 ...you write code... sorry, let me just roll back. This is the current standard workflow with AI: you ask it to write a data pipeline, it generates some code, and then you still need to test it against data, right? That's the loop that is a bit slow: you get the code, and

42:16 the LLM doesn't have context. It doesn't have the schema. We saw with the Rill demo that you can generate an AI dashboard by sending the schema to the LLM and getting a result back. That's basically the premise: with an MCP server for DuckDB, as in this demo, the LLM gets much

42:39 more context around the data, can query the data directly, and gets the result. So there's no "I generate the code and now I need to test it against my data." That's MCP in a nutshell for data. So if you're ready... Right. So one of the things that we're pretty excited

42:57 about at Rill is not just using

43:01 GenBI... by the way, can everyone see my screen there? Okay, great. We've certainly talked about leveraging LLMs for what we call GenBI, right? Generative AI for BI-as-code. And I was

43:17 glad, Mehdi, that you pulled off a great example there. Of course we can go further: building entire projects, vibe coding an entire dashboard stack as a Rill GitHub project. This is actually one such project, a DuckDB commits project, and it's actually living

43:39 in a GitHub repo. We published it to Rill Cloud. We have this GitHub analytics project, and again, this is just BI-as-code: we've got that same set of models, sources, and dashboards. So this is now a dashboard, and of course we can interact with it and ask

43:58 questions of it ourselves. A very common one is: who are the top committers to DuckDB? No surprise here that Mark is at the top. Mark is the co-creator of DuckDB, for people who don't know. And so you can zoom in and

44:20 look at Mark's commits. You can also see he's got two usernames here. You can see how he compares to Hannes over time, right? So anyway, there are lots of interesting things we can do here exploring the data. Sort of,

44:38 this is data exploration as clicks. Again, we make that analogy: exploration as clicks versus exploration as code. Well, one of the things we've recently started to do is build out a Rill MCP server. We thought: what if, instead of having to click through and ask questions about which files are being

44:59 touched, what the nature of those files is, if we were to break down the different values here, like the commit message or the file path being touched... you can get some interesting insights here to see which file paths are being

45:19 most frequently touched. But that's where BI-as-code can be powerful. So what we've actually done... I'm actually not going to...

45:31 I wish I could run it live, but I think I've got some JSON error.
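Claude Desktop reads MCP servers from a JSON file (`claude_desktop_config.json`) with a top-level `mcpServers` object whose entries give a `command`, `args`, and optional `env`. One stray character makes the whole file unparseable, which is the kind of "line 4 column 45" error that comes up later in the demo. This sketch checks a config string with Python's standard `json` module and reports where parsing failed; the server name, command, and environment variable are placeholders, not Rill's actual setup.

```python
import json
from typing import Optional


def check_mcp_config(text: str) -> Optional[str]:
    """Return None if the text parses and has an "mcpServers" object, else a message."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        # JSONDecodeError carries the 1-based line and column where parsing failed.
        return f"line {err.lineno} column {err.colno}: {err.msg}"
    if not isinstance(config, dict) or not isinstance(config.get("mcpServers"), dict):
        return "missing 'mcpServers' object"
    return None


# Placeholder server entry; not Rill's actual command or settings.
good = '{"mcpServers": {"example": {"command": "example-mcp", "args": [], "env": {"API_TOKEN": "..."}}}}'
# Same shape, but with a trailing comma on line 4.
bad = '{\n  "mcpServers": {\n    "example": {\n      "command": "example-mcp", }\n  }\n}'
print(check_mcp_config(good))  # None
print(check_mcp_config(bad))   # reports line 4; exact wording varies by Python version
```

Running a check like this before restarting the client turns a silent "server didn't load" into a precise location.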

45:37 So I'm just going to show what I ran right before I got on this call. We've built an MCP server with Rill. It's actually running in a... actually, maybe if I get Docker open it will be okay. I might try to do it from scratch. Let's see. Okay. So just to

45:56 give context: is the MCP server running locally or in the cloud right now? It's running locally, actually. Okay. Yeah, it's running locally. So everything is local here. Okay. Let's see if this is right.

46:11 I've got my Claude Desktop MCP

46:16 config. There are a couple of keys in there which we're going to have to rotate after this call, in case folks want to start hammering our OpenAI API key. So

46:27 we've got that running, and then, in theory, we can actually connect to the Rill MCP server.
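Under the hood, MCP clients and servers exchange JSON-RPC 2.0 messages. After an initialization handshake (skipped here), a client can call `tools/list` to discover what a server offers and `tools/call` to invoke a tool by name with arguments. This sketch only builds the request payloads and opens no connection; the tool name `query_metrics_view` and its arguments are hypothetical, not the Rill MCP server's actual tools.

```python
import itertools
import json
from typing import Any, Optional

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request with an auto-incrementing id."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Discover the tools the server exposes.
list_tools = jsonrpc_request("tools/list")
# 2. Invoke one of them (hypothetical tool name and arguments).
call_tool = jsonrpc_request(
    "tools/call",
    {
        "name": "query_metrics_view",
        "arguments": {
            "metrics_view": "duckdb_commits",
            "measures": ["commit_count"],
            "dimensions": ["author_name"],
            "time_range": "2025",
        },
    },
)
print(list_tools)
print(call_tool)
```

This discover-then-call pattern is what lets the agent in the demo first inspect the available metrics views before querying them.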

46:39 Now, I think this may have to be like a baking show, where I show you what would actually happen. But let's just try. If I copy in this question, I'm going to ask it: "Using the Rill MCP server, can you answer the following: who are the top committers to DuckDB in..." Okay,

46:58 it didn't like that. Let's see. "Using the Rill MCP server... who are

47:04 the top committers?" Let's see what actually happens. I don't know. So here you have the MCP configuration. It's like the one I have in Cursor, but you added it to the Claude app, right?

47:16 That's correct. We have this thing called the Claude Desktop config here. In it we have our OpenAI API key, our Rill service token, and some other settings. I'm looking to see the Rill organization name. Okay, that's all there. Something's going on.

47:33 Format preset. Okay, I'm looking at some other code, but I think I've got a little bit of a JSON error. Syntax error. Yeah, I

47:46 may have solved it. Okay. No, there's an error reading a property name at position A2, line 4, column 45. I don't know what that is. I'm not going to waste our viewers' time, but what I will show is that if you do fix that JSON error, you can actually get the MCP server working, and here's just the

48:05 text that we got. Yep. Exactly. So it's going to send a request and basically say: "Okay, let's look and see what different models I have access to. What dimensions do I have access to? I see there's a metrics view specifically for DuckDB commits.

48:26 Let's examine its structure and understand what data we have available." Next it's going to say, "I need to get the available time range so I can properly query for 2025 data." So it's making queries to this local, DuckDB-powered, embedded

48:44 Rill MCP server, and it's able to figure out who the top committers are. It's using our aggregation API, and you can see it pulls back JSON and then renders that as text. But even more interestingly, it does things like saying, "It's worth noting that Mark and Mark Raasveldt

49:05 are the same person," right? These are the kinds of things a smart analyst would do. And I'll say, "Okay, can you combine these two and generate a new top committer list?" So it does that. And of course, no surprise, Mark ends up well above everyone, on top for,

49:25 you know, January to February 2025.
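The "Mark" / "Mark Raasveldt" fix is an identity-resolution step: map every alias to one canonical author, then re-aggregate. A minimal sketch with made-up commit counts and a hand-written alias map; in the demo, Claude spotted the duplication itself and merged the two on request. The names `dev_a` and `dev_b` are placeholders.

```python
from collections import Counter


def top_committers(commit_authors: list[str], aliases: dict[str, str], n: int = 3) -> list[tuple[str, int]]:
    """Count commits per canonical author after resolving aliases."""
    counts = Counter(aliases.get(author, author) for author in commit_authors)
    return counts.most_common(n)


# Made-up data: one person appears under two author names.
authors = ["Mark"] * 5 + ["Mark Raasveldt"] * 4 + ["dev_a"] * 6 + ["dev_b"] * 2
aliases = {"Mark": "Mark Raasveldt"}
print(top_committers(authors, {}))       # [('dev_a', 6), ('Mark', 5), ('Mark Raasveldt', 4)]
print(top_committers(authors, aliases))  # [('Mark Raasveldt', 9), ('dev_a', 6), ('dev_b', 2)]
```

Without the alias map, the split identity pushes the real top committer below someone with fewer commits, which is exactly the error a careful analyst would catch.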

49:29 And the last thing I added here: I said, "Can you look at the commits and, based on the volume of code changes, tell me what insights you can draw?" Again, it's going to run some different queries and look at the past year. It's going to look at where the

49:47 largest commits have been. It looks at top commits by file changes and category. That's very similar to what I might have done here, right? I was looking at top commits by file path, and I can click around and see if I can get some insights into the file

50:05 paths, the file extensions, and the file names. But the MCP server can just run; it's very patient. So it went through, did a lot of analysis, and finally came up with: "Based on my analysis of DuckDB commits last year, here are key insights about the types of commits." All of this is generated

50:23 in real time, right? It's not taking more than the time it takes to generate the tokens. And what we get out of it: core processing was obviously a high priority, along with SQL syntax extensions, PySpark compatibility, performance optimizations, and so on. So I think what we see here is that this starts to be

50:43 something you could throw at any GitHub repository, right? Many of us have GitHub repositories, and not all of them are public. You could use the same codebase and say, "Give me some insights," right?
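Several of the questions the agent answered reduce to grouping changed files by path or extension. A sketch of that aggregation using Python's `pathlib`, over a made-up list of changed paths (not actual DuckDB commit data):

```python
from collections import Counter
from pathlib import PurePosixPath


def changes_by_extension(paths: list[str]) -> Counter:
    """Count changed files per extension; files without one are grouped as '(none)'."""
    return Counter(PurePosixPath(p).suffix or "(none)" for p in paths)


# Made-up changed paths for illustration.
changed = [
    "src/parser/transform.cpp", "src/main/client.cpp", "src/function/sum.cpp",
    "src/include/parser.hpp", "src/include/client.hpp",
    "test/sql/select.test", "tools/pythonpkg/setup.py", "Makefile",
]
print(changes_by_extension(changed).most_common(2))  # [('.cpp', 3), ('.hpp', 2)]
```

An agent with query access can run many such groupings in sequence, which is what makes the "very patient" exploration in the demo cheap.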

50:59 What are people in the Polars project focused on? What's happening in the Apache Iceberg community? That's the kind of insight that might take hours, even for a smart data scientist or data analyst, but here we're able to generate that

51:20 insight with just a few prompts, in a matter of minutes. It's extremely exciting to see what's possible. And I think for our team, and I'm sure others have had similar experiences, this is that aha

51:39 moment where you think, "Oh my gosh, there's intelligent life inside of..." When you combine LLMs with something like a metrics layer, you really start to have a level of intelligence that isn't possible with what people can do pointing and clicking through an interface. So I'll pause there. Yeah,

52:03 no, that was super interesting. And by the way, if there are docs on your MCP server, I guess they're on docs.rilldata.com.

52:14 Yes, and we're actually going to be rewriting it. Today the Rill MCP server is written in Python, because that was the fastest way to get going. Our plan is, in the coming weeks, to rewrite it in Go, and then we can embed it directly in the Rill binary.

52:32 So you won't have to jump through the hoops I just jumped through to get it working. It will be embedded, and it will load as soon as you start a Rill server. Oh, good. And I'm curious what you think, because here the flow was really top-down, and

52:52 I would say really a discussion. But people today are used to dashboards, even if maybe they're not looking at those dashboards. How do you see those two coexisting? I mean, I think there are different modalities of data analysis and exploration, right? So I

53:15 think one modality is a dashboard. Just like you look at your car's dashboard to find out how much gas is in the tank, what your engine temperature is, how much wiper fluid you have left, a lot of people use that for their business:

53:31 they're looking at metrics, right? Every day they check their MRR, their MAU, and other key business metrics. For the exploratory use case, for root cause analysis or insight generation, I do think a notebook modality is probably more appropriate. And so that

53:51 notebook, you saw, is that iterative series of questions. I don't think text is going to be the dominant output. It will be the input.

54:01 But I think if we can generate relevant visualizations to tell the story of the insight, that's what folks are going to use. So ultimately it's a bit of both. This is where companies like Hex have obviously leaned into the notebook style. Certainly the DuckDB UI that MotherDuck

54:23 has built has more of that notebook-style interface. So I think that is probably what we'll see.

54:28 And it's an open question where the insights will live. Will the DuckDB UI send visualizations to Claude, and people will live in Claude? Or will people live in the DuckDB UI, and Claude will just be an LLM called by the MCP server?

54:49 Yeah, that's a very good point. We still don't really know. We need to serve our users and meet them where they are, and at the moment they're a bit everywhere, unless we see a majority emerge. What's also going to be hard is that there's still

55:06 a lot of competition today. You're using Claude, but a lot of people are using ChatGPT, and even if the integration looks the same, providing the same smooth experience, like adding graphs and so on, might be a bit different. But it is

55:27 interesting, and I think there's a lot of opportunity for DuckDB and Rill around personal data, like finance data. I'm not comfortable pushing that data to LLMs, so having everything run locally matters. Enterprises will probably have specific requirements too. But I think what

55:53 is really interesting is that you give the prompt, and instead of just returning text, there's much more execution and schema context involved. And I was thinking, because that was a bit of a draft, an ad hoc exploration: at the end of

56:16 an exploration, you usually want to share it as a clean, notebook-style story where people can follow the narrative, right? If you could say, "Okay, clean this up and create the story," and then Claude or ChatGPT generates a shareable link, I think that might be the next step.

56:38 But again, it's difficult to see, because Claude is optimizing for a specific use case, right? Is BI going to work for that use case? I think it's too soon to tell. Is there anything you want to finish on, to be a bit more pessimistic? Because we've

56:59 been very optimistic, with our demo, which looks nice. What is the downside? Where do you think the technology might fail?

57:13 Yeah. I'll candidly say that at this stage of building, it's

57:24 hard to be pessimistic, just watching how fast things are moving and how quickly they're improving. Look, I think the only thing one could be pessimistic about is that this type of technology revolution brings change, and so there's going to be a lot of disruption. And I'll say

57:45 this: when I go into the Reddit community in particular, which tends to be a bit more... they always say the pessimists have the most realistic worldview. I think there is a lot of fear among data analysts and data engineers: "Am I just going to be part of these

58:02 layoffs and be replaced by an AI?" I love the data community, and I hope we find a way to have an abundance mindset as we use these tools, and that this is a complement and an augmentation, not a

58:20 replacement. Yeah, I think that's a bigger debate, but I'm also positive about it. We've seen a lot of people saying that AI will not replace people, but it will definitely replace people who are not using AI, right? Yes. So this is where you need to find the middle ground. And we saw in the

58:41 demo that stuff breaks. You need to fix the JSON syntax. You need to tell it not to use the DuckDB MCP server, but rather the Rill MCP server. So you always need a kind of control view. And we talked about metadata at the start, and how

59:04 your notion of revenue is defined inside the company. How do you define a customer? Sometimes it's only in people's heads. That's the reality of most startups, right? So you need to go talk to people to get the relevant metrics; there's no secret to it. Mike, we're

59:22 already out of time. It was a pleasure to have you. Thank you, everyone, and I'll see you around in another video or another Quack & Code episode. Have a good week. Thank you all.

59:40[Music]

FAQS

What is BI as code and why does it matter for AI-generated dashboards?

BI as code means your entire BI stack, including data sources, data models, semantic layer, and dashboards, is defined as declarative code artifacts in a GitHub repository, similar to infrastructure as code. This matters for AI because LLMs are very good at generating and modifying code. When you replace point-and-click BI interfaces with code-based definitions, AI can generate and iterate on dashboards far more expressively than any drag-and-drop UI could allow.

Why do text-to-SQL approaches struggle with business metrics like revenue?

Text-to-SQL struggles because business concepts like "revenue" may not exist as a column in any of your 200 database tables. It might be the sum of a price column minus refunds from an orders table. While LLMs have good world models and can often infer these relationships, they perform much better when working against a curated semantic layer or metrics layer rather than raw database tables. Without this layer, LLMs face the same challenge as a junior data engineer handed the keys to an undocumented data warehouse.
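A minimal sketch of that revenue example, assuming hypothetical `Order` and `Refund` records: the semantic layer holds one agreed definition (sum of order prices minus refunded amounts), so an LLM or a person asking for "revenue" gets the same number instead of re-deriving it from raw tables. The `METRICS` lookup is an illustrative stand-in for a real metrics layer.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: int
    price: float


@dataclass
class Refund:
    order_id: int
    amount: float


def revenue(orders: list[Order], refunds: list[Refund]) -> float:
    """Hypothetical metric definition: gross order value minus refunds."""
    return sum(o.price for o in orders) - sum(r.amount for r in refunds)


# Illustrative semantic-layer lookup: business term -> metric definition.
METRICS = {"revenue": revenue}

orders = [Order(1, 100.0), Order(2, 50.0), Order(3, 25.0)]
refunds = [Refund(2, 50.0)]
print(METRICS["revenue"](orders, refunds))  # 125.0
```

A text-to-SQL model working only from raw tables would have to guess that refunds matter; with the definition in the layer, that decision is made once.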

Is English easier than SQL for expressing analytics needs?

For about 80% of use cases, English is sufficient, like asking to truncate a timestamp or convert time zones. But for precise requirements, SQL and code do better. Michael Driscoll gives the example of "last three days of revenue": does that include today's partial data? The current partial hour? How do you compare three complete days to two days and eight hours? These time-related nuances are where English becomes ambiguous and code provides the precision needed.
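The ambiguity becomes concrete once you write the window down. A sketch, using Python's `datetime`, of two defensible readings of "last three days" evaluated at the same moment: three complete days ending at midnight, versus a rolling 72 hours that includes today's partial data. The timestamp is arbitrary and the function names are hypothetical.

```python
from datetime import datetime, timedelta


def last_n_complete_days(now: datetime, n: int = 3) -> tuple[datetime, datetime]:
    """[start, end) covering n full calendar days, excluding today's partial day."""
    end = now.replace(hour=0, minute=0, second=0, microsecond=0)
    return end - timedelta(days=n), end


def rolling_window(now: datetime, n: int = 3) -> tuple[datetime, datetime]:
    """[start, end) covering the n * 24 hours up to now, including partial data."""
    return now - timedelta(days=n), now


now = datetime(2025, 5, 20, 8, 0)
print(last_n_complete_days(now))  # May 17 00:00 up to May 20 00:00
print(rolling_window(now))        # May 17 08:00 up to May 20 08:00
```

Both windows are 72 hours long, yet they cover different data, so the same English question can return two different revenue numbers.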

How does an MCP server enable AI-powered data analysis?

An MCP (Model Context Protocol) server gives AI agents direct access to query data and explore schemas, eliminating the traditional cycle of generating code and then testing it separately. In the demo, a Rill MCP server, running locally and powered by DuckDB, let Claude iteratively query a DuckDB commits dataset, discover metrics, analyze commit patterns, and generate insights from just a few prompts. The AI even noticed that two usernames belonged to the same person and, when asked, combined them into a single top-committer list. Learn more about MCP with DuckDB.

Should BI tool companies optimize for AI agents or human users?

Michael Driscoll argues companies should anticipate that AI agents will become significant users of their tools, alongside humans. He notes that when building BI as code frameworks, following established naming conventions from projects like Vercel and Node.js helps LLMs generate correct code. Violating established precedents confuses models, similar to how it confuses human developers. SQL's longevity as a standard is actually reinforced by AI, since LLMs have been trained on vast amounts of SQL from Stack Overflow and GitHub repositories.
