Boosting datasets discoverability for AI using DuckDB

2024/05/31

TL;DR: Hugging Face's dataset viewer uses DuckDB for full-text search and filtering across 100,000+ ML datasets. Plus, the new DuckDB Hugging Face extension lets you query any HF dataset directly from the CLI.

What is Hugging Face?

A collaborative open-source platform for machine learning:

- Datasets: 100,000+ public datasets (growing daily)

- Models: 600,000+ models with weights and metadata

- Spaces: Python apps (Gradio/Streamlit) showcasing models

- Posts: Social media for ML—follow researchers, comment, like

Key insight: Everything links together. Models reference their training datasets. Spaces demo those models. Collections curate related resources.

The Dataset Viewer: How It Works

The Challenge

Datasets can be terabytes. Users want to explore without downloading everything.

The Solution: Auto-Conversion to Parquet

- All datasets (CSV, JSON, Parquet) auto-convert to Parquet

- Stored in a special refs/convert/parquet branch

- Features built on top of this standardized format

Features Powered by DuckDB

Full-Text Search (BM25):

- Creates DuckDB file with FTS index

- Downloads file per-query (moved to local SSD for 7x speedup)
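To make the ranking step more concrete, here is a minimal pure-Python sketch of textbook BM25 scoring. It is not DuckDB's implementation: the naive tokenizer, the `k1` and `b` defaults, the non-negative IDF variant, and the sample reviews are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Return one BM25 score per document for the given query.

    Textbook Okapi BM25; k1 and b are common defaults, assumed here
    rather than taken from the dataset viewer.
    """
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(doc) for doc in tokenized) / n_docs

    # Document frequency: in how many documents each term appears.
    doc_freq = Counter()
    for doc in tokenized:
        doc_freq.update(set(doc))

    scores = []
    for doc in tokenized:
        term_freq = Counter(doc)
        score = 0.0
        for term in tokenize(query):
            if term not in term_freq:
                continue
            df = doc_freq[term]
            idf = math.log((n_docs - df + 0.5) / (df + 0.5) + 1)
            tf = term_freq[term]
            # Longer-than-average documents are penalized through b.
            length_norm = 1 - b + b * len(doc) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


# Hypothetical movie reviews, in the spirit of the IMDb dataset.
reviews = [
    "a wonderful film with a wonderful cast",
    "the plot was dull and the pacing was slow",
    "wonderful soundtrack but a dull script",
]
scores = bm25_scores("wonderful cast", reviews)
ranking = sorted(range(len(reviews)), key=lambda i: scores[i], reverse=True)
```

Documents that contain none of the query terms score zero, which is why a full-text index can skip them entirely instead of scanning every row.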

Filtering: Query datasets by column values
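As described in the episode, filtering boils down to a plain SELECT * over the ingested table with the user's WHERE condition appended. The sketch below illustrates that idea only; `sqlite3` from the standard library stands in for DuckDB so that it runs anywhere, and the table name `data`, its columns, and its rows are hypothetical.

```python
import sqlite3


def build_filter_query(table, where_clause):
    """Append a WHERE condition to a plain SELECT * over the ingested table."""
    # The clause is interpolated as-is; a real service would need to validate it.
    return f"SELECT * FROM {table} WHERE {where_clause}"


# sqlite3 is only a stand-in here; the viewer runs its filter against a DuckDB file.
con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE data (text TEXT, label INTEGER)")
con.executemany(
    "INSERT INTO data VALUES (?, ?)",
    [("great movie", 1), ("terrible acting", 0), ("loved it", 1)],
)

query = build_filter_query("data", "label = 1")
rows = con.execute(query).fetchall()
```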

Statistics: Computed with Polars (switched from DuckDB due to concurrency issues in their workload)

Current Limitations

- Only the first 5GB is indexed for large datasets (FineWeb, for example, is 74TB)

- Working on scaling beyond this

The DuckDB Hugging Face Extension

Query any HF dataset directly from DuckDB CLI using the hf:// URL scheme. The extension supports:

- Public datasets without authentication

- Private datasets with a secret token

- Creating local tables from remote HF data
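The three capabilities above can be sketched as small helpers that build the statements you would paste into the DuckDB CLI. This is an illustration under assumptions, not a reference: the path layout `hf://datasets/<owner>/<dataset>[@<revision>]/<path>`, the `~parquet` revision shorthand, and the `TYPE HUGGINGFACE` secret are written as I understand them from the DuckDB documentation and are worth verifying there; the helper names, the secret name `hf_token`, and the `**/*.parquet` glob are hypothetical. The `stanfordnlp/imdb` dataset is the one used in the episode.

```python
def hf_path(owner, dataset, file_pattern, revision=None):
    """Build an hf:// path: hf://datasets/<owner>/<dataset>[@<revision>]/<file_pattern>."""
    repo = f"{owner}/{dataset}"
    if revision:
        repo += f"@{revision}"
    return f"hf://datasets/{repo}/{file_pattern}"


def create_table_sql(table, path):
    """SQL that materializes a remote Hugging Face file as a local DuckDB table."""
    return f"CREATE TABLE {table} AS SELECT * FROM '{path}';"


def create_secret_sql(token):
    """SQL that registers a Hugging Face token, needed only for private datasets."""
    return f"CREATE SECRET hf_token (TYPE HUGGINGFACE, TOKEN '{token}');"


# Public dataset, read from the auto-converted Parquet revision (assumed shorthand).
imdb_path = hf_path("stanfordnlp", "imdb", "**/*.parquet", revision="~parquet")
print(create_table_sql("imdb", imdb_path))
```

Keeping the owner, dataset name, and file pattern as separate arguments mirrors the point made in the episode: a dataset is identified by the organization, the dataset name, and the path within the repository.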

Text-to-SQL with MotherDuck's NSQL Model

Demo using MotherDuck's text-to-SQL model on HF:

- Get schema from DuckDB

- Build a prompt with the schema and natural language question

- Run model locally (12GB GGUF format)

- Execute generated SQL
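The steps above can be sketched in a few lines. The prompt template, the helper names, and the hand-written completion are hypothetical; this is not the documented prompt format of MotherDuck's model, and the model call itself is left out.

```python
def build_prompt(schema_ddl, question):
    """Combine the table schema and a natural-language question into one prompt."""
    return (
        "### Schema:\n"
        f"{schema_ddl.strip()}\n\n"
        "### Question:\n"
        f"{question.strip()}\n\n"
        "### SQL:\n"
    )


def extract_sql(completion):
    """Keep only the first statement of the model output (naive split on ';')."""
    statement = completion.strip().split(";")[0].strip()
    return statement + ";"


# Step 1: the schema would come from DuckDB; here it is written by hand.
schema = "CREATE TABLE imdb (text VARCHAR, label BIGINT);"

# Step 2: build the prompt from the schema and the question.
prompt = build_prompt(schema, "How many reviews are positive?")

# Step 3: a hand-written stand-in for what a local text-to-SQL model might return.
fake_completion = "SELECT count(*) FROM imdb WHERE label = 1; -- done"

# Step 4: the extracted statement is what would be executed in DuckDB.
sql = extract_sql(fake_completion)
```

Splitting on the first semicolon is a shortcut that breaks on string literals containing `;`, so generated SQL should be reviewed before it is executed.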

The challenge: Text-to-SQL works great on single tables. Real applications with messy schemas and cryptic column names (looking at you, SAP) remain unsolved.

Loading HF Datasets into MotherDuck

MotherDuck can load directly from Hugging Face URLs, allowing you to query large datasets in the cloud without downloading locally.

What's Next for Dataset Viewer

- Scale beyond 5GB indexing limit

- Ethics/content moderation (legal issues, abuse detection)

- Better discoverability for the 100K+ datasets

Transcript

4:58 Hello everybody, how's it going, and welcome to another episode of Quack and Code, where we discuss data tech topics and do a part where we code. Today

5:11 we have an interesting guest working at Hugging Face. If you haven't used Hugging Face, or if you do use Hugging Face, please let me know in the comments. We have already seen someone say that they use Hugging Face but haven't used the dataset viewer, and we'll talk about that. Hugging Face has been

5:33 around DuckDB topics multiple times already: at DuckCon in Amsterdam, and in Paris, where we did a DuckDB meetup in French (shout out to K for his talk). Now we have someone else again, Andrea, who is also working on the dataset viewer, and I'm super excited to have her on the show.

5:59 So, a warm welcome, Andrea. How are you

6:03 doing? Hello, hello everyone, I'm happy to participate in today's podcast. Nice. So it's the afternoon for you right now, right? Yes. Nice. Evening for

6:19 me, and maybe the morning for

6:24 someone else. Before diving into the different topics, what exactly do you do, and what's your background? How did you come to software engineering and then to working at Hugging Face? Okay. I am Andrea S. I live here

6:47 in Bolivia, in the city of Cochabamba, which is

6:52 in the heart of South America. I am a systems engineer and I also have a master's degree in business intelligence, all around data warehousing, data marts, and ETL processes. Before joining Hugging Face, which was one and a half years ago, I worked in different positions. I used to change jobs about every year, which sometimes is not seen well, but I think...

7:21 I've done that as well, so don't worry. You understand, yeah, because in many interviews they asked me why I change jobs so frequently. But I think all of these changes gave me a broad understanding of software engineering. I worked as a full-stack developer, then I realized I didn't much like user interfaces,

7:48 so I became a backend developer, but I was still looking for my passion, which was actually data. I love working with SQL and all the data stuff, and now I am

8:02 here at Hugging Face working as a software engineer and something like a data engineer, because I am on the dataset viewer project, which I can tell you about in more detail. Yeah, in a couple of minutes. But that's interesting: what was it about data that made you interested in jumping in from the backend, and the

8:25 project itself? Actually, I love it when I have a

8:33 data inconsistency issue, for example a quality issue, because I like to investigate and troubleshoot. Sometimes I feel like a Sherlock Holmes of data, because it's super exciting when you have to look for clues across many different sources, and finally you find the inconsistency and can fix it. That's what I like more,

9:00 more than interfaces or services, actually. Yeah, so it's really the relationship with data that makes it interesting. But it's true that debugging a data pipeline can sometimes be quite challenging, especially if you're not the owner of the data source. I'm just curious, how do you

9:26 handle that? It was not easy, because there are data sources with messy schemas and messy data, and if there is a legacy system you have to ask about the meaning of the data, the sources, the relationships. Sometimes it's nearly impossible, because even the owners don't know what their data does, and you

9:56 have to assume some clues and then prove them. That's why I like SQL: there are many interesting functions, window functions, you can create tables, and so on. That's why I like SQL more than other languages, because you can integrate everything into one

10:22 single source of truth. Yeah, it's really interesting, because I know a few folks who have more of a data frame mindset, and that's why they don't like SQL. It's nice to find people moving from the programming language interface to SQL. SQL is great because it's also a lower technical

10:46 barrier to entry, right? It's the common denominator for a lot of data profiles that are a bit less technical. So that's true. Let's do a short intro on Hugging Face first,

11:03 because I'm sure there are people who are aware of Hugging Face itself, who may or may not use the models or the Spaces. Could you give us an intro to the main components of what makes Hugging Face what it is today? I have the website here, you can see it.

11:28 Yep. So maybe you can do a walkthrough of the different things that Hugging Face has. Yeah. First, I like to

11:44 answer this question because it's a nice one. What we are at Hugging Face: we're a company that is trying to

11:58 democratize good machine learning. This is the mission. We are a collaborative open-source platform, and we try to cover the machine learning flow from end to end, providing libraries and tools, in this case the Hugging Face Hub, which is the main platform. Besides that, we work with other companies and research projects to create papers and release

12:26 new datasets, models, and so on. In this case you are on the Hugging Face Hub platform, which is like a GitHub of machine learning, I would say, but nowadays I see it more like a social network for machine learning, because you can create your profile (interesting) and you can even post about your thoughts and your investigations.

12:49 People can interact with it: they can like it, comment, or contribute, and I think that's a good thing for machine learning people. Now let's go to the sections. We have three main sections here. I would start with datasets. Yeah, go. You know, when we are working on a

13:14 machine learning process, we first need data. Here we have more than 100,000 datasets, and it's

13:23 growing every day. All of them are public and easy to access. What do people do here? We actually have two kinds of users for datasets, I would say: the ones who are looking for data to train models or do some data exploration, and other users who want to show or share their data with the community.

13:51 They are looking for hosting, for example. Here you can see the trending datasets. We will go through the dataset viewer itself later, but here you can filter by task. If you are looking for an image classification task, you can click on it and you will find a lot of data that you can download and

14:16 process and do whatever you need. You can even contribute: if you find something weird or a legal concern, we have a tab in the viewer where you can share your thoughts. You can also filter by language. Languages are, I think, really important nowadays, because there are a lot of models and data in

14:42 English, but we want to democratize this whole process, so we have to include Spanish, French, and other groups that are not included yet. That's a good point that

14:58 we often forget. I'm not a native English speaker, but I work in English and I'm really comfortable. I know, for example, that in DuckDB we have a community in Brazil that is really active and using DuckDB, but they mostly learn in Brazilian, in Portuguese I mean. So we often forget that people have

15:23 different ways of learning. Aside from the machine learning application, it's important to have diversity in the language. Yeah, it's very important, I think, because if you want to train a

15:41 solid model in a specific language you will need a lot of data, and models can often make mistakes across languages, even the current models. I've seen this

15:54 myself. Sometimes they fail.

15:59 Okay, let's continue with the second part, which is models. Once you have a dataset, the

16:07 next step users usually take is to train a model, or, in the same way, they can download a model and maybe run it locally, or they can share their model with the community. Here we have around 600,000. In the same way, you can filter by task depending on what you are doing. What is interesting here is that

16:35 there are some models that share their weights, the model file itself. Some of them, for example, if you click on one of them... not sure if this one does. Yeah, but they normally do, I think. Before continuing, maybe I can

16:59 explain a little bit about what a repository is in Hugging Face, which is the main entity in this whole Hub. Each project, each dataset, model, or Space, is like a GitHub repository, let's say, which is composed of

17:18 different files and the README file, which is the card; we call it the Space card or the model card. Maybe you can choose another one. Yeah. So the card has the information about the model or the dataset, where they say how users can use it, even download it, or how it was built.

17:44 But what I wanted to highlight is that some models even share which data they use, and they are linked to the datasets they were trained on. And in this part on the right, for example, you have a list of Spaces (we will get to Spaces). These

18:09 Spaces are like applications that users build with Python to showcase these models, their datasets, and other research. What is amazing for me here is that you can link your dataset, your model, and your Space, and you can see that the community is really building something interesting, all on top of open and free software.

18:39 I think it's important to know which data sources your model comes from, and if it's

18:48 open to inspect and audit, I think that's safer and more trustworthy for the community. Yeah, if it's a black box it's pretty difficult to

19:00 assess its legitimacy, and also the ethics around it and so on, and whether it's a good model. So far, we started with the datasets, you have 100,000 of them, and

19:18 models, which you can filter by application. There are a couple of models. You also explained the entity of a repository, which contains the models, and then there are the

19:39 Spaces, which are basically the applications where you can quickly

19:46 deploy your model, run it, and have a runtime over there. So you have the source data, you have the source model, and then you basically have the runtime. Yes. What I forgot to mention about Spaces is that everything you upload, you can do with a couple of lines of Python. All of them are

20:07 like mini applications where you don't need to know about infrastructure or even user interfaces, which for me is incredible, because I don't like HTML or CSS.

20:24 All of these applications are built with Python. There are two frameworks, Gradio and Streamlit, which give you components that you can use directly. Yeah, so Gradio and Streamlit are both frameworks for building applications, data apps, or data visualizations with the Python runtime, and

20:50 actually we've done a live stream with someone from the Streamlit team to talk about how DuckDB works with Streamlit, so that's great. And the Posts, maybe you can talk a bit about them? I think they're pretty new, right? Yes, I think it's been about three

21:13 months. You can post, and this makes

21:18 the Hub look more like a social network. I see. You can share your papers, you can follow people, and if you have a network of colleagues who are liking or commenting, you will see all of that activity as well. And as you see here, you have

21:43 references to the papers and the different Spaces of the person. It's kind of like a portfolio of everything they have interacted with: the models are here, and then the datasets.

21:59 Yeah. We now also have Collections, where you can create a set of resources for a specific topic. For example, I created a collection for text-to-SQL, where we have different models, Spaces, and datasets you can add. What is your username? It's aoria,

22:25 aor... no, without... yeah. So this is the collection you mentioned? Yes, and you can share this collection if you want to talk about a specific topic. That's nice, that's great. All right, we have already taken quite some time, but I think it was important to give a good overview of

22:48 Hugging Face in general.

22:53 I'm just looking quickly

22:57 at the chat: "loving this walkthrough", so people appreciate the walkthrough. So now we can move on to zooming in on the dataset viewer, because that's what you work on, is that correct? Yeah. Initially it was a library. We have

23:24 the datasets library, which is another project that mainly lets you download datasets from the Hub, and you can push to the Hub with a line of code. But that is using the library locally. What we are working on is the dataset viewer project. Previously it was named dataset server, but for

23:50 about a month it has been named dataset viewer, because it's basically... can you go to one of the datasets, for example?

24:01 Yeah. Okay, all of this is the viewer.

24:06 Yes. Initially, before I

24:11 joined the team, there was my colleague Sylvain, who told me that initially, since this was all like a GitHub repository, they only displayed the files as they were. We also have this README file, which is the dataset card, and they showed this card. But that was about three years ago.

24:40 Since then it has become more intuitive, I

24:45 think. They implemented showing the first 100 rows, so that we can

24:53 have a preview of the data. But nowadays

24:58 our team has grown; we

25:02 are 16 members, and what we want to do now is provide dataset users with a platform, a place where they can easily explore the data without having to download it. There are big datasets of gigabytes or terabytes; if you wanted to explore them or look for something, you would have to download

25:27 everything. That's not what we want. We want to make their task easier and friendlier, so that even people who are not familiar with the data itself can explore it and take advantage of it or use it in their models. Sorry to interrupt, just to wrap up: the dataset

25:51 preview is kind of like a super README, right? Coming back to the history from when you started: first there was just the card, which is the README, I believe, and then serving the dataset files, which are there in the data folder, right? Yeah,

26:13 these are the files. And so users supply Parquet or CSV, and the dataset viewer works on any type of file? Yeah, we support CSV, Parquet,

26:29 JSON, and WebDataset.

26:33 Maybe we can go to another dataset. This one is FineWeb, which was released a month ago. I think it's the biggest one, because it is 74 terabytes of information. It's still challenging for us to process this kind of dataset. Can you look for the IMDb dataset? No, in the

27:02 search next to Hugging Face. Yeah, there are

27:07 multiple of them. The second one. This one, yeah. This is a dataset

27:14 of movie reviews from IMDb. We have the viewer

27:21 fully working for some datasets, but it's still challenging for big datasets. This case is not too complicated. And here, do you want

27:35 me to go through the features or not? Yeah, what I'd like to understand, because we are also talking about DuckDB, is a bit of how this works behind the scenes. Behind the scenes, each project in the Hub is a repository. We have all the files stored in

27:59 S3. What we do is have a pipeline that

28:04 runs on each of these files to get insights about them. But first I can

28:11 explain the structure of a dataset. A dataset is composed of different configs (configurations, we call them), which you can use, for example, if you have a dataset in different languages: you will have one configuration for Spanish, one for English, one for French. If it's not a multilingual dataset, we have a

28:34 default config, which is named default. Then the config has different splits, which are subsets of the dataset. In machine learning, you know, we have the training set, the test set, and the evaluation set. So this is our structure: one dataset has different configurations and different splits. That's why, in the dataset viewer, you have

29:03 the split; you can open the dropdown. In this case we have... yes, that's the configuration. In this case we have only one configuration, which is the default, because this dataset only supports English, and we have three splits. Okay, I see. This is the first part

29:27 of the structure. If you go to the files, you will see that all of this is stored in our S3 buckets. These are

29:38 our sources, and all of these files can be in different formats. Now you can go to the dataset viewer again. What we used to have about two years ago was only the first 100 rows, not all the data. What was a game changer for us was

30:03 when we introduced... well, actually Sylvain, my colleague, introduced the auto-conversion to Parquet files from all of these different formats. We needed to start building new features on one specific file format, because it's complicated to work on all of them, and some of them are not optimized

30:28 for reading or for compression. And God knows that parsing a CSV schema can sometimes be a nightmare. Yeah, that's why we chose

30:40 Parquet. I think it's the best one, as you mentioned, because of compression, since we have to think about storage as well. On top of Parquet we started to build all of these features, like the pagination you have at the bottom. This allowed users to go through the full dataset, which was not

31:05 possible before; you had just a preview, and now you can browse the full dataset. It's still complicated for big datasets, because if the data in storage is, say, 10 terabytes and we had to convert or copy the full dataset to Parquet files, we would have to

31:30 duplicate the size. What we do is take the first 5 gigabytes for these big

31:38 datasets. This is our cap currently, but at least we can give users a preview of the dataset. Yeah. And what are

31:49 you using behind the scenes? I believe this is DuckDB executing a query, or do you basically have an API that goes to your backend and runs a query to display the dataset viewer statistics? Right now we are using Arrow and Parquet to read all the pagination, for which we have an endpoint

32:15 called rows. But we have

32:19 here three other features that we implemented last year. The first is the search bar, and this is a feature where we are using DuckDB, specifically full-text search. Right, yes.

32:36 Okay, what do you do behind the scenes? We first convert everything to Parquet, and we store these Parquet files in another branch of the dataset. Maybe we can go to that later. Right here, right?

32:57 Yeah, we have a new revision, or branch, which is Parquet. Okay, so this is what the auto-conversion basically creates? Yeah. And

33:08 this one is actually... what is this one, the DuckDB one?

33:15 Yeah, this is part of the other implementation. First we have the Parquet file, and then we use the full-text search feature from DuckDB. Oh yeah, I see. What we do (for now I think this is the only way we found) is ingest all of the data into a DuckDB file, because that is needed

33:40 to create the index. All of the data plus the index

33:48 is stored in a new file, which we upload to this DuckDB branch. The idea was to

33:55 maybe have a branch per format in the future. Currently we have Parquet and this DuckDB file. For full-text search, each time a search needs to be performed, we download this file and execute the

34:16 full-text search function, which is BM25, over this dataset. And that's

34:24 all. We have here two

34:29 other features. Can you guess what they are? So far, just to recap:

34:39 there is the auto-conversion into Parquet, to compute

34:45 statistics and display things more easily, then we have the full-text search, and then

34:52 there are also statistics being computed here, I guess. Those are maybe done through DuckDB, I'm not sure. Initially we were working with DuckDB to get the statistics. I think we worked with it for three months, but at some point we had some concurrency issues with DuckDB. Maybe it was

35:21 because we were still learning how to get good performance, I don't know. But then we tried Polars,

35:32 which is another framework that is super stable, and it's the one we are currently using. But if you click on some of the

35:44 statistics... at this point we are running a filter expression over the DuckDB file. Okay, so this one... There have been memory issues these last few days;

35:58 this is an issue we are currently working on. No worries, let's give it some time, let's give it a chance

36:12 again. Maybe go to the test dataset.

36:19 This one, to the split, sorry, to the test split. Maybe it should be

36:29 L

36:33 data. So here we have a

36:37 filter. Basically this is a simple query: we have the SELECT * from this table, into which we ingested all the data, and we append the last part, which is the filter, the WHERE condition. And that's all. I think at

36:59 the beginning it was difficult, because we were thinking about which technology to use that could cover all of these features at the same time. But now that we see how we have implemented this with only DuckDB and a couple of lines, it sounds really easy. Yeah, and it's a great use of

37:20 full-text search. I feel like it's a totally underrated feature

37:27 of DuckDB. There are some caveats, as you said: you need to update the index again and have some work to do behind the scenes, but it's a

37:40 pretty neat feature. Cool, so that's a good history. It's also interesting that if you're limited by a framework in some part, nothing prevents you from combining; you mentioned Polars to compute some statistics. I think that's the beauty of today: when you have Arrow behind the scenes, it's

38:01 easy to move data around, do some operations, and use those different frameworks. I guess you have some comments on that? Yes. Another thing we faced when we

38:17 were implementing it, and maybe we're still facing it, is latency, the

38:24 response time. For example, I saw one dataset with three columns that took more time than a dataset with 20 columns. That is because it depends on the content, and with datasets it's difficult to have one specific strategy for all of them.

38:48 So it has been interesting to work on. Also, until recently the search bar used to be slower than what you have seen, because we have to download the file and run the full-text search query. There was an issue, because when we were testing locally it worked super fast, and we didn't know what was

39:19 happening in production. We started to think that maybe it was the CPU, or maybe we needed more memory, but that was not the issue. What was actually affecting performance the most was the disk, because we had shared storage, and we found that it was about seven times faster to run on local storage. That could have been

39:51 obvious, but it took me some time to understand. Yeah, it's true that with our powerful laptops today we sometimes forget that servers and the cloud are designed differently, also for that purpose.

40:09 So we have about 20 minutes. We covered the components of Hugging Face, we zoomed in on the dataset viewer, its history, how you do the calculations behind the scenes, and where you are using DuckDB. Now there are two interesting things I want to dive into: text-to-SQL and

40:35 the Hugging Face extension that was just announced yesterday. Which one do you want to start with? Let's start with the extension.

40:47 Sure. So maybe

40:53 I have actually

40:58 here... we can maybe start with the one we were just busy with. Let me just check: you're seeing my screen, right? Yes. How big is

41:13 this one, actually? Yeah, it's totally okay. So we were busy with this IMDb dataset, so maybe I can pick this one, and

41:30 so the big news, basically...

41:34 I'll do the magic first and then we'll give the explanation. How do you usually use DuckDB, I'm curious: mostly in Python, or through the CLI when you're using it in an ad hoc way locally? Using the CLI. Yeah, nice, it's easy. I'm all for all flavors, but I'm

41:57 also using the CLI. So what we

42:02 can do, which is pretty magic, is read a dataset and query it directly from a local DuckDB. But I

42:14 suggest we directly create a table in memory, so let's say IMDb Hugging

42:24 Face, as select, and here is the magic. So

42:28 what do I need to do? You need to put hf,

42:37 then the colon and the slashes. Yeah, so that's

42:42 the reference to the Hugging Face dataset? Yes, this is the file system.

42:49 And then, I guess, datasets.

42:54 Yes, then a slash and the name of the

42:59 dataset, the path of the repository, so the name with stanfordnlp, because stanfordnlp is the user or the organization, and the dataset name is imdb. You can copy it. If I copy this, actually... but you also need to put the stanfordnlp name. Yeah, so the uniqueness is basically

43:26 based on the organization, the name of the dataset, and the path within the repo. So

43:36 we need this, and basically the name, stanfordnlp, right? And then basically

43:47 let's take the test dataset, or the train dataset, I don't

43:54 know. Yes. And so if I do this... I'm missing

44:03 something. Star, or you can... that will be better. Exactly. I can also do just

44:14 this, right? Because that works as a first statement, and that would be enough. Oops, of course it's not

44:25 working. You have to remove the "resolve" and "main" part. Where? No, sorry, the "resolve" is not

44:36 there. I'm wondering... can you

44:41 go to the dataset viewer again? I think the path is not correct. So we have hf, datasets, stanfordnlp.

44:51 One thing I think would be great is to have a link like this that we can copy. Can I actually click to get the full link of the dataset? If I click here... Yeah, you can access it using the HTTP link.

45:11 Oh, you have to click on the file. What I usually do... well, you can copy the download link. Ah yeah, just over there. But

45:24 here, if I'm copying everything here, that's the right one, right? So let's do it again. First...

45:37 What if we try to select all the files with a glob pattern? Maybe, but normally we should be able

45:50 to read a single file, right? No, without the glob and without main.

46:06 That's what you mean? And maybe...

46:14 Yeah. Ah, it's happier, but it has to

46:19 work with the name. Yeah, we'll try again. Probably a path

46:28 that was not correct in the first one. So let's wait just a couple of seconds. Actually, what I can do is take another shell and show what I played with just before:

46:49 this dataset. It's just a dataset

46:55 from Reddit about climate. So

47:00 this is just that. So both are done, woohoo! Now we have the full dataset here in this

47:11instance and so what is really cool I'm zooming in is as out is that now we can do query around this right uh we can perform full SE search uh or other things uh here it's mostly a data set which is a pretty simple with text right um but I think what I wanted to show here for

47:32example um we have a couple of things here um ID the post title uh and uh the comments because it's for each post and then there is a series of comments um for for Reddit um but so what's what what is the initial intention to enable this extension and to be able to read directly from Doug DB can you tell us us

47:57 a bit more about that? Yeah, sure. One of our objectives is not just

48:06 to have this viewer with all the features; we know that people need to explore, maybe locally, with different

48:17 frameworks. Currently they can do it using pandas or Polars, and previously

48:25 it was also possible using the Python API for DuckDB. But, as you

48:34 mentioned, there are people who prefer using the CLI, without having to set up any specific language on the laptop. Yeah, because using the CLI is super fast: you just download a file and you can run it. Yeah. And also,

48:54 previously we had a long URL for this Parquet file, sorry, a URL for all

49:02 of these Parquet files. But as you can see here, now you just need to remember the dataset name and the file. Yeah. And that's all. Previously it was longer, which may be

49:15 confusing. This is one point of the

49:19 integration, and the other one, where we used to have issues, is authentication. Yes. Currently, this is a public dataset, but we also have... Yeah, well, because I want

49:35 to... time is running out, but we can mention that. So in the DuckDB documentation, I believe you actually contributed to that page, or

49:49 actually... Yeah, yes. So this one tells you what you can do with Hugging Face datasets from DuckDB. And of course, this is what we did, actually, and you see we can also use a specific revision, so maybe that's where it was not happy, I don't know.

50:11 And we can, that's what you were talking about, authenticate to Hugging Face, either with the token or with

50:20 the credential chain, where your Hugging Face token is stored, to be able, I guess, to query your private datasets. Is that correct? Yes,

50:34 yes. Yeah, cool, that's really great. I just want to show something before diving into text-to-SQL: you can also do it through MotherDuck. So this is the UI. Of course, you can

50:53 connect to MotherDuck just by doing

50:57 ".open md:" and I'll be connected to

51:02 MotherDuck. So this is my database in the cloud, and everything that I'm going to do in the UI, you can do here. But what is pretty nice is that you can create a database.

51:19 First, I'll just create a database, "hugging face dataset", and I create the same dataset from the Reddit climate comments, and that's it. Now I can query those datasets from MotherDuck. You see, it recognized the schema and so on. So that's pretty neat, because then you can...

51:45 You were talking about larger datasets: that's also a way, if you don't want to use your local machine, to basically use the power of MotherDuck. And we do still have a free tier, so you can go sign up and try it out. But I think that's pretty neat. I think I'm going to update, because at

52:05 MotherDuck we have, you know, some public datasets that we are hosting, but I think

52:14 I'm definitely going to add a couple of simple queries for people to get started based on a Hugging Face dataset, because it's just pretty neat to have them already there. So,

52:26 yeah, thank you for that. So, text-to-SQL: what is it all about? Can you tell us a bit more? Yeah. You know, nowadays everyone is

52:44 powering features with AI, so why not SQL this time? I think data engineers and data analysts started to ask whether

52:57 there is going to be the possibility to have natural language input and to get a result that's maybe covered by a SQL query. I think it's really easy, because if you have the correct model that does this conversion from natural language to SQL, it's easy. There are many models that are

53:24 really fast and solid; they

53:29 generate correct SQL. But what is challenging here, and many companies, even enterprise companies, face this challenge, is that you

53:42 need to elaborate the context for the model. Yeah, you need to pass some information for the model to know how to generate the SQL command. In this case, in simple words, what we do, and

53:58 what you can do to try text-to-SQL, is pass the model the schema of the tables and the query. Basically you say: based on this schema, we have these columns and these data types, and I want to know the answer to this question. And the model

54:21 generates a SQL query. That's all. Behind the scenes there are many implementations; what changes is basically the prompt. Because if you do this text-to-SQL task with only one dataset, one table, it's super fast and easy. But what if you have a real

54:45 application with different tables and different schemas? There is the challenge, because we have messy schemas sometimes. Sometimes you have columns where you don't even understand the name of the column or what it stores, you know. So maybe this problem isn't solved yet. There are many

55:13 open papers that are starting to ask whether this is solved, but it's not. Models are generating SQL commands correctly, but I think it's not ready to implement in a big solution. Yeah, but you mention a really good point: if your metadata

55:36 is not there or not correct, the model can basically do nothing for you. So you have meaningless column names like "zero one" and so on. I don't know if you have worked with SAP databases, but I'm pretty sure there are people in the audience who work with SAP source databases: it's

55:55 basically technical names for a lot of things, at least back when I worked with it. So it's really difficult to make sense of anything. You need a kind of semantic layer that's fed by the business initially, to say, you know, what this column means and so on. So I think

56:15 column descriptions would be an important field in the future, right, to feed those models. But so this is... I'm turning to your blog; I shared it, by the way, on YouTube and on LinkedIn for folks. As you said,

56:35 you build the prompt based on the schema and then use the model. So that's the text-to-SQL model that MotherDuck and Numbers Station released, right? And which is available

56:49 actually, sorry, on the MotherDuck

56:56 organization. So here you have a Space with a demo that you can try, and you also built a Space based on this tutorial, which explains how these things work. And we have a notebook, which is still running. So if you want to use the notebook, it's, I think, at the end of the

57:19 blog, maybe? Yep, here. So it's here. I'll

57:24 share it directly, give me a sec, in the chat: the notebook, for friends on YouTube and people

57:34 on LinkedIn. And so, what it does is, first you just install the Python dependencies, right? And here, what do we do? That's the biggest

57:51 part. Yes, here's where you download the model to run it locally. Yeah, so we're actually not really running

58:01 it locally; we're running it locally on Colab. It's important to mention, because when I'm there, I'm "local", right? Sometimes we forget; you can also have a Jupyter notebook running locally. So, just important. Yeah, I meant locally because, you know, these days many

58:22 people think that they are using LLMs, but actually they are using an API, which is not exactly a model but a service. This is kind of doing it manually, I would say. Exactly. So this is the model itself. It's pretty large, so that's why it takes a bit of time. So if you run it

58:42 on your side, be a bit patient; there are, you know, several gigabytes to

58:49 download. And then here, what does this part do? In this part we are loading the model, in this case using llama.cpp, which is the most powerful option

59:06 for these GGUF-format models,

59:10 which are like a minimized version of the real model, because the original model is like 15 gigabytes, if I'm not wrong. Yeah, exactly. I don't know if we can see it

59:29 directly there, if we can see the

59:33 size. Yeah, you will have to zoom. Yeah, it's roughly around 12 gigabytes. Yes. It's also possible to load it with Transformers or other libraries, but at the time I was working on this, my laptop did not have enough resources; that's why I preferred to use the minimized one. No, that makes sense. All

59:58 right, so this is done, we have loaded the model. What does this part do, actually? Okay, in this part we are using the dataset viewer API. As we discussed before, we have converted Parquet files, and in this specific

60:21 API, what we do is ask for the Parquet

60:25 URLs, yeah, that we can use in DuckDB later.

60:31 Yep. And after that, the DuckDB part. So

60:36 you actually use it just to create,

60:41 to generate the DDL, right? Yes. Maybe

60:46 we could have used the DESCRIBE command for the Parquet, but I think the format, we

60:53 would have had to format it in a DDL way, SQL

60:58 DDL; that's why I preferred using this. And

61:02 for DuckDB users this is actually a pretty neat trick, which not a lot of people know, but I used it in the past. So before our text-to-SQL model,

61:14 when I was using ChatGPT for building pipelines, sometimes I fed the model and I was using exactly this function. So if I go back and I do "from duckdb_tables()", which is a

61:36 table function, you can see I have all the CREATE TABLE statements, the DDL that's available based on the dataset. Cool, so now we have the DDL. We

61:50 just need to ask the query in natural language. So here you ask for cities from Albania country, and then here is

62:00 the prompt. Yeah, in this prompt, what we're doing is adding just the schema and the

62:10 query in natural language. So here we have the DDL, and then the query input.

62:24 I think the question... the query input is, I see here "query"; it should be "query input", right? Yeah, but we are formatting it in the next line. Oh yeah, okay, I see it. Ah, I was getting confused, you got me for a sec. So what are we

62:49 doing here? We are sending the prompt to the model and getting the first choice, because sometimes models return more than one response. We are getting the first one and getting the text of it. Let's see, this part could take some seconds to run. Yeah, it could take some seconds. After that, we're pretty much

63:17 there. But we can maybe already ask, as a conclusion: what's next for you at Hugging Face, or for the dataset viewer? Can you tell us about it? Yeah, in the dataset viewer we still have some challenges, some of them related to

63:39 big datasets. As I mentioned, we currently cover the first five gigabytes, so we have to work on that. And also we have

63:51 some... since there are a lot of datasets, there are some ethics concerns. It would be great to maybe start, not sure if auditing, but we have to be responsible about what people are sharing, because there are sometimes legal issues, many topics, even about sexual abuse,

64:15 that, yeah, you can't imagine could be there. But yeah, like on GitHub, there is always some weird and dark stuff, so I guess you need to have some kind of

64:30 audit. So audits, and moving beyond the 5-gigabyte dataset size limit. That's exciting. So we just ran it,

64:40 and now we have this SQL which has been generated, right? So that's the SQL generated by the model. And now, basically, this is just using DuckDB and

64:59 running the query, right? Yeah.

65:04 And actually, so the SQL

65:08 output... where do you... yeah, you

65:14 get the data there. No, but that's amazing, that's really great. So it's a fun example to play with text-to-SQL and DuckDB. Also, in

65:27 the session we talked about the Hugging Face extension, right? So now you can query Hugging Face datasets directly from the DuckDB CLI or any client; if you're more into Golang or Python or whatever, you can too. Andrea, thank you so much for joining us. I had a lot of fun

65:53 diving deep into the history of the dataset viewer, and I'm really looking forward... I think this extension is opening a lot of doors. Like I mentioned, with loading larger datasets into MotherDuck and doing pre-compute and transformations over there, I think we can be really creative. So yeah, looking

66:15 forward to that. And have a

66:20 great day, because it's still the afternoon for you. And I'll put all the links in the description regarding your blog, the notebook, and so on. Is there anything else you would like to add? We also have some more examples about how to use this

66:45 new extension in DuckDB with datasets, covering other features, so visit the page. And yeah, thank you for the invitation. I enjoyed the session; it was nice to participate. Cool.

67:03 We'll see you around. The Quack & Code live streams are happening every other week, so

FAQs

How does Hugging Face use DuckDB for dataset search and exploration?

Hugging Face uses DuckDB in its Dataset Viewer for full-text search via DuckDB's BM25 full-text search feature. Datasets are first auto-converted to Parquet format, then ingested into DuckDB files with full-text search indexes. When a user performs a search, the DuckDB file is downloaded and the full-text search query runs against it. DuckDB is also used for filter expressions on dataset columns, letting users explore datasets without downloading them.
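As a rough illustration of the index-then-search flow described above, the sketch below builds the SQL for DuckDB's `fts` extension: `PRAGMA create_fts_index` to create the index and `match_bm25` to rank rows. The table and column names passed in are hypothetical, and this is not Hugging Face's actual implementation, only the generic pattern from the DuckDB full-text search extension. Running `search` assumes the `duckdb` Python package and an open connection.

```python
from typing import List


def fts_statements(table: str, id_col: str, text_col: str,
                   query: str, limit: int = 5) -> List[str]:
    """Build the SQL to index `text_col` and rank rows by BM25 score."""
    safe_query = query.replace("'", "''")  # escape single quotes for SQL
    return [
        "INSTALL fts;",
        "LOAD fts;",
        # Creates the index in a schema named fts_main_<table>.
        f"PRAGMA create_fts_index('{table}', '{id_col}', '{text_col}');",
        (
            f"SELECT {id_col}, {text_col}, score FROM ("
            f"SELECT *, fts_main_{table}.match_bm25({id_col}, '{safe_query}') AS score "
            f"FROM {table}) AS ranked "
            f"WHERE score IS NOT NULL ORDER BY score DESC LIMIT {limit};"
        ),
    ]


def search(con, table, id_col, text_col, query, limit=5):
    """Run the statements on an open DuckDB connection (e.g. duckdb.connect())."""
    *setup, select = fts_statements(table, id_col, text_col, query, limit)
    for statement in setup:
        con.execute(statement)
    return con.execute(select).fetchall()
```

Rows that do not match the query get a NULL score, which is why the outer query filters on `score IS NOT NULL` before sorting.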

How do you query Hugging Face datasets directly from DuckDB?

With the Hugging Face DuckDB extension, you can query datasets directly from the DuckDB CLI or any DuckDB client using a simple path format: hf://datasets/{organization}/{dataset_name}/{path_to_file}, where the path points to a file in the dataset repository, such as the Parquet file for one split. This eliminates the need for long Parquet URLs and lets you read public datasets with just the dataset name and file path. For private datasets, you can authenticate using your Hugging Face token, either directly or through the credential chain. You can also load these datasets into MotherDuck for cloud-based analysis.
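A minimal sketch of how such paths and the matching SQL could be assembled from Python. The helper names (`hf_path`, `preview_sql`) and the `my_org/my_dataset` values are hypothetical; the `@revision` suffix and the `CREATE SECRET ... TYPE HUGGINGFACE` statements follow the DuckDB documentation for Hugging Face access, and the token value is a placeholder to replace with your own.

```python
from typing import Optional


def hf_path(owner: str, dataset: str, file_path: str,
            revision: Optional[str] = None) -> str:
    """Build an hf:// path, optionally pinned to a branch or revision."""
    repo = f"{owner}/{dataset}"
    if revision:
        repo += f"@{revision}"
    return f"hf://datasets/{repo}/{file_path}"


def preview_sql(path: str, limit: int = 5) -> str:
    """SQL that reads the first rows straight from the remote file."""
    return f"SELECT * FROM '{path}' LIMIT {limit};"


# Only needed for private datasets; pick one of the two.
TOKEN_SECRET_SQL = "CREATE SECRET hf_token (TYPE HUGGINGFACE, TOKEN 'your_hf_token');"
CHAIN_SECRET_SQL = "CREATE SECRET hf_token (TYPE HUGGINGFACE, PROVIDER credential_chain);"

# Example (hypothetical dataset); run the result with duckdb.sql(...) or in the CLI.
query = preview_sql(hf_path("my_org", "my_dataset", "data/train.parquet"))
```

Passing `revision="~parquet"` targets the auto-converted Parquet branch, and a glob such as `default/train/*.parquet` reads every file of a split in one query.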

What is text-to-SQL with DuckDB and how does it work?

Text-to-SQL uses an AI model to convert natural language questions into SQL queries. The process involves passing the database schema (DDL statements) and your question as a prompt to a language model, which generates the corresponding SQL. DuckDB's duckdb_tables() function is particularly useful for extracting CREATE TABLE DDL statements to build the prompt context. While models generate correct SQL for simple cases, the main challenge is providing sufficient business context and handling complex multi-table schemas.
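The prompt-building step can be sketched as follows. The template wording is an assumption for illustration, not the exact prompt used in the notebook or expected by the model, and `build_prompt` and `first_choice_text` are hypothetical helper names. The DDL would come from `SELECT sql FROM duckdb_tables();`, and the response shape (`choices[0]["text"]`) matches what llama-cpp-python returns for a completion call.

```python
# Query that returns one CREATE TABLE statement per table in the database.
DDL_QUERY = "SELECT sql FROM duckdb_tables();"

# Assumed template: schema first, then the natural language question.
PROMPT_TEMPLATE = """### Instruction:
Generate a valid DuckDB SQL query that answers the question.

### Schema:
{ddl}

### Question:
{question}

### Response:
"""


def build_prompt(ddl: str, question: str) -> str:
    """Combine the schema DDL and the question into a single prompt."""
    return PROMPT_TEMPLATE.format(ddl=ddl.strip(), question=question.strip())


def first_choice_text(response: dict) -> str:
    """Models may return several choices; keep the text of the first one."""
    return response["choices"][0]["text"].strip()


# Usage with llama-cpp-python (assumed installed; model path is a placeholder):
#   from llama_cpp import Llama
#   llm = Llama(model_path="path/to/model.gguf")
#   sql = first_choice_text(llm(build_prompt(ddl, question), max_tokens=256))
```

Keeping the prompt assembly separate from the model call makes it easy to add more context later, such as column descriptions, which is where most of the difficulty with real schemas lies.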

How does the Hugging Face Dataset Viewer handle large datasets?

The Hugging Face Dataset Viewer auto-converts all supported formats (CSV, Parquet, JSON, WebDatasets) to Parquet files for efficient processing. For very large datasets (like the 74-terabyte FineWeb dataset), the viewer currently processes the first 5 gigabytes to provide a preview, since converting the full dataset would require duplicating the storage. Pagination, search, filtering, and statistics are all built on top of this Parquet conversion layer.
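The converted Parquet files can be listed through the dataset viewer API, which is what the notebook in the session does before handing the URLs to DuckDB. The sketch below assumes the public `https://datasets-server.huggingface.co/parquet` endpoint and its `parquet_files` response field; the function names are hypothetical and error handling is omitted.

```python
import json
from typing import List, Optional
from urllib.parse import urlencode
from urllib.request import urlopen

API = "https://datasets-server.huggingface.co/parquet"


def parquet_api_url(dataset: str) -> str:
    """URL of the endpoint that lists the converted Parquet files."""
    return f"{API}?{urlencode({'dataset': dataset})}"


def parquet_urls(payload: dict, split: Optional[str] = None) -> List[str]:
    """Extract file URLs from the API response, optionally for one split."""
    return [
        f["url"]
        for f in payload.get("parquet_files", [])
        if split is None or f.get("split") == split
    ]


def fetch_parquet_urls(dataset: str, split: Optional[str] = None) -> List[str]:
    """Call the API over HTTPS and return the matching Parquet URLs."""
    with urlopen(parquet_api_url(dataset), timeout=30) as resp:
        return parquet_urls(json.load(resp), split)
```

Each returned URL can be passed to DuckDB's `read_parquet`, so a table can be created from the converted files without downloading the original dataset.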

Related Videos

9:09

2026-02-13

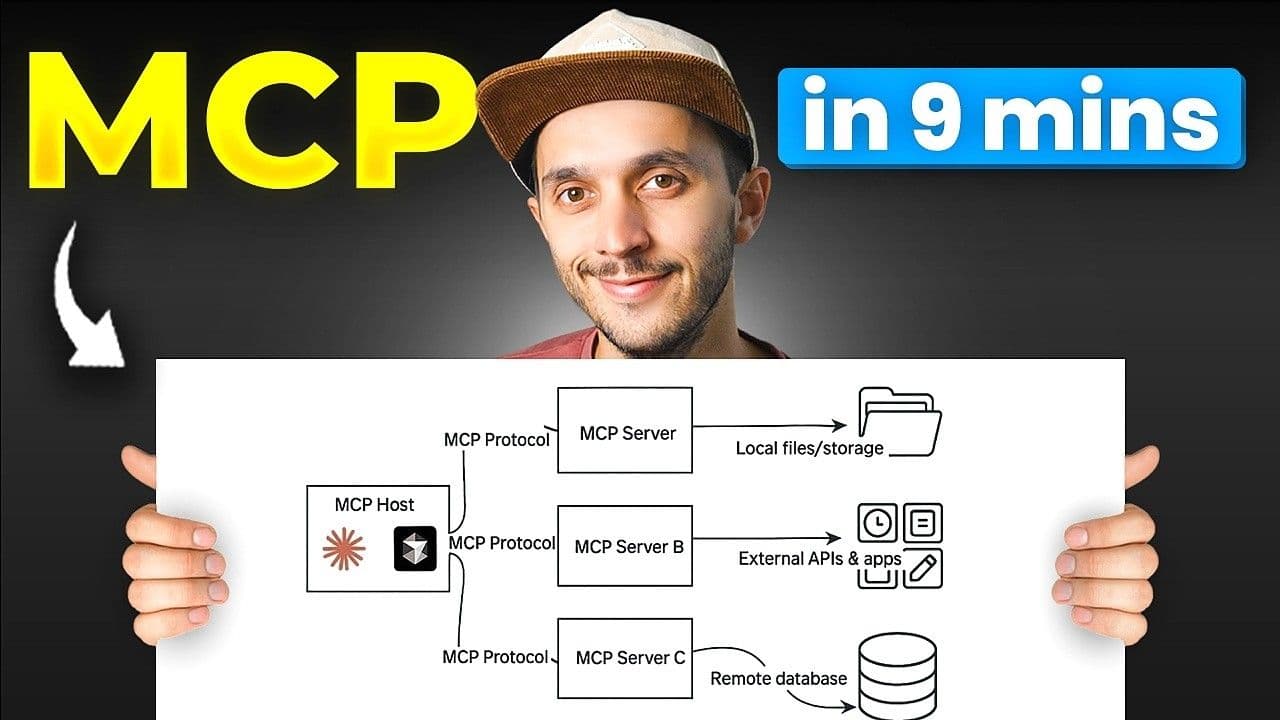

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs