DuckDB and recommenders : a lightning fast synergy ft. Khalil Muhammad

2024/02/19Featuring:Khalil Muhammad at the DuckDB meetup in Dublin on 23 January 2024! He shows what DuckDB offers you to move quickly when building recommendation engines and optimizing data modeling for machine learning. Predict user preferences to profit!

Transcript

0:05essentially this talk is about what DB offers when you deal with data and modeling tasks so when you build recommended systems when you build machine Learning Systems um often you don't think around the concept the concert of things around them um but what this talk hopefully you should take away from it is that duck DB offers you

0:27a lot more than simply just running queries and it helps you move fast um okay so okay cool so um what are recommenders the idea is for you to just get a very high level of what this recommenders do but the theory and the practice of recommenders are similar to any algori them or any machine learning model that you've dealt with or you've

0:49seen in the wild you have seen recommended systems in your Netflix on Amazon um triping by or anywhere you go to where you don't search and then they seem to know what you want basically that is a recommended so what they do is they learn to predict your preferences for profit and for your satisfaction obviously um and to do that

1:11they tap into multiple sources of data it could be your history when it's available it could be the product catalog what they do have in stuck it could be the context maybe it's just the news for example in Ireland maybe it couldn't be about the Olympics or housing crisis but that piece of information Fe into the machine learning

1:31model and then obviously set on business rules so let's say you're recommending books you wouldn't want to recommend pornography to kids right so that would be like a business rule that you plug in you take all of this pack them into a single solution plug them into the machine learning model and outcomes your preferences these preferences in most

1:52cases are just a series of rules this is what you should take away about to recommend a system they learn patterns and rules um and in doing that the recommender system is able to tell what you like from what you do not like when we say things we like internally we call those positive samples and it's important that you

2:12remember this name because I'm going to use it going down um in in in in the next few slides and this positive samples are things you have done so it's easy to gather you've clicked on a hotel we know you like it you've watched this movie we know you like it on the other hand there are negative examples and you

2:31need to have that at hand but it's very scarce the fact that you did not watch a movie does not mean that you do not like that movie it simply means you did not watch it right um so we call this negative samples and you need and if you remember the movie of the TV show is it

2:47a Silicon Valley where Jinan had like the Hot Dog app right and it was just a predictor to say this is a hot dog this is not a hot dog so you I'm sure she passed a lot of hot dogs through it and then any other thing that is not a hot dog would be the negative samples for

3:01that so keep that in mind now where to find this negative samples is still a subject of debate it's still a very open research question um and I'm going to tell you how we did it in Duck DB and this is just a very naive implementation but it works um so beyond negative samples there are lots of challenges when it comes to

3:24building a machine learning model especially recommended systems um and I want you to think of a context where you have multiple collaborators working on the same project right as opposed to one person hiding off somewhere and building this machine learning model what you start to see is that you need to collaborate right between the contributors to that

3:47particular project and when you have more contributors the expectation is that you move much faster but too many cooks will spoil the broth so there needs to be a way that you facilitate this um contributions without one stepping on another person's tool um but what's most important is if one person succeeds on a team then their success

4:12should be reproducible across board right so we need a standard to replicate experiments um that's also a challenge when you do that because you can't say it works on my machine and that's okay uh but what's most important is when you build it wherever you build it it should be easy to get it into production so where possible you want

4:35your development environment and the PIP PLS to mirror what you could have in production so these are just some of the challenges that comes into play when you think around collaboration the other challenge is scalability imagine a situation where you don't have pie spark or spark because you know for whatever reason up um and it's strch 24 so

4:57everybody's embracing the more stack duck DB and the others why not scalability becomes a concern because there's always more data than you can feed your model most of these models the more data you give it the better they are so you have to figure out how to deal with scale problems and what we are what we have at the moment is that

5:19vertical scaling allows us to add more R and it's actually cheaper than was a previous talk here where someone mentioned about horizontal scaling um it's actually cheaper for you to vertically scale by adding more RAM than for you to provision a new machine because once you provision a new machine there's a cost involved in running that you know and all that kind of stuff

5:38around there so there's a limit to Vertical scaling yet um and a single machine would go along way so how can we take that challenge and uh simplify it but what's the most important is that when you had GPU accelerated models because most of these models would be GPU accelerated the bottleneck tends to be them waiting for input IO that's IO

6:04operations so input operations when you read data for the models they just stay idle until they get that data so we need to ensure that there's a neat pipeline that just kind of feeds that model really fast um but from a team perspective if the team can move much faster if I can retrain my model on a

6:23daily basis that is good for the business right so it means that we can do more experiments um and hope to leave get to a better product at the end of the day this is where duck DB comes into play all those problems all those

6:39challenges du DB so I'm not going to sell du DB beyond that but um let's just say that it works for us um and again there's a disclaimer here so I'm not representing my company but let's just say that it does work for whatever context I'm working with um so in terms of collaboration this is the first

6:57problem I mentioned to collaborate without friction to be able to work with other people you need to have a concept of a data set because if you imagine that when you run uh an e-commerce business the data is always increasing some of them are becoming stal some of them is coming um becoming new so you need to

7:18have snapshots of your data what we call the data set um so you need to have that handy also when you collaborate with people you want to give each contributor the flexibility to go off and create their own features um features are essentially the aspects or attributes of data that goes into the small El uh but what's most important also is that when

7:40you have collaborators some of them would need new ones would come so you need to have the flexibility to allow other people to contribute to your project without much friction um and with duck DB um we're able to get to adopt a strategy that allows us to pull as much data as we need and then and enable all this

8:00requirements uh and I'm going to talk about that so imagine a situation and this would be a typical setup for uh an e-commerce or a an ml team and an e-commerce setup I'm just trying to get this to work um okay no pointer it always starts with your database and this would be a collection of Records containing all those sources

8:26of data I talked about which is the content the user histories the business rules that would reside in some uh database probably in the cloud

8:37um what we have when you need to get D DB to solve this particular problem is a concept of a data spec which is just a configuration for your data set and that would contain all the aspects of the data that you want to get so you can think about it as a range in time it could be a certain category of customers

8:57it depends on whatever dimension as you want but think of that data spec as a declarative way of slicing your data giving you a view into it we have a query Builder essentially that takes this data sets spec and it's able to query all the data sources and fetch as much data as we need um and from that

9:19comes the the data that we use to to train the model so once that is done um the process that orchestrates that saves the data spec and the training data in a cloud storage and this could be your ec2 it could be whatever cloud provider that you have what this gives us is solution to reproducibility and a central source

9:45for data set so essentially you created a new interface for getting your training data the spec and the associated data so you could reproduce this on other machines so when a new contributor joins what they simply do is query this catalog of data set specs okay using doc DB um and they can now fetch the training data from the cloud

10:09whatever Cloud Ser because docdb allows you to connect to this Cloud providers and pull as much data as you want the training data is cached locally so that there's no repeated um cost in pulling data back this if we had mother do then maybe wouldn't have worried about all of this right uh but get to mother do but anyway

10:29so um the train data is cached and from that we also use doc DB as a feature pipeline essentially creating your own features um and that feeds into a pie Touch model for for model training so what duck DB does here is two things it serves as a glue connecting different data sources but it also serves as a

10:51facade giving us a very neat way to query and access data that addresses the

10:58collaboration problem um the second problem is scale essentially trying to do more with less um since most of the models we build are using pyou um in some cases they are also tens of low models what You' find is that for many use cases people tend to use the map style data set which gives you a random access to your data

11:21set which means you need to have all of that data in memory but when you deal with larger models you can't have everything in memory so what is recommended is to go for the iterator style data set and you wouldn't find a lot of this um and it's it doesn't render Weare I should have chosen a better background but what what we have

11:41there is an example from the duck DB website on how you could use the fetch

11:47record batch command and it's sort of there um just over there that's what you should take away from here what that command does is when you send a SQL statement to Doc DB it allows you to retrieve the results in batches so you just fetch as much as you need do what you need to do with it and move on um

12:08it's a very handy command and that's what we use to build our um itable data set that

12:16allows us to string data so at the top there I'm going to try this is the middle right okay awesome okay so I to there um are just kind of things you would do to configure this particular data set which is what goes into your pouch uh model this would be very similar to what obtains and T of FL

12:40so whatever you take from here you could apply there the negative samples so this method there would be called by the model when it means data and then the negative samples are created and this is where do DB plays a very good role so if we connected do DB we request for the data that g gives us both positive and

13:00negative samples together and then we start reading in the loop and when we read that data essentially we could do cheap operations here so that we don't waste the um gpu's time and then we can send it the data that we need basically and this happens so fast thanks to Dr D

13:22um and here let's just go back a little bit notice I'm specifying chunk size that tells Stu DB the amount of data that you want to get back so to show you the potency of just vertical scaling and just having a very good machine this would be very you know it's just the same spec as the machine you said um

13:43there but it this has about eight CPUs or so um you can see that increasing the chunk size you know tends to move faster because the GPU is hungry for data so it could process it much faster the time on the Y AIS there is just how long it takes to go through an entire data set and this results would be from a

14:03contrived data set which is a mov lens it's very common to recommend the system doain so I will share um a notable way

14:11you could run this experiment yourself um but what you should take away from this is your RAM kind of determines your speed and I don't fully agree with this article but it's a good one to read nonetheless so there this article which is duck DV is incredibly fast if you have um enough memory I say do DB is

14:31incredibly fast if you could configure it properly um so yeah just take note of that and read it um so yeah dotb is also faster than pandas in a lot of operations you do so when you have a lot of joints or if you're not familiar with pandas pandas is like a data structure that we use a lot in in

14:50data science um but if you could do all of those operations in Duck DB you would be saving a lot of time um and it's also convenient so with um Duc DD taking advantage of the UDF we're also able to implement negative sampling and this is how you'd go about doing the negative samples essentially so imagine a situation where

15:15you have a set of products that only available in a particular country um and you have all the items that a user had seen previously um and you want to generate negative samples so essentially you're trying to find all the items that this user has not seen that reduces the operation to a set difference um operation docdb allows you to specify

15:37this operation in Python code so there isn't too much sequence in there there's a slight Cur to it um and you register this function um this UDF using the create function command in there um and anytime you write SQL like this focus on this particular area there you can call that function so here is get negatives and I'm passing

16:02the relevant fields in there um and Dr DB will run it in Python interestingly this was really fast um faster than a similar operation in a database I can't say but um despite it being UDF despite it not using Arrow um format because I think there're two formats right there's either the python based on in the arrow

16:23based format uh this was incredibly fast maybe because the data was local I don't but um let's just say it it is much faster than um some Cloud providers um and we we did experiment or I did experiment with a lot of other tools in there um what I gathered was that on a very bad day for a similar

16:48operation duct was at least 10 times faster and this is me having a very bad day so we tried this with different data sets because I mentioned that we have this whole concept of data sets um and you can see that creating negative samples in Doc DB this would be the same standard of Doc DB um two negative

17:07samples for per positive sample it goes in about 2 seconds while in sequin light is about 5 Seconds um and then the data just kind of like preparing the data for streaming it takes about 6 seconds what I mean by that is I'm just going to go back for a second so that I think I showed it here this particular statement

17:28there where you say fetch record batch okay that's when the data is prepared for streaming that takes about 0.6 seconds while this one um takes about two seconds so it's it's it's it's it's a huge amount of gain that you get um and this 10x difference is is a difference between waiting an hour for results and waiting 10 hours for result

17:55so you think about it it's not just speed in terms of the algorithm but it's also the amount an employer is getting um you know for whatever they have been paid for so um it's it's it's a good cach if you want to try it out um and there are some certain uh tips that I gathered from

18:12here and this would kind of Mark to what uh what James had talked about

18:18earlier this is the same query right um except that this bit has been taken out um so here we have this window functions in there we been doing sort of a partition buy and then an order buy and here we do not have um that bit what I noticed is that there's a little um effort involved in trying to

18:41tune your your duct DD queries to make

18:46Avail of the memory resources you have um and that first query the one with the window functions this is what it looks like when it runs at first um the CPU usage starts very very little um of course I can't show you know animation but essentially you can imagine that it keeps growing until it gets to this

19:05particular level the memory just kind of goes really high and it kind of picks around 80% because by default most um dog Deb would be using 80% of yes exactly good um but without it it kind of flattens down this is the same query so here without the qualified by but this is not plainy to say that you

19:27should not adopt duck DB is to say that duck DB is moving quite fast in terms of addressing most of these concerns and what if you read an article from last year and you compare it to this year you realize that it's not there anymore um but it's not a deal breaker also because you could rewrite your SQL to avoid all of these

19:48concerns uh but knowing them beforehand saves you a lot of time um so in there in the documentation that most of us don't read um there are tips there on set memory limits and once you set those memory limits um you avoid the out of memory issues you avoid potentially um seg folds which I've seen one but then again I I tried to push it

20:15to the extreme um but at the cost of speed so the more data the more RAM you have the faster it operates and when you set a limit it sort of slows down a little bit so um check those these two

20:29options and set them when you can um and then when you work with a lot of people and there's a lot of setup involved in accessing your data you should have a single way to initialize your docdb connection so that you make sure you clean up afterwards um because it's it's healthy it's just data hygiene right um so having a single connector or

20:52a single entry point like this would help where essentially you're all using similar memory limits um and then you have similar setups but then what happens afterwards would be dictated by whatever the collaborator once um it's a very good um tip to have so what I will say is it's been a very

21:14enjoyable trip adopting duck DB um what

21:19I'll also say is that it gives you a solution that you could easily productionize there is a lot of access to help online um but it's the speed that just gets to you you just get work so fast and the convenience that you don't have to pull too much data and the cost savings all of that just kind of makes it a very

21:39handy tool for machine learning tasks and recommend assistance so my name is k and I work for storytale um an audio Publishing House thank [Music]

21:57you

FAQS

How can DuckDB be used to build recommendation systems?

DuckDB fills two roles in recommendation system pipelines: it acts as data glue connecting different data sources (cloud storage, local files, databases) and as a feature pipeline for generating training data. With DuckDB's fetch_record_batch command, you can stream data in configurable chunk sizes directly into PyTorch models, keeping GPUs fed with data instead of sitting idle waiting for I/O. This approach was at least 10x faster than comparable operations in cloud databases.

How does DuckDB handle negative sampling for machine learning models?

DuckDB supports Python UDFs (User Defined Functions) that let you implement negative sampling as a set difference operation directly in SQL. You register a Python function that finds all items a user has not interacted with, then call it from SQL queries. Even though this runs as a Python UDF rather than native Arrow format, it was remarkably fast, significantly outperforming similar operations in cloud database providers on local data.

What are best practices for configuring DuckDB for machine learning workloads?

Set explicit memory limits using DuckDB's configuration options to avoid out-of-memory crashes. Create a single connection initializer that all collaborators share so everyone uses consistent settings. Tune chunk_size in fetch_record_batch to match your GPU's data appetite. Window functions can be memory-intensive, so rewriting queries (for example, using recursive CTEs instead) can reduce memory pressure from 80% to nearly flat, making DuckDB more parallelizable for ML tasks.

Why is DuckDB useful for ML team collaboration and reproducibility?

DuckDB enables reproducible ML experiments through a "data spec" pattern: a declarative configuration that defines a time range, customer segments, or other data slicing criteria. The spec and resulting training data are stored in cloud storage, creating a catalog that any team member can query. New contributors simply fetch the data spec and cached training data via DuckDB's cloud storage connectors. This eliminates "works on my machine" problems and keeps experiment reproduction consistent.

Related Videos

9:09

2026-02-13

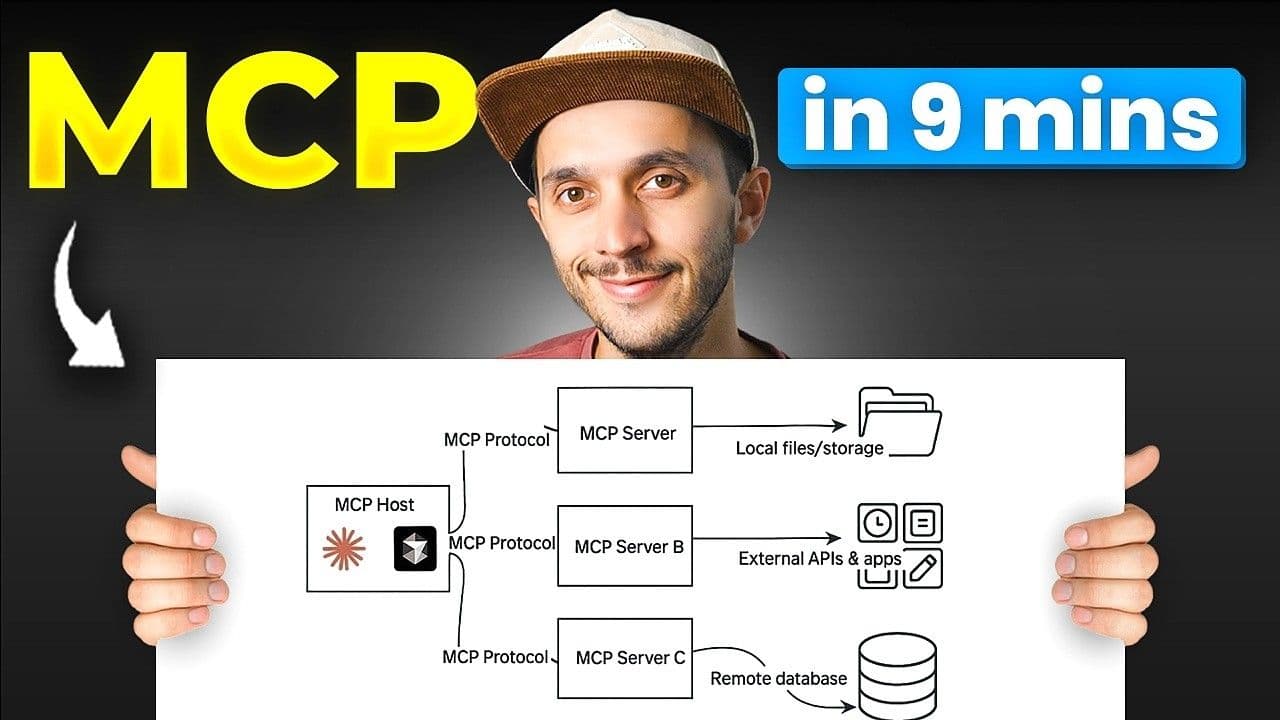

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs