Machine learning and AI at MotherDuck

2024/10/23Featuring:MotherDuck's Till Döhmen talks at DuckDB Meetup #1 Amsterdam about all the clever ways the AI team is extending SQL to include AI features.

Transcript

0:01there's a lot of slides but I I try to keep it uh keep it not boring um yeah so

0:07I'm till I started the AI team at mother duck last year here in Amsterdam um pretty much one year ago now and um you might be wondering what is mother duck might have not heard about mother duck before so I'm going to start with an introduction uh about mother duck uh so who are we we are accompanied was founded in May 2022

0:32since June 2024 we are a uh GA and we

0:38have around 55 employees worldwide and I think nine of us um are based in Amsterdam we are VC funded and we are like lucky to have a dub Labs partnership and we work closely together with dbbs and um what what are doing we're

1:01designing systems or data systems for the Post Big Data world uh what what is

1:08it mean I we want to build um data warehouse that actually leverage local compute as well as uh single node Cloud compute uh object storage all these kind of things so um you don't don't have to pay the Big Data Texs so um what is it motivated by so um local compute every everyone sitting here is probably a notebook that is using for

1:36work and that mostly sits idle unless it's currently compiling some code um so and with dub we can actually leverage that to process uh your analytical queries so second thing is I I said

1:52single node compute so because distributed compute is still painful you see two workers concurrently processed in a bottle of wine that doesn't look too convenient yeah um yeah so and um single

2:09note comp comput in the cloud is actually quite powerful so there are up to 24 terabyte uh machines with up to 24 terabyte um of of main memory almost 500

2:22cores uh available in the cloud so that's a lot of power for um the dctb and um yeah so m is basically a dub extension

2:35for scale up and for collaboration and um it is as simple to use as that so instead of um connecting to your local dctb database file uh or opening a CLI session against your local dub database file you can uh connect to U mother duck instead of course in this case my MDU token is um an environment variable but

3:04once that's set you just connect to mother do and you will have access to um your local databases you can still attach your local dub database to the same session but you also have access to shares which are shared duct DB databases in the cloud they can be shared with all other users or only with users in your

3:25organization and um they can also be joined um Madu also comes with a web UI that is wasm based so even when you run queries in the web UI to do data exploration or development you can actually leverage your local compute with that and at the same time of course when you run queries against um remote data sets uh it could

3:50also be data sets on on on S3 or Iceberg you can Leverage The Cloud compute side of Madu and there's more mother de has a large ecosystem of Partners and different connectors um there of course also an intersection with the duct DB ecosystem and and um connectors and uh there's also wasm SDK actually from mother duck that can be

4:19used to build very low latency data apps I think kis mentioned mentioned it earlier as one of the use cases uh to build low latency data apps and that's something that mother enables but also it takes care of managing the um the respective data in the in the context of your Enterprise or of your users so uh one thing we did on the AI

4:45side just just as a starter for example is this data app generator so this was inspired by Cloe Cloe artifacts I don't know if anyone here has knows that or has used that before it's basically this AI chatboard that can build applications and we did the same to build um mother dock data applications so the prompt I

5:07want the dashboard of hecko News Post and it would start spinning and show you um show you a live data app and you can iterate on it and um so now I think I added a chart when clicking on a certain column in the um in a dashboard and there there it is so it's really I'm I'm

5:29always is really amazed that these things just like work but of course this

5:35is more like a demo and something to play around with and not a super serious project yet but uh you can go you can go and get up and try it out if you want to so in general I want to cover a few topics and I hope we get to all of them but maybe I just speed up towards the

5:54end um or Gabor maybe remind me like a

5:59few minutes before perfect thank you um

6:03first off I want to talk about Tex to SQL because that's always a topic that comes to mind in the context of AI and databases and talk a bit about where I think we're currently standing or or this fear dist standing and um yeah show

6:20a little bit of the things we have done and then go through a couple of other I think interesting topics so I want to start with a quiz so are there any duct DB users here like day-to-day users okay okay so would would anyone be like know

6:41the query for the first question by heart so I have a table and I want to get all columns um that end with underscore amounts uh from that table in a single select statement okay people might think I bought you because you are so mother Ducker but

7:08okay yes exactly yes so okay let's not go into the details of the exact syntax but you could instead also just ask the dctb texos SQL model that we that we find tuned um

7:26so second one I think that's a nice one reservoir sampling I don't know if people do that that often and I would definitely have to go to the documentation uh what the exact syntax is but we can also just ask the model to tell us what the syntax is and um summary statistics um you give me summary statistics of the table um there

7:49just a lot of functions in dctb that are uh I think dub has more than 600 Scala functions in total and of course different parameters and all these things and uh I don't know how often like that's the reason why you get 1.3 million visitors a month [Music] yeah so um yeah so this model is on

8:15hugging phase so you can uh run it on the on the website you can go to website play around with it but what is also really cool uh you can use all Lama on your local machine so that was one of the reasons why we wanted to try a smaller model of course you can do things like that with gbt as well and

8:31nowadays um on the next slide I will show show show some benchmarks is also pretty good at uh speaking dctb but um you can now just run this locally without exposing your schemas to some uh some uh foreign company uh so this a benchmark I just mentioned so we built um we built a benchmark where we just keep keep track

8:54of how good are current models at writing dctb SQL uh currently o1

9:00preview from mmei is leading clo 3.5 Sonet next but surprisingly quen 2.5 is small language model is uh is is following and um it's actually better than our model by now I think this was nine months ago and we are working on training a new iteration of that that is also knows about new activ B versions this one was 0.9 I think so and there's

9:25also hugging phase space now where we collect these benchmarks and you can run your own benchmarks there if you want to uh so let's but let's take a step back from there because there was some some confusion around what this stb text SQL model is or where Texas SQL is going is also a constant question so um I just

9:47want to think about it in terms of different roles so uh we have business users who might be use doing analytics or might want to get insights from data they many ask questions they know nothing about SQL mostly data analysts data scientists who are really the data experts they really know the data they are working with they're also good at

10:08writing SQL mostly write analytical SQL queries though and then there's the type of software engineer that is can be all all over this I was really surprised 25% of D users are sare Engineers so um that's really really really important uh audience for for for dub and um yeah but

10:28day in data engineers and not necessarily so much interested in analytical queries only and when you look at this whole landscape of Texas SQL tools um and also the academic side

10:39of things yeah this whole uh this whole thing is 90 99% focus on analytical

10:47queries I'm sorry that it's so small but it's basically just a list of all the machine learning model or machine learning papers about text to SQL from the recent years and what I want to get at is this basically just serving one of one of the users of dctb SQL uh of of of dub or of data warehouses which are the

11:07analyst so nowadays you would do texos SQL plus retrieval which means you look at the query history that the users use that the users have had and uh you would look at um other data documentation and build a re a retriever mechanism on top of that and then generate queries from that but they are can be regarded as draft they are often

11:34not really the exact kind of query you want but that might be a really good starting point and I feel that a lot of companies are also leveraging that nowadays to become more productive on the analytic site but it requires SQL knowledge uh so this dbn SQL model is more going into um you know all the all

11:55the different functions not only analytical questions but also more the the DB DBA typee of like copy inter statement for example and it's more like saving a round to the dctb Dos uh it's not suitable for generating super complex queries but then there's this left side of business users yeah so people who only care about the results not so much

12:17about the SQL itself and I would say there's currently not really a good solution for that and I wouldn't dare to let the existing Texas SQL models uh onto into the hands of any nontechnical user any user who can't verify the code that is actually generated so this is a bit um yeah of of of the current problem

12:38with the with the Texas SQL and I think um one one thing that is really interesting are semantic layers so um they give give you a way to essentially structure structure all your internal data knowledge and make it explicit and um Melo is an interesting example of that because there this the knowledge is actually embedded or is

13:03part of the language so when you're compiling a syntactically correct mow query you can be sure that it's also semantically correct which is not the case for regular SQL queries um so and and the last thing software Engineers data data um data Engineers

13:24they really work a lot in the IDE and they want to generate they they want to keep on iterating on the same code so it's not hey okay give me one of query I want ass assistance with writing my query while writing my query small things like oh I don't remember the uh the parameters for the regex extract

13:45function or uh I can't come up with a regex just recommend one in line you might have worked with uh co-pilot um GitHub co-pilot or cursor as IDE so these tools I think are really really useful um but they're missing the context of your database schemas for example and they also cannot verify the SQL they generate or the the the snippet

14:08to generate so I think there we there we have a unique opportunity to to just make developers life easier and one of the things we did is this um fix it it's a very first start into this direction sorry for the small gift and quick GI but um uh when you uh so when you run a query in a mod de web UI

14:31and you encounter an error then um we will we will make a decision is this errow fixable at all uh if so then we

14:41will provide the error message to our fix it uh to our fix it back end and it will um generate a single line fix for the error and uh will also validate if the if that fix makes sense and then you can as a user accept that and you kind of the latency is very low it's only 0.6

15:01or 0.7 seconds currently so you can also stay in the flow of writing writing uh of course there are also some of these like standard Tex secq functionalities in mother duck so you can uh generate you can do this Tex SQL generation for analyst you can say okay I have this schema here and please generate a query for me and um we take a

15:26little bit of the schema copying the schema over for example to jbt and validate an the query off of your shoulders uh so that's if that's the first uh chapter and now I want to go to

15:41something that I'm really excited about we also going to release a blog post about it later today so you can still even if it's too too quick or so you can you can still go back and to to read that um so want to talk about

15:59small language models in the context of databases and I want to talk about because actually 50% of uh columns in databases are text typed so there are a lot of string values in databases actually and of course we have um tools like uh working with regular expressions or um getting a substring of a string but now with this wave of large language

16:24models um I think it's really time to also bring bring those capabilities to the to the database and why I think it's a good time now is because the the costs per token have fallen a lot in the last year so when gbd3 came out 2020 it cost 20 $20 for million tokens uh right now gbd4 om Min only costs 24 24 cents for 1

16:49million tokens if you go to even smaller models you can get up to like 99.7% lower cost than it would have been a few years ago and um those small models so models with

17:04eight 8 billion or less parameters um would fall into this small language model category and they are actually still pretty powerful so these very small models are doing pretty well on the on the benchmarks still so oh I'm

17:19jumping ahead already so uh yeah this this is a new function we we have in mother do uh with this function you can actually call small language models to process your data in a database through through SQL and we take care of all the um orchestration in the background for that uh what what can you do with that

17:41what is it that useful for so let's say you're data analyst working with uh this data set here this one of our example data sets Hecker news POS um and you see

17:55this long text field and wonder what are

17:59these people writing about um hope it's not too too small but it's very hard to get a quick overview of what what the topics of these different posts are about even when I draw a sample of it and with this uh prompt function I can just prompt prompt a model to give me for every row to give me a summary of

18:21the like five five word summary of the text and um so that's pretty useful now

18:29now now I can look at that column and get a really quick overview of um what this post what this post is about so

18:37what you can also do is uh you can use structured output so you can say I don't want to have text as response I actually want to have a typed struct as response from from the language model so we can say give me the topic give me the sentiment as an integer actually so say one if it's really negative five if it's

18:59really positive and give me a list of Technologies mentioned in the post and then you will get a adjacent output so what is nice and DCT be if you're working with structs you can actually um you can uness that that that stru super easily and you just have three new three new columns in your data set um among

19:21others you have a sentiment classification of the post um yeah so language models are basically like a Swiss army knife for NLP they can do a lot of things and um yeah to the to the prom function they are they're accessible but what I what I think is even more exciting is this world of open models and local

19:48inference so there are all these language models out there 100,000 new language models every week we might not need all of them uh every month might not need all of them but some of them could be useful for example the sentiment classification right I think it's um to use even a small language model for sentiment classification feels

20:09quite like a waste there are a lot of really useful pre-tuned models on hugging phase that can do that uh with fewer parameters um much more efficiently and uh one thing that came out this week is uh olama I showed that earlier for for the local model inference actually now supports all models on hugging phase

20:37so we can uh we can do inference on your

20:41on your notebook and you can even do inference in wasm yeah um I didn't explain dual execution

20:50you might want to talk to one of our database P what that ex exactly is but it's essentially the way we split work between clients and so us and uh I I think this entrance topic fits in super well so if you want to not send your

21:07data to a third party API you can never uh actually leverage your local compute to compute the sentiment for example where is with a specific model with your with your local GPU potentially or even MacBooks are are pretty good at it by now uh or for embedding computation which is even more efficient you can you can compute 500 500 embeddings per

21:29second in your browser with web GPU using a Macbook um yeah so talking about embeddings we

21:40have the same thing for the embedding function I would take five more minutes if that's okay so you can do things with with with that you can start doing things like similarity search in SQL you can do that as well with um the like regular du dub

21:59provides this array coine similarity function so that's basically the core of every uh similarity search embedding based similarity search U setup and with

22:10this function you can do the similarity search end to endend within SQL without having to go to like an external python script where you compute your embeddings you you can just do it like this put it in your DBT job whatever it is and um

22:26answer these these kind of questions and you can even build rack applications fully in SQL with a combination of both so it's more like funnier I don't know if there's a good use case for that actually um but it's

22:44possible so if you want to try out any of those functions with dub or with mother duck you can just go to the docs and we also have a free trial where you can get um 40,000 proms per day or a million embeddings per day for free just to play around with it and see whether you like it and of course we

23:03would be interested in hearing if you like it what you um um yeah or what you

23:09not like about it really quick uh about Vector search because we started talking about it there in this example we're using naive Vector search so what is really really great about it inducti ability is super fast so you you can do this naive uh Vector search so just compute the coine similarity between your search keyword and your 2 million entries in your table

23:38uh in less than a second so it's pretty fast given that a language model also needs a few seconds to respond so it doesn't actually add that much so i' would say for w actually using the naive search inductive B is pretty pretty good uh but then there's also the vsss exchange for dub um that makes embedding search on really

24:03large uh data sets super efficient and I think that's really useful for things like entity matching where you have to do like n n bym comparisons in your table and then that thing can really really speed up uh the processing it comes with the with the overhead of computing an index uh it has relatively large memory footprint right now uh

24:28right right now so I think maybe a good compromise is IV IVF flat which is uh

24:36has a bit lower memory footprint is has also relatively fast index creation has not the high super high QPS super high recall but it's still reasonable and now since a few days or a few weeks there's a community exchange for duct Tob uh built around F so with this thing you can also use IV IV flat search actually

24:57inductiv um if you want to do hybrid search we have a blog post about that um yeah I wanted to talk about machine learning I think yeah okay okay few

25:13minutes uh so there I kind of like that

25:18I I really like that machine learning topic because I used to work for a company called hops Works before and they were a machine learning feature store so that's really my world just thinking about how how do we go from relational data sets in the data warehouse to useful features for model and I think right now dctb really fits

25:38into these two steps join filter Transformations this feature engineering part of the workload and maybe um we can expand it into either of those directions go a little bit more towards how can how can we help with data set search data Discovery or on the other end of the spectrum how can we help with um machine learning actually because in

26:02I don't know if you know that but in like a simple thing you can do induc you can actually uh do linear regression just in SQL and er it's an it's an aggregate function so and turns out it's also relatively easy to implement K's clustering in a in a database engine it's a minib batch version of C's

26:22clustering I guess a lot of other uh um data warehouses do do similar things when I talk about um machine learning in a database so that's that's totally possible um and that is an experimental Branch so maybe maybe there will be a k means uh clustering function in the M deck extension one day or induct uh another thing also GR in

26:49boosting it was like a bit of black box for me okay it's this magic thing that does like builds a lot of uh trees and does boosting and this but actually you can Implement gradient uh gradient booster trees uh fully in SQL so there's really really interesting paper from um from this person and um it's even faster

27:11than light GBM or XG boost so I think that's a pretty pretty exciting topic another another direction or the other end of the spectrum is is data cleaning and wrangling uh this paper kind of triggered the idea we say we should bring prompting to the database layer uh because it was about data wrangling and they used language

27:34models to do the data wrangling and it worked actually pretty pretty good there's some followup work that we were involved in with fine-tuning smaller models for these different wrangling and data cleaning tasks and our last paper is about was about actually code generation so how can we generate useful SQL Snippets that do the data ranging part without using the model to do the

27:57processing of each row but with using the model more in a text SQL sense hey give me the combination of the right re expression and this and this in this that leads from my input to my desired output um yeah so I don't know if there are any Master students in this room if so uh yeah doesn't look like it too bad

28:19but uh yeah we have some some some interesting Master tees topics in that direction right now uh if you're if you happen to know some someone feel feel free to direct them to in our Direction all right thank

28:37you at some point during your presentation you mentioned about uh in one hour you compute 500 embeddings one second so what are the dimensions of these embeddings [Music]

28:54and um I can't remember the name of the model was something with all mini all Min Min ml there this is the one that

29:04also uses by default oh no it's a different this this specific demo was um on open model on hugging phas there this hugging phas Transformer JS Benchmark space and that was the default model they use when you look at inference times for um the OPI embedding models that we using in production you for for for batch of thousand embeddings it's

29:28around a second maybe a bit less and do you have to use GPU for that or it also works uh we're using uh inference apis so we are we are not running that infrastructure currently but it we we we would really like to go into that direction because that enables open model support for open models good thank

29:49you yeah

FAQS

How does MotherDuck's prompt function work for AI-powered SQL?

MotherDuck's prompt() function lets you call small language models directly from SQL to process text data in your database. For each row, you can generate summaries, classify sentiment, extract entities, or run other NLP tasks. It also supports structured output, returning typed structs that can be unnested into new columns. For example, you can prompt the model to return a topic, sentiment score, and list of technologies mentioned, all as queryable columns in your dataset.

What is the DuckDB text-to-SQL model and how accurate is it?

MotherDuck fine-tuned a small language model specifically for generating DuckDB SQL syntax, available on Hugging Face. It helps users with DuckDB's 600+ scalar functions without needing to look up documentation. On MotherDuck's DuckDB SQL benchmark, O1 Preview leads, followed by Claude 3.5 Sonnet, with the smaller Qwen 2.5 model also performing well. The model can be run locally via Ollama, keeping your database schemas private.

Can you run embeddings and vector search directly in DuckDB SQL?

Yes, MotherDuck has an embedding function that computes vector embeddings within SQL. Combined with DuckDB's built-in array_cosine_similarity function, you can do end-to-end similarity search without external Python scripts. For most use cases, DuckDB's naive vector search over millions of entries completes in under a second. For larger-scale entity matching, the vss extension has indexed search options like IVF Flat for better performance.

Can machine learning models like k-means clustering run inside a database?

Yes, basic machine learning algorithms can be implemented directly in SQL. DuckDB already supports linear regression as an aggregate function, and k-means clustering can be implemented as a mini-batch version within the database engine. Research has even shown that gradient-boosted trees can be implemented fully in SQL and run faster than LightGBM or XGBoost. MotherDuck is exploring bringing some of these capabilities to its platform. Learn more about using DuckDB with Python for ML workflows.

What is the current state of text-to-SQL for business users?

Text-to-SQL works reasonably well for data analysts who can verify generated queries, but there is no good solution yet for non-technical business users who cannot validate the SQL output. Most academic research and commercial tools focus on analytical queries for analysts, not on the full range of DuckDB operations. Semantic layers like Malloy are a promising direction because they embed business logic into the query language itself, so syntactically correct queries are also semantically correct.

Related Videos

9:09

2026-02-13

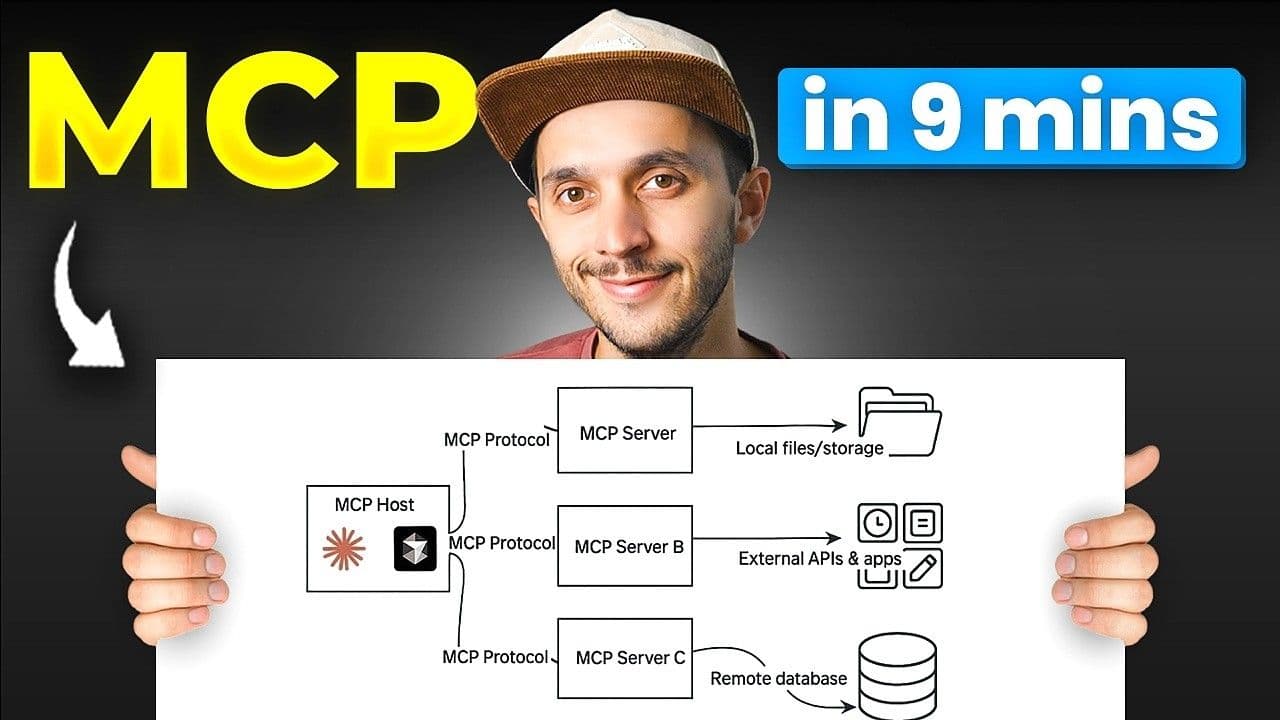

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs