More Than a Vibe: AI-Driven SQL That Actually Works

2025/04/22Featuring:TL;DR: Learn how to safely "vibe code" with AI-generated SQL using DuckDB and MotherDuck, with practical tips for setting up Cursor IDE to write and execute SQL queries automatically.

Jacob Matson from MotherDuck demonstrates how to use AI assistants like Cursor to write SQL queries—what he calls "vibe coding"—while avoiding common pitfalls like running unvetted queries on production databases or generating expensive workloads.

Why Traditional AI SQL Can Be Risky

Vibe coding SQL directly against production has several problems:

- Running on prod: AI-generated queries might hit your production database

- Uncontrolled workloads: Bad queries could "fork bomb" your Snowflake instance with expensive compute

- Disconnected feedback: When code writing and execution happen separately, error handling becomes difficult

The DuckDB Solution

DuckDB solves these problems by enabling:

- Local replicas: Replicate your MotherDuck cloud data locally with a single SQL statement

- Isolated workloads: Run AI-generated queries safely on your laptop—if something goes wrong, just kill the process

- Instant feedback: DuckDB's speed means the AI can run queries, see errors, and fix them in a tight loop

Setting Up Cursor for SQL Development

Jacob shares his Cursor IDE configuration:

- Schema as XML: Export your database schema to XML format for better AI context (their AI researchers found XML produces better SQL)

- Cursor rules: Define rules telling the AI to use DuckDB CLI with the

-fflag to execute SQL files - Python environment: Use UV to manage dependencies seamlessly

Live Demo: Spatial Analysis

The demo walks through a real analysis using Foursquare places data:

- Query restaurants in Oakland, California

- Generate heat maps using Folium

- Run spatial queries to find optimal locations for a new restaurant

- Let the AI self-heal when queries return no results

Key Takeaways

- Observe and intervene: Guide the AI like a bloodhound—it needs direction

- Use visualization to validate: Charts help verify SQL correctness without reading every query

- Model selection matters: Gemini Pro 2.5 works well for precise SQL tasks; Claude can be more creative but less predictable

- Context is king: Use tools like repomix to give the AI full repository context for brownfield projects

The approach lets you iterate quickly on data analysis while maintaining safety and reproducibility—turning ad-hoc exploration into something that could become a proper pipeline.

Transcript

0:00I'm Jacob. I work at Motherduck uh as a a developer advocate. Motherduck is a cloud data warehouse built on top of DuckDB. Hopefully a few of you were able to see uh Hanis's talk this morning. Um I'm sure it was excellent. I was preparing this. So, uh I think for those of you who have done a little bit of of

0:21this exploration or maybe you're doing it already, like can you really vibe code SQL?

0:27And um it's kind of a bad idea

0:33actually. So uh there there's a few reasons why it can kind of be bad and not really work in the way you want it to work. And the first one is like you're running on prod probably like hopefully you're not doing that but you know at least for you like at least for you know me as someone who got prod like

0:5010 years ago the first you know there was no replica. Hopefully we have those now. Um, the second thing is your workload might not be isolated, right?

0:58If you're going to have a uh an AI writing code, you don't necessarily know that it's going to write good clean SQL and you might fork bomb your Snowflake instance. Like be careful. That's an expensive bill. Um, and the last thing is often when you're vibes vibe coding SQL, your codew writing and your execution are happening happening kind

1:17of in separate threads. And if you're doing that, you're not getting instant feedback in that you're actually having to like kind of feed back errors into your um into your into your kind of LLM workflow. So there's another way that we can solve this problem. Uh and the first one is kind of obvious, but let's let's use a replica, right? Probably most of

1:37you are running analytics on replicas already. Um what if you can isolate your workload and what if you could have the LLM run the SQL and fix it too? And so this is where the magic of mother duck and duct db really comes in um to the to

1:54the the picture here. And I've put together just like a basic framework of something you can use with cursor.

2:01Here's the link. You can grab the repo also with with your phone if you want to follow along. I think just given our time constraints, we'll pro I'm just going to kind of go up here and do it.

2:11If you want to follow along, great. You don't have to. Um I think we can learn a lot together without having to uh you know spend a bunch of time coding. So uh with that said I am going to hop into cursor and kind of show you a little bit of things that I've done to prep work to

2:28make this easy and then we can you know start prompting and see what we get.

2:37Okay. Let me escape that full screen. Okay. Is that readable for everyone in the back?

2:48Yeah, sure. Uh,

3:06Matsonjcursor_EDA. Okay. So I'm going to talk about a few things that I've put in here um that are are helpful. And of

3:13course at mother duck we do have um some AI researchers. So I collaborated with them really on kind of understanding like what is the state of our research today say for writing SQL like how do you make it work? Um well the first thing I did is because my data is in mother duck I can write a se I can write

3:29some a SQL query that looks like this and it will take the data that is on my cloud and replicate it locally. Right?

3:38So this is replicating the entire database uh FSQ which is just some four squares data um for for uh places. It's their places database and um it's creating it as local DB.

3:51I've actually already pulled this because this is a 15 gigabyte database. It I don't really want to do that over conference Wi-Fi. Uh I didn't think it would work. Um so I did that as a pre-step. There's also lots of fun kind of things that I think about here too that I'm not going to talk about. But

4:05duct DB has really nice sampling built in. So if you want to take like a smaller subset of your data set and you don't have maybe it's not um maybe it's not built on like you know modified dates. So you can't just say give me the last month you know just sample it bring bring it locally. That also works. Of

4:19course, this um this SQL statement was written by cursor, not by me. Um the other thing I did too is um I wrote some Python here that takes the uh schema out of duct DB and puts it into an XML file. And the reason I'm doing this is because my conversations internally with our AI researchers is really, hey, the best way to get good

4:41SQL out of it is to get your schema in XML and pass that in as part of your rag step. So, we're going to do those two things. Um the last thing that I have here is some cursor rules. How many of you have written cursor rules before? Okay, not very many of you. So what these rules are is they give us

4:58that they're basically further context that we can give to to the kind of LLM workflow so that it will do things kind of in a prescribed way. So in this project I'm writing SQL and Python and so I'm going to give it some very specific rules and then I can give those as context. So the first thing is I have

5:16these SQL rules. So I'm telling it that you know these apply to all the rules in the project. By the way, cursor also is great at writing its own rules. So you can say, "Hey, help me write a rule to do X, Y, and Z." It'll help you do that.

5:29Um and so we tell it about, you know, spacing, formatting, format on save. And then this is the critical bit here. So um we do have an MCP server at Motherduck. So, if you want to use MCP as part of your workflow, that's definitely possible. Um, kind of in my runup to this workshop, I've actually found that just interacting with with

5:50the database works really well. Um, and so what we're doing here is we can actually use this -f flag in the CLI to run a SQL file that we define. So, we're telling it, hey, you know, when we write this SQL, let's just run these two, right? And again, this is safe and isolated because duct db is on my local

6:06machine. if I mess it up, if I get a really bad query, I can just kill the process and start over, right? I'm not going to be in a situation where uh I I'm too too worried about uh the query that's being written. And I think there's a couple other minor things in here that are not showing up at my Zoom

6:24level. What is going on here? We'll close the terminal. Okay. And then I have some Python rules. Um the main thing that I want to call out about this Python rule is that uh I'm kind of taking a very basic set or a bas very basic set of rules for my environment and letting UV manage almost everything. So um because of that we get

6:48to uh we can kind of just like not think about our Python environment. It just fades into the background and I think like that's the best way to interact with Python.

6:59Um all right so uh I'm in my terminal. We're not going to pop into um we're not going to pop into the cursor quite yet.

7:06I'm just going to run my get schema Python. So, we're going to do UV run

7:12scripts if I can spell

7:17it. Okay. So, Oh, what happened? What did I do wrong?

7:27Yeah, exactly. Yeah, I might have to do that. No such file or directory local exit.

7:36should create it. Okay. Well, we can use cursor. That's fine. So, I'm gonna hit command L and um I'm just going to say uh I got an error in the terminal. Um not sure

7:50why. And then I can actually copy this and it's going to just put it in context in cursor with the at I think. Yeah. So, you see that it added. So here's some context that is is going to this question. And so I actually have it on auto right now, which we'll leave on, but I found that Gemini Pro 2.5 kind of

8:10is the best model right now um for for this for my kind of SQL experience. So we'll run this um and hopefully we solve our problem here quickly.

8:25Um yeah, this is this is the fun part of these demos is we get to wait a little bit.

8:31on the conference Wi-Fi. Let's go. Let's go. Do you need the local database? I have the local database.

8:40It's here. Do you need it for this? Um, you can use a Motherduct database. You can use a parquet file. You can kind of You don't have to have a local database.

8:49Um, I kind of recommend that flow. Let me see what's happening

8:56here. All right, we're going to stop. We're going to choose a different agent and see if we can get this to go, I guess, on

9:09Gemini. Okay. Interesting. Oh, I don't have a schema folder. That was the problem. That's very easy. All right. So, I'm going to um accept this. I must have deleted it when I when I cleaned up my branch. So, I can do this. Uh I just accepted the change. And now we'll go back in here.

9:30Okay. Go back into our terminal here and let's get our schema. No, not

9:40that. Okay. So, now we just have, you know, a beautiful um a beautiful XML file generated by

9:49cursor. Amazing. Okay. So now we can now now what we can do is actually start getting into into the real kind of meat of this workflow. And so um because I have a places data set, I kind of want to constrain it. Let's like um let's do something like I don't know, I'm looking to build a restaurant maybe

10:09um in Oakland. Let's look at where other restaurants are. Let's look at density of the area and let's figure out if we can use um use SQL to kind of guide us towards uh where I want to build this restaurant. So, let's let's do that first. So, um I'm going to give it different context.

10:28Oops. Get out of there. Um we're going to take this one out. We're going to add more context with the at sign here. So, we're going to say SQL, we're going to give it our SQL rule. We're going to give it our local DB schema and say, uh give me a SQL query. Make this slightly wider. How's

10:47the text size on this, by the way? Is that okay? A little small. Okay. All right. We're going slightly bigger. Okay.

10:54Um so we're going to say give me a SQL query uh for restaurants uh in Oakland, California. Uh California. Um okay. So we're going to give it two things that we give it the database schema and the data set. Uh run the query and see what comes back.

11:22So you can see it's thinking it's looking it's it's looking at the um what the categories are kind of um it's reading the schema right and now it gives us a query that looks like this right um let's see did it put the query in anywhere not yet okay so it's going to generate the query here which is not

11:42exactly what I want it to do um I kind of think about some of the stuff when you're using an LLM. It's kind of like you're handling like a blood hound. Like it's about the human and the dog, but it's not just about the dog, right? You need you need to kind of guide it. So, we got an error. Um, it

12:01recognized what it should do here actually, which it put a query in here and then it ran it the way that I want it to run it, right? So, we're going to run it.

12:09Um, and so we got back immediately 3,000 rows of restaurants um, in Oakland where the locality is Oakland in the region is California.

12:18Um, and the category label is like restaurant. So, it's trying to tell us something else here, too. What is it trying to tell us? Okay, I'm gonna make this even bigger. Can I Oh.

12:42Okay. So, it is deleting the SQL the SQL file. I'm going to stop it because it's kind of being a little silly here. All right. We we'll accept the delete.

12:50That's fine. But we're going to stop it and say let's create a queries directory and write the queries there.

13:02I did. That's pair programming.

13:10Um, all right. So, we're going to tell it just to do that. So, that way we kind of like keep the history. I really actually kind of like this trick when I'm writing SQL with an LLM because then I kind of just have all my queries there. I'm not worried about like a query history abstraction. It's just

13:23kind of all there. I can say, hey, like try to optimize this query. I actually do this like kind of fairly frequently as a way to learn um how to to use my database is like, hey, I have a query.

13:33How do I make this more optimal? I can run a bunch of different tests. You know, I have a then I have a bunch of SQL queries that are running kind of different queries. Um, you can also look at the query plan, but that's not very fun.

13:44Um, so all right, so it created some files for us. We'll just accept this.

13:48And that's really cool. Now, the problem is a SQL query is just a table. It's really hard to understand when we have a table like what it should look like. And so here's where uh we can use Python.

13:59And um I'm going to see if we can I

14:05can't remember what library I like. My my Python skills are mostly uh uh based on using LLM. So I don't know very many libraries, but one of them that I know is Folium. And I'm going to use that one. I'm going to say let's use Folium to chart this

14:26um data set. to chart. Let's see. I'm going to tag it just so we it doesn't like, you know. Why is it not finding

14:39it? I see it right there. Okay. We drag you in there. Okay, cool. Well, there we go.

14:47Um, in HTML and then serve it, then

14:51serve it with Python. So, I kind of like to at this point what we're what we're doing here is kind of building little mini data apps like just ad hoc throwaway with an LLM. It's so low lift that we can kind of just ask it to do these things and have it do it for us. Um, so it's

15:11running the query. It's recognizing that it needs to get latitude and longitude to use the library, right? So, um, it's actually adding this to a requirements txt, which I don't want to do. So, I'm actually going to stop it.

15:23Stop. We are using UV. So use pi project instead of

15:36requirements. So this is like where you know it observing it and helping it and kind of sending it the right way really really helps. Um okay it's adding pandas which is fine. I guess we'll use pandas too.

15:49Um, so we'll accept these. Um, and now we are starting up a little

16:00server.

16:08Great. Okay, looks like it even put it in a script that we can run, which is really nice. Um, let's see if it works.

16:23So, I guess I need a

16:28slash. Okay, so it creates the map and it serves it and we can open it in the browser and let's see.

16:37Wow, some of these are in Oakland. Some All right. I mean, that's pretty good.

16:46So yeah. Yeah. Like that's that's pretty good. Um so I I like these points, but

16:54like it's a little bit hard to see all these points on a map. I want to see if it can actually turn this into a heat map.

17:03Um so we're going to do that and then we'll go back to SQL. So um can we render this? I'm going to kill this process here.

17:13Can we render this as a heat map instead of point

17:30points? And the answer is usually yes. But you know, we'll see. We'll see if we'll see if that's

17:39true. Come on.

17:44Okay. Doesn't like my

17:50No, I think it I think that's actually a might be an issue with the Wi-Fi. I can't tell.

18:00Um, we'll just go back to auto and see if it'll render for us. Okay. Yeah, there we go. Perfect.

18:13So it's updating it's updating our our Python now real

18:22quickly. Cool. So we've imported the heat map function and we will run it.

18:32Sure. And now we get something that's a little more consumable. Right now, this still isn't that helpful because it doesn't really tell me what kind of restaurants we need to make where. And so, what I want to kind of do here is um we're going to go back to the SQL and see if it can do some analysis for us to

18:49kind of like steer us towards what type of restaurants maybe there's not enough of or um are underrepresented kind of in this area. So, let's go back into

19:03SQL. All right. So let's go back into

19:14SQL on which

19:18um restaurant types see I spell it right. The nice thing about tokens is your spelling can be very imprecise.

19:30um need to be let's see which restaurants are most represented.

19:43Um I'm adding a little hint here because when I did this analysis the first few times I didn't recognize that there was a is closed flag in the data set and we were looking at a bunch of restaurants that no longer existed. So, we're going to tell it to make sure we only look at uh restaurants that are still open.

20:14whether or not it will rewrite it or create a new one. I can probably ask um generally I kind of just send the query send send through my prompt and see what it says. But I can ask for a new query.

20:26Let's do that. Let's make sure it's a new

20:35query. And now we get to see like how good cursors logic is at like choosing the right models to like build this stuff for us. Right? Since I'm not using Gemini, I'm just kind of relying on what they have here.

20:49Okay. Cool. So, it's what's pretty funny here is um we have a problem and I don't know if cursor actually understands that we have a problem. I don't think it does. And the problem is that this SQL query returns this truncated data set and it

21:11uses cat to send that into the uh you know into the space that cursor can read. And um that means we're missing about 50 rows. So it's a fine analysis,

21:23but we're going to have it write it out to CSV and then and then um uh check again.

21:49I say run the same analysis on it.

22:02Okay. So, perfect. Okay. It doesn't like

22:07that. Great. Let's see. Do we get did we get a file? Oh, look at that. Very cool. Um, can't really see it though.

22:2420% restaurant. Wow, that's that's very helpful. Um, yeah, obviously, you know, we're not using this to clean the data set a little bit, although we can um, you know, do some do some types of scoring here, but looks like we have pretty good set here. This data looks like we can use it. So, that's great.

22:46Okay. Hey, it's just doing the same thing I was doing, right? Head and five.

22:53Smart. Um, great. So now it's creating a new new query here and running it. And again, we can kind of see what's happening over here. Analyze restaurant categories. Let's see what it tells

23:13us. Top cuisine categories.

23:22interesting patterns. Some categories like BBQ and seafood are very homogeneous. Okay. All right.

23:30Um let's see if uh let's see if it can

23:35tell us now we can do some like complex spatial queries. So, um, let's load the the spatial extension for

23:45DuctTV and let's see if we can

23:49identify the three locations

23:55um on a map. How many? Let's say let's say one acre in size

24:04um where we have high density and no I don't know let's pick a

24:15category. What category? African cuisine. Okay. Sure. Uh and no African cuisine within one mile.

24:29um score based on number of other restaurants nearby. So, we want to open where other restaurants are already located, but where there's none of the restaurant type that we have. And again, we're relying on, you know, the the four square data here. So, um let's see if we can get something fun. What's really cool is I don't

24:49really know a lot about spatial, but like I can kind of fake it a little bit by prompting.

24:54Well, um, okay. So, what's what's funny here is that because we're running our SQL queries kind of as we're running our analysis, you'll notice that um it returned uh nothing returned in our data set. I don't know if that if you caught that. It was really fast, but um so the cursor is like, well, that doesn't make

25:15sense. I didn't get any results, so let's try again. Right? And so now we have this loop of like writing SQL, running it, getting no results. Okay, let's let's figure out what's going on here. And hopefully I don't have to actually look at this data, but I may have

25:42to. Okay. Yeah. Let's see if it can fix its own query error here. Maybe is the answer. I think like one of the one of my favorite things about this is like because duct DB is so fast in running these queries, we get like really really fast feedback which is especially helpful when we have zero rows returning. We probably get that

26:00feedback fast in any database. Um we may have to actually peek at this. Let's run a diagnostic query.

26:11Sure. So it's like it's doing these things like these are things that I would do as an analyst, right? Like okay, I'm not seeing any rows. This query seems to make sense. Like why are we, you know, uh uh do we actually have African restaurants in our data set?

26:25Okay. Yes, we do. Like do we have other restaurants? Yes, we do. Okay. How do we how do we find these areas of opportunity here given our constraints?

26:33We may not be able to find anything here. Okay. This is interesting. I really don't want to look at this query. That's like what I don't want.

26:40This is like it's like reading the docs. Like I don't want to read the docs. Like just do the thing for me, please.

26:47Um, okay.

26:55So, one thing we can maybe do here that um I'm thinking about as we uh

27:02um work on this is I can potentially what I can do is actually take that heat map that we built earlier and then map the African restaurants as points and then I can actually, you know, maybe use my intuition a little bit to kind of determine, okay, it looks like this is a spot on the map where this is somewhere

27:18we might want to consider building a restaurant. How do we think about this a little bit more? um especially if our query doesn't work. But it did. All right. Hopefully it looks

27:32good. Okay.

27:37So, we're giving a little bit of connection issues here with the Wi-Fi, but that's okay. All right. So, let's add let's add these three

27:49uh locations as a box. for each location uh on as a layer to our

28:00map. So now we have our heat map layer and now we can put on another layer that says um what do these look like? And I think we should be good.

28:23One of my uh favorite prompts to use on Python in particular when I'm charting is like, "Can you make this look better?" And it actually works pretty

28:34well. Okay. All right. So, I'm going to cancel this because it lost context, right? Um it's now going into um a hell that we've all probably been in, which is what is happening to my Python environment.

28:48Um, I'm just going to say cancel and

28:52stop. Remember, we use

28:58UV. All right. Okay. So, I'm already running it. So, let me just let me just cancel this real quick. Um, and then uh cancel. Okay. Well, that's actually fairly clever. We'll we'll we'll allow it to run it on another port since I had the other one still running. All right.

29:22So, somewhere in here. Where did it go? Okay. I guess I'll have to copy paste this. Wait, no. Here we go. That's nice. Okay. Um, did it draw boxes for

29:38us? Okay.

29:47Yeah. Okay. So, I don't see the boxes. So, we'll just tell it see the layer with the boxes for the new uh or for the uh proposed locations. Make it more

30:08obvious. So, it's possible it didn't work right. I'm not sure because we're vibe coding. So, we want to avoid looking at the code here.

30:17Um, we'll try we'll try one prompt here and then we can uh and then we can uh take it from

30:27there. This is also like you know for these type of like you know precise updates the model really matters in these cases. I found um Gemini tends to

30:38be very very good at like precise taking precise instructions and only listening to it and Claude sometimes can be a little bit uh wild and so you know uh unfortunately as we as we kind of progress and some of you may have experienced this as you progress into like you know using these LLMs to drive things is you end up kind of almost

30:56needing like personal evals um which is I think it's own own set of problems that um maybe we will uh have to have to take on at some point. But okay, let's see if it figured it out here. Maybe is the answer to this. It also really helps to do this on a bigger screen than a laptop. Um, also

31:21just kind of so you have, you know, as a human you can have more context also.

31:28Yeah. Yeah. Exactly. Exactly. Okay.

31:37Let's see if it's smart enough to fix this problem. Okay. Yes, we do. So, one thing is because we're kind of interacting with DuckDB in a very like atomic manner, we're spinning it up to run a query and then turning it off.

31:49Every time we are handling extensions like spatial, we do need to kind of include those in our scripts. And it looks like Python is grabbing it here so it's in context.

32:05For I'm not sure if this approach will work, but we'll find

32:13out. Okay. Yep. Smart and last try here. Okay. Interesting.

32:28Well, this is great. It's fixing its own errors, right? And so, like the main thing that I kind of want to get at here is like because we're running SQL and our Python kind of all in the same loop, it can kind of heal itself, right? And so, this lets us kind of observe and intervene instead of like, you know,

32:43having to um apply the what things we're thinking about in other ways. Um,

32:49yeah, go ahead. Um earlier earlier while you were going through this you noticed that when it was truncating I'm guessing you knew that because you're very familiar um it's yeah can you repeat the question? Oh you want me to repeat the question? Okay sorry yes yeah okay so the question was I noticed that um when it was cadding that it truncated the

33:13data set. So the default settings in duct DB are going to basically give you an abbreviated uh view of the data called a duck box in the CLI. And so uh that was kind of that did indeed come from that. But you can also observe you can observe it in the in the terminal that it will not that it's returning

33:30kind of a truncated view of just what you can see in the terminal for sure.

33:33That was the that was the leadup. I think when it's erroring here that it can't produce something. Yeah. We're expecting a graph that gets error message. Yeah. Yeah.

33:44Um, and in that example, you had an expectation that that approach would have a truncation of some sort. We haven't looked at the SQL at all. How do you know that the data that it's producing is valid? How do we know there's not like yeah, a joint condition that's, you know, removing half the data set or Yeah. number. Yeah, really good

34:04question. So I think like the way that I like to think about this workflow in particular like when I am uh write writing SQL and maybe not looking at it closely um charting is really the way that I am uh kind of closing that gap initially right and then as I kind of go go along maybe I'll add tests right but

34:25um I do think that that's a totally valid a totally valid question right which is like how do you know the SQL is good um and uh yeah we definitely definitely you should look at it Um, I don't think that it is. Uh, okay. Looks like our internet's not working right now. Um, uh, so I'm going to pause it

34:41here, but I think you'll all kind of get the get the gist of this. Um, oh, it looks like it healed itself. Okay. Um, anyways, we'll let that keep running, but I do think that's totally valid. And I think like for me like the data data visualization fits right in with with the quality of the SQL which is like uh

34:57I'm going to use that to validate uh based on what I know about the data set that like the chart looks looks like how I would expect it, right? Um that's not that's imperfect, right? And um definitely you still need to be familiar with SQL and write this and read the SQL and understand it. I don't think that LM

35:14are replacement for like human understanding of of the data structures.

35:20Yeah. Could you comment on the schema definition as XML and the impact

35:27on like I know you said your team sorry. Yeah. So the schema is defined as XML.

35:32Yeah. And you mentioned that the AI engineering team mentioned that it does better than specifying the schema in other formats. Could you comment a bit more whatever you're aware of as to what other formats can work? Is XML the best way? Why we choose XML? Yeah. Okay. Um, so first off, it looks like our map rendered but then killed the heat map.

35:51So we are going to um, uh, we're gonna we're gonna kill this part of the demo, but um, okay. So, uh, the question was

36:00why XML for the schema? Um, I'm not an AI researcher. I just get to work with ones and, um, you know, their feedback very specifically was, hey, like what we're seeing in state of art in state-of-the-art is is X schema as XML.

36:13Give the give the LM as much context as possible. Um, you know, for me that was a that was a good enough answer in terms of how to implement this. Um, I will say it has definitely increased the quality and speed of the SQL that I'm getting.

36:25So, it definitely works. Am I running evals? No. Like, uh, you know, at some point will I be running a personal eval if things continue? Yes. I I think that I will be doing that soon. By the way, I'm feel free to ask questions at this point. Yeah.

36:48Cool. Thanks. Um, at the beginning, uh, we had some SQL generated and it kind of knew what to put in the wear clause. So, I didn't see the steps before, but is it actually making a query to the local DB to get the like possible column outputs there before it writes a SQL? Like, does it have that context? Yeah, that's a

37:05good question. Okay, so there's a couple things. Um uh so what's in so so with the schema

37:13and it's it's basically doing some work to infer like what those columns mean. There's also I can't remember if I have that in this data set. Um there's also column definitions that are fairly robust. Let me see if this is in the data set. I can actually check. Okay. By hitting the right button. Not that. Um let's

37:34see. So there's a categories table here that has a fairly robust set of of uh category labels and like definitions in u in here that um that it queries as well. But yes, I mean kind of the the general flow that I've noticed is that when it has questions about the data, it will sample the data because we have

37:53access to SQL, right? Because we've already kind of done the step of bringing the data locally.

37:58um I have don't have a lot of fear about the about the LLM to like writing SQL and then and basically learning by asking the question of the data. So yeah, it seems to be very effective in those scenarios to like write effective wear clauses um without too much without too much context. It's a really good question. Yeah, Taylor.

38:27So I'm curious about your personal use of cursor here. Two questions. One is are you almost at the point in this demo where you feel like that chat is losing coherence? Like how often are you starting a new chat and like bringing things back into it? And then kind of a second question is are you have you

38:44experimented with just being like more direct with the the prompting and is there any sort of effect on that?

38:48Because a lot of what you're doing is like let's see can we try? Yeah. or do you just like do this? Yeah. So, I think um Okay, it sounds like we're done. So, we'll answer we'll answer this question then we'll wrap. Um so, the first thing is like uh how often is it losing coherence? I found that mostly in cursor

39:07uh I've used windsurf as well um that it helps to re-emphasize context when it starts kind of losing like going off the rails. Like there was no reason for it to like look for the Python environment.

39:18We already gave it the rule that said, "Hey, we're using UV." But it's still, you know, lost coherence there. Um, what was the second question? Was just the directness. Oh, directness. So, when I'm when I'm kind of being, especially when I'm when I'm working on green field, I pretty much just like am pretty suggestive. When I'm working on

39:35something that's already exists, I will be very precise and I'll use something like repo mix to to give context, which which lets you turn um lets you turn a repo into XML and you can use that as as a rag step. Um, also very very helpful.

39:49Um, so I'll use something like re repo mix and then and then give it like a much much more specific prompt. Um, there's a few really good articles on like writing good prompts um that uh uh one of them which is uh that that I use regularly is kind of um saying hey I want to build a spec for developer ask

40:06me what questions you need to know and then just stepping through it. Um I think here I'll just share the link real quickly for the the purpose here which is this one.

40:15Um, so like this is the nature of kind of like an idea idea honing prompt that I find very very effective in the scenario of, you know, brownfield specifically where it's already something that already exists. But for green field, I like to kind of be pretty loose with it. Okay, thanks everybody. Thank you very much.

FAQS

Why is vibe coding SQL dangerous and how can you do it safely?

Vibe coding SQL is risky because you are often running on production databases, your workload is not isolated (you could fork-bomb an expensive Snowflake instance), and code generation and execution happen in separate threads without instant feedback. The safer approach is to use a replica, isolate your workload with a local DuckDB instance, and let the LLM both run the SQL and fix errors in the same loop. That way, if a bad query is generated, you can just kill the process and start over.

How do you get better SQL output from AI coding tools like Cursor?

The most effective technique is to export your database schema as XML and pass it as context to the LLM, which AI researchers found produces the highest quality SQL generation. You should also replicate your cloud data locally using DuckDB so the AI can safely sample and query it. Writing cursor rules that specify your project conventions and SQL formatting preferences, plus pointing the AI to relevant documentation, improves the accuracy and speed of generated queries.

How can DuckDB be used for safe local SQL exploration with AI assistants?

DuckDB lets you replicate a cloud database locally with a single SQL statement, creating a safe sandbox for AI-driven exploration. Because DuckDB runs in-process on your local machine, queries execute fast, giving rapid feedback when the AI generates and tests SQL. DuckDB's built-in sampling functions let you work with smaller subsets of large datasets, and its spatial extension handles complex geospatial queries, all without risking your production environment.

What is the best workflow for building data apps with LLMs and DuckDB?

The recommended workflow is to write SQL queries with an LLM, run them locally against DuckDB using CLI flags, store results to CSV files, and then use Python libraries like Folium for visualization. Keeping all generated queries in a directory gives you a natural query history. Data visualization is the key to validating SQL output since it lets you visually confirm whether the AI-generated queries produce sensible results without having to read every line of SQL.

Related Videos

9:09

2026-02-13

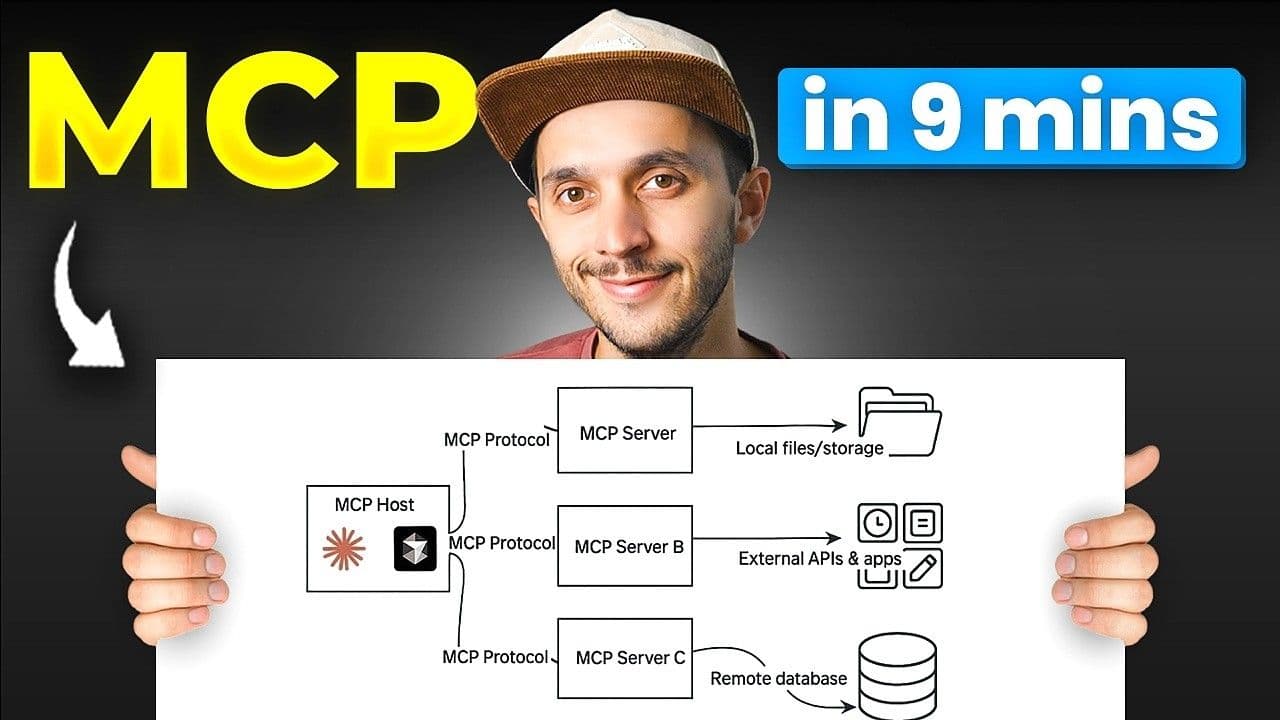

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs