LLMs Meet Data Warehouses: Reliable AI Agents for Business Analytics

2025/11/19Featuring:Keynote at AI By the Bay

Transcript

I ordinarily wouldn't wear a hat during a talk, but (a) this is an awesome hat that I love, and (b) there's very bright spotlights up here. But let's talk about agents. Agents can use LLMs along with analytics databases to analyze business data, find patterns, speed up reporting and more. The title of the talk is talking about business data and I give some examples on business data. But customers and users of DuckDB and MotherDuck use the same technologies for all sorts of different types of data. So just replace some of the tables in my examples with game data or package delivery data, logistics, etc.

First of all, I want to just give a shout out. Obviously, LLMs are magical. They've advanced so much in such a short period of time. Amazing at improving our writing, amazing at understanding human language and sometimes even at generating human language as long as you're not trying to generate any sort of novel ideas. They're generally awesome at composing pieces of knowledge together. And of course at generating images of ducks. They're very good at generating images of ducks.

There are things though that make the duck sad. There are things where the duck magician can't get the LLMs to do what it wants it to do—things that sometimes they aren't even designed to do. However, LLMs are kind of designed to understand facts, but they sometimes fail. And when they fail, they're often failing in the same way that us humans fail. They make things up. They don't have current knowledge and they don't have your personal information or your personal context in order to work. How many of you as humans have these same problems at times? I certainly do. That's where LLMs are like humans. They're fallible. We're working at making them less fallible as an industry. Hundreds of billions of dollars have flown into this industry.

We've been able to augment LLMs with capabilities to make them even more magical, to make our ducks here happier. We can feed them information on recent facts through RAG. We started doing repeated calling of LLMs in order to emulate the human chain of thought reasoning. And now that is built into most LLMs, but they're still bad at some things that humans are decent at. I might not be able to count exactly the number of stars here because some are overlapping, but I'm not going to go from five, six to eight and skip the number seven. I think it's an unlucky number for some people. So or maybe it's lucky, I don't know. But LLMs are bad at counting and they're really bad at data aggregation. Of course, if you're bad at facts, you're probably not going to be very good at aggregating facts. It's kind of an exponential badness that happens here.

People originally were saying, "Well, RAG is a solution for everything." This was a while ago, right? RAG is a solution for everything. But RAG only helps encoding facts, though. LLMs still can't scan, aggregate, and compute data.

What if you want your LLM to answer business questions, and you want those LLMs to be quasi accurate? If you have questions like, "How many of my customers are spending greater than $1,000 a month?" or "Which of my customers are most likely to churn and why?" or "What is my company's annualized revenue?"—you're going to want some degree of accuracy on those. You're not going to want to just trust the LLM to pull the data off of its training data set because you might get someone else's annualized revenue or someone else's most likely to churn customers.

For that, you need an analytics database. Analytics databases can be very happy when you're using ducks at least as your analytics database. But you need something that can store large amounts of analytical information and efficiently compute aggregations to answer these questions.

Now how many of you in the room would classify yourselves as data people primarily focused on data? All right. How many would classify yourselves as software engineers—you're building things but maybe with data at times? Okay, so pretty much every software engineer is probably like, "Well what about Python? What about our elephant here?" It's funny that elephant in the room joke did not come up as I was preparing this, but the elephant in the room is Postgres does everything. Why don't we just use Postgres?

There are different types of databases. I literally have one slide on this to catch the folks up, but the different types of databases are basically transactional and analytics databases and it's largely based off of how the underlying data is stored and retrieved. If your underlying data is stored as rows, it's really easy to do inserts of a whole row at a time and it's really easy to do point lookups. But when you try to do like an average age in your data stored as rows, you end up reading all the data of every single row in order to do that and it's very inefficient. On a columnar storage database, an analytical database, you would just read the column age and it's much more efficient. That's why maybe you don't want to use Postgres as your analytical database. It will work, it just might not be the most efficient. We have tons of customers of MotherDuck who end up coming to MotherDuck out of using Postgres as their analytical database and realizing it hit scaling limits.

How do LLMs interact with data warehouses, databases, data analytics tools? RAG was sort of the version zero for LLMs interacting with external data, but like I said you could just basically feed in facts, not aggregations. Now we have various different ways of interacting with our databases.

First of all, there are built-in tools in LLMs nowadays. LLMs now actually do have calculators. You can feed basic math questions to an LLM and they will use the built-in tool which is a calculator. They have web search. They have tools for generating files and diagrams, things like that. The first thing that you can do if you want to interact with the database is you can build a custom tool that says what to do with a database and you do that using the agent SDKs. In my case, examples here, I'm using the OpenAI SDK but there's Claude ones and there's ones I'm sure for other LLMs.

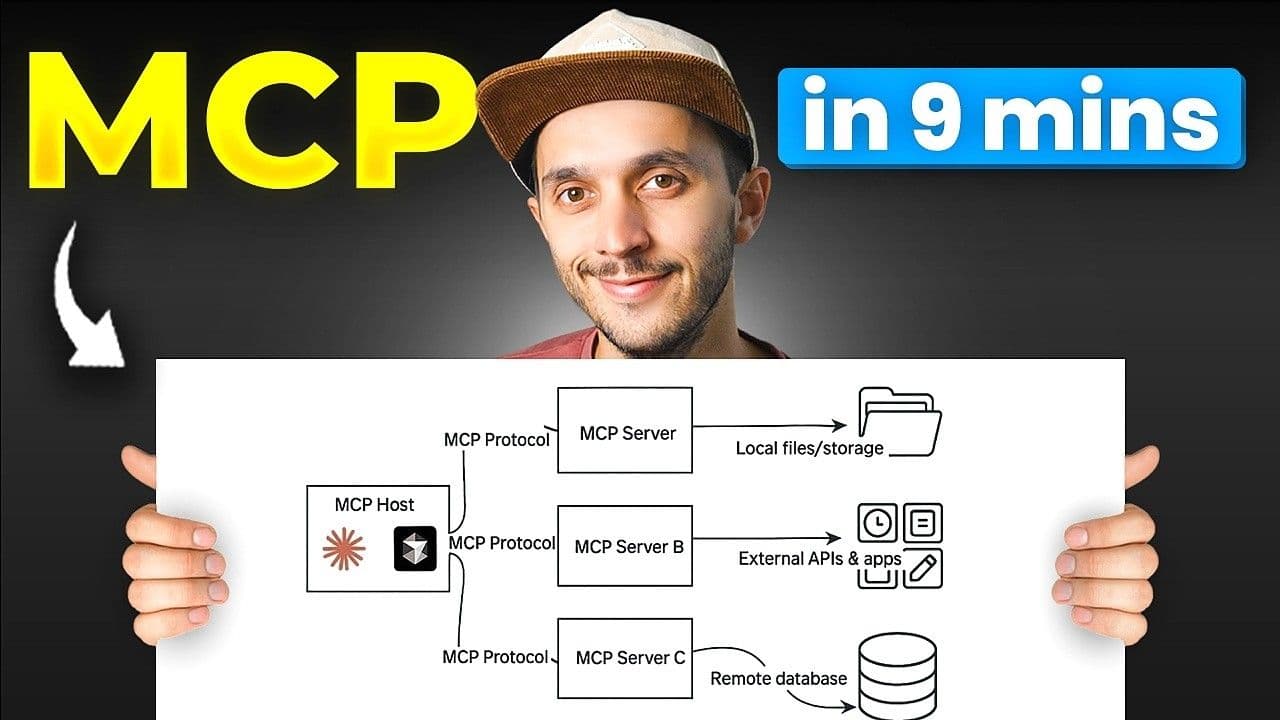

And then of course many of these analytics databases do have MCP servers. MCP servers are essentially the tools but exposed over a wire protocol instead of exposed directly running locally on the server. This gives you basically ultimate in flexibility. You can ask LLMs anything. It just interprets SQL and that's all that the MCP server is really going to do. Whereas if you want more control, you build your own custom tools.

Regardless, if you are working with an analytics database nowadays and for the last four decades or something, it's likely to use SQL as its language for interacting with it. It's one of the biggest staying power technologies out there I think that has just remained for decades.

Your biggest question comes down to who is going to generate the SQL for interacting with your database. Is it going to be generated by the LLM? This is a tool example here, just a function in a Python file showing, "Hey, I'm going to execute SQL and I'm going to define how that executing SQL happens." I can define it at that level and then let the LLM generate the SQL. Or if I do want more control over it, I can define a function and have that function be a very specific thing. So getting the sales by region for instance.

Now you might think that you really want to let just the LLM use SQL. Why are you manually encoding the SQL that the LLM is able to generate? That would be a reasonable conclusion. But the state-of-the-art of SQL generation from LLMs is still pretty poor. I think that—my vision's not amazing, but I think the numbers in the high 70% here at the best LLM, which actually uses multiple LLMs together to get to that score. So 78% I think is the accuracy versus 92% as a human. Now how many of you would be comfortable producing your board reports and producing maybe your public analyses for your company, your quarterly earnings reports with 78% accuracy? Not me. Heck, I won't even be comfortable with the 92% that a human is accurate. But that is the nature of humans. We are fallible.

We want to talk about different ways that we can improve the accuracy to supplement what these things are going to do out of the box. Now we can add additional information to the context window. This is basically as you're typing a question to an LLM or sending a question programmatically, you can add additional information to that window. You can use a semantic tool to define the details about what your models look like or you can basically build a very budget semantic tool yourself and we're going to show each of these.

But first of all, I want to talk about kind of the sample data sets that I'm using. First of all, you all are going to see revenue numbers. Those are not real revenue numbers. They have nothing to do with MotherDuck as a company. However I did try to use a very artificial data set when I created this presentation. I tried to use Northwind data. Northwind is a canonical data set from Microsoft that SQL Server used back in the day. And it's amazing. It is very well laid out. It's basically customers and their orders and what items were in those orders and what products the company sells. The problem is that data set is super well documented on the internet. I think Michael from Shadow Traffic emulated that data set in a few hours for us and he was very kind to do it. So I do want to give a shout out to Shadow Traffic. Awesome at generating data sets.

However, our actual internal data warehouse at MotherDuck I think is 217 different tables. We're a three-year-old company. We have 217 tables in our data warehouse and I think 41,000 columns in those 217 tables. It's a lot more complex and it's not well understood because it's not documented on the internet. So that is the data set that I'm using, but I'm fudging all the numbers. So don't take any meaning from the numbers.

But one of the biggest things that we do at MotherDuck is ask what is our annualized weekly revenue? This is similar to ARR that you might have heard of. It's called AWR. A lot of us are engineering background folks, so we don't really like to use a term that's not accurate. And annualized weekly revenue is a fairly accurate term for us. If you ask OpenAI what it is, it's basically saying it would be one week's of data repeated for all 52 weeks and that's annualized weekly revenue. Pretty good. It's the right definition, of course.

Then I went to the LLM and said, "What is our annualized weekly revenue for today?" And the LLM said, well, the formula is the annualized weekly revenue equals revenue on a day times 365 divided by 7. Anyone know what the problem with that is? What is this divided by seven thing? It's basically taking a day's revenue, figuring out what the whole year's revenue would be based off of that day, and then saying, "Oh, we'll just take a month and a half of that," or something like that. This is a completely ridiculous definition. But this is the definition that it used and then it computed a result.

Now what we are happy for is that it explained what it did. So as a smart human you can then say, "Oh wait, this seems a little bit wrong." But we don't want it to get it wrong. So we add additional information to the context window. In this case we basically just did markdown format files with all of our go-to-market definitions and saying annualized weekly revenue is defined as this, which is basically the current date's revenue and summed with the last 6 days of revenue multiplied times 52. You'll notice that my definition isn't very precise. It could be more precise. But it's additional helper data for the LLM.

So then I say what is our annualized weekly revenue for each day in November and I get this. At the top there 1.5 million, at the bottom 11.962 million, the original numbers before I fudged it. I looked at the bottom number and I was like, "This is right." All right. But why are the other numbers—why does it grow so rapidly at the beginning?

What happened is the LLM understood what annualized weekly revenue was. It generated a window function in SQL, but it said, "Oh, I'm talking about November." So it excluded all data from prior to November in its calculation. Even as it was doing let's say November 1st, obviously it needs to include the six prior days which are in a different month or in October, but it didn't have access to that data. So it just assumed zeros and we got our annualized weekly revenue—a really bad calculation that someone may actually miss depending on how much data that they're looking at.

Again we say let's update the context window and say always use a full seven days of data when calculating AWR. I did that. I ran it. It worked well. I patted myself on the back. Life was good. The next day as I continued preparing for this talk, I ran it again. It again got the first six days wrong. My feeding at that additional context worked in one run but not in another run. Very intermittent. Then I ran it again and all days were wrong. It generated really crappy SQL. I have no idea actually. I didn't even dive into what it did. But I did run it again and it worked fine. Very frustrating experience, especially if you're expecting to have accurate business data.

How many of you have kids? I think working with an LLM is like trying to teach calculus to your 2-year-old. It can be very frustrating at times. You don't know actually what is sinking in and what is not sinking in. But we do like our ducks to teach all the LLMs how to do accurate SQL. It just won't always happen if you don't have all of the right context. And the context that I provided obviously was not enough.

What can you do to provide additional context? You can actually use semantic modeling tools like Cube or the semantic modeling capabilities of BI and analysis tools like Omni and Malloy and you can feed it additional data in a lot of detail. So you can feed it basically what are your measures? What are the types of things that you want to compute? What are the dimensions? How do you want to categorize those measures? What are the filters? Do you want to be able to do by date range or by geography in these examples? And what are the calculated measures? What are the measures based off of other measures? You can go and spend a heck of a lot of time out there just kind of defining all of these things and life will be glorious. Maybe. But it is a lot of time and energy involved in doing that.

Another way that you can do it is you can take these 200-some odd tables and you can say, "I'm going to make a very simple view of this data. I'm going to make a view that looks more like that Northwind data set so that the system can understand something that's well documented." And luckily, it's really easy to create views in most databases.

The other thing though you can do is start commenting on the databases and basically describing your columns. This is sort of the poor person's semantic layer and I actually generated this from the LLM to start with and then you can go in and modify it and provide additional details. So if you wanted to say for instance that this is the join ID or the foreign key basically between this table and another table and describe how they join and what the name of the join key is. You can do all sorts of things like that to make the LLM slightly better.

The LLMs are still generating SQL not necessarily knowing what SQL syntax that it should generate though. So even if you get it accurate, you might have a lot of queries. We run a database company that is based off of a consumption-based billing model. I personally love LLMs because they'll write 20 queries because a human will only have to write one. So the LLM is able to rack up the bills faster. But here I'm going to give you some indications about how to prevent that—which is teach the model about your SQL dialect and provide performance optimizations of course and tell the model what not to do.

We actually do publish a full markdown file describing DuckDB SQL and the MotherDuck editions that you can feed to your LLM to teach your LLM in the context window how DuckDB works and how to do some types of operations which may be a little bit more esoteric in a particular variant of SQL.

You can also feed it things about how to do efficiency. You can teach, show an example window function here and show how to use the qualify function in order to limit the computation there and do other efficient patterns for when you're trying to figure out things beyond ordinary SQL that it might recognize.

Lastly, I mentioned you could tell it to avoid certain things or—what I started to realize is this looks pretty beautiful. I know the LLM can generate SQL. I could use LLM to generate my get sales by region SQL. But I as the person that understand how our data works can then say okay that is the SQL that represents get sales by region and essentially create a set of tools for each of our major business metrics and those set of tools can then define how to interact with the analytics database.

It still comes down to what are you doing as an agent analytic—what are you doing as an agent interacting with the analytics database?

The capabilities of an analytics database are things like transformation, ingesting data from a huge variety of different sources, everything from CSV and Parquet files to JSON and random things off of S3. You can ingest that all into the database and then transform that data. It can act as your memory if you're doing a lot of analytical functions. So if you're building an agent that needs memory and you're doing a lot of facts, you use a vector database if you're trying to store a lot of facts. But if you're trying to actually store data that you're going to analyze as numbers, an analytical database is really great for that memory. And then obviously the computation, the ability to scan across a bunch of data and do analytics.

To give you an example, if we did something like, "Which of my customers currently spending over $10,000 are likely to churn?"—you'd want an analytics database that can understand different data sources because you'd bring in different data from your business, from HubSpot, from Salesforce, from all of your various business systems. And if you have a product, you'd bring in product data. You want to be able to store temporary state and derive data because you want between different runs of the LLM to have some consistency there. Scaling up and down as needed—you can easily have runaway costs and be able to run a bunch of things at once of course and keep costs under control.

What would a great analytical database look like for that? First of all, what would it not look like? This is what a lot of data warehouses are. A lot of data warehouses are this monolithic very large engine that is scaled for peak traffic, peak performance. A lot of businesses overspend on idle resources for their data warehouses and you don't really want to do that. There's autoscaling and things like that that you may have used. A bunch of people actually came up to our booth and talked about how autoscaling on some of the major databases didn't really do what they need. You really don't have your cost scaling with the amount of usage. And you're just worried about—these are users, but let's say there's agents spinning up and running into your database. They can execute tons of queries.

This is where we talk about open-source DuckDB. How many of you actually used DuckDB? Okay, maybe a third. Super easy to install. It runs locally as a library on your computer. It does analytics much the same as SQLite might be used to do transactional data. It is very easy to use and nowadays occupies maybe 100 megabytes of RAM but has full SQL analytical capabilities. It is very popular and overall DuckDB gets high scores from everyone in the community in terms of the experience that it provides. Improved SQL dialect. It's a great CSV parser. It's an awesome library to use within your applications. It's in process. It's pretty fast. Go to ClickBench, which is the benchmark from the ClickHouse folks, and DuckDB oftentimes performs much better than ClickHouse in its own usage. And it's a tiny little library. It's easy to use, it scales, and it's free. And that's probably the best thing for a lot of people.

Of course, analytics often requires heavy resources. It's kind of difficult to predict how much resources you need. You're on your own to scale it if you're using the library for DuckDB. And do you really want to manage a fleet? And that's really where MotherDuck comes in as a cloud data warehouse. I don't want to pitch you on all the stuff about MotherDuck, but what I do want to talk about is kind of the differences in design versus what you might be used to for an analytical database.

With MotherDuck, we give every user their own duckling. Every user that goes and attaches to a data warehouse has their own compute. That's what the duckling is. It's essentially its own database instance. They're sharing data but have access to their own compute. They can scale vertically as needed. And this extends to applications.

A lot of usage of MotherDuck is customer-facing applications where the end users is another company that's a customer of ours or a customer of a customer. And each of those people also get their own databases in many of the use cases. What really helps there is that's highly partitioned data. These folks really want to make sure each of their customers' data stays completely separate and this is great for that. But it also helps preventing collision on resources. You get predictable performance when each of the users have their own data that spins up and spins down really quickly.

Now another thing that MotherDuck does which is really awesome for these types of things is something we call dual execution which is basically a user comes in and they send a SQL query to their local DuckDB. The local DuckDB open source DuckDB is the client for MotherDuck. They send a SQL query and the SQL query planner basically decides which parts of this data is local and which parts of this data is remote. So you write a very similar like a normal SQL statement here. But it knows that hey our super top secret data is only here in this local computer and the other data is in the cloud and it sends that part of the SQL query for the join over to the cloud and pulls out the data from there.

You can think of this for this architecture as being really useful for agents especially if the agent wants to do a lot of low-cost easy data analytics, low amounts of data. You could just have the agent use DuckDB locally, zero cost associated with that, but then when the agent needs the power of the cloud and scaling up, it is literally just changing one line to say I'm going to connect to "md:" instead of connecting to your local file system. DuckDB is really versatile that way. You can use your local file system. You can even use a local in-memory and then with one line you're actually using the cloud with this dual execution.

There are also ways, if you have an agent that you really want that agent to have its own playground, its own thing to work with, you can do that with zero copy clones. You can have this shared data set that everyone has access to, all the different agents have access to, and you can very easily say, "Okay, give me a copy of that for my local agent to work with." And it's basically a free operation there. And then your local agent can say, "I'm going to add some additional metadata. I'm going to add some additional data and then I'm going to eventually push it back so that other folks have access to that." But that cloning capability allows you to provide full write access to your agent to your underlying data if it needs that.

And of course you can scale out. If you have for instance an agent that needs access to a database but that agent needs a lot of power, you can basically use these read replica ducklings and say hey I'm going to have four other instances, eight other instances, 12 other instances—I think we're up to 16 in the UI right now—but I'm going to have all of these other instances out there to do my analytical workload and only this agent has access to do that. The other agents do not.

And you choose all of that stuff in the configuration. Basically I have three agents on the bottom and then the core data warehouse service. The core data warehouse service would be writing all the data that the agents need to access and then the three different agents we can configure differently. The core data warehouse has a jumbo instance. We configure one of the agents here that really needs a lot of power to have jumbos and then our churn predictor agent maybe needs to look at a lot of things at once and so we give it many ducklings or many instances to be able to do that.

All the cool kids are building agents nowadays. You want an analytical database that can help answer different types of questions. You want hyper-tenancy that allows the AI applications to have a sandboxed amount of compute and prevent runaway costs. And then DuckDB and MotherDuck can really help there. That's all I have for today but thank you very much.

Transcript

0:08Um, I ordinarily wouldn't wear a hat uh during a talk, but a this is an awesome hat that I love, and b there's very bright spotlights up here. But let's talk about agents. uh agents can use LLMs uh along with analytics databases to analyze business data, find patterns, speed up reporting and more. The title of the talk is talking about business

0:33data and I give some examples on business data. Uh but you know customers and users of DuckDB and Motherduck use the same technologies for all sorts of different types of data. Um, so just replace some of the tables in in my uh examples with game data or um or you

0:55know other sort package delivery data, logistics, etc. Um,

1:02first of all, I want to just give a shout out. Obviously, LLMs are magical.

1:07Um, and they've advanced so much in such a short period of time. Uh, amazing at improving our writing. amazing at understanding human language uh and sometimes even at generating human language as long as you're not trying to generate any sort of novel ideas.

1:26They're generally awesome at composing pieces of knowledge together. Uh and of course at generating images of ducks.

1:35They're very good at generating images of ducks. Um and there are things though

1:41that make the duck sad. There are things where the duck magician can't get the LLMs to do what it wants it to do. Um things that sometimes they aren't even designed to do. U however LLMs are kind

1:54of designed to understand facts. Um but they sometimes fail. And when they fail, they're often failing in the same way that us humans fail. They make things up. They don't have current knowledge.

2:08uh and they don't have your personal information or your personal context in order to work. Um so how many of you as humans have these same problems at times? I certainly do. That's uh that's where LLMs are like humans. They're fallible. Um and we're working at making them less fallible as an industry. You know, hundreds of billions of dollars

2:32have flown into this industry. So, we've been able to augment LLMs with capabilities to make them even more magical, to make our our ducks here happier.

2:43We can feed them information on recent facts through rag. We can started uh doing repeated calling of LLMs in order to emulate the human chain of thought reasoning. And uh now that is built into most LLMs, but they're still bad at some things that humans are decent at. Um I, you know, I might not be able to count

3:07exactly the number of stars here cuz some are overlapping, but I'm not going to go from five, six, uh to eight and skip the number seven. Uh I think it's an unlucky number for some people. So um or maybe it's lucky, I don't know. Um, but you know, LMS are bad at counting and they're really bad at data

3:27aggregation. Uh, of course, if you're bad at facts, you're probably not going to be very good at aggregating facts.

3:35Uh, it's kind of an exponential badness that happens here. So, you know, people originally were saying, well, rag is a solution for everything. This was a while ago, right? Rag is a solution for everything. So, rag only helps encoding facts, though. Um, LLM still can't scan,

3:51aggregate, and compute data. So, what if you want your LLM to answer business questions, and you want those LLMs to be quasi accurate? If you have questions like, how many of my customers are spending greater than $1,000 a month? Or, uh, which of my customers are most likely to churn and why? or what is my my company's annualized revenue.

4:18You're going to want some degree of accuracy on those. And you're not going to want to just trust the LLM to pull the data off of its training data set.

4:27Uh because you might get someone else's annualized revenue or someone else's most likely to churn customers.

4:36For that, you need an analytics database. And uh analytics databases can

4:42be very happy uh when you're using ducks at least as your analytics database. But you need something that can store large amounts of analytical information and efficiently compute aggregations to answer these questions.

4:57Now how many of you in the room would classify yourselves as uh as data people primarily focused on data?

5:04All right. How many would classify yourselves as as software engineers? you're building building things but maybe with data at times. Okay, so pretty much every software engineer is probably like well what about Python?

5:18What about our elephant here? Um and it's funny that elephant in the room joke did not come up as I was as I was preparing this but the elephant in the room is Postgress does everything. Why don't we just use Postgress? Um and there are different types of databases.

5:34I literally have one slide on this to catch the folks up, but uh the different types of databases are basically transactional and analytics databases and it's largely based off of how the underlying data is stored and retrieved.

5:48If your underlying data is stored as rows, it's really easy to do inserts of a whole row at a time and it's really easy to do point lookups. uh but when you try to do like an average age uh in your data stored as rows you end up reading all the data of every single row in order to do that and it's very

6:07inefficient. So on a colmer storage database an analytical database you would just read the column age and it's much more efficient. Uh so that's why maybe you don't want to use Postgress uh as your analytical database. It will work it just might not be the most efficient. We have tons of customers of motherduck who end up coming to mother

6:28duck out of using Postgress as their as their analytical database and realizing it hit scaling limits.

6:37So how do LLMs interact with data warehouses, databases, data analytics tools? Uh rag was sort of the vzero for LLMs interacting with external data but like I said you could just h you know basically feed in facts not aggregations.

6:52So now we have various different ways of interacting with our databases. Uh first of all there are built-in tools in LLMs nowadays. So LLMs now actually do have calculators. You can feed basic math questions to LLM and they will use the built-in tool which is a calculator.

7:13They have web search. They have tools for generating files and diagrams, things like that. So the first thing that you can do if you want to interact with the database is you can build a custom tool that says what to do with a database uh and you do that using the agent SDKs. In my case examples here uh

7:33I'm using the OpenAI SDK but you there's cloud ones and there's ones I'm sure for other LLMs.

7:40And then of course many of these analytics databases do have MCP servers. uh MCP servers are essentially the tools uh but exposed over a wire protocol instead of exposed uh directly running locally on the server. Um and this gives

7:58you basically ultimate in flexibility. You can ask CLMs anything. It just interprets uh SQL and that's all that the MCP server is really going to do.

8:09Whereas if you want more control, you build your own custom tools. Regardless if you are working with a analytics database uh nowadays and for the last four decades or something it's likely to use SQL as its language for interacting with it. Uh it's one of the biggest staying power technologies out there I think uh that has just remained

8:34for decades. Um, and your biggest question comes down to who is going to generate the SQL for uh interacting with your database. Is it going to be generated by the LLM? This is a tool example here, just a function in a in a Python uh file showing, hey, I'm going to execute SQL and I'm going to define how that executing SQL

9:00happens. I can define it at that level and then let the LLM generate the SQL.

9:07Or if I do want more control over it, uh I can define a function and have that function be a very specific thing. So getting the sales by region for instance. Um now you might think

9:22that you really want to let just the LLM use SQL. Why why are you manually encoding the SQL that the LLM is able to generate? Um, and

9:35that would be the a reasonable conclusion. Um, but the state-of-the-art of SQL generation uh from LLMs is still pretty poor. Um, I think that the my vision's not amazing, but I think that, you know, the numbers in the high 70% here at the best LLM,

9:55uh, which actually uses multiple LLMs together to get to that score. uh so 70 78% I think is the accuracy versus 92% as a human. Now how many of you would be comfortable producing your board reports and producing uh maybe your public analyses of for your company your quarterly earnings reports with 78% accuracy. Not me. Uh

10:23heck, I won't even be comfortable with the 92% that a human is accurate. Um but

10:29uh you know that's that is the nature of humans. We are f fallible. So um we want to talk about different ways that we can improve the accuracy to supplement what these things are going to do out of the box.

10:42Now we can add additional information to the context window. So this is basically as you're typing a question to an LLM or sending a question programmatically, you can add additional information to that window. You can use a semantic tool to define the details about what your models look like or you can basically build a very budget semantic tool

11:04yourself and we're going to show each of these. But first of all, I want to talk about kind of the sample data sets that I'm using. Uh first of all, you all are going to see revenue numbers. Those are not real revenue numbers. Um they have nothing to do with Mother Duck as a company. Uh however I did try to use a

11:24very artificial data set when I created this presentation. I tried to use Northwind data. Northwind is a canonical data set from Microsoft that SQL Server used back in the day. And it's amazing.

11:37Uh it is very welllaid out. It's basically customers and their orders and what items were in those orders and what products the company sells. The problem is is that data set is super well documented on the internet. Uh I think Michael from Shadow Traffic, you know, emulated that data set in a few hours uh for us and he was very kind to do it. So

11:59I do want to give a shout out to Shadow Traffic. Awesome at generating uh generating data sets. Um, however, our actual internal data warehouse at Motherduck uh I think is 217

12:15uh different tables. You know, we're a three-year-old company. We have 217 tables in our data warehouse and uh I think 41,000 columns in those 217 tables. It's a lot more complex and it's not well understood because it's not documented on the internet. Um, so that is the data set that I'm using, but I'm fudging all the numbers. So, uh, don't

12:38don't uh take any meaning from the numbers. But one of the biggest things that we do at Motherduck is ask what is our annualized weekly revenue? Um, so this is similar to ARR that you might have heard of. It's called AWR. Uh, a lot of us are uh engineering background folks, so we don't really like to use a

13:00term that's not accurate. And annualized weekly revenue is a fairly accurate term for us. Um, and if you ask OpenAI what it is, it's basically saying it would be one weeks of data uh repeated for all 52 weeks and that's annualized weekly revenue. Pretty good. It's the right definition, of course. Then I went to the LLM and

13:23said, "What is our annualized weekly revenue for today?" And the LLM said, well, the formula is the annualized weekly revenue equals revenue on a day times 365 / 7. Anyone

13:39know what the problem with that is?

13:43What is this divided by seven thing? It's basically taking a day's revenue, figuring out what the whole year's revenue would be based off of that day, and then saying, "Oh, we'll just take a month and a half of that," or something like that. like this is completely ridiculous definition. Uh but this is the definition that it used and then it

14:04computed a result. Now what we are happy for is that it explained what it did. So as a smart human you can then say oh wait this seems a little bit wrong. Uh but we don't want it to get it wrong.

14:18So we add additional information to the context window. Uh and in this case we basically just did markdown format files uh with all of our go to market definitions and saying annualized weekly revenue is defined as this uh which is basically the current dates revenue and summed with the last 6 days of revenue multiplied times 52. You'll notice that

14:41my definition isn't very precise. It could be more precise. Um but you know it's additional helper data for the LLM.

14:53So then I say what is our annualized weekly revenue for each day in November

15:00and I get this. So at the top there 1.5 million uh at the bottom 11.962 million um the original numbers before I fudged it. I looked at the bottom number and I was like, "This is right." All right.

15:18But why are the other numbers like why does it grow so rapidly at the beginning?

15:25So what happened is the LLM understood what annualized weekly revenue was. It generated a window function in SQL, but it said, "Oh, I'm talking about November." So it excluded all data from prior to November in its calculation. So even as it was doing let's say November 1st obviously it needs to include the six prior days which are in a different

15:46month or in October but it didn't have access to that data. So it just assumed zeros and we got our annualized weekly revenue.

15:55Um a really bad calculation that someone may actually miss depending on how much data that they're looking at. Um, so again we say let's update the context window and say always use a full seven days of data when calculating AWR.

16:15I did that. I ran it. It worked well. I patted myself on the back. Life was good. The next day uh as I continued preparing for this talk, I ran it again.

16:29It again got the first six days wrong. My feeding at that additional context worked in one run but not in another run. Very intermittent.

16:40Then I ran it again and all days were wrong. It generated really crappy SQL. I have no idea actually. I didn't even dive into what it did. But I did run it again and it worked fine.

16:52So very frustrating experience especially if you're expecting to have accurate business data. How many of you have kids?

17:03So, I think uh working with an LLM is

17:07like trying to teach calculus to your 2-year-old. Um it's not it can be very frustrating at times. You don't know actually what is sinking in and what is not sinking in.

17:20Um but you know, we we do like our ducks to teach uh all the LLMs how to do accurate SQL. It just won't always happen if you don't have all of the right context. And the context that I provided obviously was not enough. So what can you do to provide additional context?

17:39You can actually use semantic modeling tools like cube or the semantic modeling capabilities of of BI and analysis tools uh like Omni and Mallaloy and you can uh feed it additional data in a lot of detail. So you can feed it basically what are your measures? What are the types of things that you want to compute? Uh what are the dimensions? How

18:01do you want to categorize those uh those measures? What are the filters? Uh do you want to be able to do by date range or by geography in these examples? And what are the calculated measures? What are the measures based off of other measures? You can go and spend a heck of a lot of time out there just kind of

18:20defining all of these things and life will be glorious. maybe uh but it is a lot of time and energy involved in doing that.

18:29Another way that you can do it is you can take these you know what I said is like uh 200 some odd tables and you can say uh I'm going to make a very simple

18:40view of this data. I'm going to make a view that more looks more like that Northwind data set so that the system can understand something uh that's well documented. And luckily, you know, it's really easy to create views in most databases.

18:56The other thing though you can do is start commenting on the databases and basically describing your columns. Um, this is sort of the the poor person's semantic layer and uh I actually generated this from the LLM to start with and then you can go in and modify it and provide additional details. So if you wanted to say for instance that uh

19:19this is the the join ID or the foreign key basically between this table and another table uh and describe um you know how they they uh they join and what the name of the join key is. You can do all sorts of things like that to make the LLM slightly better.

19:38The LMS are still generating SQL not necessarily knowing what SQL syntax that it should generate though. So even you get it accurate, you might have a lot of queries. So we run a database company that is based off of a consumptionbased billing model. Uh I personally love LLMs because they'll write 20 queries because and a human will only have to write one.

20:01So the LLM is is able to rack up the bills faster. But here I'm going to give you some indications about how to prevent that. um which is you know teach the model about your SQL dialect uh and

20:15provide performance optimizations of course and tell the model what not to do. Um, and we actually do publish a uh

20:25full markdown file describing DuckDB SQL and the motherduck editions that you can feed to your LLM to teach your LLM in the context window how duct DB works uh

20:38and how to do some types of operations which may be a little bit more esoteric um you know in a particular variant of SQL.

20:48You can also feed it things about how to do efficiency. So you can teach show an example window function here uh and show how to use the qualify function in order to limit uh the computation there and do

21:03other efficient patterns um for when you're trying to figure out things uh you know beyond ordinary SQL that it might might recognize.

21:16Lastly, I mentioned you could tell it to avoid certain things or

21:24uh what I started to realize is this looks pretty beautiful. Uh I know the LLM can generate SQL. I could use LLM to generate my get sales by region SQL. But I as the person that understand how our data works can then say okay that is the SQL that represents get sales by region and essentially create a set of tools

21:47for each of our our major business metrics and those set of tools can then define how to interact with the analytics database.

21:58it still comes down to what are you doing uh as an agent analytic what are you doing as an agent interacting with the analytics database so the capabilities of an analytics database are things like transformation ingesting data from a huge variety of different sources everything from CSV and parquet files uh to JSON and random

22:21things off of S3 you can ingest that all into the database and then to transform that data. It can act as your memory if you're doing a lot of analytical functions. So if you're building an agent that needs memory um and you're you know doing a lot of of facts, you uh you know use a a a vector database if you're trying

22:45to store a lot of facts. But if you're trying to actually store data that you're going to analyze as numbers, uh an analyical database is really great for that memory. And then obviously the computation, the ability to scan across a bunch of data and do analytics.

23:00So to give you an example, if we did something like, you know, which of my customers currently spending over $10,000 are likely to churn, you'd want an analytics database that can understand different data sources because you'd bring in different data from your business, from HubSpot, from Salesforce, from um, you know, all of your various business systems. Uh, and

23:21if you have a product, you'd bring in product data. You want to be able to store temporary state uh and derive data because you want between different runs of the LLM to have some consistency there. Scaling up and down as needed um

23:36you know you can easily have runaway costs uh and be able to run a bunch of things at once of course um and keep costs under control. Um so what would a

23:48great analytical database look like for that? Uh first of all, what would it not look like? So this is what a lot of data warehouses are. A lot of data warehouses are this monolithic very large engine that is scaled for peak uh traffic, peak performance. Um so you know a lot of businesses overspend on idle resources for their data warehouses and you don't

24:13really want to do that. Uh so there's autoscaling and things like that that you may have used. A bunch of people actually came up to our booth and talked about how autoscaling on some of the major databases didn't really do what they need. Uh and you really don't have your cost scaling with the amount of usage. Um and you're just worried about,

24:32you know, these are users, but let's say there's agents uh there spinning up and running into your database. They can execute tons of queries.

24:42So this is where we talk about open-source duct DB. Um, how many of you actually used DuckDB?

24:51Okay, maybe a third. Um, super easy to install. Uh, it runs locally as a library on your computer. It does analytics much the same as, uh, SQL light might be used to do transactional data. Um, and it is very easy to use. uh

25:10and it you know nowadays occupies maybe 100 megabytes of RAM but has full SQL analytical capabilities.

25:19Uh it is very popular and overall duct DB gets high scores from everyone in the community in terms of the you know experience that it provides. Uh approved SQL dialect. It's a great CSV parser. It's an awesome library to use within your applications.

25:39It's in process. It's pretty fast. Um, you know, fast it like go to ClickBench, which is the, uh, benchmark from the Clickhouse folks, and DuckDB oftent times performs much better than than ClickHouse in its own usage. And it's a tiny little library. Uh, it's easy to use, it scales, um, and it's free. And

26:04that's probably the best thing for a lot of people. Uh, of course, analytics often requires heavy resources. It's kind of difficult to predict how much resources you need. You're on your own to scale it if you're using the the library for duct DB. And do you really want to manage a fleet? And that's really where Motherduct comes in as a

26:24cloud data warehouse. So, uh, I don't want to pitch you on all the stuff about mother duck, but what I do want to talk about is kind of the differences in design versus what you might be used to for an analytical database. So, with mother duck, we give every user their own duckling. So, every user that goes

26:44and attaches to a data warehouse has their own compute. That's what the duckling is. It's essentially its own database instance. They're sharing data but have access to their own compute.

26:54they can scale vertically as needed. Uh and this extends to applications. So a lot of use usage of motherduck is customerf facing applications where the uh end users uh is another company that's a customer of ours uh or a customer of a customer. Um and each of those people also get their own databases in many of the use cases. is

27:22what really helps there is that's highly partitioned data. These folks really uh want to make sure each of their customers data stays completely separate and this is great for that. Uh but it also helps preventing collision on resources. You get predictable performance when each of the users have their own uh data that spins up and spins down really quickly.

27:46Now another thing that motherduck does which is really awesome for these types of things is something we call dual execution which is basically a user comes in and they send a SQL query to their local duct DB. So the local duct DB open source duct DB is the client for motherduck. they send a SQL query and the uh the SQL query planner basically

28:11decides which parts of this data is local and which parts of this data is remote. So you write a very similar like a normal SQL statement here. Um but it knows that hey our super top secret data is only here in this local computer and the other data is in the cloud and it sends that part of the SQL query for the

28:31join uh over to the cloud and pulls out

28:36the data from there. Uh so you can think of this for this architecture as being really useful for agents especially if the agent wants to do you know a lot of lowcost easy data analytics low amounts of data you could just have the agent use duct DB locally zero zero cost associated with that but then when the

28:56agent needs the power of the cloud and scaling up uh it is it is literally uh

29:03let's see here it is literally just changing one line uh to say I'm going to connect to MD colon instead of connecting to your local file system. Uh so duct DB is really versatile that way. You can use your local file system. You can even use a local inmemory and then with one line you're out actually using the cloud with

29:23this dual execution.

29:28There are also ways, you know, if you have an agent that you really want that agent to have its own playground, its own thing to work with. Um, and

29:40you can do that with zero copy clones. So, you can have this shared data set that everyone has access to, all the different agents have access to, and you can very easily say, "Okay, give me a copy of that for my local agent to work with." And it's it's basically free operation there. And then your local agent can say, I'm going to add some

30:00additional metadata. I'm going to add some additional data and then I'm going to eventually push it back so that uh you know other folks have access to that. But that cloning capability allows you to provide full write access to your agent uh to your underlying data if it needs that.

30:20And of course you can scale out. So if you have for instance an agent that needs access to a database but that agent needs a lot of power um you can basically use these read replica ducklings and say hey I'm going to have four other instances eight other instances 12 other instances I think we're up to 16 in the UI right now but

30:42you know I'm going to have all of these other instances out there to do my analytical workload and only this agent has access to do that the other agents do not.

30:52and you choose all of that stuff in the configuration. So, uh for for those who can't see, basically I have three agents on the bottom and then the core data warehouse service. The core data warehouse service would be writing all the data that the agents need to access and then the three different agents we can configure differently. Uh so the

31:11core data warehouse has a jumbo instance. We configure one of the agents here that really needs a lot of power to have jumbos and then our uh churn predictor agent maybe needs to look at a lot of things at once and so we give it many ducklings uh or many instances to be able to do that. So all the cool kids

31:31are building agents nowadays. You want an analytical database that can help answer different types of questions. you want hypertendency that allows uh the AI applications to have a sandboxed amount of compute uh and prevent runaway costs and then you know the the duct DB and mother duck can really help there. So uh that's all I have for today but uh thank

31:55you very much [applause]

32:08Thank you, Ryan. And um we have about we can have five minutes of Q&A. Does anybody have any question for Ryan?

32:15 >> I can even plug this back in. >> No >> questions, questions. You can all get free ducks at our >> Oh, sorry. The question. So, thank you Ryan for that. Uh so, basically, it's it's suitable. Uh so u mother duck makes

32:32it easier for agents to ask questions but can you give us some examples of you know actual stacks how people use it because you need to write a bunch of stuff. Do you use lench chain? Do you use pantentic? Like what do people do you know put on top of of mother duck agents?

32:49 >> Yeah I think the the biggest use case that we've seen is is really around business analytics. So so we have customers who ingest data for all of their customers. So it's a SAS application uh as an example and it ingests all the business data for various uh of their customers and then they allow business questions to be

33:10asked. Um and they've done a lot more work on the semantic side and such than I did. Uh so hopefully they're producing more accurate answers. Uh but so then they have a fleet of of agents that are out there processing the new business data that's coming in um and answering answering questions in sort of a natural language form uh for their for their

33:32business users. Um but we have you know all sorts of other different types of customers uh logistics space I mentioned earlier you know package routing things like that where all this type of stuff would be would be useful to recognize patterns uh you know from the aggregated data >> okay great more question

33:58a little bit late but I don't know if you mentioned whether [clears throat] you support vector search or is there like a vector database option for it?

34:07 >> Yeah, so there is there is some work on vector search within within duct DB. Um

34:13I wasn't I wasn't really covering that but you if you look up I think it's called VSSS uh is an extension for duct DB to do some some vector search. Um but uh yeah I was focusing more on the aggregation than the looking up of facts but yeah that capability does exist.

34:33question. >> No. >> All right. Well, >> no. >> Thank you very much. >> Oh, question.

34:43 >> Okay. Thank you. >> Okay. >> Um, how do you handle multimodal, you know, sort of images, data, etc.? Is that something that's comfortable in in in in how you handle it? And then I didn't quite get the local versus cloud.

34:58Are you is this just the database engine or is this actually caching underlying data and storage? I just didn't quite and I may have just missed it.

35:08 >> Sure. Um I'm going to answer those as two separate questions. The one of local versus cloud and then the one of you know multimodal type of data. uh in the local versus cloud, you know, basically if you have an application server uh that is running your agents or you have a uh lambda function that is running

35:30your agents uh as an example that essentially becomes the client uh and mother duck is the server. On the client, you're running a full copy of duct DB. Um, and because duct DB is so

35:44lightweight, less than 100 megabytes, um, of, you know, memory space that it's occupying, um, you can do everything locally that you want with data as long as you're within those those resource constraints. Um, is it does it create a local cache? A lot of people do do that type of of work. So, they pull data down, use a local cache. Uh, but right

36:06now it's not an automatic kind of functionality. Um, so you would have to manually decide to pull it down. It's really easy though because you can do it all like in a you know catast statement create from select star from a table uh bring it local do a bunch of computation for free uh and then push back you know

36:27whatever you want for the cloud. Um the other thing on the multimodal side so uh duck DB as an engine and thus thus then mother duck as well do allow you to use data lakes and lakehouses where in a data lake like in S3 you're often storing these other types of formats alongside your uh tabular data um and

36:51but we don't have any special capabilities built in to process those types of data there is a huge ecosystem a huge partner network. Um, so there's folks like BEM, uh, which essentially offers an API that allows you to take, uh, the unstructured data like a PDF or an image and convert it into a structured form for your analysis. Um,

37:13and they've, you know, done workshops and how you can do that with mother duck, etc. So, there's ways, but is not a built-in capability.

37:20 >> Okay, great. And we have a question here. Uh, how do you handle the security when the data can be local?

37:30 >> Sorry, what I didn't see where the question was coming from to start with.

37:35Um, and uh, >> ah, thank you. >> Um, and your question is how to deal with security when the data is local.

37:44Can you expand on that a little bit more? Well, so that if you have a data locally, uh you probably can clone it or something, you know, bring it home or whatever. So, how do you handle the managing the local that being, you know, it's convenient, but from perspective of security, what do you do?

38:03 >> Yeah. I mean, I think in larger larger organizations that may become more of a concern. I think most of the organizations that we're working with today, they consider that a benefit as opposed to a liability. But um I see the liability there uh in terms of how you

38:21you you know prevent a local user from downloading a bunch of data. Uh we have not built controls around that. Um where we consider it sort of a value ad that you can run it locally is the idea that you can take uh you can keep only some data local and not put it into the into the cloud. Uh, and a lot of folks do

38:45appreciate that as as an ability. Um, but yeah, it does it would allow a local user to select a bunch of data out of the database and and dump it out. Um, and uh, that is just, you know, part of part of the architecture and I'm sure there will be constraints built in the future uh, for for organizations where

39:05that matters. >> Great. Thank you. I think u if there's no more question we actually have another speaker supposed to start in three minutes. So if you still have more question [clears throat] for Ryan please um you can meet him outside here in the hallway or like near the interview room as well. So thank you all for um joining

39:26Ryan's talk. Thank you Ryan.

FAQS

Why do LLMs need analytics databases instead of just using RAG for business questions?

RAG (Retrieval Augmented Generation) only helps encode individual facts into an LLM's context. It cannot scan, aggregate, or compute across large datasets. For business questions like "what is our annualized revenue" or "which customers are likely to churn," you need an analytics database that can process aggregations across many rows. LLMs are bad at counting and data aggregation, so pairing them with an OLAP database produces far more accurate results than relying on the LLM alone.

How accurate is LLM-generated SQL and how can you improve it?

Current state-of-the-art LLM SQL generation hits roughly 78% accuracy on benchmarks, compared to 92% for humans. That gap is too wide for business reporting you need to trust. You can close it by adding context to the LLM's prompt: define business metrics in markdown files, use semantic modeling tools like Cube or Malloy to specify measures, dimensions, and calculated metrics, add column comments describing your database schema, create simplified views of complex tables, and teach the LLM your specific SQL dialect and performance patterns.

What makes DuckDB and MotherDuck well-suited for AI agent workloads?

DuckDB is lightweight and in-process, so agents can run local analytics at zero cost for small queries, then scale to MotherDuck's cloud compute when needed. Switching requires changing just one line of code. MotherDuck's hyper-tenancy architecture gives each agent its own isolated compute instance (called a "duckling"), which prevents runaway costs and resource collisions. You can also use zero-copy clones to give agents their own sandboxed copies of shared datasets with full write access. Learn more about MotherDuck's MCP integration.

What are the different ways LLMs can interact with analytics databases?

There are three main approaches: (1) build custom tools using agent SDKs (like OpenAI's) that define specific functions such as "get sales by region" with pre-written SQL, (2) let the LLM generate arbitrary SQL through a generic execute-SQL tool for maximum flexibility, or (3) use MCP (Model Context Protocol) servers that expose database capabilities over a wire protocol. Custom tools give you more control and accuracy, while open SQL execution gives maximum flexibility but lower reliability.

Related Videos

9:09

2026-02-13

MCP: Understand It, Set It Up, Use It

Learn what MCP (Model Context Protocol) is, how its three building blocks work, and how to set up remote and local MCP servers. Includes a real demo chaining MotherDuck and Notion MCP servers in a single prompt.

YouTube

MCP

AI, ML and LLMs

2026-01-27

Preparing Your Data Warehouse for AI: Let Your Agents Cook

Jacob and Jerel from MotherDuck showcase practical ways to optimize your data warehouse for AI-powered SQL generation. Through rigorous testing with the Bird benchmark, they demonstrate that text-to-SQL accuracy can jump from 30% to 74% by enriching your database with the right metadata.

AI, ML and LLMs

SQL

MotherDuck Features

Stream

Tutorial

0:09:18

2026-01-21

No More Writing SQL for Quick Analysis

Learn how to use the MotherDuck MCP server with Claude to analyze data using natural language—no SQL required. This text-to-SQL tutorial shows how AI data analysis works with the Model Context Protocol (MCP), letting you query databases, Parquet files on S3, and even public APIs just by asking questions in plain English.

YouTube

Tutorial

AI, ML and LLMs